The aim of this repository is to evaluate the loading performance of various audio I/O packages interfaced from Python.

This is relevant for machine learning models, which today often process raw (time-domain) audio and assemble batches on the fly. It is therefore important to load the audio as fast as possible. At the same time, a library should ideally support a variety of uncompressed and compressed audio formats and be capable of loading only chunks of audio (seeking). The latter is especially important for models that cannot easily work with samples of variable length (convnets).
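To make "loading only chunks of audio" concrete, here is a minimal sketch that reads a fixed-length excerpt by seeking. It uses only Python's built-in `wave` module so that it stays self-contained, and it assumes mono 16-bit PCM input. The helper name `read_excerpt` is hypothetical and not part of this repository. The benchmarked libraries expose seeking through their own APIs; for example, `soundfile.read` accepts `start` and `stop` arguments.

```python
import os
import struct
import tempfile
import wave


def read_excerpt(path, start_s, duration_s):
    """Return up to `duration_s` seconds of 16-bit mono samples from `start_s`."""
    with wave.open(path, "rb") as wav:
        rate = wav.getframerate()
        wav.setpos(int(start_s * rate))               # seek past the earlier frames
        raw = wav.readframes(int(duration_s * rate))  # read only the requested chunk
        n_values = len(raw) // wav.getsampwidth()
    return struct.unpack(f"<{n_values}h", raw)


# Demo: write a 3 s mono ramp at a 1 kHz sample rate, then read seconds 1 to 2.
path = os.path.join(tempfile.mkdtemp(), "ramp.wav")
with wave.open(path, "wb") as wav:
    wav.setnchannels(1)
    wav.setsampwidth(2)
    wav.setframerate(1000)
    wav.writeframes(struct.pack("<3000h", *range(3000)))

excerpt = read_excerpt(path, start_s=1.0, duration_s=1.0)
print(len(excerpt), excerpt[0], excerpt[-1])  # 1000 1000 1999
```

Because every excerpt has the same length, such chunks can be stacked into a batch without padding.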

| Library | Version | Short-Name/Code | Out Type | Supported codecs | Excerpts/Seeking |
|---|---|---|---|---|---|
| scipy.io.wavfile | 1.9.3 | `scipy` | Numpy | PCM (only 16 bit) | ❌ |
| scipy.io.wavfile memmap | 1.9.3 | `scipy_mmap` | Numpy | PCM (only 16 bit) | ✅ |
| soundfile (libsndfile) | 0.12.1 | `soundfile` | Numpy | PCM, Ogg, Flac, MP3 | ✅ |
| pydub | 0.25.1 | `pydub` | Python Array | PCM, MP3, OGG or other FFMPEG/libav supported codec | ❌ |
| aubio | 0.4.9 | `aubio` | Numpy Array | PCM, MP3, OGG or other avconv supported codec | ✅ |
| audioread (FFMPEG) | 2.1.9 | `ar_ffmpeg` | Numpy Array | all of FFMPEG | ❌ |
| librosa | 0.10.0 | `librosa` | Numpy Array | all of soundfile | ✅ |
| tensorflow tf.io.audio.decode_wav | 2.11.0 | `tf_decode_wav` | Tensorflow Tensor | PCM (only 16 bit) | ❌ |
| tensorflow-io from_audio | 0.30.0 | `tfio_fromaudio` | Tensorflow Tensor | PCM, Ogg, Flac | ✅ |
| torchaudio (sox_io) | 0.13.1 | `torchaudio` | PyTorch Tensor | all codecs supported by Sox | ✅ |
| torchaudio (soundfile) | 0.13.1 | `torchaudio` | PyTorch Tensor | all codecs supported by Soundfile | ✅ |
| soxbindings | 0.9.0 | `soxbindings` | Numpy Tensor | all codecs supported by Soundfile | ✅ |
| stempeg | 0.2.3 | `stempeg` | Numpy Tensor | all codecs supported by FFMPEG | ✅ |

- audioread (coreaudio): only available on macOS.
- audioread (gstreamer): too difficult to install.
- madmom: same ffmpeg interface as `ar_ffmpeg`.
- pymad: only support for MP3, also very slow.
- python builtin `wave`: too limited codec support.

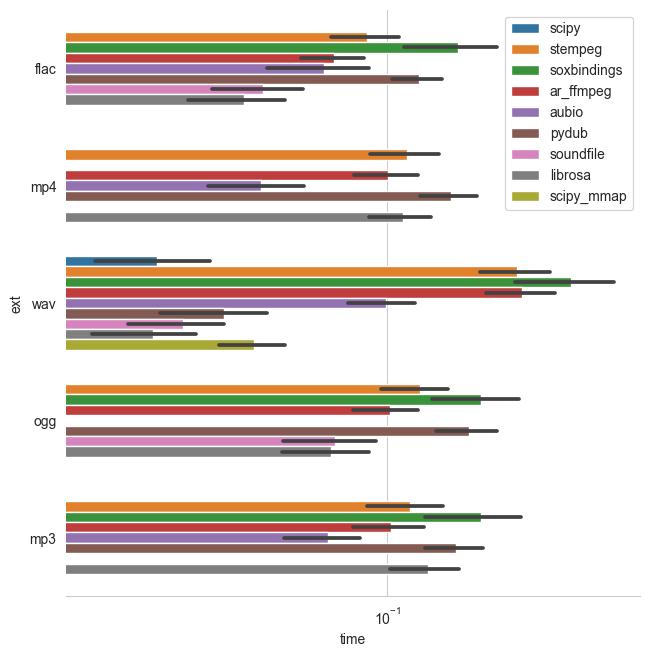

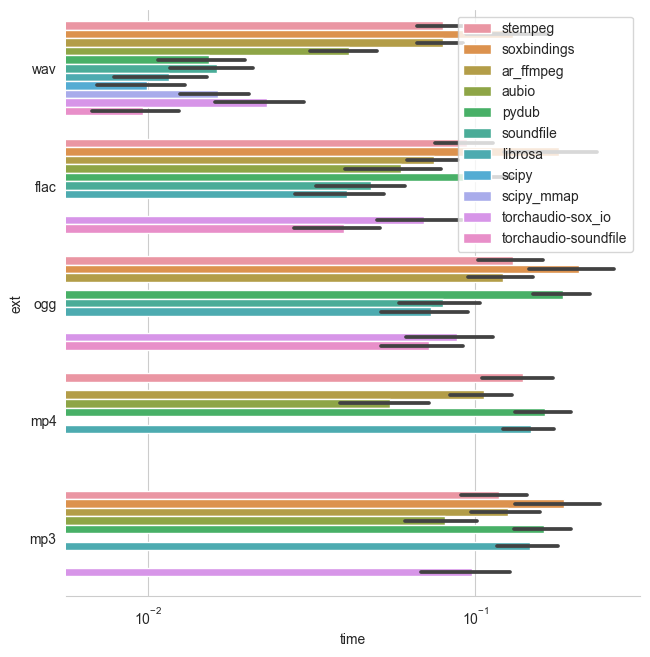

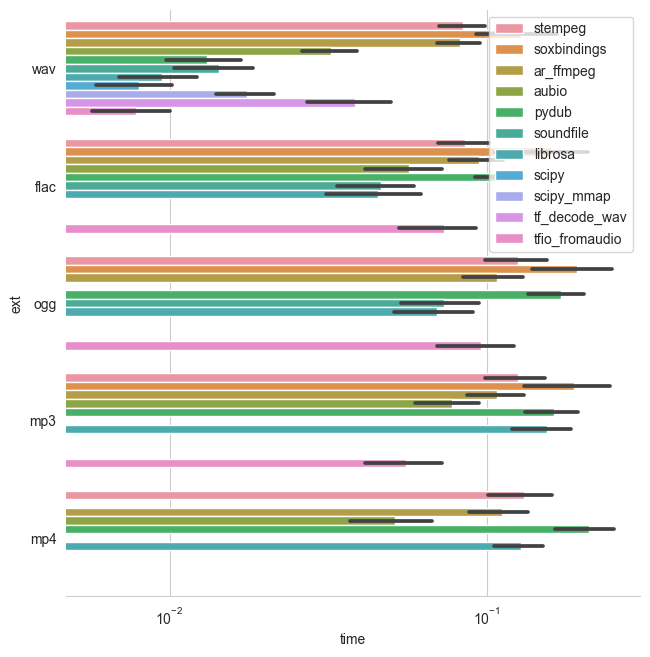

The benchmark loads a number of (single-channel) audio files of different lengths (between 1 and 151 seconds) and measures the time until the audio is converted to a tensor. Depending on the target tensor type (either numpy, pytorch or tensorflow), a different number of libraries were compared. E.g., when the output type is numpy and the target tensor type is tensorflow, the loading time includes the cast operation to the target tensor. Furthermore, multiprocessing was disabled for data loaders. So, especially for deep learning applications, the loading speed doesn't necessarily represent the batch loading speed.
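The measurement described above can be sketched as a small timing helper. This is an illustration under assumptions, not the repository's actual benchmark code: the name `time_loader`, the `to_tensor` hook, and the best-of-`repeats` strategy are all hypothetical choices made for this example.

```python
import time


def time_loader(load_fn, paths, to_tensor=lambda x: x, repeats=3):
    """Return the best-of-`repeats` wall time in seconds for each path."""
    timings = {}
    for path in paths:
        best = float("inf")
        for _ in range(repeats):
            start = time.perf_counter()
            to_tensor(load_fn(path))  # the cast to the target type is timed too
            best = min(best, time.perf_counter() - start)
        timings[path] = best
    return timings


# Demo with a stand-in loader; a real run would pass a library's load function
# and a conversion such as a cast to a framework tensor.
fake_load = lambda path: [0.0] * 1000
result = time_loader(fake_load, ["a.wav", "b.wav"], to_tensor=tuple)
print(sorted(result))
```

Everything runs in a single process here, which mirrors the note above that multiprocessing was disabled.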

**All results shown below depict loading time in seconds.**

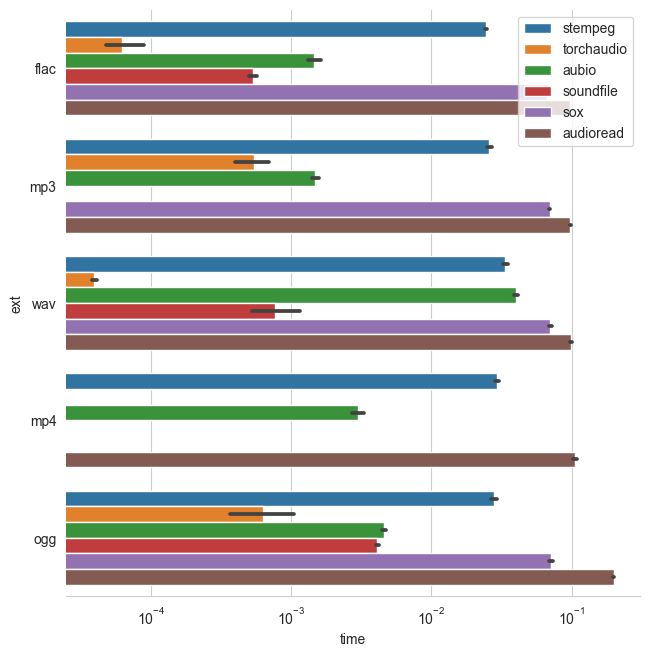

In addition to loading the file, one might also be interested in extracting metadata. To benchmark this, we requested the sampling rate, channels, samples, and duration for every file, each in a separate, consecutive call. This means a library is not allowed to open the file once and extract all metadata together. Note that we have excluded pydub from the benchmark results on metadata, as it was significantly slower than the other tools.
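The "consecutive calls" constraint can be illustrated with a short sketch in which every metadata query reopens the file. It uses the built-in `wave` module only to stay self-contained (that module is not one of the benchmarked libraries), and the four function names are hypothetical.

```python
import os
import tempfile
import wave


def sampling_rate(path):
    with wave.open(path, "rb") as wav:
        return wav.getframerate()


def channels(path):
    with wave.open(path, "rb") as wav:
        return wav.getnchannels()


def samples(path):
    with wave.open(path, "rb") as wav:
        return wav.getnframes()


def duration(path):
    with wave.open(path, "rb") as wav:
        return wav.getnframes() / wav.getframerate()


# Demo: 2 s of 16-bit mono silence at 8 kHz.
path = os.path.join(tempfile.mkdtemp(), "silence.wav")
with wave.open(path, "wb") as wav:
    wav.setnchannels(1)
    wav.setsampwidth(2)
    wav.setframerate(8000)
    wav.writeframes(b"\x00\x00" * 16000)

# Four separate calls, so the file is opened four times.
info = (sampling_rate(path), channels(path), samples(path), duration(path))
print(info)  # (8000, 1, 16000, 2.0)
```

Opening the file for each query makes the cost of a library's header parsing show up four times, which is what the metadata benchmark is meant to expose.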

To test the loading speed, we generate different durations of random (noise) audio data and encode it either to PCM 16bit WAV, MP3 CBR, or MP4.
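For illustration, the PCM 16-bit WAV case can be sketched in pure Python. This is not the repository's `generate_audio.sh`; the function name `write_noise_wav`, the fixed seed, and the sample rates are assumptions made for this example, and the MP3 and MP4 encodings are not covered because they need an external encoder.

```python
import os
import random
import struct
import tempfile
import wave


def write_noise_wav(path, duration_s, sample_rate=44100, seed=0):
    """Write `duration_s` seconds of mono white noise as 16-bit PCM WAV."""
    rng = random.Random(seed)
    n_samples = int(duration_s * sample_rate)
    # Uniform noise over the full signed 16-bit range.
    noise = [rng.randint(-32768, 32767) for _ in range(n_samples)]
    with wave.open(path, "wb") as wav:
        wav.setnchannels(1)   # single channel, as in the benchmark
        wav.setsampwidth(2)   # 2 bytes per sample = 16 bit
        wav.setframerate(sample_rate)
        wav.writeframes(struct.pack(f"<{n_samples}h", *noise))
    return n_samples


# Demo: files of different durations, at a low sample rate to keep it quick.
out_dir = tempfile.mkdtemp()
for seconds in (1, 2):
    write_noise_wav(os.path.join(out_dir, f"{seconds}.wav"), seconds, sample_rate=8000)
print(sorted(os.listdir(out_dir)))  # ['1.wav', '2.wav']
```

Noise is a reasonable worst case for compressed formats, since it leaves the encoder little redundancy to exploit.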

The data is generated by a shell script. To generate the data in the folder `AUDIO`, run

    generate_audio.sh

Build the docker container using

    docker build -t audio_benchmark .

It installs all the package requirements for all audio libraries. Afterwards, mount the data directory into the docker container and run `run.sh` inside the container, e.g.:

    docker run -v /home/user/repos/python_audio_loading_benchmark/:/app \
        -it audio_benchmark:latest /bin/bash run.sh

Alternatively, create a virtual environment and install the necessary dependencies with

    virtualenv --python=/usr/bin/python3 --no-site-packages _env
    source _env/bin/activate
    pip install -r requirements.txt
    pip install git+https://github.com/pytorch/audio.git

Run the benchmark with

    bash run.sh

and plot the result with

    python plot.py

This generates PNG files in the `results` folder.


@faroit, @hagenw

We encourage interested users to contribute to this repository in the issue section and via pull requests. Particularly interesting are notifications of new tools and new versions of existing packages. Since benchmarks are subjective, I (@faroit) will rerun the benchmark on our server.