This repository contains a machine learning pipeline for training an object detection model to identify hazel dormice (Muscardinus avellanarius) in images. The dataset is also included in dormouse-detection/training_data, and a pre-trained model can be downloaded from the Releases page.

The model architecture uses transfer learning from a YOLOv8 model pre-trained on the ImageNet dataset.

The Dormouse Detection Dataset in this project was created by downloading images from iNaturalist and annotating them with CVAT (Computer Vision Annotation Tool).

🔧 Built with Keras and TensorFlow.

```
dormouse-detection/
├── data_downloader/          # Scripts for downloading data
├── logs/                     # Training logs and metrics
├── plots/                    # Visualisation of results
├── src/                      # Source code for the pipeline
│   ├── __init__.py
│   ├── data_loader.py        # Handles data loading and preprocessing
│   ├── logger.py             # Logging
│   ├── metrics_callback.py   # Model performance metrics computed during training
│   ├── model.py              # Model architecture
│   ├── training_pipeline.py  # Training and evaluation
│   └── utils.py              # Utility functions
├── tests/                    # Unit testing
├── trained_models/           # Saved trained models
├── training_data/            # Training dataset
├── .gitignore
├── config.py                 # Configuration for model training and hyperparameters
├── inference.py              # Script for running inference with trained models
├── main.py                   # Main entry point for training
├── poetry.lock
├── pyproject.toml
└── README.md
```

- Create a virtual environment using Conda.

```bash
# Create the environment
conda create --name env python=3.9.6

# Activate the environment
conda activate env
```

- Install Poetry and the project dependencies.

```bash
# Install Poetry
pip install poetry

# Install dependencies
poetry install
```

In addition to training your own model with the instructions below, a pre-trained model is available for those who want to use it directly for inference without going through the training process.

You can download the .keras model file from the Releases section.

To train the model, use main.py as the entry point:

```bash
python main.py
```

This loads the configuration parameters from config.py and starts the training process, saving models and logs to their respective directories (trained_models/ and logs/).

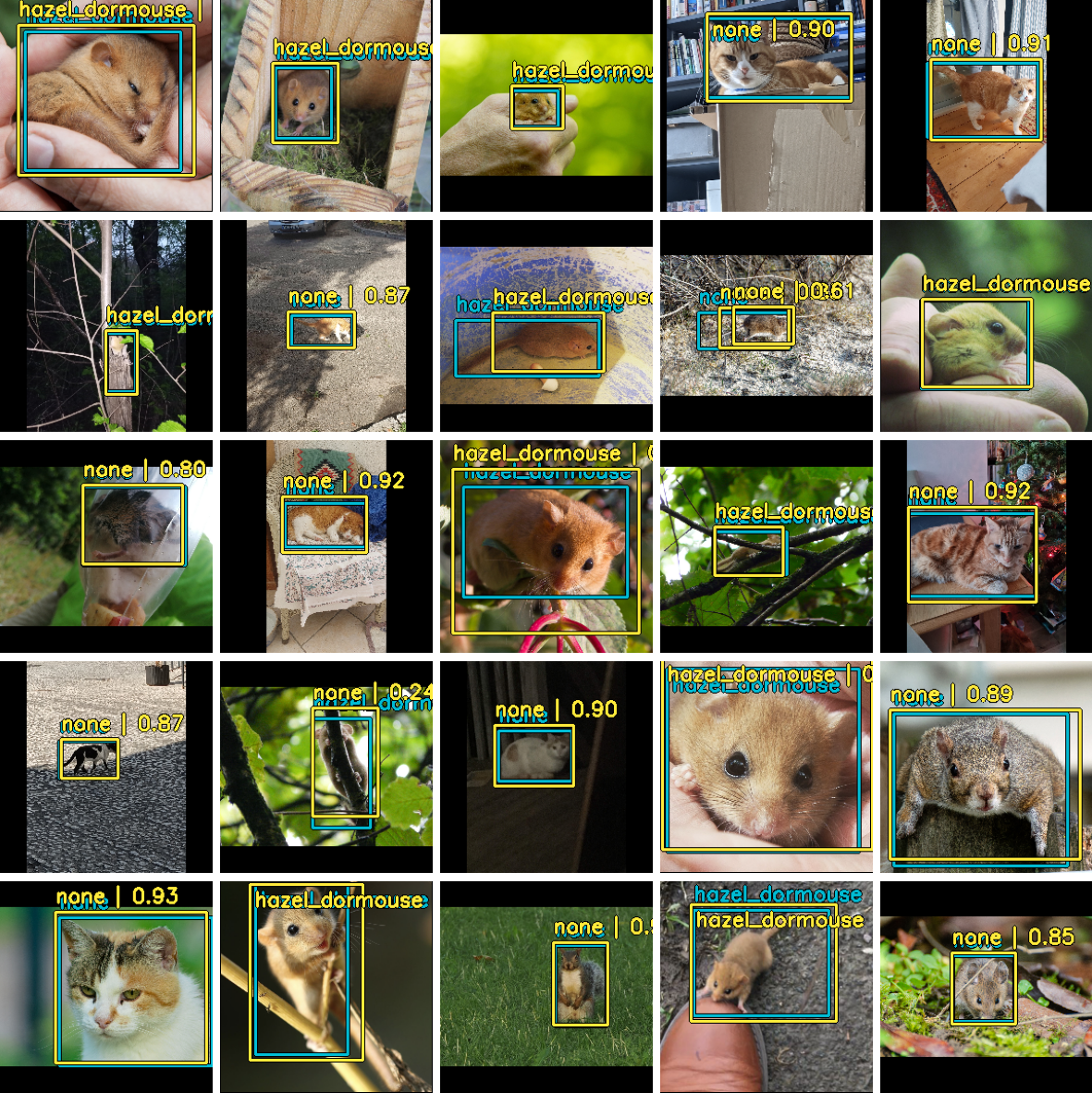

After training completes, the test set predictions are visualised and saved to plots/.

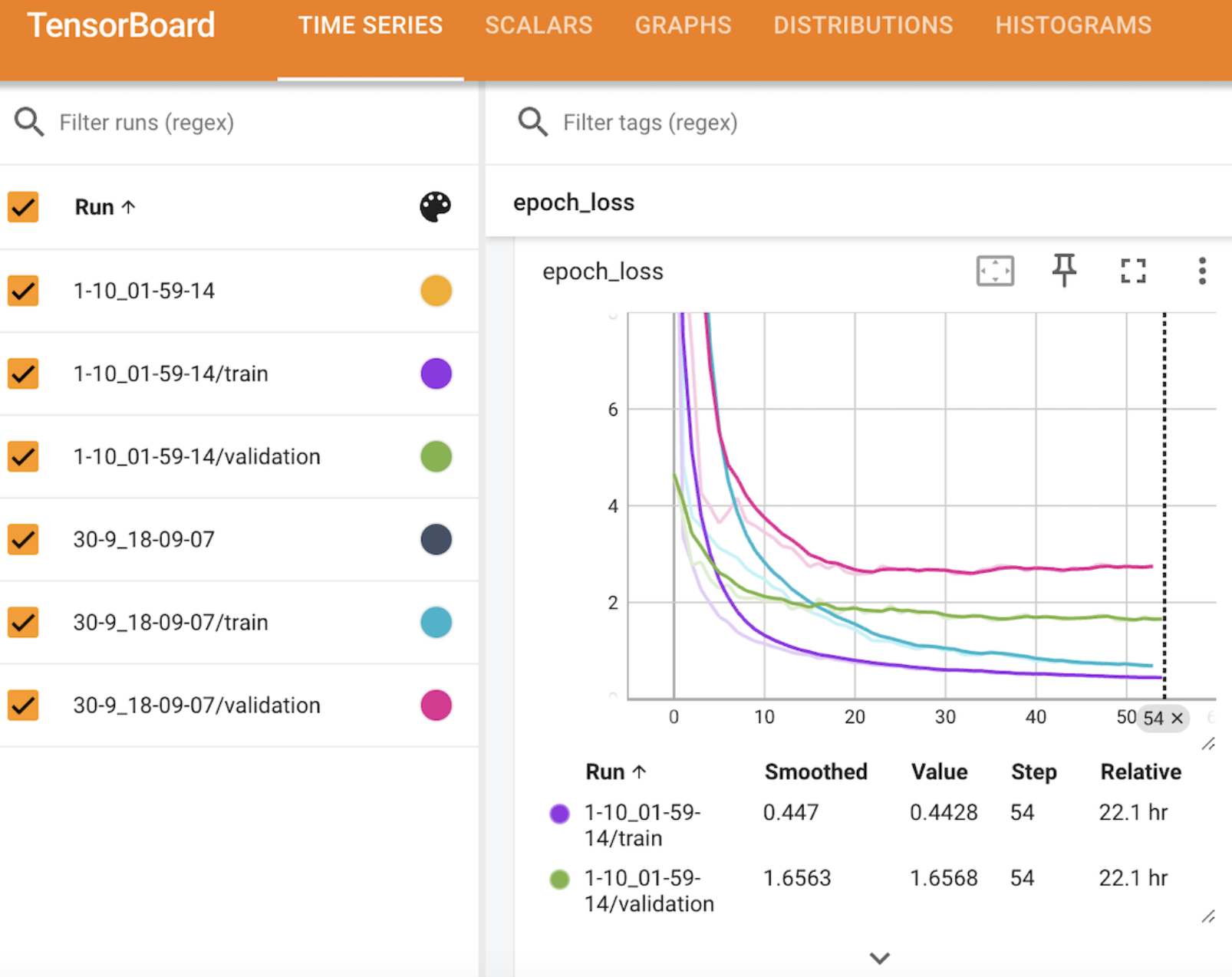

To view the training performance in TensorBoard:

```bash
tensorboard --logdir=logs/fit
```

To run inference on a new image using a trained model:

```bash
python inference.py --model_path trained_models/model_name.keras --image_path path/to/image.jpg
```

Or, to run predictions on all images in a folder:

```bash
python inference.py --model_path trained_models/model_name.keras --image_dir path/to/dir
```

- Positive examples: 214 images of hazel dormice, each with bounding box annotations. The annotation class ID for hazel dormice is 0.

- Negative examples: 232 images of various non-target species with bounding box annotations, broken down as follows:

  - Common shrew: 22 images

  - Grey squirrel: 26 images

  - Wood mouse: 81 images

  - Domestic cat: 103 images

  All non-target species are annotated with class ID 1.
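The per-class image counts above can be checked against a local copy of the data by reading the YOLO label files (format described below). The following is a minimal sketch, not code from this repository; `count_images_per_class` is a hypothetical helper, and it counts an image once per class even if that image contains several boxes of the same class.

```python
from collections import Counter
from typing import Iterable


def count_images_per_class(label_texts: Iterable[str]) -> Counter:
    """Count how many label files contain at least one box of each class ID."""
    counts: Counter = Counter()
    for text in label_texts:
        # Each non-empty line starts with the integer class ID.
        class_ids = {int(line.split()[0]) for line in text.splitlines() if line.strip()}
        # Updating with a set means each image counts at most once per class.
        counts.update(class_ids)
    return counts
```

For example, `count_images_per_class(p.read_text() for p in Path("training_data").rglob("*.txt"))` (with `from pathlib import Path`), assuming every .txt file under training_data/ is a label file. With the dataset as described, class 0 should appear in 214 files and class 1 in 232.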

The training data is organised in the training_data/ directory and follows the YOLO annotation format, which includes:

- Images: All images are in .jpg format.

- Annotations: A corresponding .txt annotation file for each image, in the YOLO format. Each line describes one bounding box with the following structure:

  ```
  class_id rel_centre_x rel_centre_y rel_width rel_height
  ```

  Relative values are normalised to the range 0 to 1, where 1 corresponds to the full width (for x values) or full height (for y values) of the image.

  - class_id: An integer representing the class of the annotated object (0 for hazel dormouse, 1 for non-target species).

  - rel_centre_x, rel_centre_y: The relative centre coordinates of the bounding box, between 0 and 1.

  - rel_width, rel_height: The relative width and height of the bounding box, between 0 and 1.
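To illustrate the format, the sketch below converts one annotation line into absolute corner coordinates in pixels. `PixelBox` and `yolo_to_pixel_box` are hypothetical names used only for this example; src/data_loader.py may represent boxes differently.

```python
from typing import NamedTuple


class PixelBox(NamedTuple):
    class_id: int
    x_min: float
    y_min: float
    x_max: float
    y_max: float


def yolo_to_pixel_box(line: str, image_width: int, image_height: int) -> PixelBox:
    """Convert one YOLO annotation line to corner coordinates in pixels."""
    fields = line.split()
    if len(fields) != 5:
        raise ValueError(f"expected 5 fields, got {len(fields)}: {line!r}")
    class_id = int(fields[0])
    rel_cx, rel_cy, rel_w, rel_h = (float(v) for v in fields[1:])
    # Scale the normalised values by the image size in the matching dimension.
    cx, cy = rel_cx * image_width, rel_cy * image_height
    w, h = rel_w * image_width, rel_h * image_height
    # The centre minus/plus half the size gives the box corners.
    return PixelBox(class_id, cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2)


print(yolo_to_pixel_box("0 0.5 0.5 0.25 0.5", 640, 480))
# PixelBox(class_id=0, x_min=240.0, y_min=120.0, x_max=400.0, y_max=360.0)
```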

- The dataset is split into training and test sets with a 90/10 ratio.

- Because of the limited amount of data, the validation set used for early stopping is the same as the test set.
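A 90/10 split of this kind can be sketched as a seeded shuffle followed by a slice. This is an illustrative, unstratified version, not the repository's own splitting code: `split_train_test` and the seed value are assumptions, and the actual pipeline may split differently (for example, stratified by class).

```python
import random
from typing import List, Sequence, Tuple


def split_train_test(
    image_paths: Sequence[str], test_fraction: float = 0.1, seed: int = 42
) -> Tuple[List[str], List[str]]:
    """Shuffle once with a fixed seed, then hold out `test_fraction` of the items."""
    shuffled = list(image_paths)
    random.Random(seed).shuffle(shuffled)  # local RNG: reproducible, no global state
    n_test = round(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]


# 446 images in total (214 positive + 232 negative); file names are placeholders.
train_paths, test_paths = split_train_test([f"img_{i:03d}.jpg" for i in range(446)])
# The test set doubles as validation data for early stopping.
val_paths = test_paths
print(len(train_paths), len(test_paths))  # 401 45
```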

If data augmentation is enabled in config.py, the training set is duplicated 5 times and the following augmentations are applied: horizontal flip and jittered resize.
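Of the two augmentations, horizontal flip has the simplest effect on the labels: because YOLO coordinates are centre-based and normalised, only the x centre changes (new x = 1 - x). The sketch below applies this to a single annotation line. `hflip_yolo_line` is a hypothetical helper, not a function from src/, and jittered resize is not covered.

```python
def hflip_yolo_line(line: str) -> str:
    """Mirror one YOLO annotation left to right.

    Only rel_centre_x changes; rel_centre_y, rel_width and rel_height
    are unaffected by a horizontal flip.
    """
    class_id, cx, cy, w, h = line.split()
    return f"{class_id} {1.0 - float(cx):.6f} {cy} {w} {h}"


print(hflip_yolo_line("0 0.25 0.5 0.2 0.3"))  # 0 0.750000 0.5 0.2 0.3
```

The image itself must be mirrored to match, for example with `image[:, ::-1]` on a NumPy array of shape (height, width, channels).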

Email: fbennedik1@gmail.com.