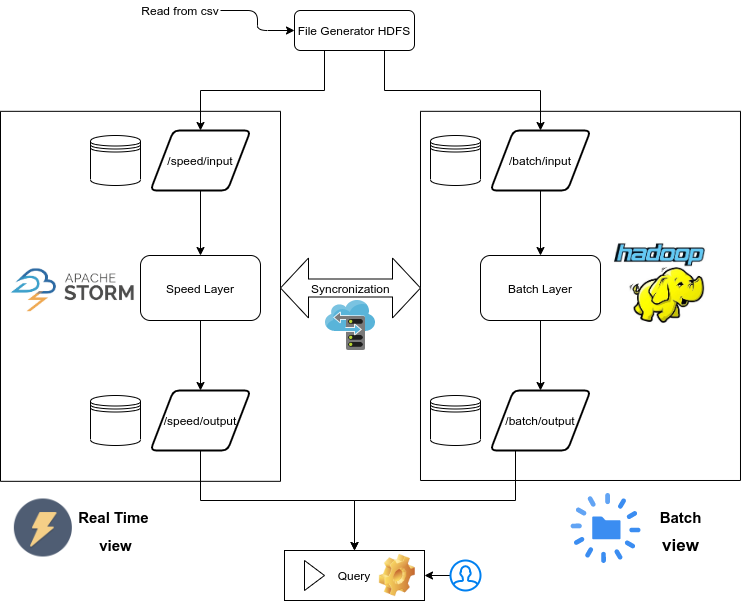

Computing arbitrary functions on large real-time data sets is a difficult task. This study shows one possible approach to problems of this type, using sentiment analysis of tweets as the example task. Instead of fetching tweets through the Twitter API, we simulate their arrival by reading them from the Sentiment140 dataset. In our scenario we build a Lambda Architecture that uses Apache Hadoop for the Batch Layer, Apache Storm for the Speed Layer, and HDFS for data management. We use LingPipe to classify tweets using computational linguistics. This architecture makes it possible to harness the full power of a computer cluster for data processing, scales easily, and meets the low-latency requirements of answering queries in real time.
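As a rough illustration of how a query in a Lambda Architecture combines the two layers, the sketch below merges a batch view with a real-time view of sentiment counts. It is a minimal sketch and not the code in this repository: the class name `LambdaQuerySketch`, the method `mergeViews`, and the label strings are hypothetical, and it assumes the two views count disjoint sets of tweets so their counts can simply be summed.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical sketch: names are illustrative and not taken from the repository.
class LambdaQuerySketch {

    // Merge the precomputed batch view with the incremental real-time view.
    // Assumption: the views cover disjoint sets of tweets, so counts are additive.
    static Map<String, Long> mergeViews(Map<String, Long> batchView,
                                        Map<String, Long> speedView) {
        Map<String, Long> merged = new HashMap<>(batchView);
        for (Map.Entry<String, Long> entry : speedView.entrySet()) {
            // Add the real-time count to the batch count for the same label.
            merged.merge(entry.getKey(), entry.getValue(), Long::sum);
        }
        return merged;
    }

    public static void main(String[] args) {
        // Counts produced by the Batch Layer (made-up numbers).
        Map<String, Long> batchView = new HashMap<>();
        batchView.put("positive", 120L);
        batchView.put("negative", 80L);

        // Counts for tweets that arrived after the last batch run (made-up numbers).
        Map<String, Long> speedView = new HashMap<>();
        speedView.put("positive", 3L);
        speedView.put("negative", 5L);

        System.out.println(mergeViews(batchView, speedView));
    }
}
```

Summing is only valid while the speed view holds counts the batch view has not absorbed yet; once a batch run catches up, the corresponding real-time counts would have to be discarded.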

- Java JDK: OpenJDK 11

- Apache Hadoop 2.9.2

- Apache Storm 2.1.0

- HdfsSpout Apache Storm 2.1.0

- LingPipe 4.1.2

- JFreeChart 1.0.1

Sentiment140, 1.6 million tweets with annotated sentiment: download
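For orientation, the sketch below parses one line of the Sentiment140 file. It assumes the commonly distributed CSV layout, in which every field is double-quoted and the six columns are polarity, id, date, query, user, and text, with polarity `0` meaning negative, `2` neutral, and `4` positive. The class and method names are hypothetical, and the repository may read the file differently.

```java
// Hypothetical sketch: names are illustrative and not taken from the repository.
class Sentiment140LineSketch {

    // Split one line into its six fields: polarity, id, date, query, user, text.
    // Assumption: every field is wrapped in double quotes and separated by commas.
    static String[] parseLine(String line) {
        String trimmed = line.trim();
        if (trimmed.length() < 2
                || trimmed.charAt(0) != '"'
                || trimmed.charAt(trimmed.length() - 1) != '"') {
            throw new IllegalArgumentException("Expected a fully quoted CSV line");
        }
        // Drop the outer quotes, then split on the quote-comma-quote separator.
        // The limit of 6 keeps any later separator inside the tweet text.
        String inner = trimmed.substring(1, trimmed.length() - 1);
        String[] fields = inner.split("\",\"", 6);
        if (fields.length != 6) {
            throw new IllegalArgumentException("Expected 6 fields, found " + fields.length);
        }
        return fields;
    }

    // Map the numeric polarity column to a readable label.
    static String label(String polarity) {
        switch (polarity) {
            case "0": return "negative";
            case "2": return "neutral";
            case "4": return "positive";
            default: throw new IllegalArgumentException("Unknown polarity: " + polarity);
        }
    }

    public static void main(String[] args) {
        // Made-up example line in the assumed format.
        String line = "\"4\",\"1\",\"Mon Apr 06 22:19:45 PDT 2009\",\"NO_QUERY\",\"someuser\",\"great day, really\"";
        String[] fields = parseLine(line);
        System.out.println(label(fields[0]) + ": " + fields[5]);
    }
}
```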

git clone https://github.com/fedem96/SentimentAnalysis-LambdaArchitecture.git

Import all the JARs/libraries into your project and test the Hadoop configuration. To set up a single-node cluster, follow the Hadoop guide.

- Classifier: edit the run configuration and set args[0]=dataset_file and args[1]=file in which to save the classifier.

- Generator: edit the run configuration and set args[0]=dataset_file to generate tweets on HDFS.

- Batch Layer: no parameters needed in args. Run after Generator.

- Speed Layer: no parameters needed in args. Run after Generator.

- Query GUI: no parameters needed in args. Run after the Batch Layer and Speed Layer.

- Clear: no parameters needed in args. Used to clear all the directories in HDFS before running a new simulation.