A customizable generative AI (GenAI) retrieval-augmented generation (RAG) application built on these Google Cloud components:

- Gemini: LLM

- textembedding-gecko: embedding model

- Cloud Run: app deployment

- DocAI: document parsing

The app also uses the following open source components:

- gradio: UI

- langchain: orchestration of RAG components
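The retrieve-then-generate flow that these components implement can be sketched in plain Python. This is a toy illustration, not the app's code. A bag-of-words count vector stands in for textembedding-gecko, cosine similarity over a list stands in for the vector store, and `build_prompt` only assembles the text that would be sent to Gemini. All function names are hypothetical.

```python
import math
import re
from collections import Counter
from typing import List


def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts (stand-in for textembedding-gecko)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors; 0.0 if either is empty."""
    dot = sum(count * b[token] for token, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, chunks: List[str], k: int = 2) -> List[str]:
    """Return the k chunks most similar to the query (the retrieval snippets)."""
    q = embed(query)
    return sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)[:k]


def build_prompt(query: str, snippets: List[str]) -> str:
    """Ground the question in retrieved context; the real app would send this to Gemini."""
    context = "\n".join(f"- {s}" for s in snippets)
    return f"Answer using only the context below.\n\nContext:\n{context}\n\nQuestion: {query}"


if __name__ == "__main__":
    chunks = ["Revenue grew 10% in 2023.", "The CEO is Jane Doe.", "Offices are in Berlin."]
    question = "Who is the CEO?"
    print(build_prompt(question, retrieve(question, chunks, k=1)))
```

In the real application, LangChain wires these stages together and the embedding and generation calls go to Vertex AI instead of local functions.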

The project exposes quick customization options in `src/config.py`:

- unstructured text parsing

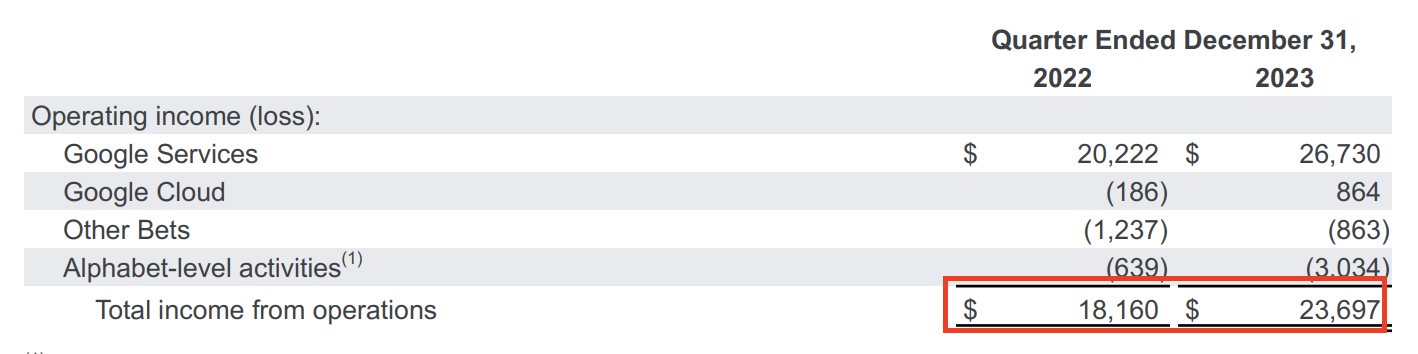

- table parsing

- entity parsing

- advanced options for each parsing strategy

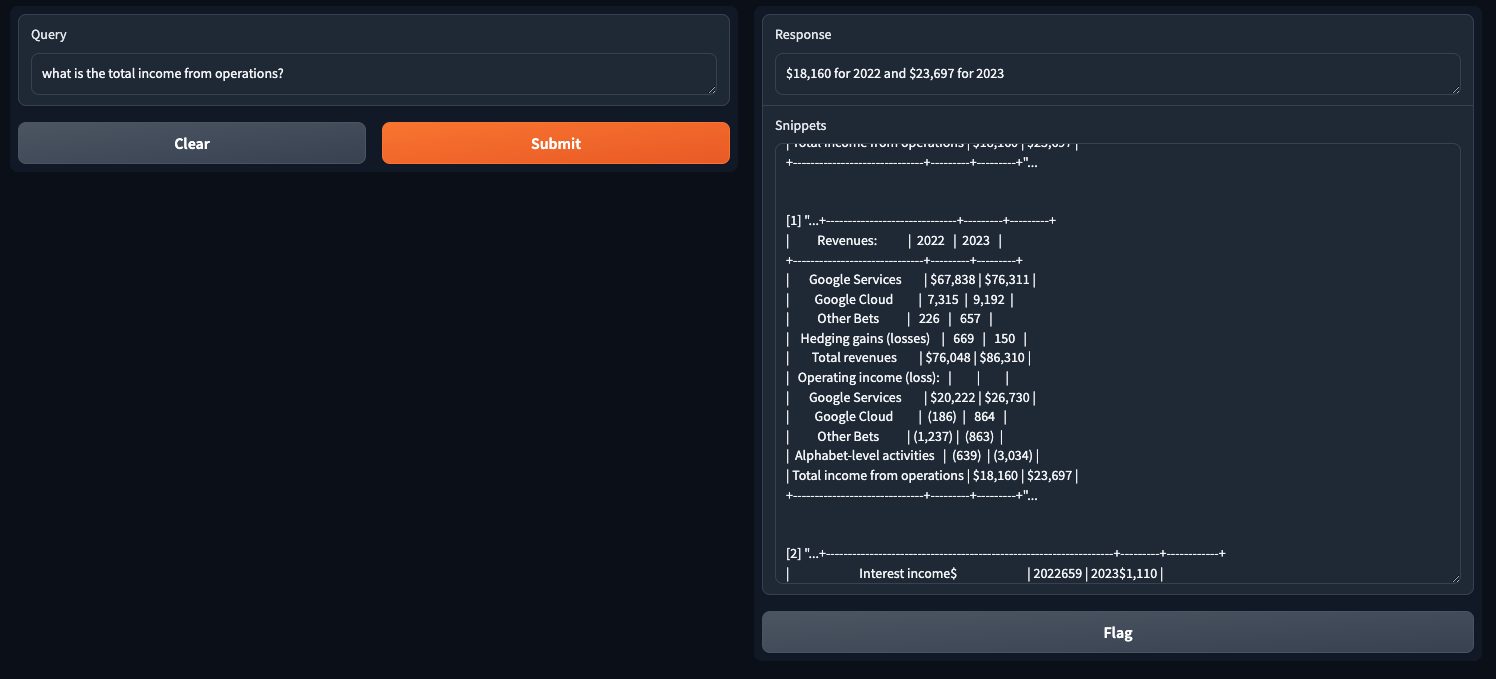

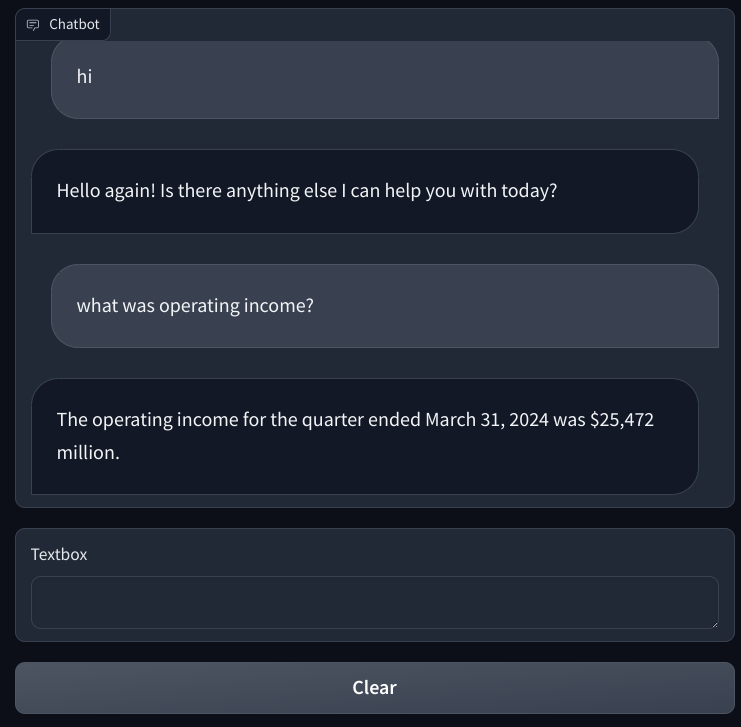

- chatbot vs. single-search mode
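One way to model these options is a small typed settings object that is validated at startup. The sketch below is an assumption, not the contents of `src/config.py`: the class names, field names, defaults, and the `chunk_size`/`chunk_overlap` advanced options are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class ParsingOptions:
    """Hypothetical toggles for the three parsing strategies."""
    unstructured_text: bool = True  # Form Parser text blocks
    tables: bool = True             # Form Parser tables
    entities: bool = False          # Custom Extractor (CDE) entities
    chunk_size: int = 1000          # hypothetical advanced option: characters per chunk
    chunk_overlap: int = 100        # hypothetical advanced option: overlap between chunks

    def __post_init__(self) -> None:
        if not (self.unstructured_text or self.tables or self.entities):
            raise ValueError("enable at least one parsing strategy")
        if not 0 <= self.chunk_overlap < self.chunk_size:
            raise ValueError("chunk_overlap must be >= 0 and smaller than chunk_size")


@dataclass(frozen=True)
class AppOptions:
    parsing: ParsingOptions = field(default_factory=ParsingOptions)
    mode: str = "chatbot"  # "chatbot" for multi-turn chat, "search" for a one-off query

    def __post_init__(self) -> None:
        if self.mode not in ("chatbot", "search"):
            raise ValueError(f"mode must be 'chatbot' or 'search', got {self.mode!r}")


if __name__ == "__main__":
    print(AppOptions(mode="search", parsing=ParsingOptions(entities=True)))
```

Validating in `__post_init__` makes an invalid combination fail immediately instead of surfacing later as an empty retrieval result.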

Roadmap:

- ✅ Add retrieval snippets to the UI

- ✅ Integrate DocAI Form Parser for tables and remove table snippets from unstructured text parsing

- ✅ Integrate DocAI GenAI CDE 2.0 parser for entity extraction

- ✅ Add option for chatbot instead of single search

- ⬜ Read a directory instead of a single file

- ⬜ Read files from GCS

- ⬜ Include Vertex Search as a retrieval source

- ⬜ Include Vertex Vector Search as a retrieval source

- ⬜ Filtered retrieval based on document metadata (year, customer, etc.)

- ⬜ Add an evaluation job that measures the accuracy of the RAG model

- ⬜ Add GitHub Actions

Prerequisites: a Google Cloud Platform (GCP) project and Python >= 3.8.

Enable the following APIs:

- Cloud Document AI API

- Vertex AI API

- Cloud Run API

- Generative Language API
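The APIs can be enabled in the console or with `gcloud services enable`. The sketch below builds that command in Python from the API service names. The helper name and the `my-project` ID are placeholders.

```python
import shlex
from typing import List, Sequence

# Service names for the four APIs listed above.
REQUIRED_APIS = (
    "documentai.googleapis.com",          # Cloud Document AI API
    "aiplatform.googleapis.com",          # Vertex AI API
    "run.googleapis.com",                 # Cloud Run API
    "generativelanguage.googleapis.com",  # Generative Language API
)


def enable_apis_command(project: str, apis: Sequence[str] = REQUIRED_APIS) -> List[str]:
    """Build the argv for a single `gcloud services enable` call."""
    return ["gcloud", "services", "enable", *apis, f"--project={project}"]


if __name__ == "__main__":
    # Print for review; run it with subprocess.run(cmd, check=True) once it looks right.
    print(shlex.join(enable_apis_command("my-project")))
```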

Create a service account with the following roles:

- Vertex AI Administrator

- Document AI API User

- Cloud Run Developer

Create a service account key. This automatically downloads a JSON file containing the key.
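The service account, its role bindings, and the key can also be created with `gcloud`. This sketch assumes that the predefined role IDs `roles/aiplatform.admin`, `roles/documentai.apiUser`, and `roles/run.developer` correspond to the three roles above. The account name `rag-app` and the key path `key.json` are hypothetical.

```python
import shlex
from typing import List

# Predefined role IDs assumed to match the roles listed above.
ROLES = (
    "roles/aiplatform.admin",    # Vertex AI Administrator
    "roles/documentai.apiUser",  # Document AI API User
    "roles/run.developer",       # Cloud Run Developer
)


def service_account_commands(project: str, name: str, key_file: str) -> List[List[str]]:
    """Return gcloud argvs: create the account, bind each role, then download a JSON key."""
    email = f"{name}@{project}.iam.gserviceaccount.com"
    commands = [["gcloud", "iam", "service-accounts", "create", name, f"--project={project}"]]
    for role in ROLES:
        commands.append([
            "gcloud", "projects", "add-iam-policy-binding", project,
            f"--member=serviceAccount:{email}", f"--role={role}",
        ])
    commands.append([
        "gcloud", "iam", "service-accounts", "keys", "create", key_file,
        f"--iam-account={email}",
    ])
    return commands


if __name__ == "__main__":
    for cmd in service_account_commands("my-project", "rag-app", "key.json"):
        print(shlex.join(cmd))
```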

Next, edit `src/config.py` to set your GCP project ID:

`PROJECT = "<PROJECT_ID>"`

Add the PDF document you want to ask questions about to the `docs/` directory and set its location in `src/config.py`:

`FILENAME = "<DOCUMENT_LOCATION>"`
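A quick sanity check can catch values that were left as placeholders. This hypothetical helper (not part of the project) flags any value still shaped like `<...>` and warns when `FILENAME` does not end in `.pdf`. Pass it the values you set in `src/config.py`.

```python
import re
from typing import Dict, List

PLACEHOLDER = re.compile(r"<[^<>]+>")  # matches e.g. <PROJECT_ID>, <ID>, <VERSION>


def find_config_problems(values: Dict[str, str]) -> List[str]:
    """Return human-readable problems with config values (empty list if none)."""
    problems = []
    for key, value in values.items():
        if PLACEHOLDER.search(value):
            problems.append(f"{key} still contains a placeholder: {value}")
    filename = values.get("FILENAME", "")
    if filename and not filename.lower().endswith(".pdf"):
        problems.append(f"FILENAME should point to a PDF: {filename}")
    return problems


if __name__ == "__main__":
    print(find_config_problems({"PROJECT": "<PROJECT_ID>", "FILENAME": "docs/report.pdf"}))
```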

Create the Document AI processors and add their IDs and versions to `src/config.py`.

This project needs two processors of different types: a `FORM_PARSER_PROCESSOR` and a `CUSTOM_EXTRACTION_PROCESSOR`. The Form Parser parses unstructured text blocks and tables, while the Custom Extractor pulls entities. The Custom Extractor includes newer generative AI features that make entity extraction easier.

`FORM_PROCESSOR_ID = "<ID>"`

`FORM_PROCESSOR_VERSION = "<VERSION>"`

`CDE_PROCESSOR_ID = "<ID>"`

`CDE_PROCESSOR_VERSION = "<VERSION>"`
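For reference, a single processor version can be called with the `google-cloud-documentai` client library roughly as follows. This is a sketch, not the app's parsing code. It assumes the processors live in the `us` location (the README does not specify one), and `processor_version_name` and `process_pdf` are hypothetical helpers.

```python
def processor_version_name(project: str, location: str, processor_id: str, version: str) -> str:
    """Full resource name of one processor version (the same format the client's
    processor_version_path() helper produces)."""
    return (
        f"projects/{project}/locations/{location}"
        f"/processors/{processor_id}/processorVersions/{version}"
    )


def process_pdf(path: str, project: str, location: str, processor_id: str, version: str):
    """Send a local PDF to a Document AI processor version and return the parsed Document.

    Requires `pip install google-cloud-documentai` and application default credentials
    (for example GOOGLE_APPLICATION_CREDENTIALS). The imports are local so the helper
    above works without the library installed.
    """
    from google.api_core.client_options import ClientOptions
    from google.cloud import documentai

    # Document AI uses regional endpoints such as us-documentai.googleapis.com.
    client = documentai.DocumentProcessorServiceClient(
        client_options=ClientOptions(api_endpoint=f"{location}-documentai.googleapis.com")
    )
    with open(path, "rb") as f:
        raw_document = documentai.RawDocument(content=f.read(), mime_type="application/pdf")
    request = documentai.ProcessRequest(
        name=processor_version_name(project, location, processor_id, version),
        raw_document=raw_document,
    )
    return client.process_document(request=request).document
```

Calling `process_pdf(FILENAME, PROJECT, "us", FORM_PROCESSOR_ID, FORM_PROCESSOR_VERSION)` would return a `Document` whose `text` and per-page `tables` come from the Form Parser. The CDE ID and version pair would be used the same way, with results in `document.entities`.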

Find the location of the downloaded service account JSON file and run the following in a terminal (no spaces around `=`):

`export GOOGLE_APPLICATION_CREDENTIALS=<SERVICE_ACCOUNT_FILE_LOCATION>`
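Before launching the app, a short standard-library check can confirm that the variable points at a readable service account key. The helper below is hypothetical. It relies only on the `"type": "service_account"` field that service account JSON keys contain.

```python
import json
import os
from pathlib import Path
from typing import List, Mapping, Optional


def check_credentials(env: Optional[Mapping[str, str]] = None) -> List[str]:
    """Return problems with GOOGLE_APPLICATION_CREDENTIALS (empty list if it looks usable)."""
    env = os.environ if env is None else env
    path = env.get("GOOGLE_APPLICATION_CREDENTIALS")
    if not path:
        return ["GOOGLE_APPLICATION_CREDENTIALS is not set"]
    key_file = Path(path).expanduser()
    if not key_file.is_file():
        return [f"{key_file} does not exist"]
    try:
        data = json.loads(key_file.read_text())
    except json.JSONDecodeError as exc:
        return [f"{key_file} is not valid JSON: {exc}"]
    kind = data.get("type") if isinstance(data, dict) else None
    if kind != "service_account":
        return [f"{key_file} is not a service account key (type={kind!r})"]
    return []


if __name__ == "__main__":
    print(check_credentials() or "credentials look OK")
```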

From a clone of this repository, in the same terminal, run:

`cd src`

`python main.py`

Modify the Dockerfile to use your own project:

`ENV GOOGLE_CLOUD_PROJECT=<PROJECT_ID>`

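Deploy to Cloud Run from the directory that contains the Dockerfile, where `<SERVICE_ACCOUNT>` is the service account's email address:

`gcloud run deploy <APP_NAME> --source . --region <REGION> --service-account=<SERVICE_ACCOUNT>`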
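Cloud Run sends traffic to the port in the `PORT` environment variable (8080 by default), so a Gradio app inside the container typically needs to listen on `0.0.0.0` at that port. The sketch below assumes the app reads `GOOGLE_CLOUD_PROJECT` (set in the Dockerfile) and falls back to the config value. `runtime_settings` is a hypothetical helper, not code from `main.py`.

```python
import os
from typing import Dict, Mapping, Optional, Union


def runtime_settings(
    env: Optional[Mapping[str, str]] = None, default_project: str = ""
) -> Dict[str, Union[str, int]]:
    """Resolve the project and listen address for local runs and Cloud Run."""
    env = os.environ if env is None else env
    return {
        # Set by `ENV GOOGLE_CLOUD_PROJECT` in the Dockerfile; fall back to the config value.
        "project": env.get("GOOGLE_CLOUD_PROJECT", default_project),
        # Listen on all interfaces so Cloud Run can route traffic into the container.
        "server_name": "0.0.0.0",
        # Cloud Run injects PORT; 8080 is its default and is used here as the local fallback.
        "server_port": int(env.get("PORT", "8080")),
    }


if __name__ == "__main__":
    print(runtime_settings(default_project="my-project"))
```

With a Gradio app object named `demo` (hypothetical), you would pass `server_name=settings["server_name"]` and `server_port=settings["server_port"]` to `demo.launch(...)`.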