This repository contains an implementation of the learning algorithm proposed in Deep Q-Learning from Demonstrations (Hester et al. 2018) for solving Atari 2600 video games using a combination of reinforcement learning and imitation learning techniques.

Note: The implementation is part of my Bachelor's Thesis Tiefes Q-Lernen mit Demonstrationen.


- DQN (cf. Human-level control through deep reinforcement learning (Mnih et al. 2015))

- Double DQN (cf. Deep Reinforcement Learning with Double Q-learning (Van Hasselt, Guez, Silver 2015))

- PER (cf. Prioritized Experience Replay (Schaul et al. 2015))

- Dueling DQN (cf. Dueling Network Architectures for Deep Reinforcement Learning (Wang et al. 2016))

- n-step DQN (cf. Understanding Multi-Step Deep Reinforcement Learning: A Systematic Study of the DQN Target (Hernandez-Garcia, Sutton 2019))

- DQfD (cf. Deep Q-Learning from Demonstrations (Hester et al. 2018))
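
What sets DQfD apart from the other entries in this list is a supervised large-margin loss applied to demonstration transitions, which pushes the Q-value of the demonstrator's action above all others. The following is a minimal sketch of that loss for a single state. The function name is hypothetical and not taken from this repository, and the default margin of 0.8 is the value reported by Hester et al., treated here as a tunable assumption.

```python
def large_margin_loss(q_values, expert_action, margin=0.8):
    """Large-margin classification loss for one demonstration state.

    q_values:      list of Q(s, a), one entry per action a
    expert_action: index of the action the demonstrator chose
    margin:        penalty added to every non-expert action (assumed value)
    """
    # Add the margin to every action except the expert's.
    augmented = [
        q + (0.0 if action == expert_action else margin)
        for action, q in enumerate(q_values)
    ]
    # The loss is zero only if the expert action beats all others by >= margin.
    return max(augmented) - q_values[expert_action]


if __name__ == "__main__":
    # Expert action clearly dominates: no penalty.
    print(large_margin_loss([1.0, 3.0, 0.5], expert_action=1))
    # A competing action is within the margin: positive penalty.
    print(large_margin_loss([2.9, 3.0, 0.5], expert_action=1))
```

In a full agent this term would only be evaluated on transitions that come from demonstrations, and would be added to the Q-learning and regularization losses with a weighting factor.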

In order to clone the repository, open your terminal, move to the directory in which you want to store the project and type

$ git clone https://github.com/felix-kerkhoff/DQfD.git

The installation of the GPU version of TensorFlow with the proper NVIDIA driver and CUDA libraries from source can be quite tricky.

I recommend using Anaconda/Miniconda to create a virtual environment for the installation of the necessary packages, as conda will automatically install the right CUDA libraries.

So type

$ conda create --name atari_env

to create an environment called atari_env.

If you already have a working TensorFlow 2 installation, you can of course also use venv and pip to create the virtual environment and install the packages.

To install the packages, we first have to activate the environment by typing:

$ conda activate atari_env

Then install the necessary packages specified in requirements.txt by using the following command in the directory of your project:

$ conda install --file requirements.txt -c conda-forge -c powerai

Note:

- If you want to use pip for the installation, you will need to make the following changes to the requirements.txt file:

  - replace the line atari_py==0.2.6 by atari-py==0.2.6

  - replace the line opencv==4.4.0 by opencv-python==4.4.0

- To be able to compile the Cython modules, make sure you have a proper C/C++ compiler installed. See the Cython Documentation for further information.

To check that everything works, I recommend training your first agent on the game Pong, as this game needs the least training time. To do so, run the following command in your terminal (in the directory of your project):

$ python pong_standard_experiment.py

You should see good results after about 150,000 training steps, which corresponds to about 15 minutes of computation time on my machine.

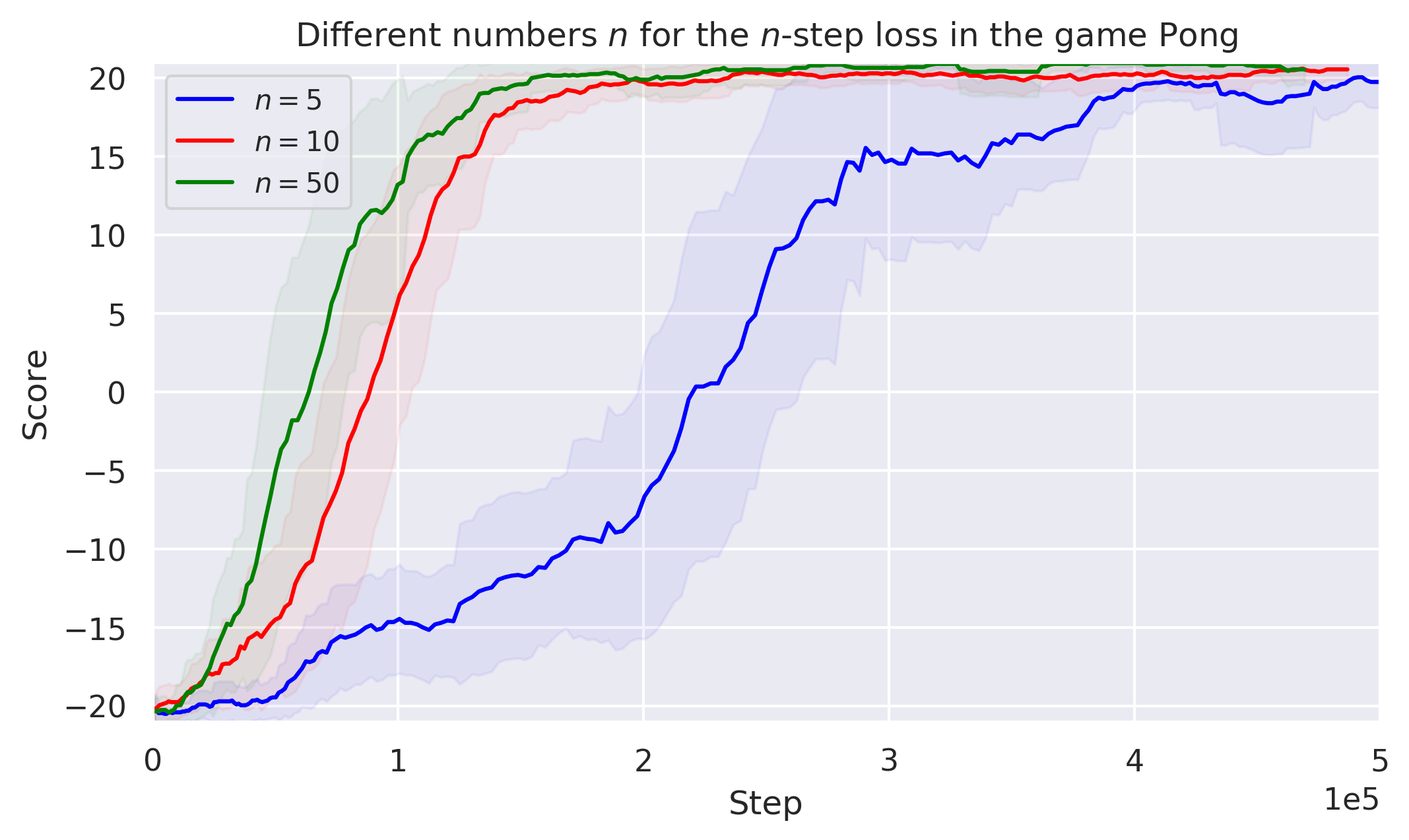

By using n_step = 50 instead of n_step = 10 as the number of steps considered for the n-step loss, you can speed up the process even further and get good results after fewer than 100,000 training steps or about 10 minutes (see the experiment in the next section).

Feel free to experiment with all the other parameters and games by changing them in the respective file.

With the first experiment, we try to show how the use of multi-step losses can speed up the training process in the game Pong.

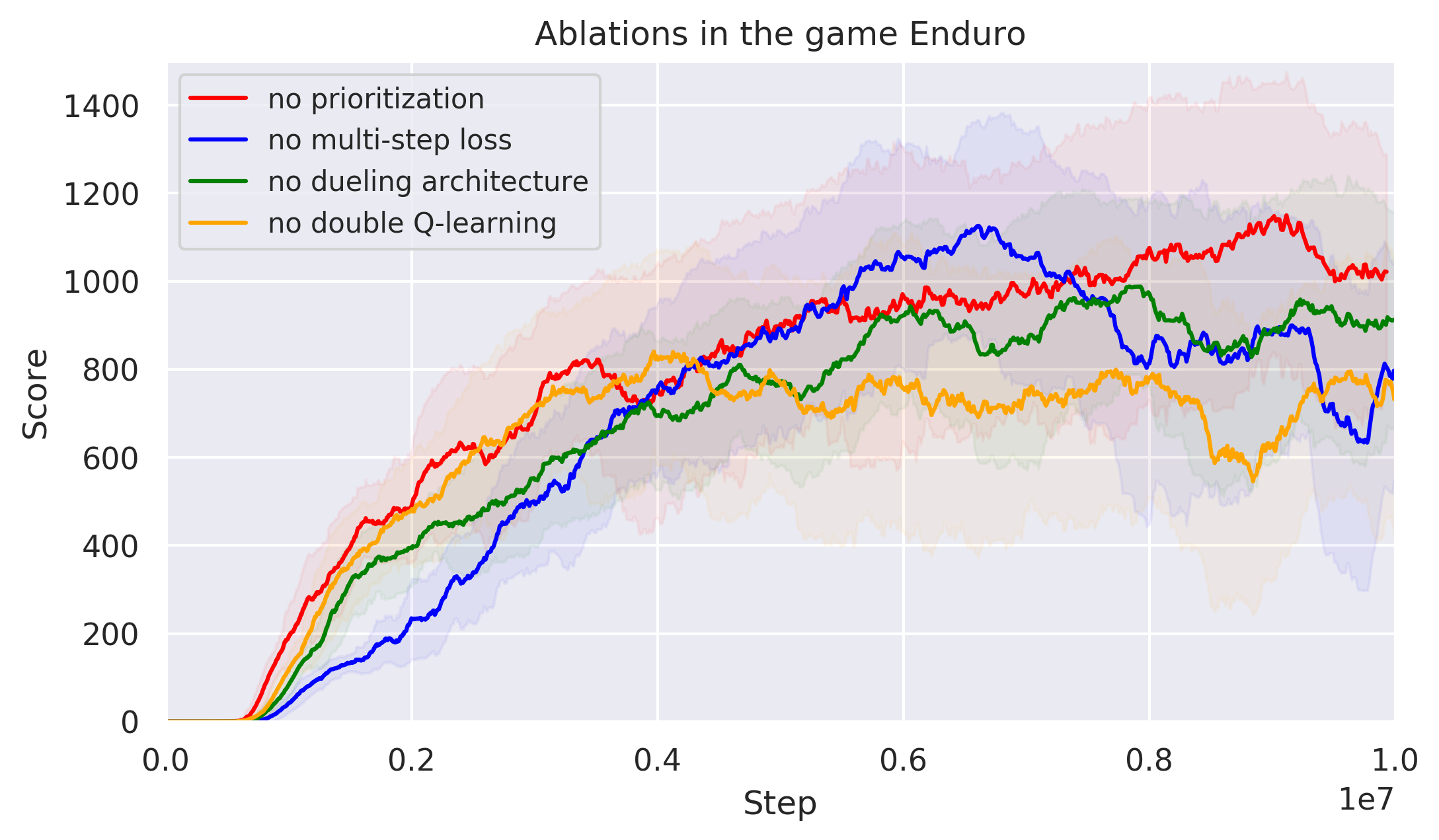
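
The multi-step loss replaces the one-step bootstrap target with a target built from the next n rewards, so reward information propagates backwards faster. The following is a minimal sketch of such a target, assuming the standard discounted n-step return. The function name and the default discount factor are illustrative assumptions, not values taken from the experiment files.

```python
def n_step_target(rewards, bootstrap_value, gamma=0.99):
    """Discounted n-step target.

    rewards:         the n rewards r_t, ..., r_{t+n-1} observed after state s_t
    bootstrap_value: estimate of max_a Q(s_{t+n}, a); pass 0.0 if the episode
                     terminated within these n steps
    gamma:           discount factor (assumed default)
    """
    target = bootstrap_value
    # Fold the rewards in from the last step to the first:
    # r_t + gamma * (r_{t+1} + gamma * (... + gamma * bootstrap_value))
    for reward in reversed(rewards):
        target = reward + gamma * target
    return target


if __name__ == "__main__":
    # Three-step target with an exactly representable discount factor.
    print(n_step_target([1.0, 0.0, 2.0], bootstrap_value=10.0, gamma=0.5))
```

With a single reward in the list this reduces to the usual one-step Q-learning target, which is why the same routine can serve both losses.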

In the next experiment we investigate the influence of the different components of n-step Prioritized Dueling Double Deep Q-Learning using the example of the game Enduro. We will do this by leaving out exactly one of the components and keeping all other parameters unchanged.

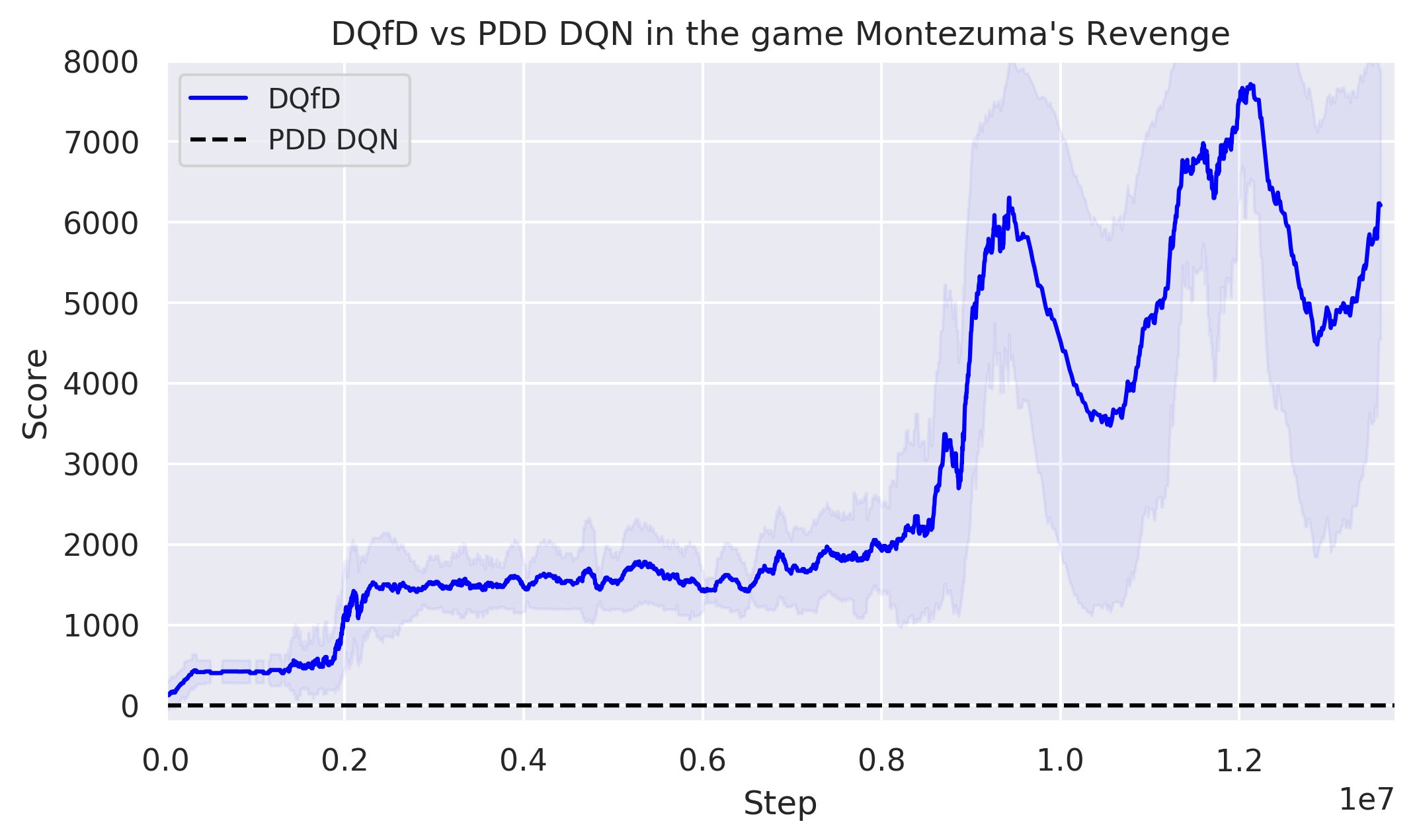

Due to very sparse rewards and the need for long-term planning, Montezuma's Revenge is known to be one of the most difficult Atari 2600 games for deep reinforcement learning agents, and most of them fail at it. The use of human demonstrations might help to overcome this issue.

Note:

- The figures show the number of steps (i.e. the number of decisions made by the agent) on the x-axis and the scores achieved during the training process on the y-axis. The learning curves are smoothed using a moving average over intervals of 50 episodes, and the shaded areas correspond to the standard deviation within these intervals.

- The learning curves were produced using the parameters specified in the files pong_standard_experiment.py, enduro_standard_experiment.py and montezuma_demo_experiment.py, except for the ones that were varied in the experiments (such as n_step in the first experiment).
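
The smoothing described in the first note can be sketched as follows. This is an illustration only: it assumes a trailing window and the population standard deviation, and the function name is hypothetical. The plotting code used for the figures may differ in both respects.

```python
from statistics import mean, pstdev


def smooth(scores, window=50):
    """Return (means, stds) over a trailing window of up to `window` episodes."""
    means, stds = [], []
    for i in range(len(scores)):
        # Early episodes use a shorter window until `window` scores exist.
        chunk = scores[max(0, i - window + 1): i + 1]
        means.append(mean(chunk))
        stds.append(pstdev(chunk))
    return means, stds


if __name__ == "__main__":
    episode_scores = [-21, -20, -18, -15, -9, 2, 11, 17, 20, 21]
    means, stds = smooth(episode_scores, window=3)
    for m, s in zip(means, stds):
        # The shaded band in a plot would span m - s to m + s.
        print(round(m, 2), round(s, 2))
```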

- binary sum tree for fast proportional sampling

- Cython implementation of PER for memory efficient and fast storage and sampling of experiences

- DQN implementation (in TensorFlow 2 syntax) with an optional dueling network architecture and the possibility to use a combination of the following losses:

  - single-step Q-Learning loss

  - multi-step Q-Learning loss

  - large margin classification loss (cf. Boosted Bellman Residual Minimization Handling Expert Demonstrations (Piot, Geist, Pietquin 2014))

  - L2-regularization loss

- Q-learning agent including the learning algorithm

- logger for the documentation of the learning process

- environment preprocessing

- loading and preprocessing of human demonstration data using the Atari-HEAD data set (cf. Atari-HEAD: Atari Human Eye-Tracking and Demonstration Dataset (Zhang et al. 2019)) as resource

- function to use a pretrained model as demonstrator

- agent wrapper

- more advanced exploration strategies

- argparse function for command-line parameter selection

- random seed control for better reproducibility

- agent evaluation as suggested in The Arcade Learning Environment: An Evaluation Platform for General Agents (Bellemare et al. 2013) for better comparability
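
To illustrate the binary sum tree mentioned in the list above: each leaf stores the priority of one experience and each inner node stores the sum of its children, so both updating a priority and drawing a sample proportional to priority take O(log n) steps. The following pure-Python sketch shows the idea only. The class and method names are hypothetical, the capacity is assumed to be a power of two, and the repository's Cython implementation will differ in layout and detail.

```python
import random


class SumTree:
    """Binary sum tree in a flat list; leaves hold priorities."""

    def __init__(self, capacity):
        # Assumption: capacity is a power of two, so all leaves share one depth.
        self.capacity = capacity
        # Index 0 is unused. Node i has children 2*i and 2*i + 1.
        # Leaves occupy indices capacity .. 2*capacity - 1.
        self.nodes = [0.0] * (2 * capacity)

    def update(self, index, priority):
        """Set the priority of leaf `index` and refresh the sums above it."""
        i = index + self.capacity
        self.nodes[i] = priority
        i //= 2
        while i >= 1:
            self.nodes[i] = self.nodes[2 * i] + self.nodes[2 * i + 1]
            i //= 2

    def total(self):
        """Sum of all priorities (stored in the root)."""
        return self.nodes[1]

    def find(self, value):
        """Return the leaf whose cumulative priority interval contains `value`."""
        i = 1
        while i < self.capacity:
            left = 2 * i
            if value < self.nodes[left]:
                i = left
            else:
                # Skip the left subtree and search the right one.
                value -= self.nodes[left]
                i = left + 1
        return i - self.capacity

    def sample(self):
        """Draw a leaf index with probability proportional to its priority."""
        return self.find(random.random() * self.total())


if __name__ == "__main__":
    tree = SumTree(4)
    for idx, priority in enumerate([1.0, 2.0, 3.0, 4.0]):
        tree.update(idx, priority)
    counts = [0, 0, 0, 0]
    for _ in range(10000):
        counts[tree.sample()] += 1
    print(tree.total(), counts)  # counts should be roughly in ratio 1:2:3:4
```

A flat array avoids per-node objects and pointer chasing, which is one reason this layout is a common choice for replay buffers with many entries.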

This project is licensed under the terms of the MIT license.

Copyright (c) 2020 Felix Kerkhoff