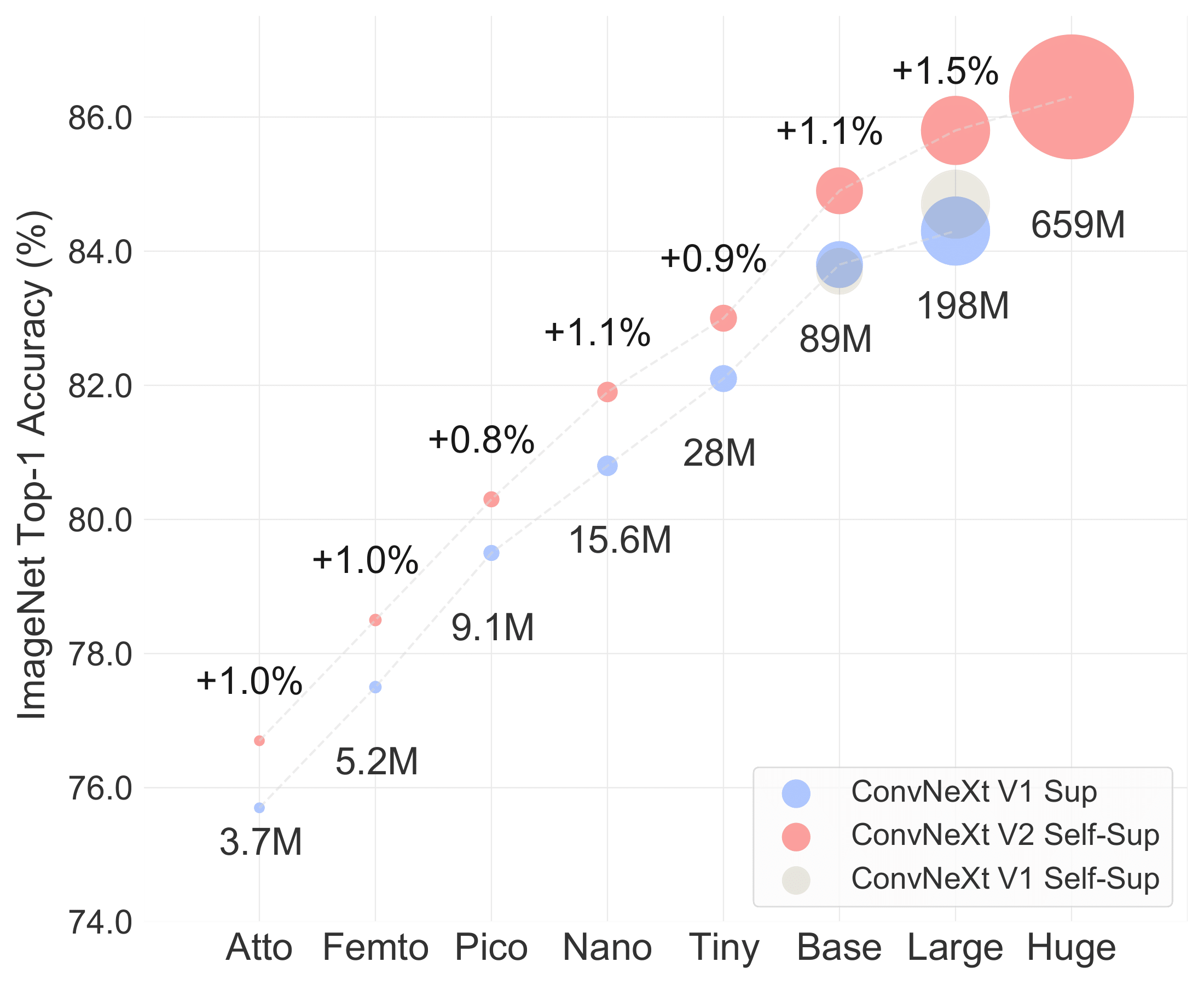

This repo contains the PyTorch version of 8 model definitions (Atto, Femto, Pico, Nano, Tiny, Base, Large, Huge), pre-training/fine-tuning code and pre-trained weights (converted from JAX weights trained on TPU) for our ConvNeXt V2 paper.

ConvNeXt V2: Co-designing and Scaling ConvNets with Masked Autoencoders

Sanghyun Woo, Shoubhik Debnath, Ronghang Hu, Xinlei Chen, Zhuang Liu, In So Kweon and Saining Xie

KAIST, Meta AI and New York University

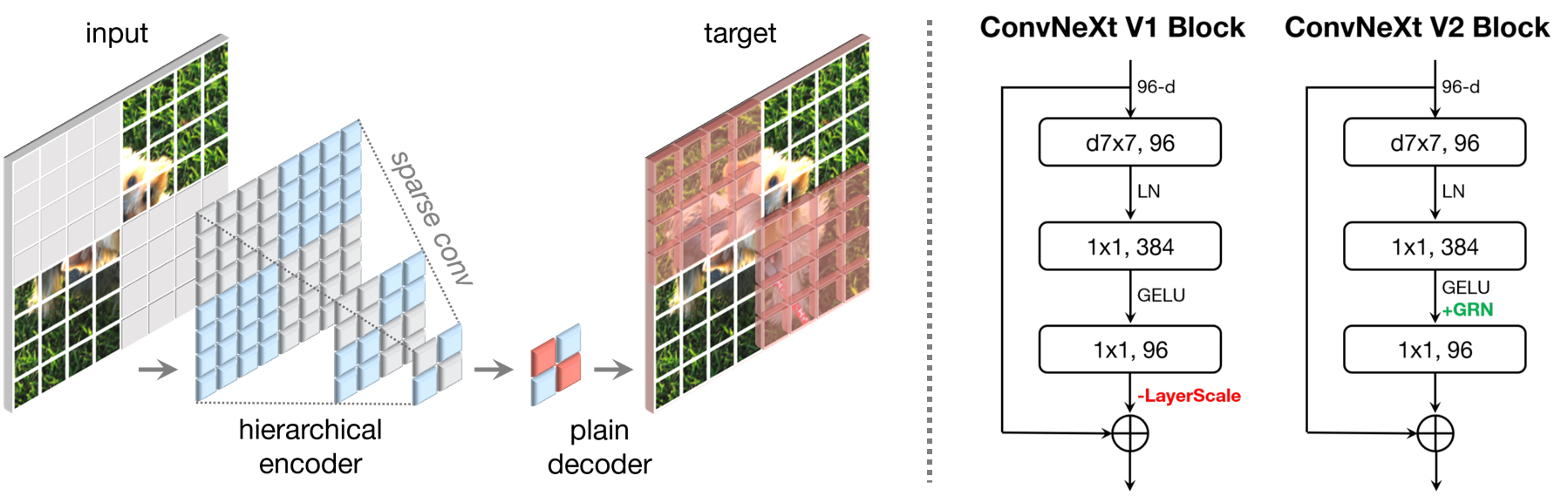

We propose a fully convolutional masked autoencoder framework (FCMAE) and a new Global Response Normalization (GRN) layer that can be added to the ConvNeXt architecture to enhance inter-channel feature competition. This co-design of self-supervised learning techniques and architectural improvement results in a new model family called ConvNeXt V2, which significantly improves the performance of pure ConvNets on various recognition benchmarks. We also provide pre-trained ConvNeXt V2 models of various sizes.
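The GRN computation can be illustrated with a minimal pure-Python sketch. It assumes the formulation described in the paper. First, each channel is aggregated with an L2 norm over all spatial positions. Each channel's norm is then divided by the mean norm across channels. The result rescales the input, together with learnable per-channel `gamma`/`beta` terms and a residual connection. The function name `grn`, the flattened (positions × channels) list layout and `eps=1e-6` are illustrative assumptions. This is not the repository's PyTorch module.

```python
import math
from typing import List

Matrix = List[List[float]]  # rows: spatial positions (H*W flattened), cols: channels


def grn(x: Matrix, gamma: List[float], beta: List[float], eps: float = 1e-6) -> Matrix:
    """Sketch of Global Response Normalization for a single image (assumed formulation)."""
    num_channels = len(x[0])
    # 1) Global aggregation: L2 norm of each channel over all spatial positions.
    g = [math.sqrt(sum(row[c] ** 2 for row in x)) for c in range(num_channels)]
    # 2) Normalization: each channel's norm relative to the mean norm across channels.
    mean_g = sum(g) / num_channels
    n = [gc / (mean_g + eps) for gc in g]
    # 3) Calibration: rescale the input, add learnable affine terms and a residual.
    return [
        [gamma[c] * (row[c] * n[c]) + beta[c] + row[c] for c in range(num_channels)]
        for row in x
    ]


# Two spatial positions, two channels; channel 1 is all zeros.
x = [[3.0, 0.0], [4.0, 0.0]]
print(grn(x, gamma=[0.0, 0.0], beta=[0.0, 0.0]))  # → [[3.0, 0.0], [4.0, 0.0]] (identity)
print(grn(x, gamma=[1.0, 1.0], beta=[0.0, 0.0]))  # approximately [[9.0, 0.0], [12.0, 0.0]]
```

With `gamma` and `beta` at zero, the residual path makes the layer an identity. The sketch initializes them that way in the example, but whether the real module does so is an assumption here. Channels with a larger global response than average are amplified relative to the others, which is the inter-channel competition mentioned above.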

ImageNet-1K FCMAE pre-trained weights (self-supervised):

| name | resolution | #params | model |
|---|---|---|---|
| ConvNeXt V2-A | 224x224 | 3.7M | model |
| ConvNeXt V2-F | 224x224 | 5.2M | model |
| ConvNeXt V2-P | 224x224 | 9.1M | model |
| ConvNeXt V2-N | 224x224 | 15.6M | model |
| ConvNeXt V2-T | 224x224 | 28.6M | model |
| ConvNeXt V2-B | 224x224 | 89M | model |
| ConvNeXt V2-L | 224x224 | 198M | model |
| ConvNeXt V2-H | 224x224 | 660M | model |

ImageNet-1K fine-tuned models:

| name | resolution | acc@1 | #params | FLOPs | model |
|---|---|---|---|---|---|
| ConvNeXt V2-A | 224x224 | 76.7 | 3.7M | 0.55G | model |
| ConvNeXt V2-F | 224x224 | 78.5 | 5.2M | 0.78G | model |
| ConvNeXt V2-P | 224x224 | 80.3 | 9.1M | 1.37G | model |
| ConvNeXt V2-N | 224x224 | 81.9 | 15.6M | 2.45G | model |
| ConvNeXt V2-T | 224x224 | 83.0 | 28.6M | 4.47G | model |
| ConvNeXt V2-B | 224x224 | 84.9 | 89M | 15.4G | model |
| ConvNeXt V2-L | 224x224 | 85.8 | 198M | 34.4G | model |
| ConvNeXt V2-H | 224x224 | 86.3 | 660M | 115G | model |

ImageNet-22K fine-tuned models:

| name | resolution | acc@1 | #params | FLOPs | model |
|---|---|---|---|---|---|
| ConvNeXt V2-N | 224x224 | 82.1 | 15.6M | 2.45G | model |
| ConvNeXt V2-N | 384x384 | 83.4 | 15.6M | 7.21G | model |
| ConvNeXt V2-T | 224x224 | 83.9 | 28.6M | 4.47G | model |
| ConvNeXt V2-T | 384x384 | 85.1 | 28.6M | 13.1G | model |
| ConvNeXt V2-B | 224x224 | 86.8 | 89M | 15.4G | model |
| ConvNeXt V2-B | 384x384 | 87.7 | 89M | 45.2G | model |
| ConvNeXt V2-L | 224x224 | 87.3 | 198M | 34.4G | model |
| ConvNeXt V2-L | 384x384 | 88.2 | 198M | 101.1G | model |
| ConvNeXt V2-H | 384x384 | 88.7 | 660M | 337.9G | model |
| ConvNeXt V2-H | 512x512 | 88.9 | 660M | 600.8G | model |
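Because the models are fully convolutional, FLOPs should grow roughly in proportion to the number of input pixels. The sketch below runs a quick consistency check on the table above. It scales each lower-resolution FLOPs value by the squared resolution ratio and compares the result with the listed higher-resolution value. All numbers are copied from the table. The helper name `predicted_gflops` and the 1% tolerance are illustrative choices, not part of the repository.

```python
# (model, low_res, low_res_gflops, high_res, listed_high_res_gflops), copied from the table above
ROWS = [
    ("ConvNeXt V2-N", 224, 2.45, 384, 7.21),
    ("ConvNeXt V2-T", 224, 4.47, 384, 13.1),
    ("ConvNeXt V2-B", 224, 15.4, 384, 45.2),
    ("ConvNeXt V2-L", 224, 34.4, 384, 101.1),
    ("ConvNeXt V2-H", 384, 337.9, 512, 600.8),
]


def predicted_gflops(gflops: float, res_from: int, res_to: int) -> float:
    """Scale FLOPs by the ratio of input pixel counts (square inputs assumed)."""
    return gflops * (res_to / res_from) ** 2


for name, r0, f0, r1, listed in ROWS:
    pred = predicted_gflops(f0, r0, r1)
    rel_err = abs(pred - listed) / listed
    print(f"{name}: {r0}->{r1}, predicted {pred:.1f}G, listed {listed}G, rel. error {rel_err:.2%}")
```

Each prediction falls within 1% of the listed value. The small residual differences are consistent with rounding, and with parts of the network whose cost does not scale with resolution, such as the classifier head.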

Please check INSTALL.md for installation instructions.

We provide example evaluation commands for ConvNeXt V2-Base:

Single-GPU

```bash
python main_finetune.py \
  --model convnextv2_base \
  --eval true \
  --resume /path/to/checkpoint \
  --input_size 224 \
  --data_path /path/to/imagenet-1k
```

Multi-GPU

```bash
python -m torch.distributed.launch --nproc_per_node=8 main_finetune.py \
  --model convnextv2_base \
  --eval true \
  --resume /path/to/checkpoint \
  --input_size 224 \
  --data_path /path/to/imagenet-1k
```

- For evaluating other model variants, change `--model`, `--resume` and `--input_size` accordingly. URLs for the pre-trained models can be found in the result tables.
- Setting a model-specific `--drop_path` is not strictly required in evaluation, because the `DropPath` module in timm is an identity mapping during evaluation regardless of the drop rate. It is, however, required in training. See TRAINING.md or our paper (appendix) for the values used for different models.
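The following minimal pure-Python sketch shows why the drop-path rate does not matter at evaluation time. It implements per-sample stochastic depth in the spirit of timm's `drop_path`. During training, each sample's residual-branch output is either zeroed or rescaled by `1 / keep_prob`. In evaluation, the input is returned unchanged. The function below and its list-based `Batch` type are hypothetical stand-ins, not timm's implementation.

```python
import random
from typing import List, Optional

Batch = List[List[float]]  # one inner list per sample (a flattened residual-branch output)


def drop_path(x: Batch, drop_prob: float, training: bool,
              rng: Optional[random.Random] = None) -> Batch:
    """Per-sample stochastic depth (sketch). Identity when not training or drop_prob == 0."""
    if drop_prob == 0.0 or not training:
        return x  # evaluation: the drop rate has no effect on the output
    rng = rng or random.Random()
    keep_prob = 1.0 - drop_prob
    out = []
    for sample in x:
        if rng.random() < keep_prob:
            # Kept: rescale so the expected value matches the evaluation-time output.
            out.append([v / keep_prob for v in sample])
        else:
            # Dropped: this sample's whole residual branch is zeroed.
            out.append([0.0] * len(sample))
    return out


batch = [[1.0, 2.0], [3.0, 4.0]]
print(drop_path(batch, 0.3, training=False))  # → [[1.0, 2.0], [3.0, 4.0]]
print(drop_path(batch, 0.5, training=True, rng=random.Random(0)))  # random per-sample drops
```

Since the evaluation branch never reads `drop_prob`, any value passed at evaluation time produces the same output. During training, however, the rate changes both how often branches are dropped and how kept branches are rescaled.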

See TRAINING.md for pre-training and fine-tuning instructions.

This repository borrows from timm, ConvNeXt and MAE.

We thank Ross Wightman for the initial design of the small-compute ConvNeXt model variants and the associated training recipe. We also appreciate the helpful discussions and feedback provided by Kaiming He.

The majority of ConvNeXt-V2 is licensed under CC-BY-NC; however, portions of the project are available under separate license terms: Minkowski Engine is licensed under the MIT license. Please see the LICENSE file for more information.

If you find this repository helpful, please consider citing:

```bibtex
@article{Woo2023ConvNeXtV2,
  title={ConvNeXt V2: Co-designing and Scaling ConvNets with Masked Autoencoders},
  author={Sanghyun Woo and Shoubhik Debnath and Ronghang Hu and Xinlei Chen and Zhuang Liu and In So Kweon and Saining Xie},
  year={2023},
  journal={arXiv preprint arXiv:2301.00808}
}
```