This Google Maps Scraper is provided for educational and research purposes only. By using this Google Maps Scraper, you agree to comply with local and international laws regarding data scraping and privacy. The authors and contributors are not responsible for any misuse of this software. This tool should not be used to violate the rights of others, for unethical purposes, or to use data in an unauthorized or illegal manner.

We take the concerns of the Google Maps Scraper Project very seriously. For any concerns, please contact Chetan Jain at chetan@omkar.cloud. We will promptly reply to your emails.

- ✅ BOTASAURUS: The All-in-One Web Scraping Framework with Anti-Detection, Parallelization, Asynchronous, and Caching Superpowers.

Google Maps Scraper helps you find Business Profiles from Google Maps.

-

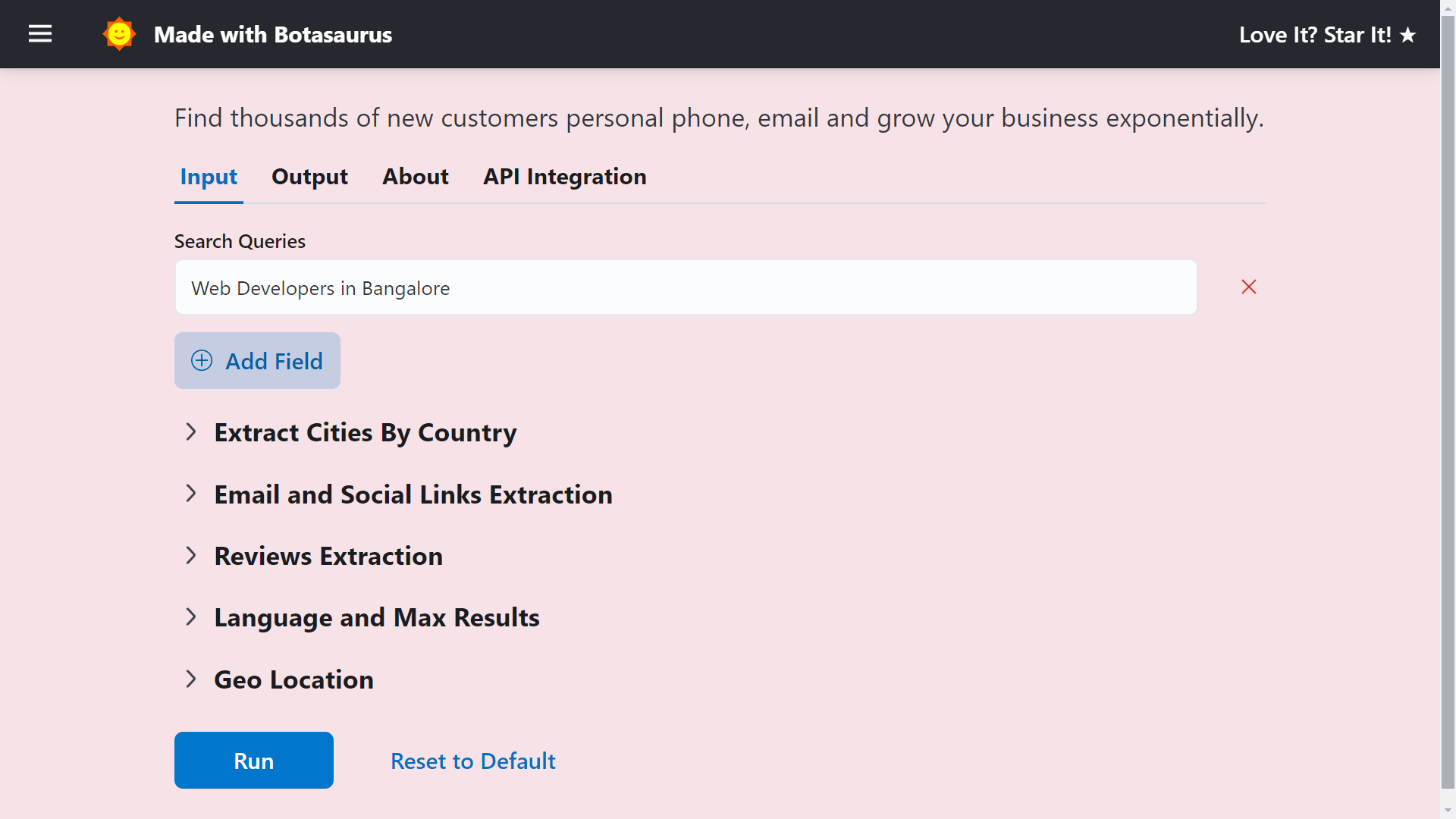

Easy-to-use, friendly dashboard.

-

Limitless scraping: Say sayonara to costly subscriptions or expensive pay-per-result fees.

-

Highly scalable, capable of running on Kubernetes, Docker, and servers.

-

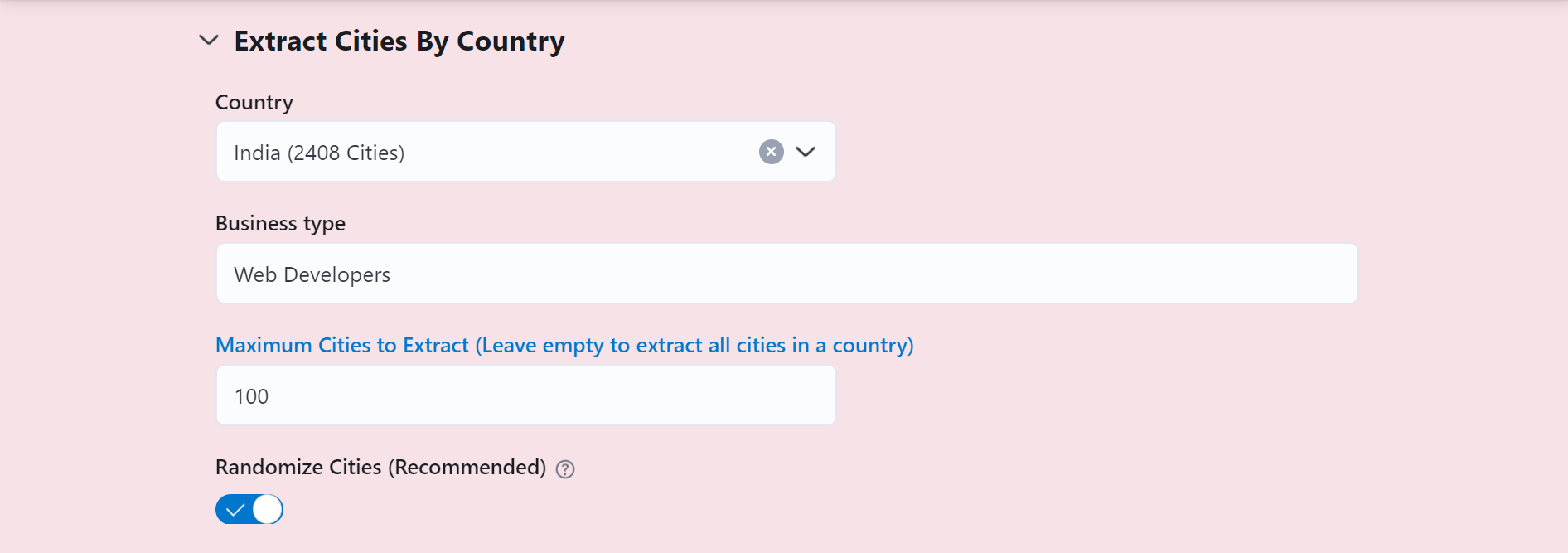

Scrape data for a specific type of business across all cities in a country.

-

Get the exact results you need by easily sorting, filtering, and exporting data as CSV, Excel, or JSON files.

-

Scrape reviews while ensuring the privacy of reviewers is maintained.

In the next 5 minutes, you'll extract 120 Search Results from Google Maps.

To use this tool, you'll need:

- Node.js version 16 or later to run the UI Dashboard (please check your Node.js version by running `node -v`)

- Python for running the scraper
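The Node.js requirement above can also be checked from Python. The following is a minimal sketch, not part of the project: the helper names `parse_node_major` and `check_prerequisites` are hypothetical, and it assumes `node -v` prints a string such as `v18.17.0`.

```python
import re
import shutil
import subprocess


def parse_node_major(version_output: str) -> int:
    """Extract the major version from `node -v` output such as 'v18.17.0'."""
    match = re.match(r"v?(\d+)\.", version_output.strip())
    if match is None:
        raise ValueError(f"Unrecognized Node.js version string: {version_output!r}")
    return int(match.group(1))


def check_prerequisites(min_node_major: int = 16) -> list[str]:
    """Return a list of problems found; an empty list means Node.js looks fine."""
    problems = []
    node_path = shutil.which("node")  # None when node is not on PATH
    if node_path is None:
        problems.append("Node.js was not found on PATH.")
        return problems
    output = subprocess.run(
        [node_path, "-v"], capture_output=True, text=True, check=True
    ).stdout
    major = parse_node_major(output)
    if major < min_node_major:
        problems.append(f"Node.js {major} found, but {min_node_major}+ is required.")
    return problems
```

Calling `check_prerequisites()` and printing the returned list is enough to see whether the installed Node.js version is recent enough.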

Don't have Node.js or Python? No problem!

You can easily run this tool within Gitpod, a cloud-based development environment. We'll cover how to set that up later.

Let's get started by following these super simple steps:

1️⃣ Clone the Magic 🧙♀️:

    git clone https://github.com/omkarcloud/google-maps-scraper
    cd google-maps-scraper

2️⃣ Install Dependencies 📦:

    python -m pip install -r requirements.txt && python run.py install

3️⃣ Launch the UI Dashboard 🚀:

    python run.py

4️⃣ Open your browser and go to http://localhost:3000, then press the Run button to have 120 search results within 2 minutes. 😎

Note: If you don't have Node.js 16+ and Python installed, or you are facing errors, follow this Simple FAQ here, and you will have your search results in the next 5 minutes.

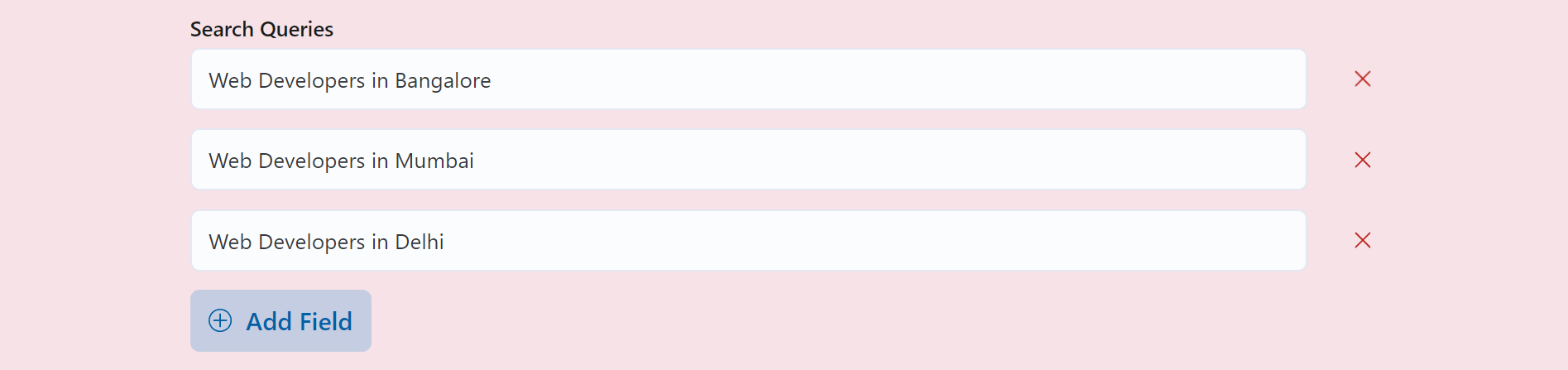

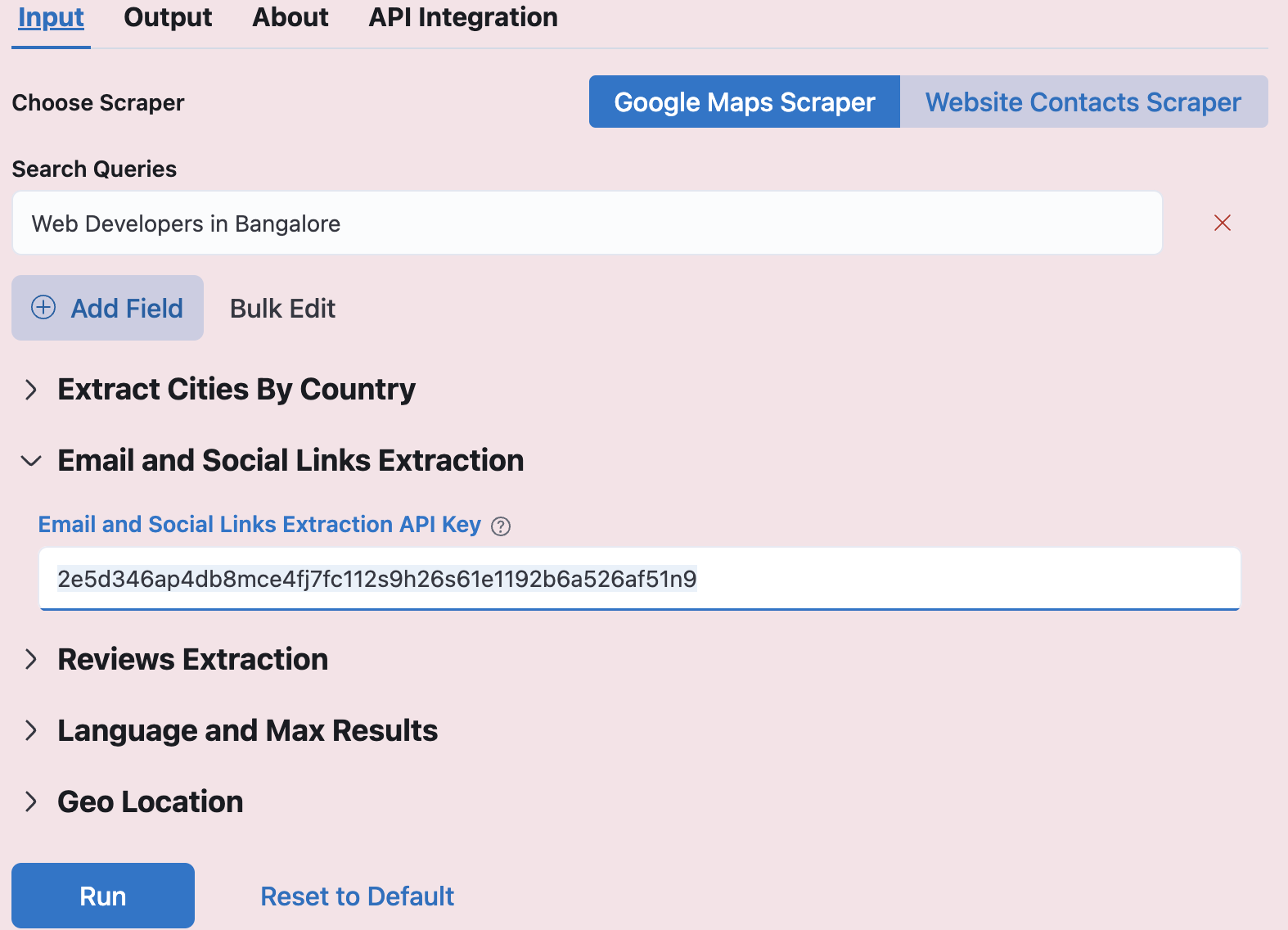

- Visit http://localhost:3000 and enter your search queries.

- Now, simply press the Run button.

Primarily, there are 3 pages in the UI Dashboard:

- Home Page ('/')

- Output Page ('/output')

- Results Page ('/output/1')

You can input your queries here and search by:

- Scrape data for a specific type of business across all cities in a country.

- Get Social Details of Profiles like Email, LinkedIn, Facebook, Twitter, etc.

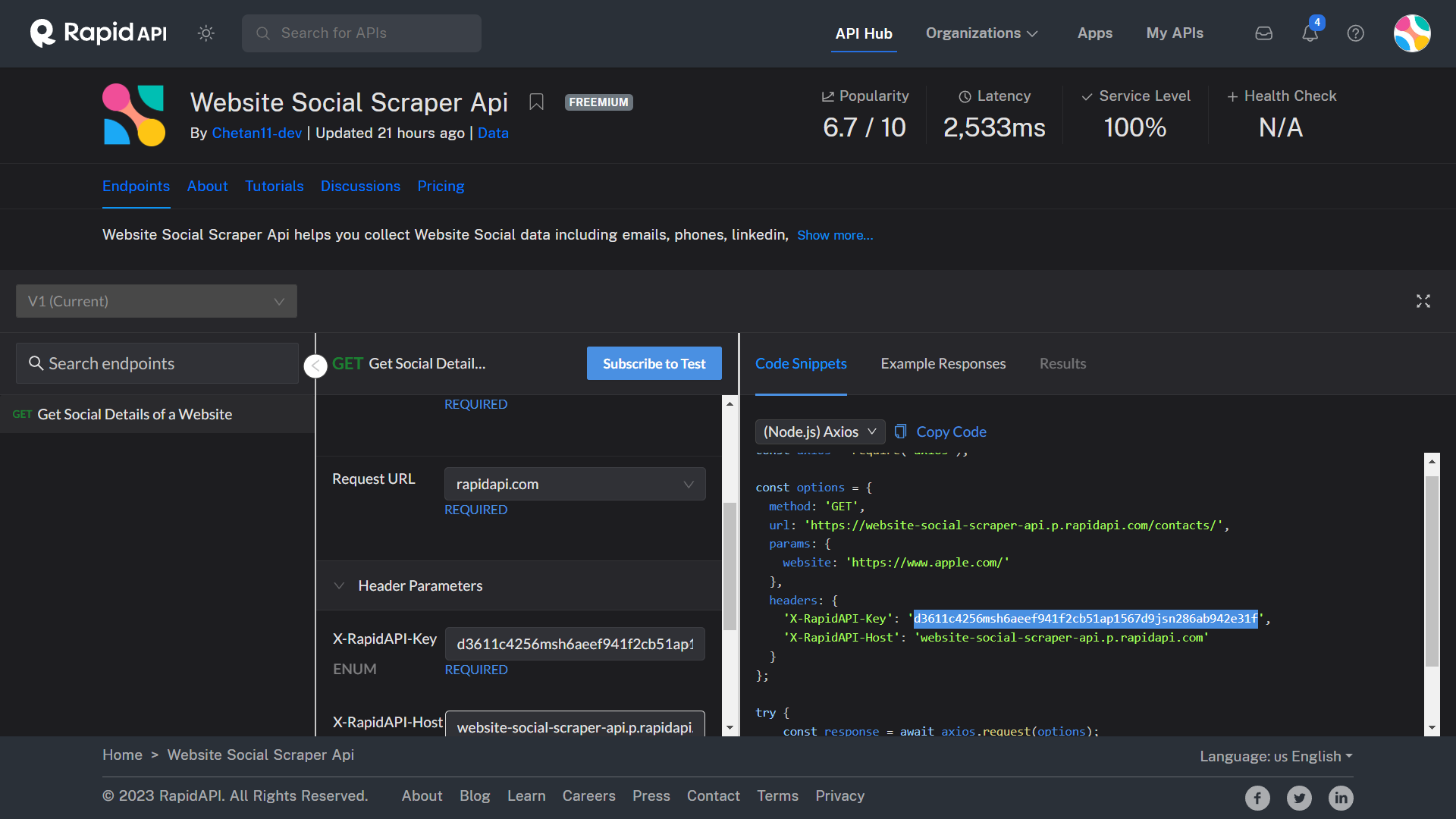

Extracting social details is a compute-intensive process that involves searching various directories and websites in parallel using proxies. To help with this process, we've created an API.

Kindly follow these steps to use the API and get the social details of the profiles:

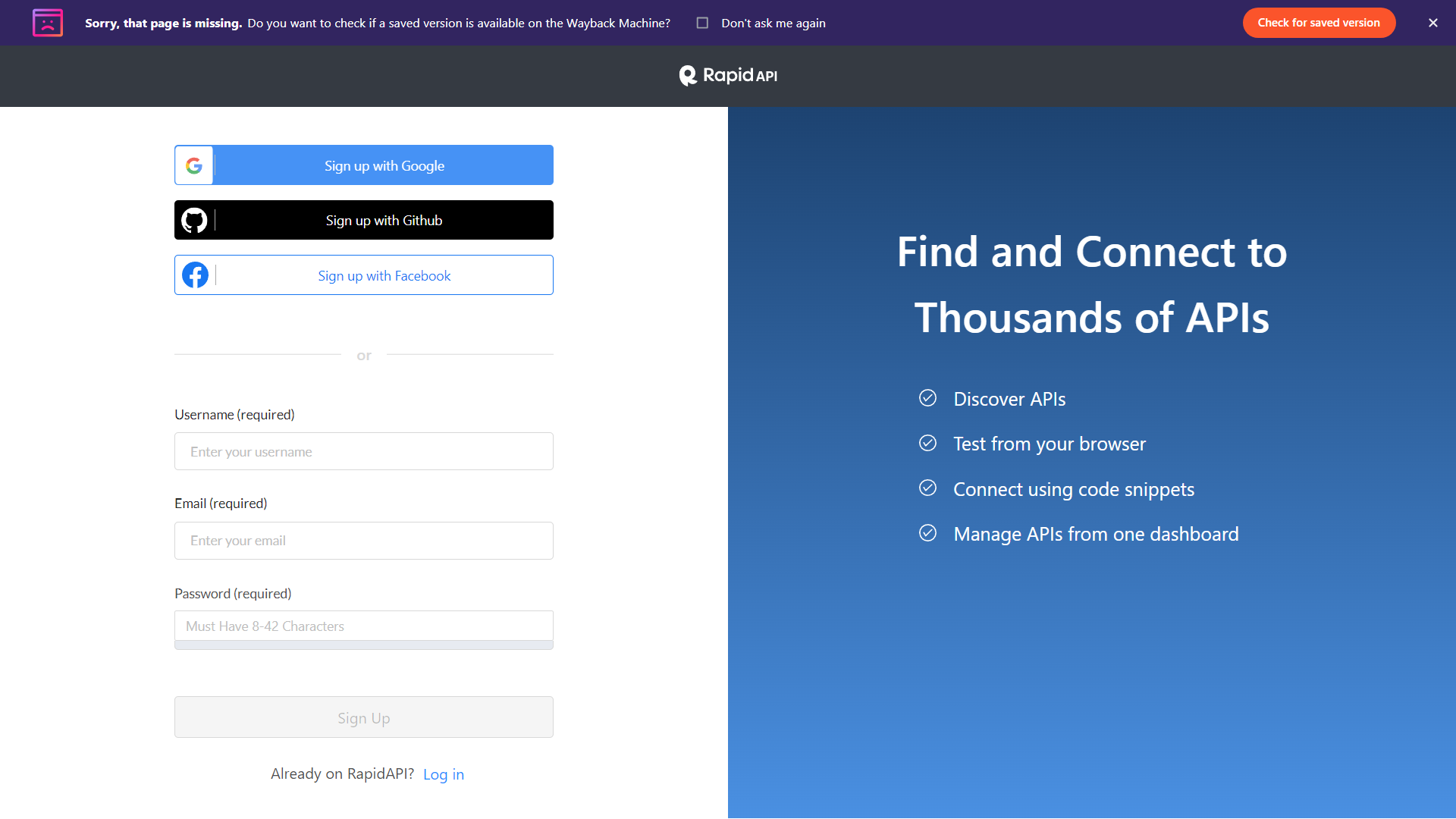

- Sign up on RapidAPI by visiting this link.

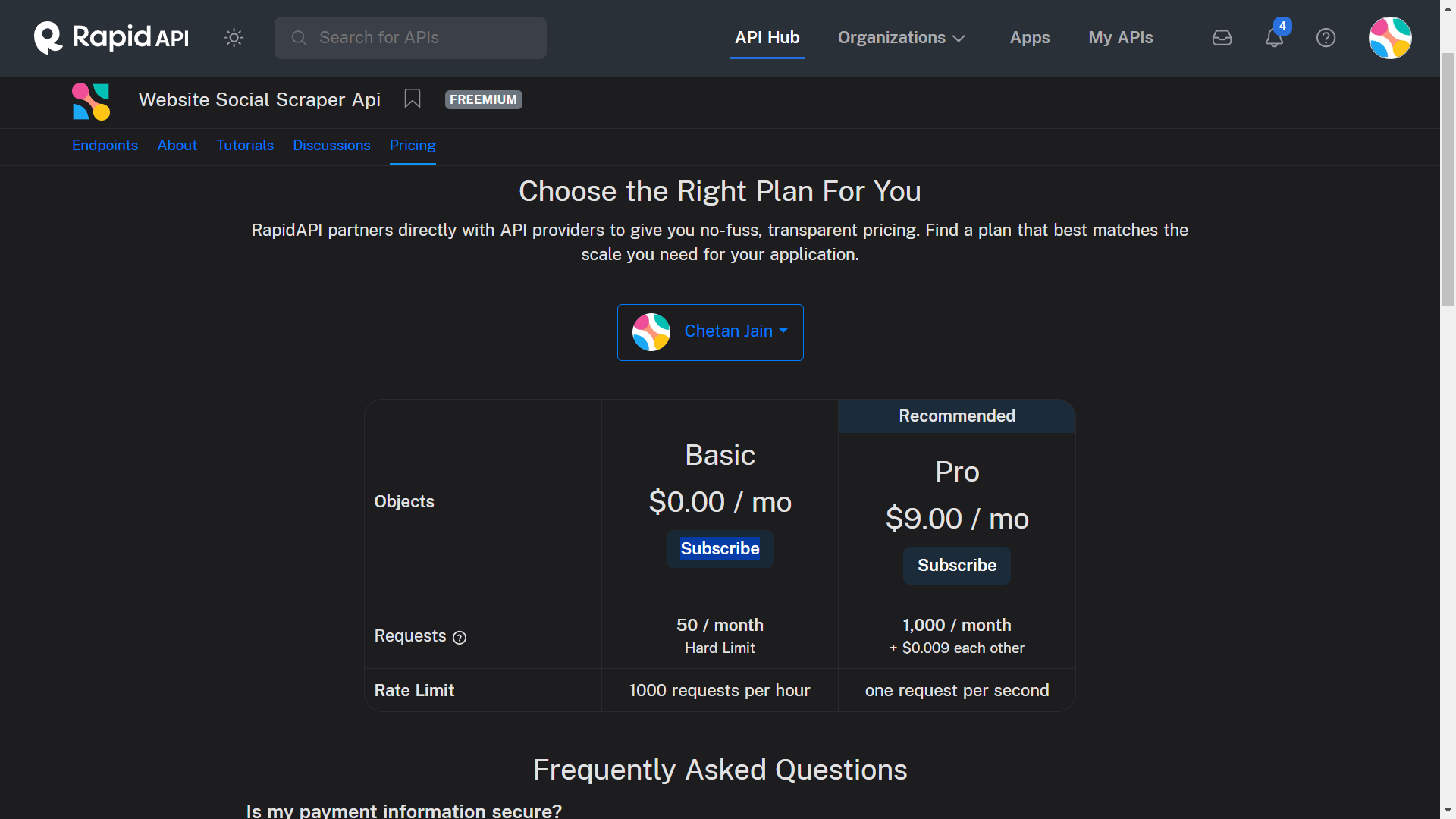

- Subscribe to the Free Plan by visiting this link.

The first 200 contact details are free to scrape with the API. After that, you can upgrade to the Pro Plan to scrape 1,000 contacts for $9. That is affordable: if you land just one customer, you could make hundreds of dollars, easily covering the investment.

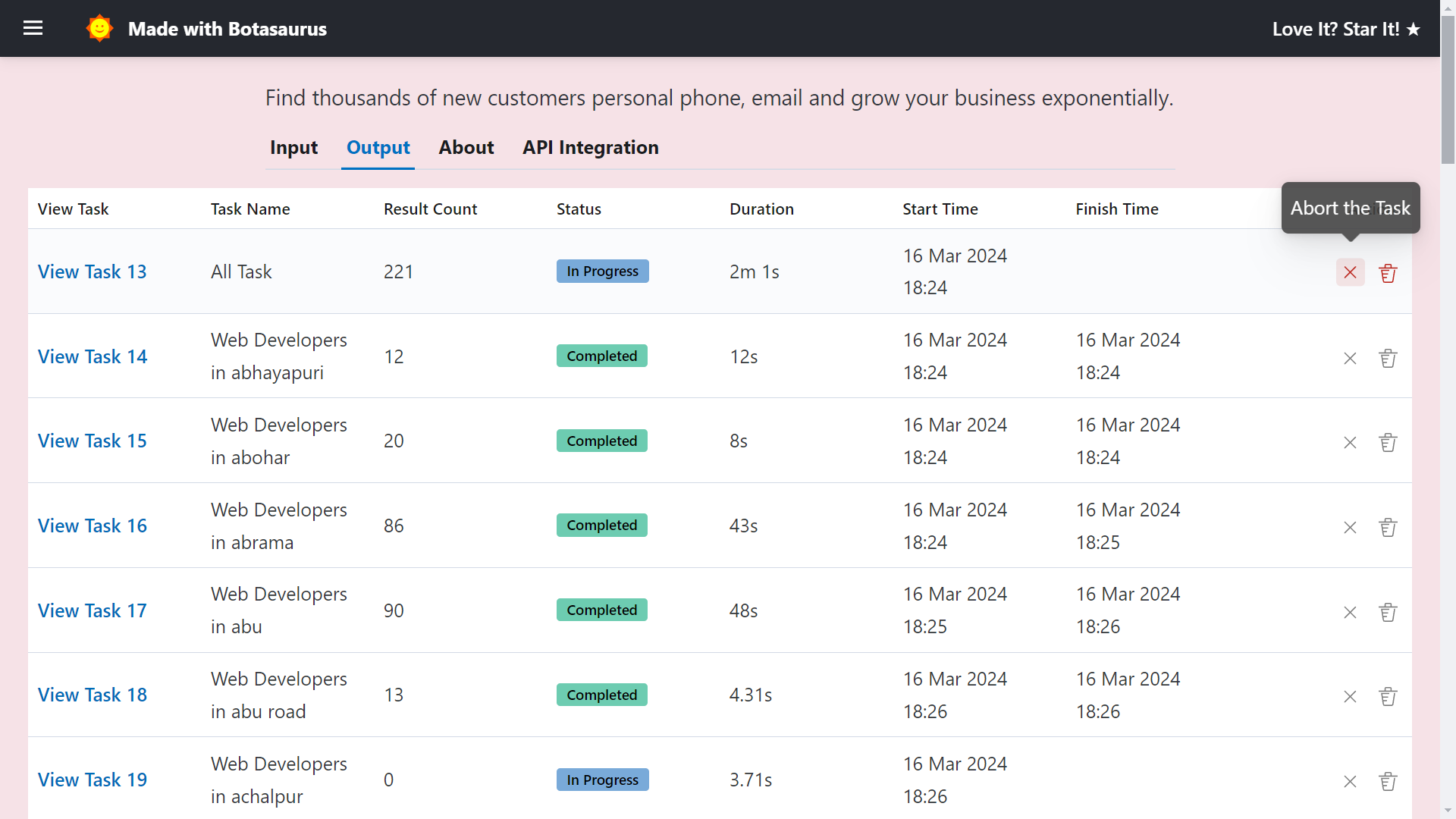

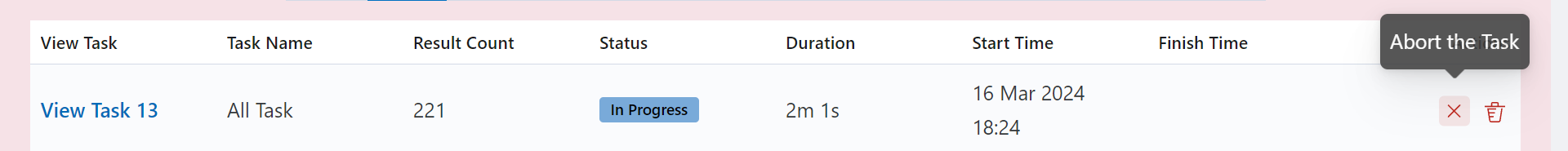

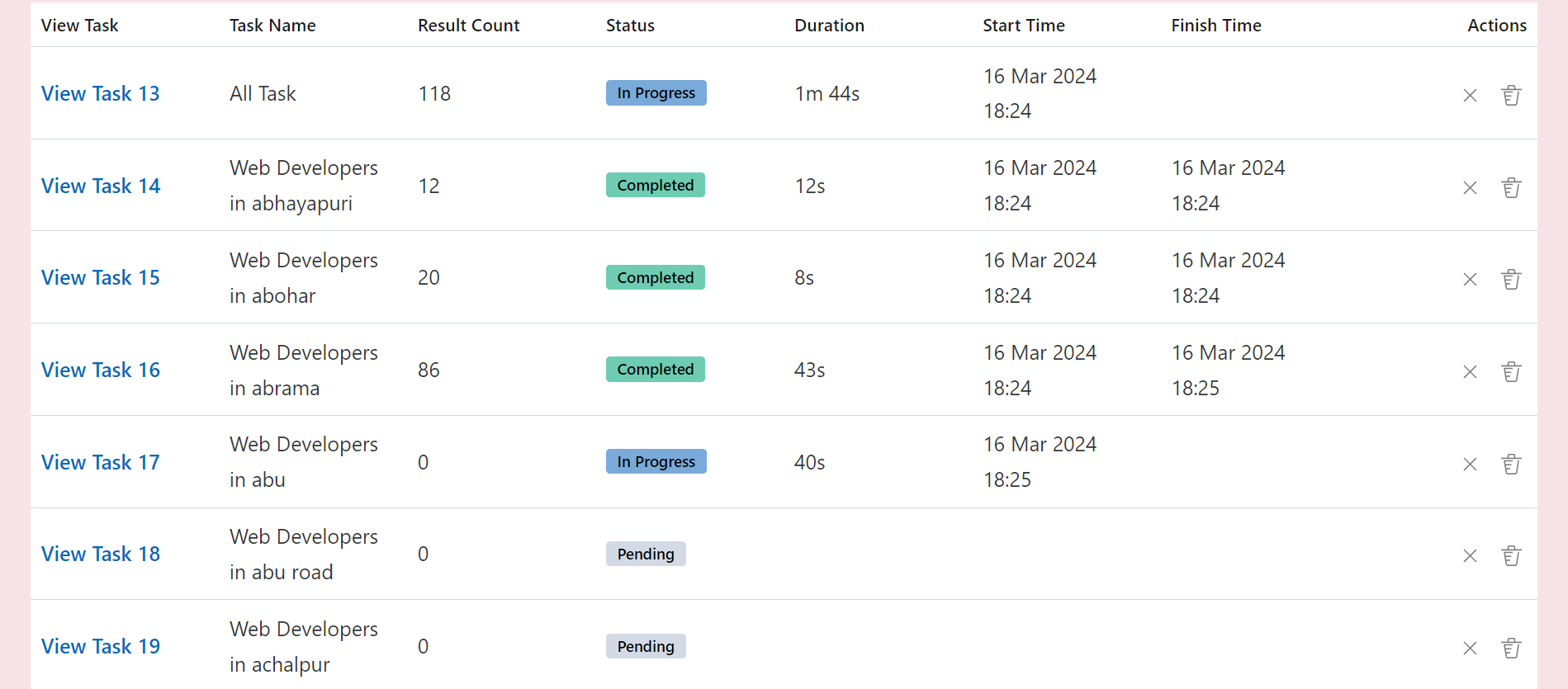

The Output page helps you manage your tasks. You can use it to:

- See tasks and their status (pending, in progress, or completed).

Additionally, whenever you run a query, a task named "All Task" will be created for it, which combines results from multiple queries.

For example, if you search for "Web Developers in Bangalore" and "Web Developers in Mumbai", the "All Task" will show you the combined results for both queries.
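The combining behaviour of "All Task" can be pictured with a small sketch. It is an illustration only, not the project's implementation: the function `combine_results` is hypothetical, the `place_id` field name is an assumption, and dropping duplicates that appear under more than one query is also an assumption.

```python
def combine_results(results_by_query: dict[str, list[dict]], key: str = "place_id") -> list[dict]:
    """Merge per-query result lists into one list, keeping first occurrences.

    Rows that share the same value for `key` are treated as the same business.
    Rows without that key are always kept.
    """
    combined = []
    seen = set()
    for rows in results_by_query.values():
        for row in rows:
            identifier = row.get(key)
            if identifier is not None:
                if identifier in seen:
                    continue  # already collected from an earlier query
                seen.add(identifier)
            combined.append(row)
    return combined


results = {
    "Web Developers in Bangalore": [
        {"place_id": "a", "name": "Alpha Web"},
        {"place_id": "b", "name": "Beta Dev"},
    ],
    "Web Developers in Mumbai": [
        {"place_id": "b", "name": "Beta Dev"},
        {"place_id": "c", "name": "Gamma Studio"},
    ],
}
all_task = combine_results(results)
```

Here `all_task` holds the listings from both queries in a single list, with the business that appears in both queries kept once.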

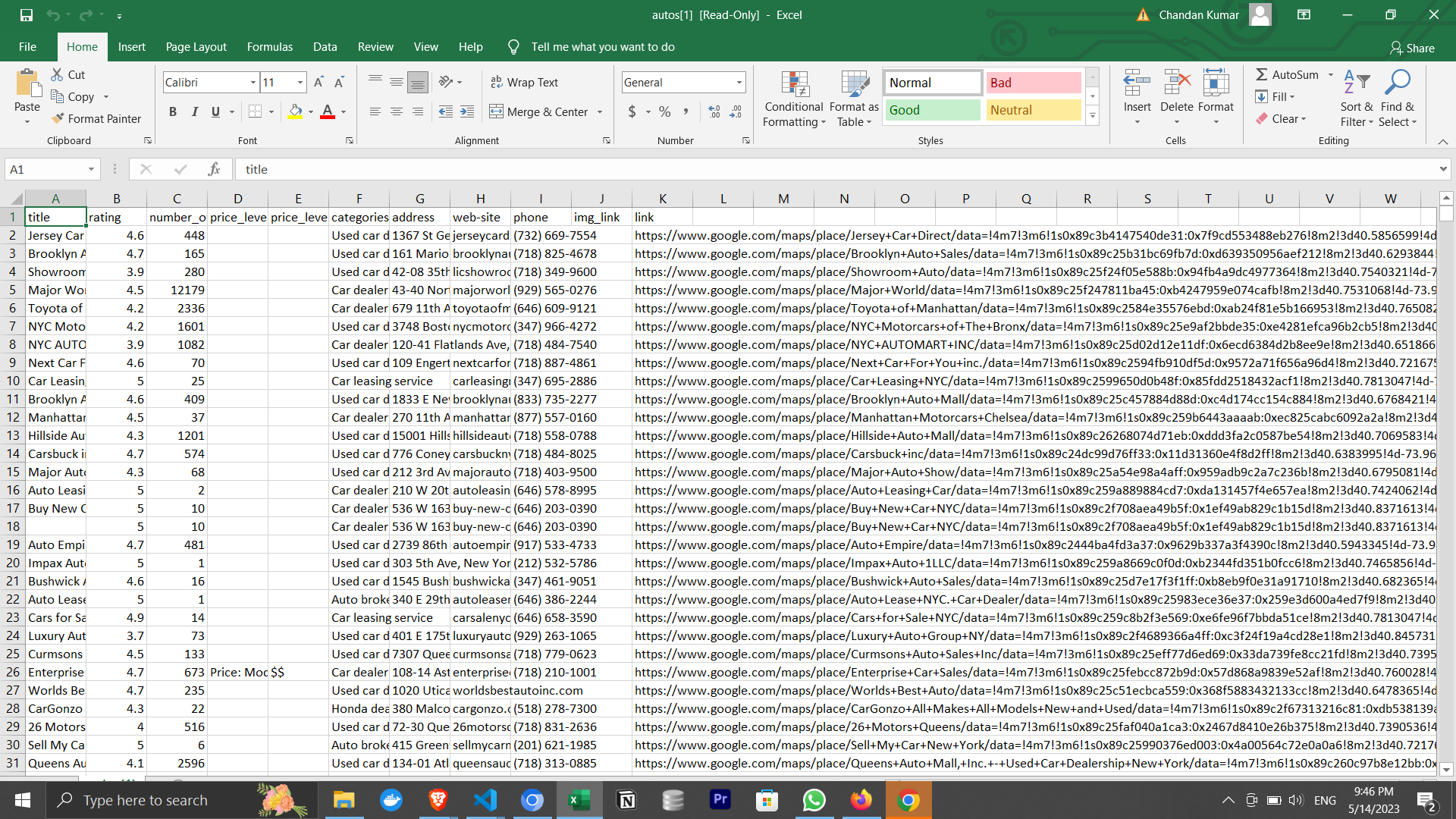

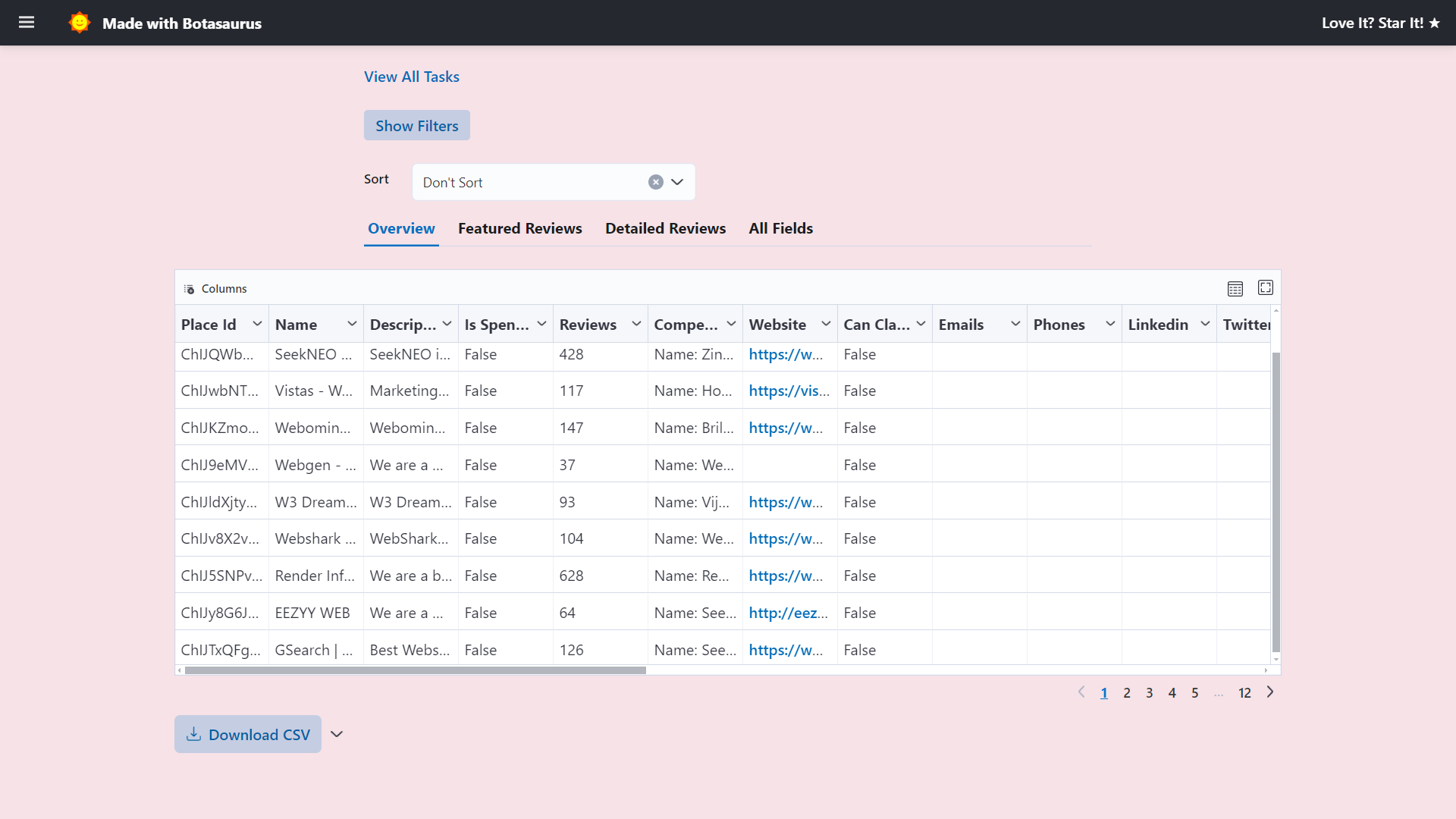

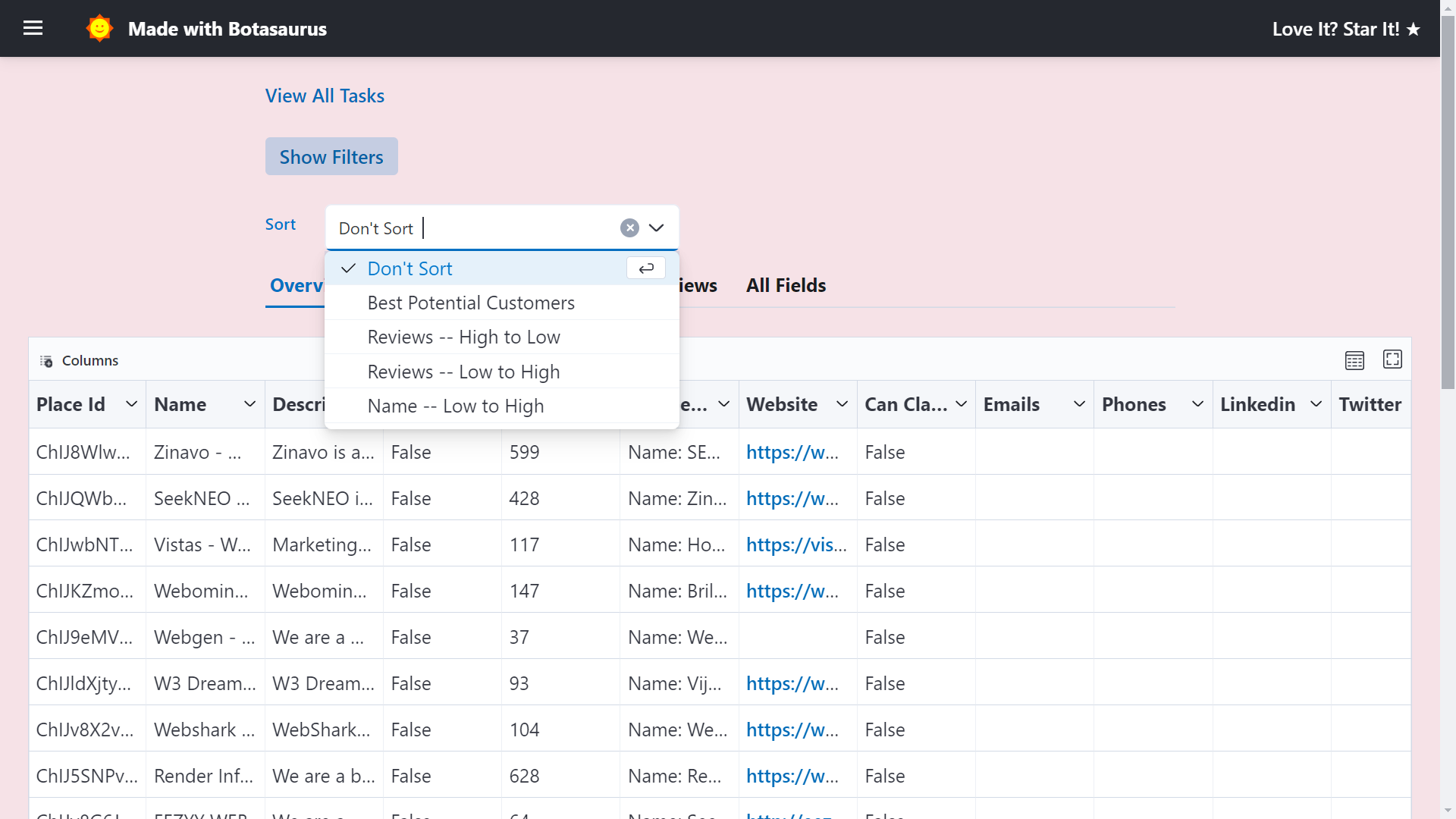

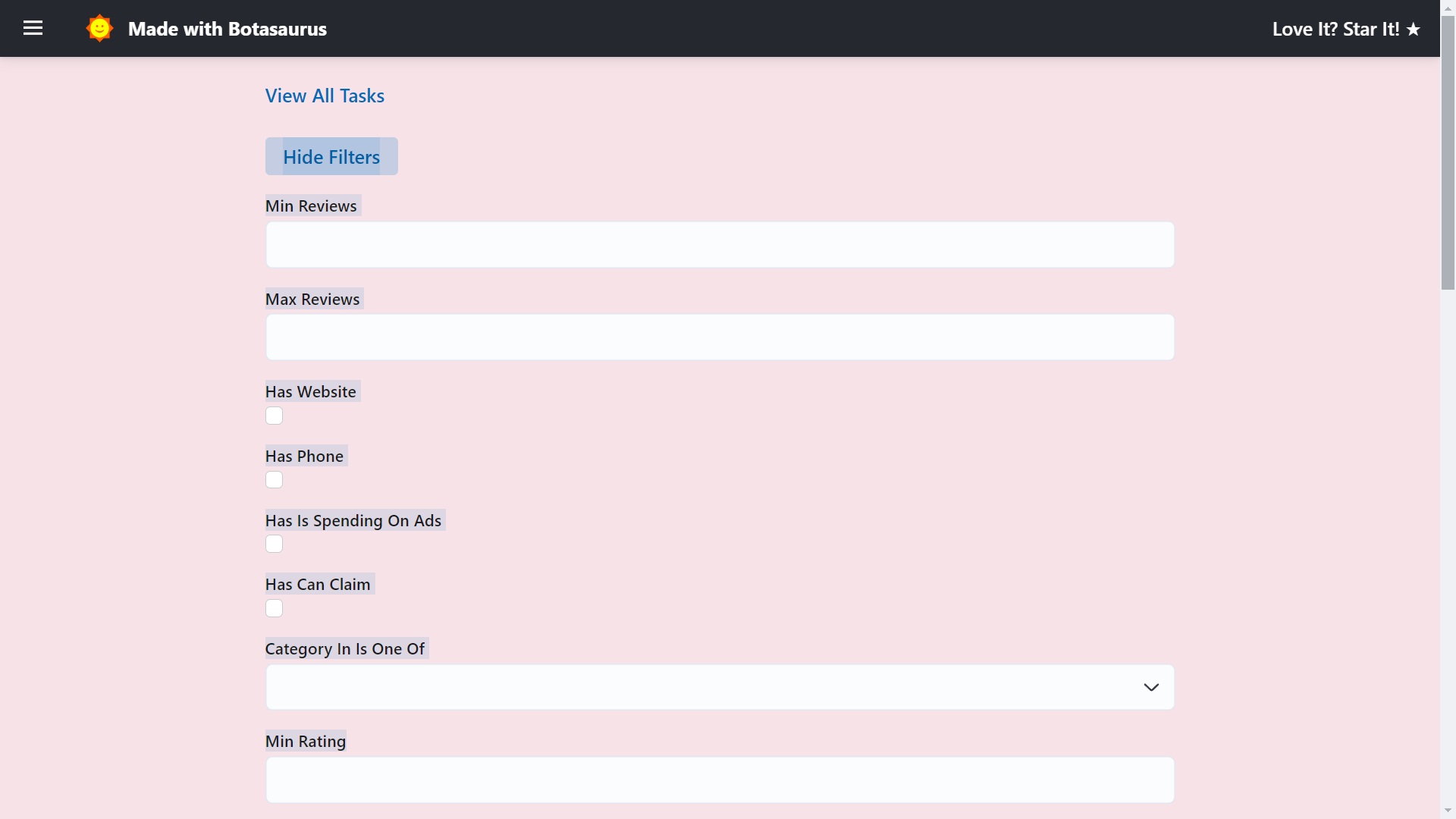

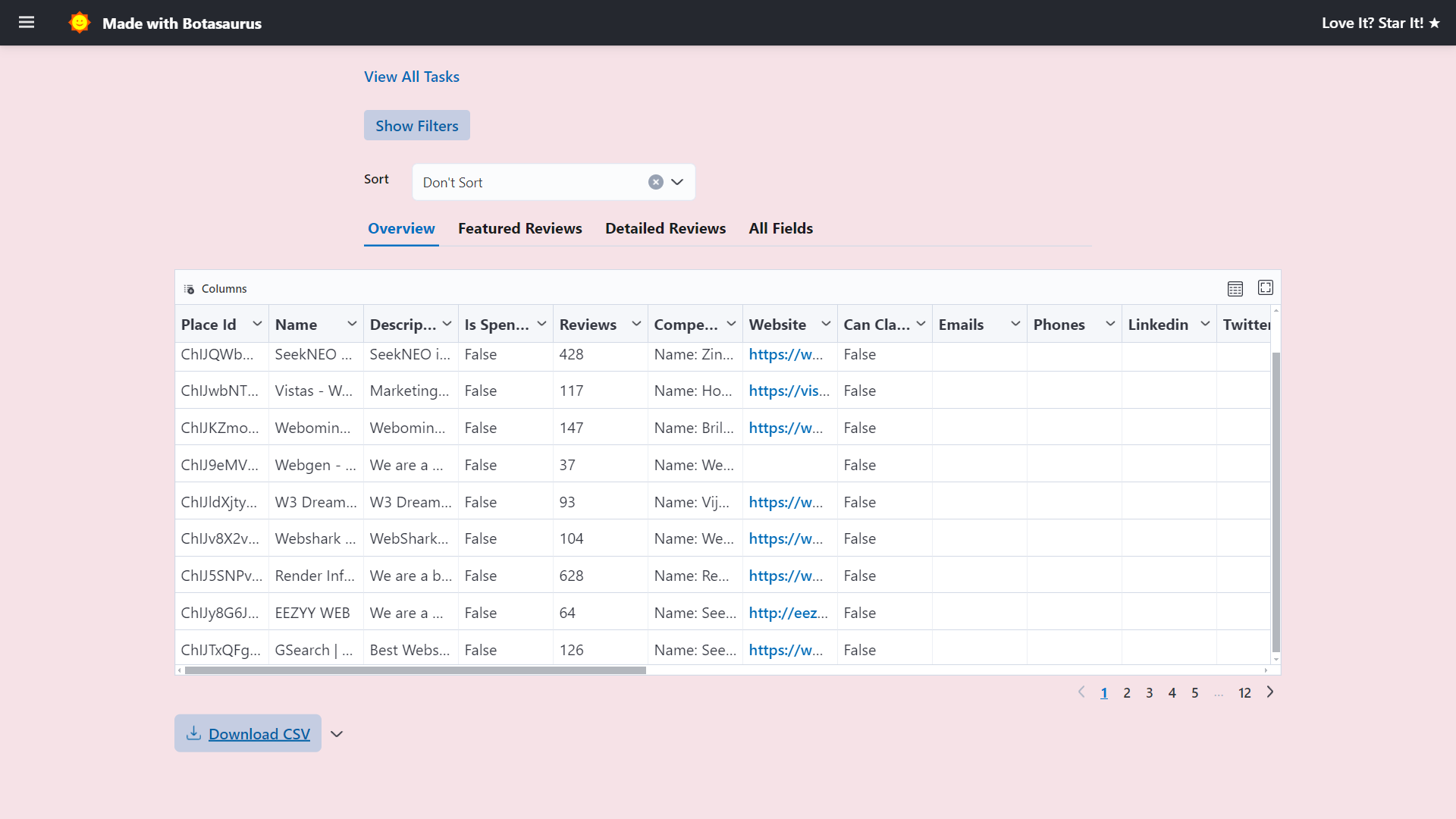

This is the most important page where you can view, sort, filter, or download the results of the task.

Sorting

By default, we sort the listings in the "Best Potential Customers" order, which uses the following criteria:

- Reviews [Businesses with more reviews come first]

- Website [Businesses more open to technology come first]

- Is Spending On Ads [Businesses already investing in ads are more likely to invest in your product, so they appear first.]

You can also sort by other criteria, such as name or reviews.
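One way to picture the default order is as a multi-key sort. This is a sketch under stated assumptions, not the dashboard's code: the field names `reviews`, `website`, and `is_spending_on_ads` are hypothetical, and treating the three criteria as a strict priority order (reviews first, then website, then ads) is an assumption.

```python
def best_customer_key(listing: dict) -> tuple:
    """Sort key: more reviews first, then has a website, then runs ads."""
    return (
        -(listing.get("reviews") or 0),              # higher review counts sort earlier
        not bool(listing.get("website")),            # False (has website) sorts before True
        not bool(listing.get("is_spending_on_ads")), # False (runs ads) sorts before True
    )


def sort_best_potential_customers(listings: list[dict]) -> list[dict]:
    """Return a new list ordered by the assumed 'Best Potential Customers' criteria."""
    return sorted(listings, key=best_customer_key)


listings = [
    {"name": "A", "reviews": 10, "website": None, "is_spending_on_ads": False},
    {"name": "B", "reviews": 50, "website": "https://b.example", "is_spending_on_ads": False},
    {"name": "C", "reviews": 10, "website": "https://c.example", "is_spending_on_ads": True},
    {"name": "D", "reviews": 10, "website": "https://d.example", "is_spending_on_ads": False},
]
ranked = sort_best_potential_customers(listings)
```

Because Python's `sorted` is stable, listings that tie on all three criteria keep their original relative order.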

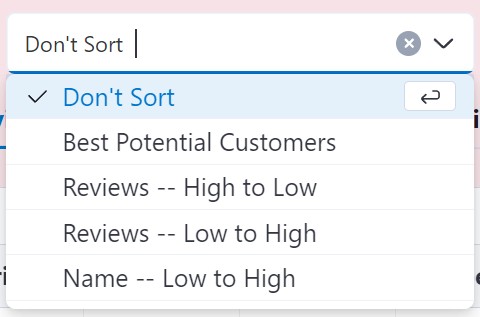

Filters

To find the exact results you're looking for, click the "Show Filters" button and apply the desired filters.
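If you prefer to filter exported data yourself, the same idea can be sketched in a few lines. The function `filter_listings` and the field names `reviews` and `rating` are hypothetical, chosen for illustration; the dashboard's actual filters may differ.

```python
def filter_listings(listings: list[dict], min_reviews: int = 0, min_rating: float = 0.0) -> list[dict]:
    """Keep listings that meet both thresholds; missing values count as zero."""
    return [
        listing
        for listing in listings
        if (listing.get("reviews") or 0) >= min_reviews
        and (listing.get("rating") or 0.0) >= min_rating
    ]


sample = [
    {"name": "A", "reviews": 120, "rating": 4.6},
    {"name": "B", "reviews": 8, "rating": 4.9},
    {"name": "C", "reviews": 300, "rating": 3.2},
    {"name": "D", "reviews": None, "rating": None},
]
popular_and_good = filter_listings(sample, min_reviews=50, min_rating=4.0)
```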

Export

Download results in various formats (CSV, JSON, Excel) using the export button.
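Once exported, the files can be processed with the Python standard library. The sketch below converts JSON text to CSV text; it assumes the JSON export is a list of flat objects, which may not match the real export exactly, and `json_export_to_csv` is a hypothetical helper name.

```python
import csv
import io
import json


def json_export_to_csv(json_text: str) -> str:
    """Convert a JSON array of flat objects into CSV text."""
    rows = json.loads(json_text)
    # Collect column names in first-seen order so every row fits the header.
    fieldnames = []
    for row in rows:
        for key in row:
            if key not in fieldnames:
                fieldnames.append(key)
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=fieldnames, lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)  # missing keys are written as empty cells
    return buffer.getvalue()


csv_text = json_export_to_csv('[{"name": "A", "reviews": 10}, {"name": "B", "rating": 4.5}]')
```

To work with a downloaded file, read its contents with `open(path, encoding="utf-8").read()` and pass the text to the function.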

❓ How can I access additional information like websites, phone numbers, geo-coordinates, and price ranges?

The free version shows only a limited set of data points. To see additional data points, consider upgrading to the Pro Version:

- 🌐 Website

- 📞 Phone Numbers

- 🌍 Geo-Coordinates

- 💰 Price Range

- And many more! Explore a full list of data points here: https://github.com/omkarcloud/google-maps-scraper/blob/master/fields.md

The Pro Version is a one-time investment with lifetime updates and absolutely zero risk because we offer a generous 90-Day Refund Policy!

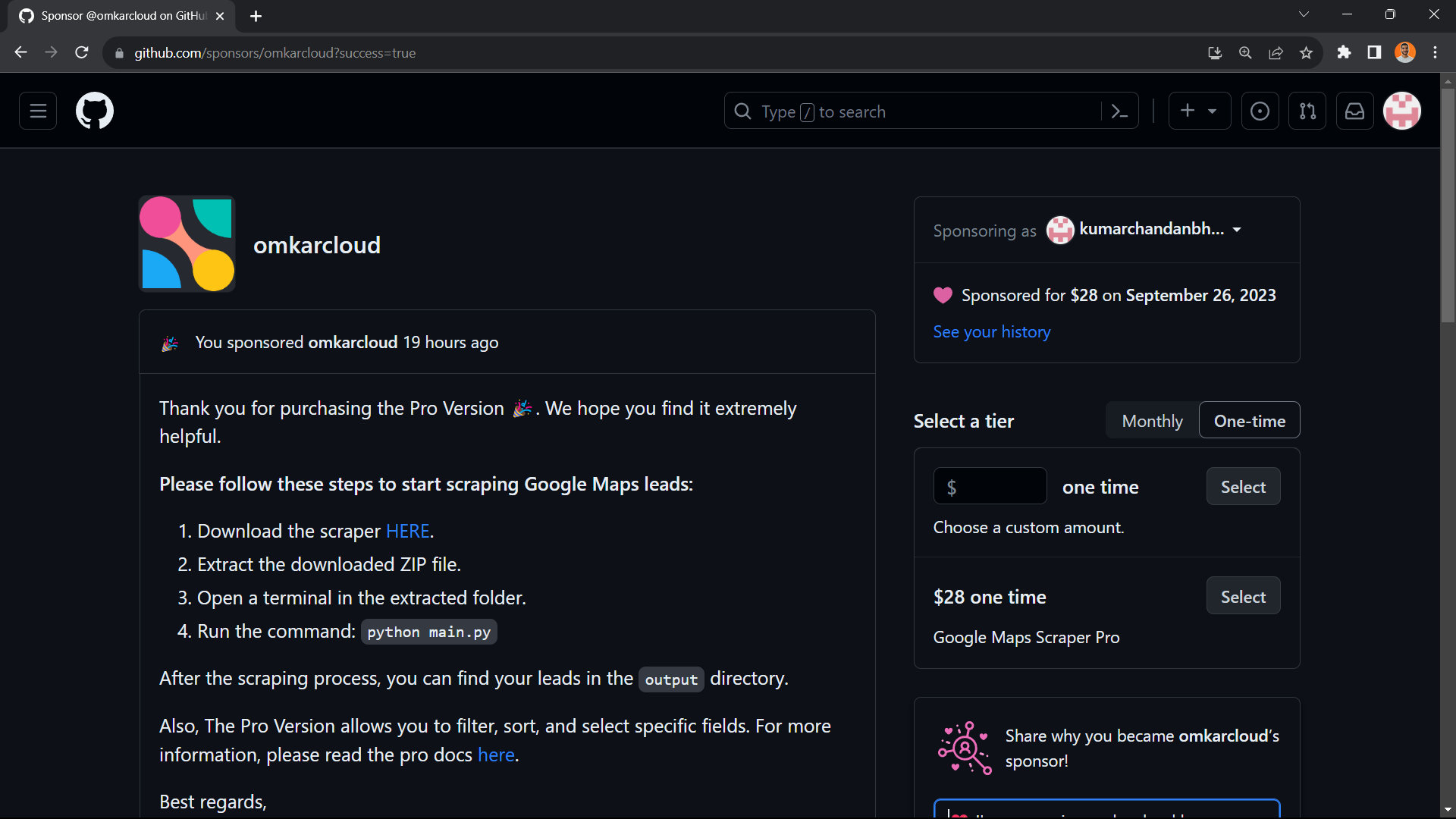

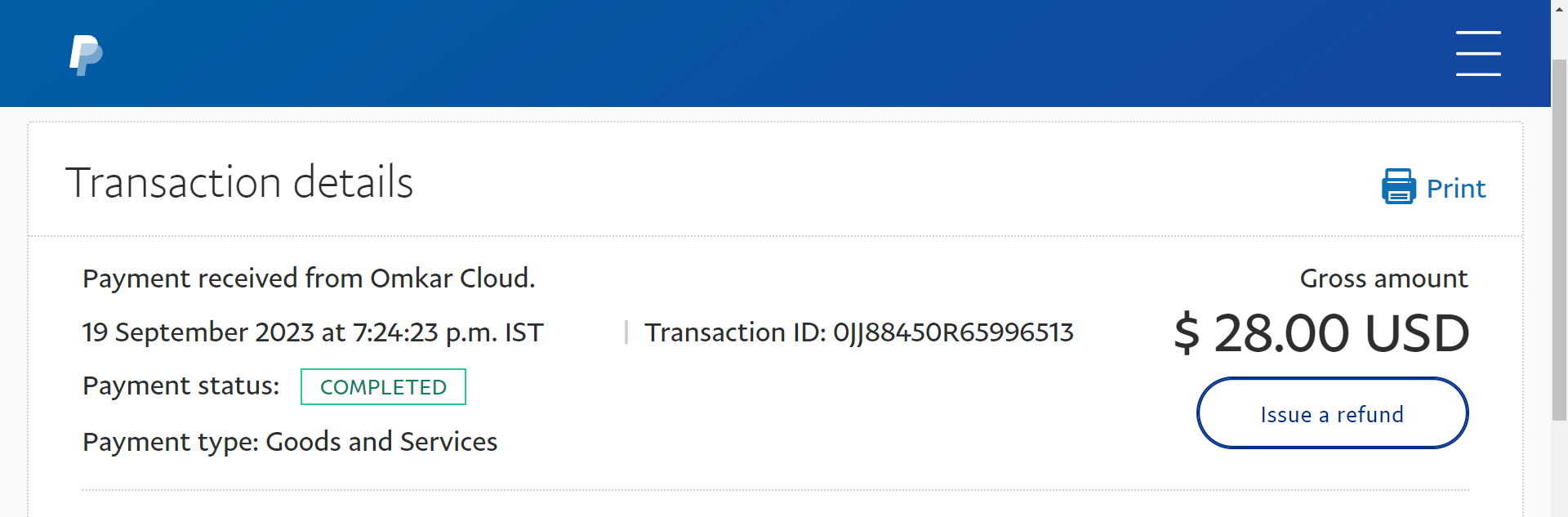

Visit the GitHub Sponsorship Page here and make a one-time payment of $28 by selecting the Google Maps Scraper Pro option.

After payment, you'll see a success screen with instructions on how to use the Pro Version:

We wholeheartedly ❤️ believe in the value our product brings for you, especially since it has successfully worked for hundreds of people like you.

But, we also understand the reservations you might have.

That's why we've put the ball in your court: If, within the next 90 days, you feel that our product hasn’t met your expectations, don't hesitate. Reach out to us, and within 24 hours, we will gladly refund your money, no questions and no hassles.

The risk is entirely on us, because we're that confident in what we've created!

We are ethical and honest people, and we will not keep your money if you are not happy with our product. Requesting a refund is a simple process that should only take about 5 minutes. To request a refund, ensure you have one of the following:

- A PayPal Account (e.g., "myname@example.com" or "chetan@gmail.com")

- or a UPI ID (For India Only) (e.g., 'myname@bankname' or 'chetan@okhdfc')

Next, follow these steps to initiate a refund:

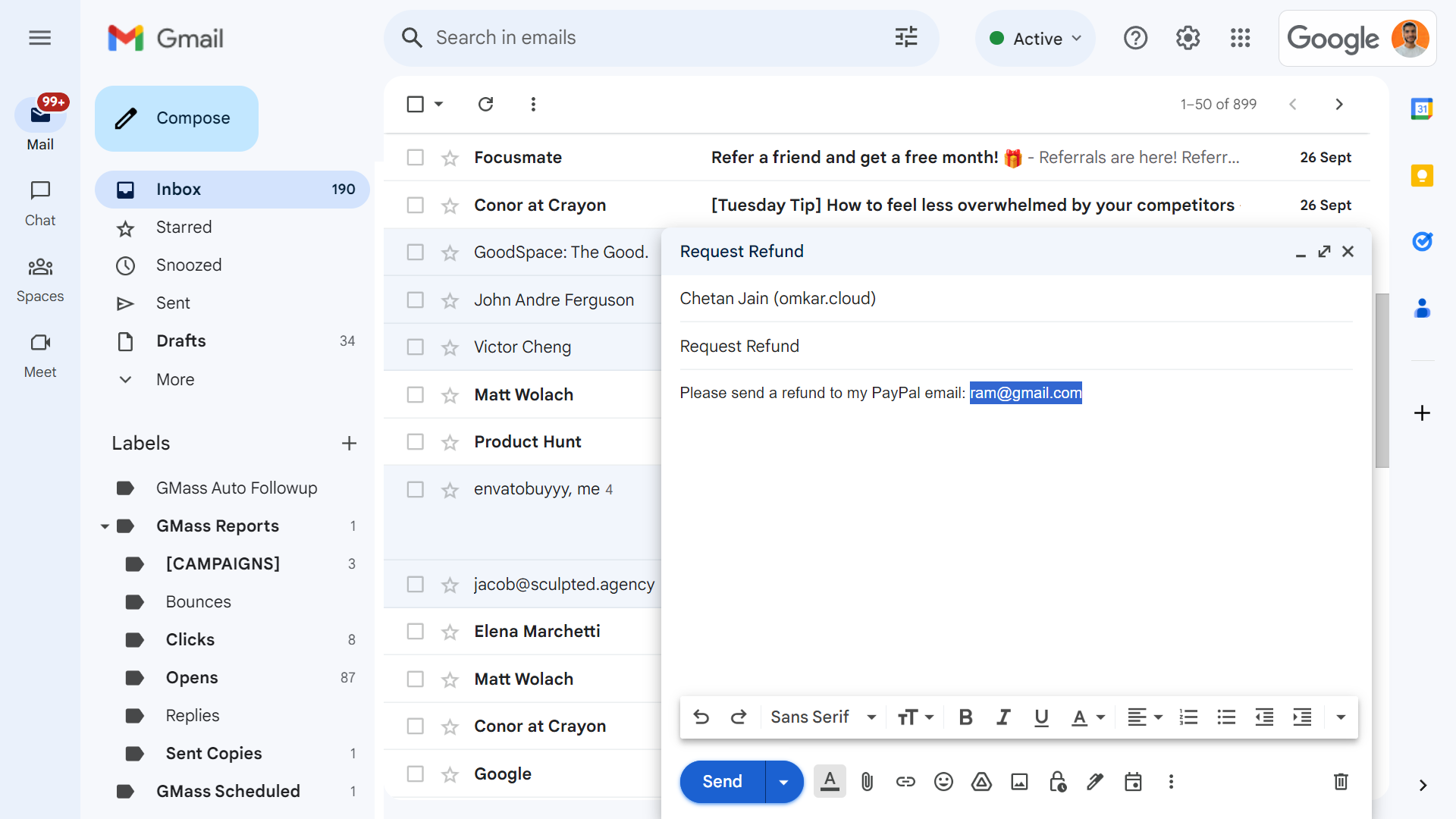

-

Send an email to

chetan@omkar.cloudusing the following template:-

To request a refund via PayPal:

Subject: Request Refund Content: Please send a refund to my PayPal email: myname@example.com -

To request a refund via UPI (For India Only):

Subject: Request Refund Content: Please send a refund to my UPI ID: myname@bankname

-

-

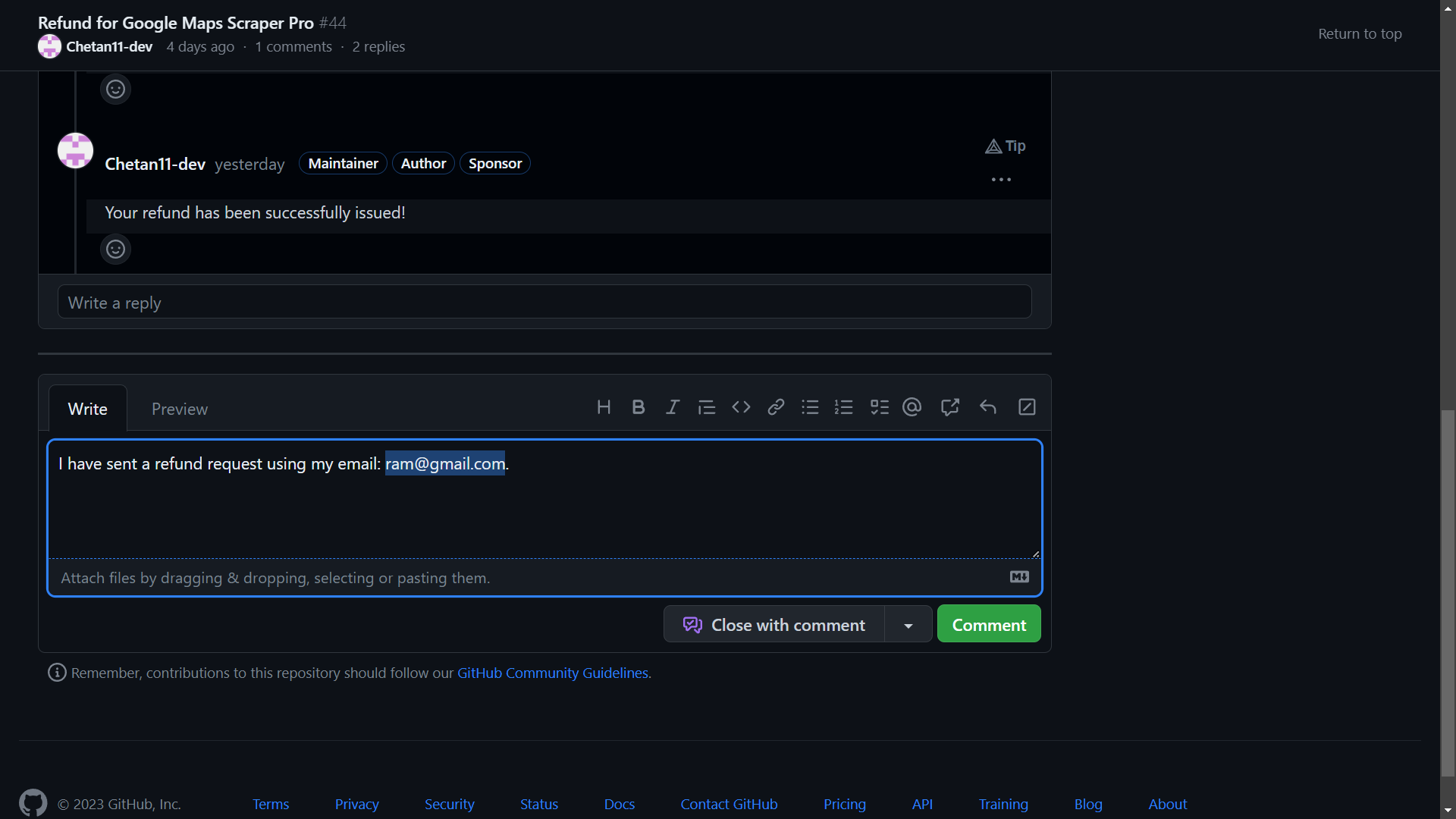

Next, go to the discussion here and comment to request a refund using this template:

I have sent a refund request from my email: myname@example.com. -

You can expect to receive your refund within 1 day. We will also update you in the GitHub Discussion here :)

Also, the complete $28 will be refunded to you within 24 hours, without any questions and without any hidden charges.

❓ Could you share resources that would be helpful to me, as I am sending personalized emails providing people with useful services?

I recommend reading The Cold Email Manifesto by Alex Berman to learn how to write emails that get replies.

Rest assured, I have your best interests at heart, and the above link is not an affiliate link. It's just a really awesome book, which is why I'm recommending it to you.

Thank you! We used Botasaurus, which is the secret behind our awesome Google Maps Scraper.

Botasaurus is a web scraping framework that makes life a lot easier for web scrapers.

It handled the hardest parts of our scraper, such as:

- Creating a gorgeous UI dashboard with task management features

- Sorting, filtering, and exporting data as CSV, JSON, Excel, etc.

- Caching, parallel and asynchronous scraping, and anti-detection measures

- Built-in integration with Kubernetes, Docker, Server, Gitpod, and a REST API

If you're a web scraper, I really recommend learning about Botasaurus here 🚀.

Trust me, learning Botasaurus will only take 20 minutes, and it can save you thousands of hours in your life as a web scraper.

Having read this page, you have all the knowledge needed to effectively use the tool.

You may choose to read the following questions based on your interests:

- I Don't Have Python, or I'm Facing Errors When Setting Up the Scraper on My PC. How to Solve It?

- Do I Need Proxies?

For further help, feel free to reach out to us through:

- WhatsApp: If you prefer WhatsApp, simply send a message here. Also, to help me provide the best possible answer, please include as much detail as possible.

- GitHub Discussions: If you believe your question could benefit the community, feel free to post it in our GitHub discussions here.

- Email: If you prefer email, kindly send your queries to chetan@omkar.cloud. Also, to help me provide the best possible answer, please include as much detail as possible.

We look forward to helping you and will respond to emails and WhatsApp messages within 24 hours.

Good Luck!

Love It? Star It ⭐!

Become one of our amazing stargazers by giving us a star ⭐ on GitHub!

It's just one click, but it means the world to me.