Dataset and code for our IEEE Signal Processing Letters (2021) paper: "Multi-Modal Visual Place Recognition in Dynamics-Invariant Perception Space"

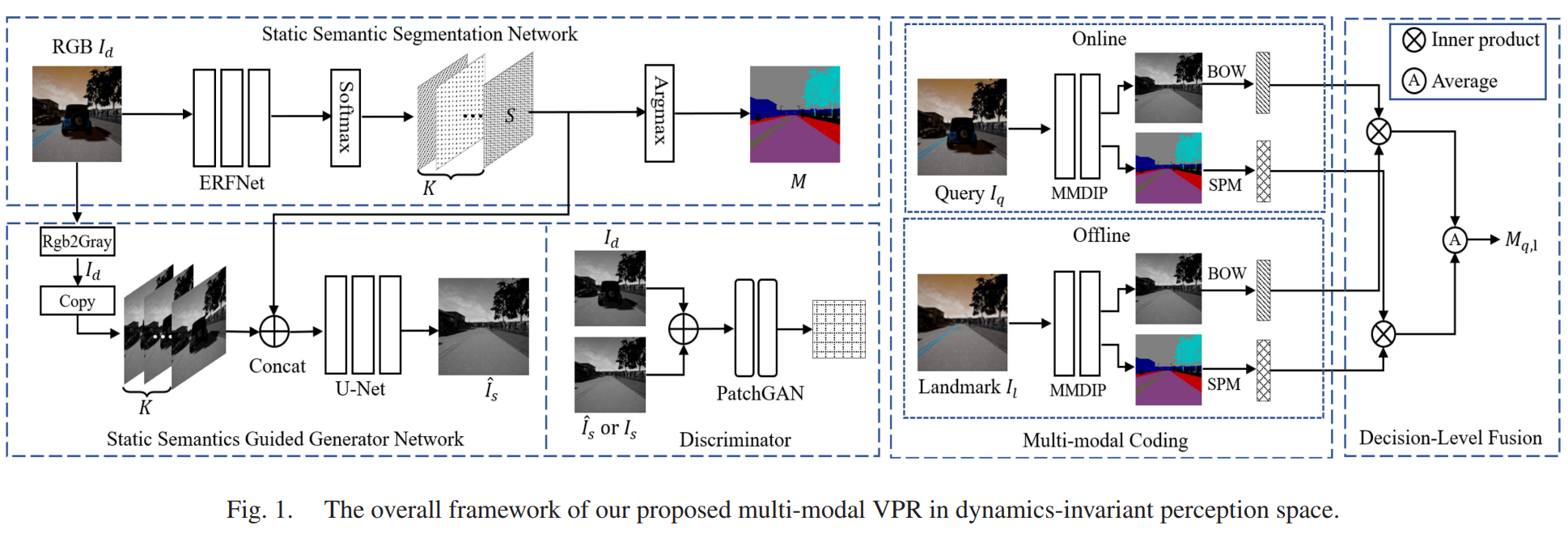

We build a dynamics-invariant perception space to improve feature matching in dynamic environments. This work can be seen as an extension of Empty Cities (IEEE T-RO 2020).

Specifically, we propose a dynamics-invariant perception network that recovers static semantics and static images directly from dynamic frames. We then design a multi-modal coding strategy to generate robust semantic-visual features for image matching.

- Install PyTorch (tested on 1.2.0)

- Synthetic dataset for dynamic-to-static semantic segmentation [link] (Extraction-code: 5250).

- Synthetic dataset for dynamic-to-static image translation [link].

python train_TransNet --dataroot EmptycitiesDataset_path --gpu_ids 0 --name xyz --batchSize x --phase train

python test_TransNet --gpu_ids 0 --name SegTransNet --phase testRand --epoch x --no_flip

We provide training and testing scripts for static semantic segmentation.

python train_SegNet --gpu_ids 0 --name xyz --batchSize x --phase trainRand --mode Seg

python test_SegNet --gpu_ids 0 --name xyz --epoch x --phase testRand --mode Seg

We also provide evaluation scripts for image quality (L1, L2, PSNR, SSIM) and semantic segmentation performance (PA, MPA, MIoU, FWIoU). Please see "src/scripts" for more details.
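For orientation, the metrics named above can be sketched in a few lines of NumPy. This is a minimal illustration, not the code in "src/scripts". The function names, the choice of mean squared error for "L2", the 8-bit peak value of 255, and the convention of averaging only over classes that occur in the ground truth are all assumptions made here. SSIM is omitted because it needs a windowed implementation.

```python
import numpy as np


def image_metrics(pred, target, max_val=255.0):
    """L1 (mean absolute error), L2 (assumed here: mean squared error), PSNR in dB."""
    pred = np.asarray(pred, dtype=np.float64)
    target = np.asarray(target, dtype=np.float64)
    diff = pred - target
    l1 = np.abs(diff).mean()
    l2 = (diff ** 2).mean()
    # PSNR is unbounded when the two images are identical.
    psnr = float("inf") if l2 == 0 else 10.0 * np.log10(max_val ** 2 / l2)
    return {"L1": float(l1), "L2": float(l2), "PSNR": float(psnr)}


def confusion_matrix(pred, target, num_classes):
    """Rows index the ground-truth class, columns the predicted class.

    Assumes predictions lie in [0, num_classes); ground-truth labels
    outside that range (e.g. an ignore label) are skipped.
    """
    pred = np.asarray(pred).ravel().astype(np.int64)
    target = np.asarray(target).ravel().astype(np.int64)
    valid = (target >= 0) & (target < num_classes)
    idx = num_classes * target[valid] + pred[valid]
    counts = np.bincount(idx, minlength=num_classes ** 2)
    return counts.reshape(num_classes, num_classes)


def segmentation_metrics(cm):
    """PA, MPA, MIoU and FWIoU from a confusion matrix."""
    cm = np.asarray(cm, dtype=np.float64)
    total = cm.sum()
    tp = np.diag(cm)              # correctly labelled pixels per class
    gt = cm.sum(axis=1)           # ground-truth pixels per class
    predicted = cm.sum(axis=0)    # predicted pixels per class
    present = gt > 0              # assumption: ignore classes absent from the ground truth
    iou = tp[present] / (gt[present] + predicted[present] - tp[present])
    return {
        "PA": float(tp.sum() / total),
        "MPA": float((tp[present] / gt[present]).mean()),
        "MIoU": float(iou.mean()),
        "FWIoU": float((gt[present] / total * iou).sum()),
    }


if __name__ == "__main__":
    cm = confusion_matrix([0, 0, 1, 1], [0, 1, 1, 1], num_classes=2)
    print(segmentation_metrics(cm))
    print(image_metrics([[0, 10], [20, 30]], [[0, 0], [20, 20]]))
```

For the toy labels in the usage lines, three of four pixels are correct, so PA is 0.75, and FWIoU is 0.625. When evaluating a full test set, accumulate one confusion matrix over all images before calling `segmentation_metrics`, rather than averaging per-image scores.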

BibTeX:

@ARTICLE{9594697,
  author={Wu, Lin and Wang, Teng and Sun, Changyin},
  journal={IEEE Signal Processing Letters},
  title={Multi-Modal Visual Place Recognition in Dynamics-Invariant Perception Space},
  year={2021},
  volume={28},
  pages={2197-2201},
  doi={10.1109/LSP.2021.3123907}}

- This work was supported in part by the Postgraduate Research and Practice Innovation Program of Jiangsu Province under Grant SJCX20_0035, in part by the Fundamental Research Funds for the Central Universities under Grant 3208002102D, and in part by the National Natural Science Foundation of China under Grant 61803084.