- Elisabetta Fedele (@elisabettafedele), elisabetta.fedele@mail.polimi.it

- Filippo Lazzati (@filippolazzati), filippo.lazzati@mail.polimi.it

To build the ontology, we started from the following competency questions:

- Which characters speak in German at least once?

- Which characters say a sentence x?

- What are the numbers of the scenes that take place in a New York taxicab at night?

- Which pages does scene number x occupy?

- In which part of the day does scene number x take place?

- How many times is the word "Facebook" used?

- What is the starting page of scene x?

- How many times are two characters x and y in the same scene?

- Which movies are in the dataset?

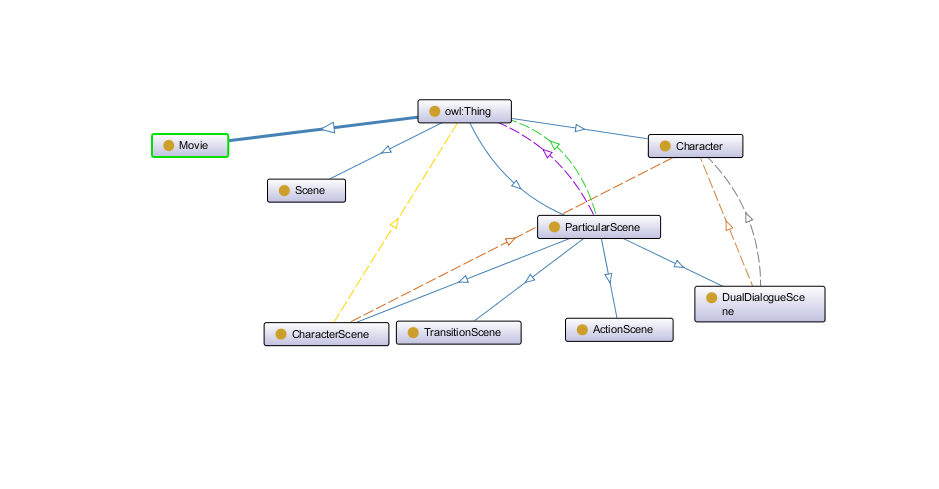

The ontology is written in OWL and its structure is the following:

- Movie

- Character

- ParticularScene
    - ActionScene
    - CharacterScene
    - DualDialogueScene
    - TransitionScene

- Scene

Movie, Character, ParticularScene and Scene are the four main classes, while ActionScene, CharacterScene, DualDialogueScene and TransitionScene are subclasses of ParticularScene.
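The subclass relation is what lets a reasoner classify instances: anything typed as an ActionScene is also a ParticularScene. The Python sketch below is a simplified illustration of that single entailment (the RDFS rule rdfs9, applied until nothing new is derived), not Jena's reasoner; plain class names stand in for the ontology's IRIs, and the instance name is hypothetical.

```python
from collections import defaultdict

# Subclass axioms from the structure above (plain names stand in for IRIs).
SUBCLASS_OF = {
    ("ActionScene", "ParticularScene"),
    ("CharacterScene", "ParticularScene"),
    ("DualDialogueScene", "ParticularScene"),
    ("TransitionScene", "ParticularScene"),
}


def infer_types(type_assertions, subclass_of):
    """Return every (instance, class) pair entailed by rdf:type + rdfs:subClassOf.

    Rule rdfs9: if x rdf:type C and C rdfs:subClassOf D, then x rdf:type D.
    The loop runs until no new pair appears, so chains of any depth are covered.
    """
    supers = defaultdict(set)
    for sub, sup in subclass_of:
        supers[sub].add(sup)

    inferred = set(type_assertions)
    changed = True
    while changed:
        changed = False
        for instance, cls in list(inferred):
            for sup in supers[cls]:
                if (instance, sup) not in inferred:
                    inferred.add((instance, sup))
                    changed = True
    return inferred


if __name__ == "__main__":
    # Hypothetical instance: one action unit of some scene.
    asserted = {("scene_unit_1", "ActionScene")}
    for pair in sorted(infer_types(asserted, SUBCLASS_OF)):
        print(pair)
```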

A representation of the classes made by OntoGraf:

Movie represents a movie. Its only property is the title. Each movie is stored as a named graph.

Scene represents a main scene of the movie. Its properties describe the location and the time in which it is played, as well as the pages it occupies in the screenplay.

Character represents a character of the movie, with its name and the sentences it says during the movie. It also has a hasModifier property, which provides information about the visibility of the character in a scene (see Screenplay).

Finally, ParticularScene is the superclass of ActionScene, CharacterScene, DualDialogueScene and TransitionScene. The suffix "Scene" should not be misinterpreted: in our model, a ParticularScene is the basic unit of a Scene, and it can be of four different types depending on what happens in that unit (a dialogue, an action or a transition).
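To make the composition concrete, here is a rough Python mirror of the model: a Scene aggregates an ordered list of ParticularScene units, each of one of the four kinds. This README does not list the ontology's property names, so the field names (number, location, time_of_day, pages, text) are hypothetical, and the helper methods only illustrate how a competency question such as the starting page of a scene reads against this structure.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from enum import Enum


class UnitKind(Enum):
    """The four ParticularScene subclasses of the ontology."""
    ACTION = "ActionScene"
    CHARACTER = "CharacterScene"
    DUAL_DIALOGUE = "DualDialogueScene"
    TRANSITION = "TransitionScene"


@dataclass
class ParticularScene:
    """Basic unit of a Scene (hypothetical field: the unit's raw text)."""
    kind: UnitKind
    text: str


@dataclass
class Scene:
    """A main scene. All field names are hypothetical stand-ins for the
    ontology's location, time and page properties."""
    number: int
    location: str
    time_of_day: str
    pages: list[int]
    units: list[ParticularScene] = field(default_factory=list)

    def starting_page(self) -> int:
        # Competency question: what is the starting page of scene x?
        return min(self.pages)

    def units_of(self, kind: UnitKind) -> list[ParticularScene]:
        # Keeps the original order of the units inside the scene.
        return [unit for unit in self.units if unit.kind is kind]


if __name__ == "__main__":
    # Made-up values, for illustration only.
    scene = Scene(
        number=1,
        location="INT. TAXICAB",
        time_of_day="NIGHT",
        pages=[3, 4],
        units=[
            ParticularScene(UnitKind.ACTION, "A cab drives through the city."),
            ParticularScene(UnitKind.CHARACTER, "A line of dialogue."),
        ],
    )
    print(scene.starting_page(), len(scene.units_of(UnitKind.ACTION)))
```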

- The screenplays in PDF format can be downloaded from the following website:

- To obtain a format that can easily be translated to RDF, we used a PDF-to-JSON converter for screenplays from SMASH-CUT.

- RDF triples can be obtained from the JSON files through RML rules. YARRRML, a YAML-based language, allows RML rules to be written in a more human-readable way. The YARRRML rules were tested with the online tool Matey. The rules.yaml file contains the YARRRML rules, and the yarrrml-parser library can be used to convert this file into rules.rml. To do so, open a terminal and run the following command:

```
yarrrml-parser -i rules.yaml -o rules.rml
```

rmlmapper-4.9.0.jar, taken from the public repository, executes RML rules to generate Linked Data. We used it to create the RDF triples from the JSON files (screenplays). The command is:

```
java -jar rmlmapper-4.9.0.jar -m rules.rml -o SemScript/src/main/resources/movies_in_rdf/the_social_network.rdf
```

With the above command, the rules.rml file is used to transform the the_social_network.json file (contained in /movies) into the_social_network.rdf. Note that rmlmapper writes its output with ANSI encoding, while the Jena APIs work with UTF-8, so the output file has to be re-encoded as UTF-8. Moreover, the client class works with .ttl files, so the extension of the output file (the_social_network.rdf) has to be changed to .ttl.
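The two commands and the two manual fixes described above (re-encoding to UTF-8 and renaming to .ttl) can be chained in one script. A minimal Python sketch, assuming that yarrrml-parser and java are on the PATH, that the jar sits in the working directory, and that "ANSI" means the Windows-1252 code page (cp1252); change source_encoding if your system uses a different code page. The original .rdf file is left in place.

```python
"""Sketch of the mapping pipeline: YARRRML -> RML -> RDF -> UTF-8 .ttl file."""
import subprocess
from pathlib import Path

# Assumption: the jar is in the working directory, as in the commands above.
RMLMAPPER_JAR = "rmlmapper-4.9.0.jar"


def build_commands(yarrrml, rml, output, jar=RMLMAPPER_JAR):
    """Argv lists for the two CLI steps, in the order they must run."""
    return [
        ["yarrrml-parser", "-i", str(yarrrml), "-o", str(rml)],
        ["java", "-jar", str(jar), "-m", str(rml), "-o", str(output)],
    ]


def reencode_as_ttl(src, source_encoding="cp1252"):
    """Write a UTF-8 copy of `src` next to it, with the .ttl extension.

    Assumption: "ANSI" here is the Windows-1252 code page (cp1252).
    """
    src = Path(src)
    dst = src.with_suffix(".ttl")
    dst.write_text(src.read_text(encoding=source_encoding), encoding="utf-8")
    return dst


def run_pipeline(yarrrml, rml, output):
    for argv in build_commands(yarrrml, rml, output):
        subprocess.run(argv, check=True)  # raises if a step exits with an error
    return reencode_as_ttl(output)


if __name__ == "__main__":
    out = "SemScript/src/main/resources/movies_in_rdf/the_social_network.rdf"
    print(run_pipeline("rules.yaml", "rules.rml", out))
```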

Note: the embedded_client_server_multiple_movies folder contains a server that, once launched, creates a dataset (without using reasoners) with named graphs: each movie is a named graph and can be queried with these queries.
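Because each movie lives in its own named graph, a query can either enumerate the graphs (which answers the competency question about which movies the dataset contains) or be restricted to one movie with a GRAPH clause. A small Python sketch that builds such query strings; the graph IRI is hypothetical, and the actual queries in the queries folder may be written differently.

```python
# Lists one IRI per movie: in this setup every movie is a named graph.
LIST_MOVIES = """\
SELECT DISTINCT ?movie
WHERE { GRAPH ?movie { ?s ?p ?o } }
ORDER BY ?movie
"""


def in_movie_graph(graph_iri: str, where_body: str) -> str:
    """Wrap a graph pattern so that it only matches triples of one movie's graph."""
    return f"GRAPH <{graph_iri}> {{ {where_body} }}"


def select_in_movie(graph_iri: str, variables: str, where_body: str) -> str:
    """Build a SELECT query restricted to a single movie (named graph)."""
    return f"SELECT {variables} WHERE {{ {in_movie_graph(graph_iri, where_body)} }}"


if __name__ == "__main__":
    # Hypothetical IRI: the real graph names depend on how the server creates them.
    iri = "http://example.org/movies/the_social_network"
    print(LIST_MOVIES)
    print(select_in_movie(iri, "(COUNT(*) AS ?triples)", "?s ?p ?o ."))
```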

The competency questions in the competency_questions.txt file have been converted to the SPARQL queries contained in the queries folder. They can be executed in different ways:

- Run the EmbeddedServer class (an embedded Fuseki server) with the name of the movie to query, then run the ClientForEmbeddedServer class, which connects to the EmbeddedServer and runs the queries. Both classes use the Jena APIs. In particular, the EmbeddedServer class builds one Model for the ontology SemScript.owl and one for the data (for example, the_social_network.ttl), and uses Jena's standard OWL reasoner to infer new data. The client connects to it on port 3031 (a custom value, since a Fuseki server usually runs on port 3030) and submits the queries.

- Download the Fuseki server on your PC (download here) and run it (on Windows, use the fuseki-server command from the command line inside the downloaded directory). A browser can then connect to it (localhost:3030) and run the queries through the UI.

- As a variant of the previous method, run a Fuseki server but, instead of using the UI, run the queries with the outSPARQL plugin for IntelliJ: add a SPARQL endpoint in the plugin settings specifying its location (port 3030), then open a .rq file and run the query.

- Finally, run the Fuseki server on your PC (for example, using this image), configure it as in the second method, and use the Jupyter notebook QueriesSPARQL.ipynb to run the queries.
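Whichever server is used, the client side boils down to sending each .rq file to a SPARQL endpoint and reading the results. As a rough, dependency-free Python illustration (not the ClientForEmbeddedServer class or the notebook), the sketch below uses the SPARQL 1.1 Protocol "query via POST directly" form and the JSON results format. Only the ports 3031 and 3030 come from the text above; the dataset path /ds and the sparql service name are assumptions, and parse_bindings handles SELECT results only.

```python
import json
import urllib.request
from pathlib import Path

# Assumption: dataset "/ds" exposing a "sparql" service; adjust to your server.
EMBEDDED_ENDPOINT = "http://localhost:3031/ds/sparql"    # EmbeddedServer
STANDALONE_ENDPOINT = "http://localhost:3030/ds/sparql"  # standalone Fuseki


def sparql_request(endpoint, query):
    """Build a POST request carrying the raw query (SPARQL 1.1 Protocol)."""
    return urllib.request.Request(
        endpoint,
        data=query.encode("utf-8"),
        headers={
            "Content-Type": "application/sparql-query",
            "Accept": "application/sparql-results+json",
        },
        method="POST",
    )


def parse_bindings(payload):
    """Flatten a SELECT result in SPARQL JSON format into {variable: value} dicts."""
    doc = json.loads(payload)
    return [
        {var: cell["value"] for var, cell in row.items()}
        for row in doc["results"]["bindings"]
    ]


def load_queries(folder="queries"):
    """Read every .rq file of the folder, sorted by file name."""
    return {p.name: p.read_text(encoding="utf-8") for p in sorted(Path(folder).glob("*.rq"))}


def run_all(endpoint=EMBEDDED_ENDPOINT, folder="queries"):
    for name, query in load_queries(folder).items():
        with urllib.request.urlopen(sparql_request(endpoint, query)) as resp:
            print(name, parse_bindings(resp.read().decode("utf-8")))


if __name__ == "__main__":
    run_all()
```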

Some final notes:

- You can configure a reasoner on the Fuseki server using the provided fuseki_server_config_file.ttl (only a few words need to be changed).

The following tools were used:

- Protégé - Ontology Creation

- Matey - RDF Mappings Generator

- Maven - Dependency Management

- IntelliJ - IDE

- outSPARQL - Plugin for running queries

- Jupyter notebook - IDE

- YARRRML parser - YARRRML to RML converter

- RML mapper - Linked Data producer

- Docker - Application deployer