[Official, ECCV 2024 (Oral), paper] 🔥

Qihao Zhao*1,4, Yalun Dai*2, Shen Lin3, Wei Hu1, Fan Zhang1, Jun Liu4,5

1 Beijing University of Chemical Technology

2 Nanyang Technological University

3 Xidian University

4 Singapore University of Technology and Design

5 Lancaster University

(* Equal contribution)

- To install requirements:

pip install -r requirements.txt

- Hardware requirements: 8 GPUs with >= 12 GB of memory each are recommended. Otherwise, models with more experts may not fit, especially on datasets with more classes (the FC layers will be large). CPU training is not supported, but CPU inference could be supported with slight modifications.

- Please download these datasets and put them in the /data directory.

- ImageNet-LT and Places-LT can be found here.

- iNaturalist data should be the 2018 version from here.

- CIFAR-100 will be downloaded automatically by the dataloader.

data

├── ImageNet_LT

│ ├── test

│ ├── train

│ └── val

├── CIFAR100

│ └── cifar-100-python

├── CIFAR10

│ └── cifar-10-python

├── Place365

│ ├── data_256

│ ├── test_256

│ └── val_256

└── iNaturalist

  ├── test2018

  └── train_val2018
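
Before training, it can help to confirm that the layout above is in place. The following is a minimal sketch, not part of the repository: the helper name `missing_dirs` and the `data` root are assumptions, and the directory list is simply copied from the tree above.

```python
from pathlib import Path

# Expected sub-directories, copied from the layout above.
EXPECTED_DIRS = [
    "ImageNet_LT/test",
    "ImageNet_LT/train",
    "ImageNet_LT/val",
    "CIFAR100/cifar-100-python",
    "CIFAR10/cifar-10-python",
    "Place365/data_256",
    "Place365/test_256",
    "Place365/val_256",
    "iNaturalist/test2018",
    "iNaturalist/train_val2018",
]


def missing_dirs(root, expected=EXPECTED_DIRS):
    """Return the expected sub-directories that do not exist under root."""
    root = Path(root)
    return [rel for rel in expected if not (root / rel).is_dir()]


if __name__ == "__main__":
    # "data" is an assumed location; point this at your own data root.
    for rel in missing_dirs("data"):
        print(f"missing: {rel}")
```

The CIFAR directories may legitimately be reported as missing before the first run, since the dataloader downloads them automatically.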

- We provide txt files for test-agnostic long-tailed recognition on ImageNet-LT, Places-LT and iNaturalist 2018. The CIFAR-100 splits are generated automatically by the code.

- For iNaturalist 2018, please unzip iNaturalist_train.zip.

data_txt

├── ImageNet_LT

│ ├── ImageNet_LT_test.txt

│ ├── ImageNet_LT_train.txt

│ └── ImageNet_LT_val.txt

├── Places_LT_v2

│ ├── Places_LT_test.txt

│ ├── Places_LT_train.txt

│ └── Places_LT_val.txt

└── iNaturalist18

  ├── iNaturalist18_train.txt

  └── iNaturalist18_val.txt

- For training on Places-LT, we follow previous methods and use a pre-trained ResNet-152 model.

- Please download the checkpoint. Unzip and move the checkpoint files to /model/pretrained_model_places/.

nohup python train.py -c configs/{sade or bsce}/config_cifar100_ir10_{sade or ce}_rl.json &>{sade or ce}_rl_10.out&

nohup python train.py -c configs/{sade or bsce}/config_cifar100_ir50_{sade or ce}_rl.json &>{sade or ce}_rl_50.out&

nohup python train.py -c configs/{sade or bsce}/config_cifar100_ir100_{sade or ce}_rl.json &>{sade or ce}_rl_100.out&

Example:

nohup python train.py -c configs/sade/config_cifar100_ir100_sade_rl.json &>sade_rl_100.out&
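
The placeholder templates above can be expanded programmatically. The sketch below is illustrative only: the helper `cifar100_rl_command` is hypothetical, and pairing the `bsce` directory with the `ce` suffix is an assumption read off the order of the placeholders (only the `sade`/`sade` pairing is confirmed by the example).

```python
import shlex

# (config directory, config-name suffix) pairs. Pairing "bsce" with "ce" is an
# assumption based on the placeholder order in the command templates.
METHODS = {"sade": ("sade", "sade"), "bsce": ("bsce", "ce")}


def cifar100_rl_command(method, imbalance_ratio):
    """Build the train.py argument list and log file name for one CIFAR-100 run."""
    directory, suffix = METHODS[method]
    config = f"configs/{directory}/config_cifar100_ir{imbalance_ratio}_{suffix}_rl.json"
    log = f"{suffix}_rl_{imbalance_ratio}.out"
    return ["python", "train.py", "-c", config], log


if __name__ == "__main__":
    # Print one background command per imbalance ratio used above.
    for ratio in (10, 50, 100):
        argv, log = cifar100_rl_command("sade", ratio)
        print(f"nohup {shlex.join(argv)} &> {log} &")
```

Running the script only prints the commands; paste them into a shell, after checking that the config files exist, to launch the runs.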

# test

python test.py -r {$PATH}

python train.py -c configs/{sade or bsce}/config_imagenet_lt_resnext50_{sade or ce}_rl.json

python train.py -c configs/{sade or bsce}/config_imagenet_lt_resnext50_{sade or ce}_rl.json

python train.py -c configs/{sade or bsce}/config_iNaturalist_resnet50_{sade or ce}_rl.json

nohup python train.py -c configs/{sade or bsce}/config_cifar100_ir10_{sade or ce}.json &>{sade or ce}_10.out&

nohup python train.py -c configs/{sade or bsce}/config_cifar100_ir50_{sade or ce}.json &>{sade or ce}_50.out&

nohup python train.py -c configs/{sade or bsce}/config_cifar100_ir100_{sade or ce}.json &>{sade or ce}_100.out&

python test.py -r {$PATH}

python train.py -c configs/{sade or bsce}/config_imagenet_lt_resnext50_{sade or ce}.json

python train.py -c configs/{sade or bsce}/config_imagenet_lt_resnext50_{sade or ce}_rl.json

python train.py -c configs/{sade or bsce}/config_iNaturalist_resnet50_{sade or ce}_rl.json

If you find our work inspiring or use our codebase in your research, please consider giving a star ⭐ and a citation.

@article{zhao2024ltrl,

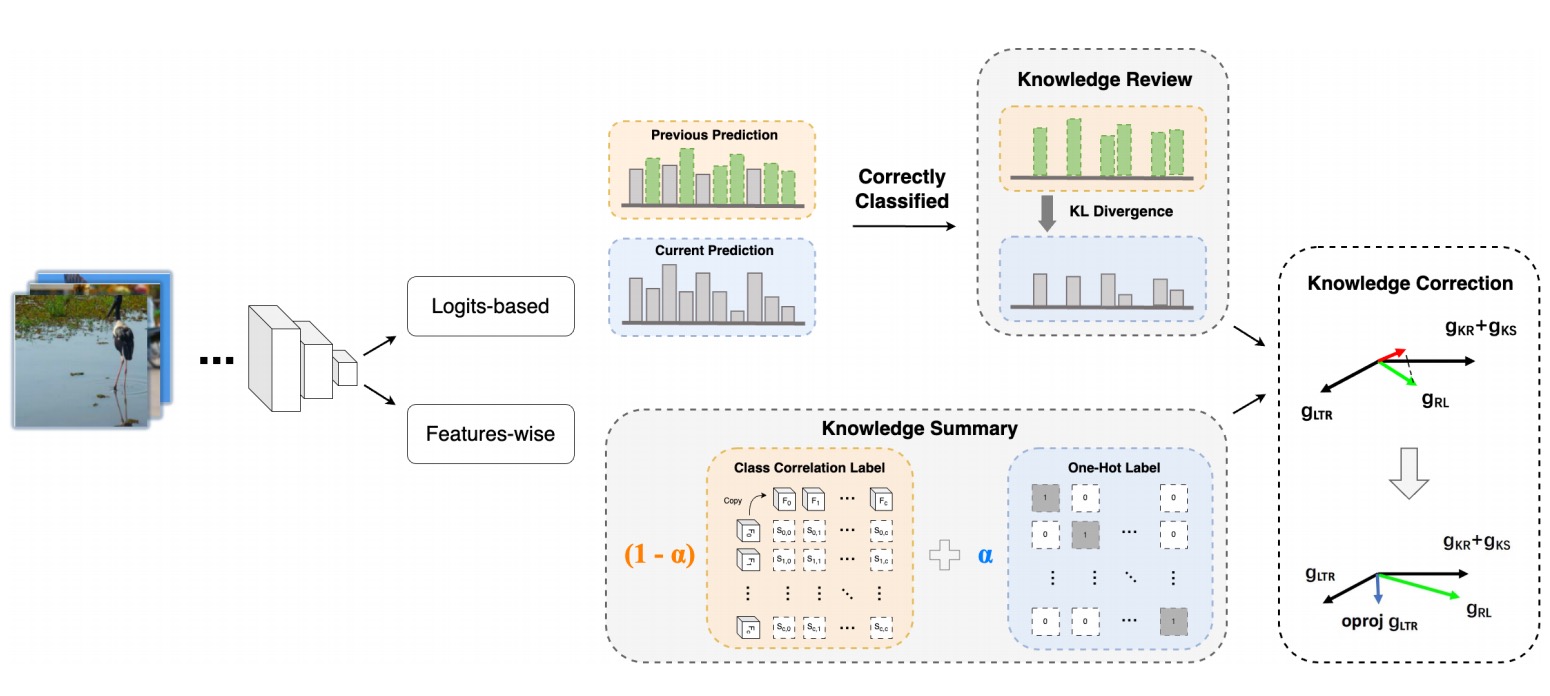

title={LTRL: Boosting Long-tail Recognition via Reflective Learning},

author={Zhao, Qihao and Dai, Yalun and Lin, Shen and Hu, Wei and Zhang, Fan and Liu, Jun},

journal={arXiv preprint arXiv:2407.12568},

year={2024}

}