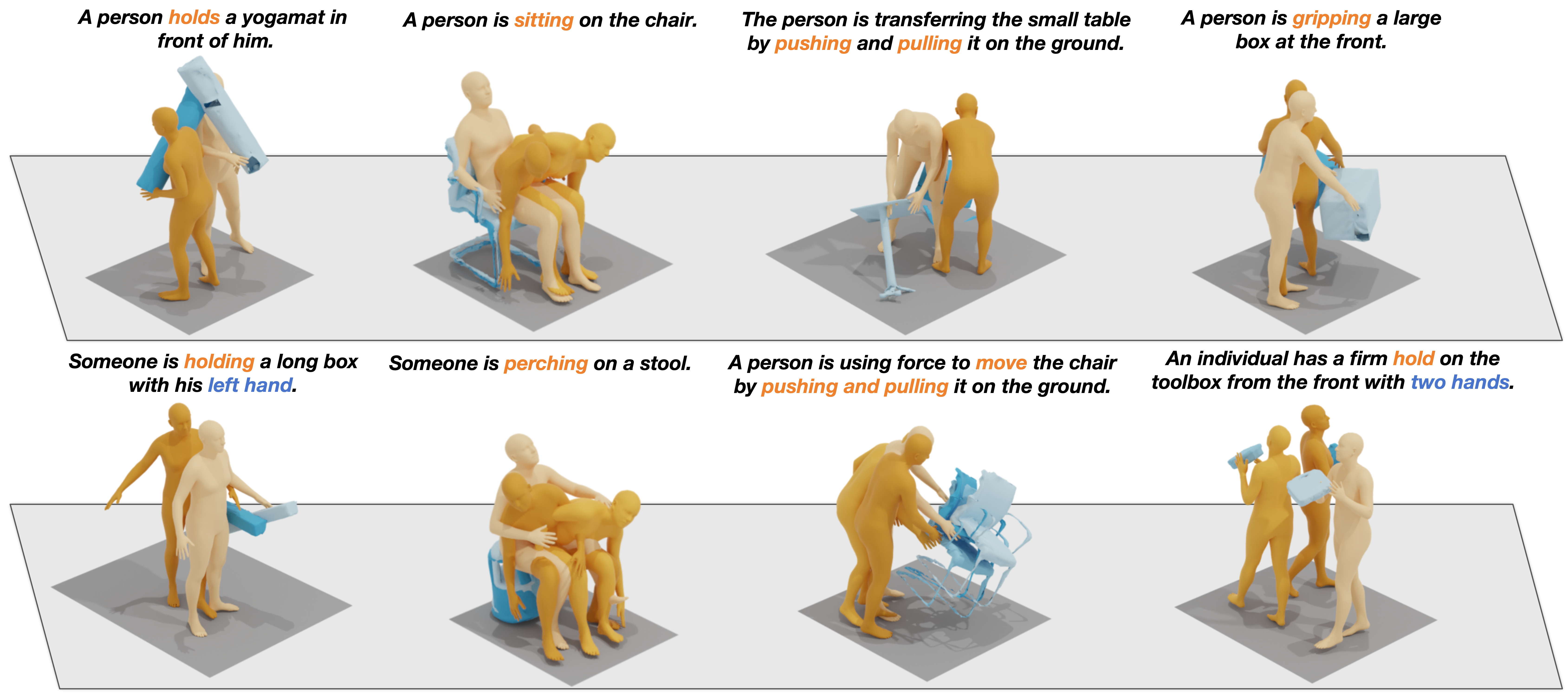

HOI-Diff: Text-Driven Synthesis of 3D Human-Object Interactions using Diffusion Models

Xiaogang Peng*

Yiming Xie*

Zizhao Wu

Varun Jampani

Deqing Sun

Huaizu Jiang

Northeastern University, Hangzhou Dianzi University

Stability AI, Google Research

arXiv 2023

- Release the dataset preparation and annotations.

- Release the main implementation code.

- Release the evaluation code and the pretrained models.

- Release the demo video.

For more information about the implementation, see README.

Setup and download

Install ffmpeg (if not already installed):

```shell
sudo apt update
sudo apt install ffmpeg
```

Update the ffmpeg path in ./data_loaders/behave/utils/plot_script.py:

```python
# Line 5
plt.rcParams['animation.ffmpeg_path'] = 'your_ffmpeg_path/bin/ffmpeg'
```
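If you would rather not hard-code the path, a minimal sketch is to resolve it with Python's shutil.which. The helpers find_ffmpeg and configure_matplotlib_ffmpeg below are hypothetical and not part of the repository; the sketch assumes you are free to call them before any animation is saved.

```python
import shutil
from typing import Optional


def find_ffmpeg(candidate: Optional[str] = None) -> Optional[str]:
    """Return a path to an executable ffmpeg, or None if none is found.

    `candidate` (e.g. 'your_ffmpeg_path/bin/ffmpeg') is checked first;
    otherwise the directories on PATH are searched for 'ffmpeg'.
    """
    if candidate is not None:
        resolved = shutil.which(candidate)
        if resolved is not None:
            return resolved
    return shutil.which("ffmpeg")


def configure_matplotlib_ffmpeg(candidate: Optional[str] = None) -> str:
    """Point matplotlib's animation writer at ffmpeg, like line 5 of plot_script.py."""
    import matplotlib  # imported lazily so find_ffmpeg() works without matplotlib

    path = find_ffmpeg(candidate)
    if path is None:
        raise FileNotFoundError("ffmpeg not found; install it first (see above)")
    # plt.rcParams is the same object as matplotlib.rcParams.
    matplotlib.rcParams["animation.ffmpeg_path"] = path
    return path
```

Calling configure_matplotlib_ffmpeg() once at import time of the plotting module would replace the hard-coded string; it fails loudly instead of silently writing no video when ffmpeg is missing.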

Set up the conda environment:

```shell
conda env create -f environment.yml
conda activate t2hoi
python -m spacy download en_core_web_sm
pip install git+https://github.com/openai/CLIP.git
```
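To confirm that the last two steps succeeded, a small check can look the packages up without importing them. This is a sketch under two assumptions: the spaCy model is importable as en_core_web_sm, and the CLIP repository installs a package named clip. The helper names are hypothetical.

```python
import importlib.util

# Import names assumed to be provided by the setup steps above: spaCy models
# install as importable packages, and the CLIP repository installs `clip`.
REQUIRED_MODULES = {
    "en_core_web_sm": "python -m spacy download en_core_web_sm",
    "clip": "pip install git+https://github.com/openai/CLIP.git",
}


def check_environment(modules=REQUIRED_MODULES):
    """Return {module_name: is_importable}, locating modules without importing them."""
    return {name: importlib.util.find_spec(name) is not None for name in modules}


def missing_setup_steps(modules=REQUIRED_MODULES):
    """Return the setup command to re-run for each module that cannot be found."""
    status = check_environment(modules)
    return [modules[name] for name, found in status.items() if not found]
```

Run it inside the activated t2hoi environment; print(missing_setup_steps()) should print an empty list once both steps have worked.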

Download dependencies:

```shell
bash prepare/download_smpl_files.sh
bash prepare/download_glove.sh
bash prepare/download_t2hoi_evaluators.sh
```

Please follow these instructions to install PointNet++.

MDM: Before training, please download the pre-trained model here, then unzip it and place it in ./checkpoints/.

HOI-DM and APDM: the pre-trained models will be released soon.

Train APDM

```shell
python -m train.train_affordance --save_dir ./save/afford_pred --dataset behave --save_interval 1000 --num_steps 20000 --batch_size 32 --diffusion_steps 500
```

Train HOI-DM

```shell
python -m train.hoi_diff --save_dir ./save/behave_enc_512 --dataset behave --save_interval 1000 --num_steps 20000 --arch trans_enc --batch_size 32
```
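The sampling commands below load ./save/behave_enc_512/model000020000.pt, i.e. the checkpoint at step 20000 of the --num_steps 20000 run. Assuming MDM-style checkpoint naming (model followed by the training step zero-padded to nine digits, written every --save_interval steps), a sketch for building or locating checkpoint paths might look like this; checkpoint_path and latest_checkpoint are hypothetical helpers.

```python
import re
from pathlib import Path
from typing import Optional, Union

# Assumed MDM-style naming: "model" + zero-padded 9-digit training step.
_CKPT_RE = re.compile(r"model(\d{9})\.pt")


def checkpoint_path(save_dir: Union[str, Path], step: int) -> Path:
    """Path of the checkpoint written at `step` (assumed naming scheme)."""
    return Path(save_dir) / f"model{step:09d}.pt"


def latest_checkpoint(save_dir: Union[str, Path]) -> Optional[Path]:
    """Return the model checkpoint with the highest step in save_dir, or None.

    Other files in the directory (e.g. optimizer states) are ignored.
    """
    best_step, best_path = -1, None
    for p in Path(save_dir).iterdir():
        m = _CKPT_RE.fullmatch(p.name)
        if m and int(m.group(1)) > best_step:
            best_step, best_path = int(m.group(1)), p
    return best_path
```

For example, checkpoint_path("./save/behave_enc_512", 20000) yields save/behave_enc_512/model000020000.pt, the --model_path used for sampling, provided the naming assumption holds.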

Generate from test set prompts

```shell
python -m sample.local_generate_obj --model_path ./save/behave_enc_512/model000020000.pt --num_samples 10 --num_repetitions 1 --motion_length 10 --multi_backbone_split 4 --guidance
```

Generate from your text file

```shell
python -m sample.local_generate_obj --model_path ./save/behave_enc_512/model000020000.pt --num_samples 10 --num_repetitions 1 --motion_length 10 --multi_backbone_split 4 --guidance
```
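With --num_samples 10 --num_repetitions 1, a run should produce one motion per (sample, repetition) pair. Assuming the outputs are indexed from 0 and follow the sample##_rep## pattern used for the rendering outputs later in this README, the expected identifiers can be enumerated as follows (expected_sample_ids is a hypothetical helper):

```python
from itertools import product


def expected_sample_ids(num_samples: int, num_repetitions: int) -> list:
    """Return the sample##_rep## prefixes one generation run is expected to produce.

    Assumes one motion per (sample, repetition) pair, indexed from 0 and
    zero-padded to two digits.
    """
    return [
        f"sample{s:02d}_rep{r:02d}"
        for s, r in product(range(num_samples), range(num_repetitions))
    ]
```

For the command above this gives ten identifiers, sample00_rep00 through sample09_rep00; raising --num_repetitions multiplies the count.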

Render SMPL mesh

To create an SMPL mesh per frame, run:

```shell
python -m visualize.render_mesh --input_path /path/to/mp4/stick/figure/file
```

This script outputs the following files to [YOUR_NPY_FOLDER]:

- sample##_rep##_smpl_params.npy - SMPL parameters (human_motion, thetas, root translations, human_vertices and human_faces)
- sample##_rep##_obj_params.npy - object parameters (object_motion, object_vertices and object_faces)
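Because each generated interaction yields one human file and one object file, downstream scripts typically need to pair them. A minimal sketch, assuming only the file names listed above (group_outputs and incomplete are hypothetical helpers):

```python
import re
from collections import defaultdict
from pathlib import Path

# File names described above: sample##_rep##_smpl_params.npy and
# sample##_rep##_obj_params.npy.
_OUT_RE = re.compile(r"(sample\d{2}_rep\d{2})_(smpl|obj)_params\.npy")


def group_outputs(npy_folder):
    """Map each sample##_rep## id to its {'smpl': Path, 'obj': Path} files."""
    groups = defaultdict(dict)
    for p in sorted(Path(npy_folder).iterdir()):
        m = _OUT_RE.fullmatch(p.name)
        if m:
            groups[m.group(1)][m.group(2)] = p
    return dict(groups)


def incomplete(groups):
    """Return ids that are missing either the human or the object file."""
    return sorted(k for k, v in groups.items() if set(v) != {"smpl", "obj"})
```

If, as in MDM's mesh export, each file stores a pickled dictionary (an assumption), its contents could then be read with np.load(path, allow_pickle=True).item().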

Notes:

- This script runs SMPLify and also requires a GPU (which can be specified with the --device flag).
- Important: do not change the original .mp4 path before running the script.
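To convert every generated stick-figure video in one go, the command can be built once per .mp4 file. This is a sketch that assumes --device takes an integer GPU index and that the script is launched from the repository root; render_mesh_commands is a hypothetical helper. It passes each .mp4 path exactly where it was generated, in line with the note above.

```python
import sys
from pathlib import Path


def render_mesh_commands(sample_dir, device=0):
    """Build one `python -m visualize.render_mesh` argv per .mp4 in sample_dir.

    The .mp4 files are referenced in place (never copied or moved), since the
    script expects the original path. `device` is assumed to be a GPU index.
    """
    return [
        [sys.executable, "-m", "visualize.render_mesh",
         "--input_path", str(mp4), "--device", str(device)]
        for mp4 in sorted(Path(sample_dir).glob("*.mp4"))
    ]
```

Each returned list can be passed to subprocess.run(cmd, check=True); running them sequentially avoids several SMPLify fits competing for the same GPU.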

Refer to TEMOS-Rendering motions for the Blender setup, then install the following dependencies:

```shell
YOUR_BLENDER_PYTHON_PATH/python -m pip install -r prepare/requirements_render.txt
```

Run the following command to render the SMPL meshes using Blender:

```shell
YOUR_BLENDER_PATH/blender --background --python render.py -- --cfg=./configs/render.yaml --dir=YOUR_NPY_FOLDER --mode=video --joint_type=HumanML3D
```

Optional parameters:

- --mode=video: render an MP4 video.
- --mode=sequence: render the whole motion in a single PNG image.
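When scripting the render step (for instance to produce both a video and a sequence image), a sketch that assembles the same argument list and validates the mode might look like this. blender_render_command is a hypothetical helper; the flags are exactly those of the command above, and everything after the bare "--" is passed by Blender to render.py untouched.

```python
VALID_MODES = ("video", "sequence")


def blender_render_command(blender, npy_folder, mode="video",
                           cfg="./configs/render.yaml", joint_type="HumanML3D"):
    """Build the argv for the Blender render command shown above.

    Blender stops parsing its own options at the bare "--"; the remaining
    arguments are forwarded to render.py via sys.argv.
    """
    if mode not in VALID_MODES:
        raise ValueError(f"mode must be one of {VALID_MODES}, got {mode!r}")
    return [
        str(blender), "--background", "--python", "render.py", "--",
        f"--cfg={cfg}", f"--dir={npy_folder}", f"--mode={mode}",
        f"--joint_type={joint_type}",
    ]
```

Building the list explicitly (rather than a shell string) keeps paths with spaces intact when passed to subprocess.run.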

This code stands on the shoulders of giants. We thank the authors of the following projects, on which our code is based:

BEHAVE, MLD, MDM, GMD, guided-diffusion, MotionCLIP, text-to-motion, actor, joints2smpl, MoDi.

If you find this repository useful for your work, please consider citing it as follows:

```bibtex
@article{peng2023hoi,
  title={HOI-Diff: Text-Driven Synthesis of 3D Human-Object Interactions using Diffusion Models},
  author={Peng, Xiaogang and Xie, Yiming and Wu, Zizhao and Jampani, Varun and Sun, Deqing and Jiang, Huaizu},
  journal={arXiv preprint arXiv:2312.06553},
  year={2023}
}
```