This repository contains the data and code for the paper "HalluDial: A Large-Scale Benchmark for Automatic Dialogue-Level Hallucination Evaluation."

The HalluDial dataset can be downloaded from here. After downloading, extract the contents into the `data` directory. The file structure should then look like this:

    HalluDial
    ├── data
    │   ├── spontaneous
    │   │   ├── spontaneous_train.json
    │   │   └── ...
    │   └── induced
    │       ├── induced_train.json
    │       └── ...
    └── ...

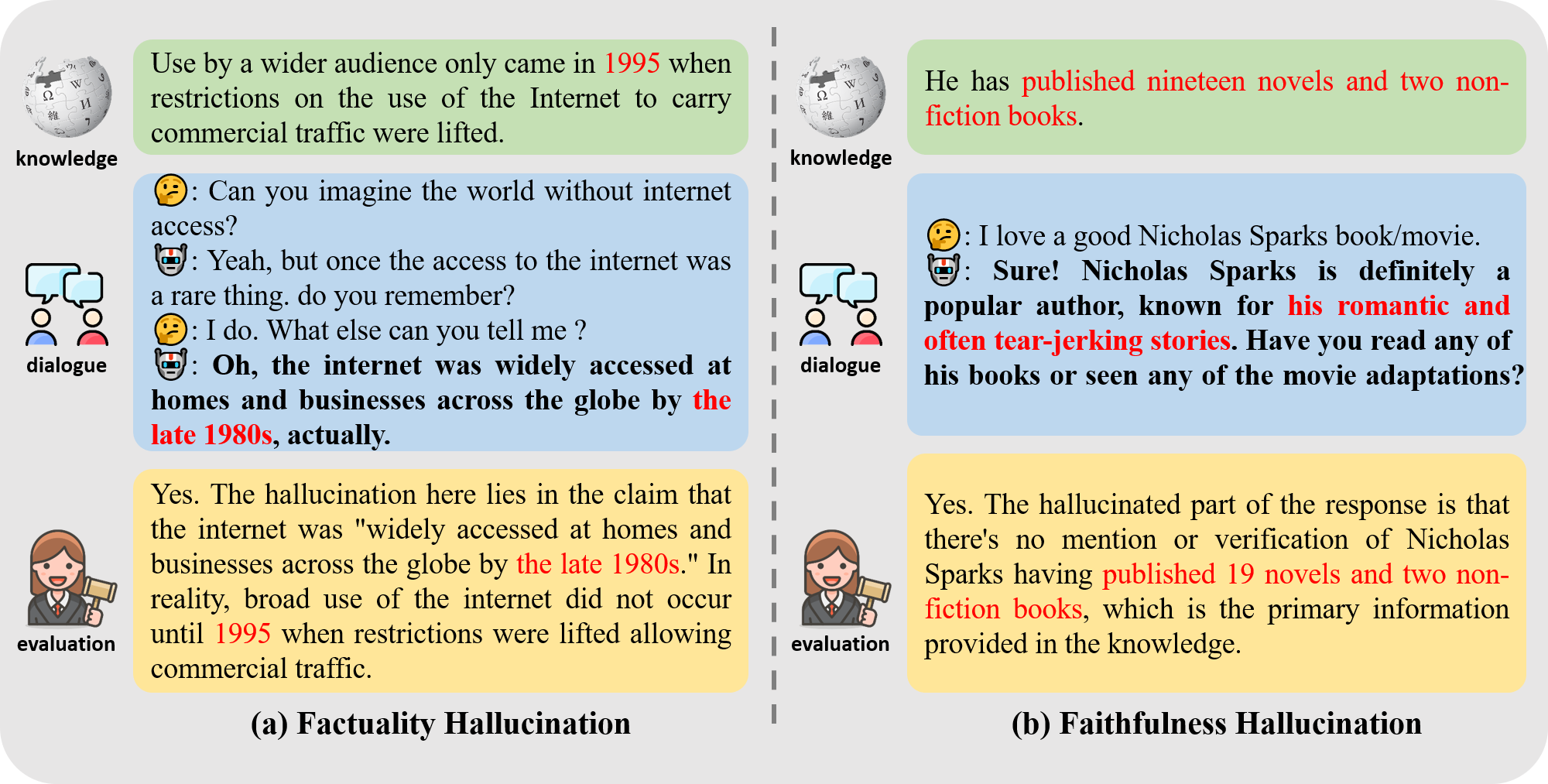

The HalluDial dataset covers two hallucination scenarios, spontaneous and induced, which correspond to two different data construction processes. Each scenario is further split into a training set and a test set. The data is provided as JSON files, one per partition (for example, `spontaneous_train.json` and `spontaneous_test.json`). The splits are sized as follows:

| Split | # Samples |
|---|---|
| train | 55071 |
| test | 36714 |
| total | 91785 |

| Split | # Samples |
|---|---|
| train | 33042 |
| test | 22029 |
| total | 55071 |
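
As a quick consistency check on the two tables above, the train and test counts in each table should add up to the reported total. The sketch below hard-codes the numbers from the tables. The keys `table_1` and `table_2` and the function name `check_split_sizes` are placeholders introduced here for illustration, since the tables do not name their scenario.

```python
# Split sizes copied from the two tables above.
# The keys "table_1" and "table_2" are placeholders, not official names.
split_sizes = {
    "table_1": {"train": 55071, "test": 36714, "total": 91785},
    "table_2": {"train": 33042, "test": 22029, "total": 55071},
}


def check_split_sizes(sizes):
    """Check train + test == total per table and return the train fraction."""
    fractions = {}
    for name, counts in sizes.items():
        assert counts["train"] + counts["test"] == counts["total"], name
        fractions[name] = counts["train"] / counts["total"]
    return fractions


train_fractions = check_split_sizes(split_sizes)
```

Both tables work out to roughly a 60/40 train/test split.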

Each JSON file contains a list of dialogues, where each dialogue is represented as a dictionary. Here is an example dialogue:

    {
        "dialogue_id": 0,
        "knowledge": "Use by a wider audience only came in 1995 when restrictions on the use of the Internet to carry commercial traffic were lifted.",
        "dialogue_history": "[Human]: Can you imagine the world without internet access? [Assistant]: Yeah, but once the access to the internet was a rare thing. do you remember? [Human]: I do. What else can you tell me ?",
        "turn": 1,
        "response": "Oh, the internet was widely accessed at homes and businesses across the globe by the late 1980s, actually.",
        "target": "Yes. The hallucination here lies in the claim that the internet was \"widely accessed at homes and businesses across the globe by the late 1980s.\" In reality, broad use of the internet did not occur until 1995 when restrictions were lifted allowing commercial traffic."
    }

where

- `dialogue_id`: a unique identifier for the dialogue. Matching dialogue IDs indicate that the knowledge and the dialogue history are the same.
- `knowledge`: the knowledge provided for the dialogue.
- `dialogue_history`: the dialogue history.
- `turn`: the turn in the dialogue.
- `response`: the response generated by the model.
- `target`: the hallucination evaluation result, including the results of hallucination detection, hallucination localization, and rationale provision.
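
Because samples with the same `dialogue_id` share their knowledge and dialogue history, it can be convenient to group them before analysis. The sketch below does that and also reads a coarse detection verdict from `target`. It assumes that `target` opens with "Yes" when the response is hallucinated (as in the example above) and with "No" otherwise. The "No" case and the helper names `detection_label` and `group_by_dialogue` are assumptions made for illustration and are not part of the repository.

```python
from collections import defaultdict


def detection_label(target):
    """Return "Yes" or "No" from the start of a target string, else None.

    Assumption: targets open with "Yes" (hallucinated) or "No" (not hallucinated).
    """
    head = target.strip().lower()
    if head.startswith("yes"):
        return "Yes"
    if head.startswith("no"):
        return "No"
    return None


def group_by_dialogue(samples):
    """Map each dialogue_id to the list of samples that share it."""
    groups = defaultdict(list)
    for sample in samples:
        groups[sample["dialogue_id"]].append(sample)
    return dict(groups)


# Tiny hand-made records that only mimic the documented fields.
samples = [
    {"dialogue_id": 0, "turn": 1, "target": "Yes. The hallucination here lies in ..."},
    {"dialogue_id": 0, "turn": 1, "target": "No. The response is consistent ..."},
    {"dialogue_id": 1, "turn": 2, "target": "Yes. ..."},
]
groups = group_by_dialogue(samples)
labels = [detection_label(s["target"]) for s in samples]
```

If real targets turn out to use a different opening, only `detection_label` needs to change.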

The data can be loaded the same way as any other JSON file. For example, in Python:

    import json


    def load_json(path):
        """Load one JSON partition, closing the file once it has been read."""
        with open(path, encoding="utf-8") as f:
            return json.load(f)


    spontaneous_dataset = {
        "train": load_json("data/spontaneous/spontaneous_train.json"),
        "test": load_json("data/spontaneous/spontaneous_test.json"),
    }

    induced_dataset = {
        "train": load_json("data/induced/induced_train.json"),
        "test": load_json("data/induced/induced_test.json"),
    }

It can be easier, however, to work with the dataset through the Hugging Face Datasets library:

    # pip install datasets
    from datasets import load_dataset

    spontaneous_dataset = load_dataset("FlagEval/HalluDial", "spontaneous")
    induced_dataset = load_dataset("FlagEval/HalluDial", "induced")

We provide example scripts to conduct meta-evaluations of the hallucination evaluation ability of Llama-2 on the HalluDial dataset.
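
For hallucination detection, a meta-evaluation comes down to comparing a judge model's Yes/No verdicts with the reference verdicts in the dataset. The following is a minimal sketch of that comparison and does not reproduce the logic of the provided scripts. The function name `detection_metrics` and the choice of accuracy, precision, recall, and F1 (with "Yes" as the positive class) are assumptions.

```python
def detection_metrics(predictions, references, positive="Yes"):
    """Compute accuracy, precision, recall, and F1 for binary verdicts."""
    if len(predictions) != len(references):
        raise ValueError("predictions and references must have the same length")
    pairs = list(zip(predictions, references))
    tp = sum(p == positive and r == positive for p, r in pairs)
    fp = sum(p == positive and r != positive for p, r in pairs)
    fn = sum(p != positive and r == positive for p, r in pairs)
    correct = sum(p == r for p, r in pairs)
    # Guard every ratio against an empty denominator.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    accuracy = correct / len(pairs) if pairs else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Made-up verdicts for illustration only.
references = ["Yes", "No", "Yes", "No"]
predictions = ["Yes", "Yes", "No", "No"]
metrics = detection_metrics(predictions, references)
```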

To install the required dependencies, run:

    pip install -r requirements.txt

To evaluate the hallucination detection performance, run:

    sh example/eval_detect.sh

To evaluate the hallucination localization and rationale provision performance, run:

    sh example/eval_rationale.sh

If you use the HalluDial dataset in your work, please consider citing our paper:

    @article{luo2024halludial,
      title={HalluDial: A Large-Scale Benchmark for Automatic Dialogue-Level Hallucination Evaluation},
      author={Luo, Wen and Shen, Tianshu and Li, Wei and Peng, Guangyue and Xuan, Richeng and Wang, Houfeng and Yang, Xi},
      journal={arXiv e-prints},
      pages={arXiv--2406},
      year={2024}
    }