This repo has the code that goes with this blog post on How to Best Use DuckDB with Apache Airflow. The blog post contains the important details about the process, this README will show you how to get the project up and running.

There are some specific prerequisites you need to run this repo:

-

A Docker Engine. Docker Desktop or Colima both work with the Astro CLI.

-

The Astro CLI

-

The data files need to be upload to an S3 (or S3 compliant service like Minio) that you have access to.

The files needed are hosted on the transtats site and the file names looks like:

On_Time_Reporting_Carrier_On_Time_Performance_1987_present_2002_1.zip. This is the On Time Reporting data for flights in the US. Thefetch_files.shscript included in this repo will fetch and unzip the files for 2018 - 2022. Run the script somewhere locally and take a break from doing computer things while it runs. You then need to upload the csv files to a folder on an S3 bucket somewhere.

You need to then update the MY_S3_BUCKET variable in both DAG files (dags/duckdb_process_dag.py and dags/duckdb_test_dag.py) with your S3 path. This is near the top of file, so it will be easy to find. Change this line:

MY_S3_BUCKET = 's3://jf-ml-data/flight_data/'

to you S3 bucket's path. Once that's done, you are good to go.

You also need to create a file named .env file in root of the project folder, with you AWS credentials:

AWS_ACCESS_KEY_ID='AKIAIOSFODNN7EXAMPLE'

AWS_SECRET_ACCESS_KEY='wJalrXUtnFEMI/K7MDENG/bPxRfiCYEXAMPLEKEY'

Here are the steps to follow to get this project running:

-

$ git clone https://github.com/fletchjeff/duckdb_demo -

$ cd duckdb_demo

$ astro dev start -

Once the astro cli start up process has completed, open the Airflow UI at http://localhost:8080/

User:

admin

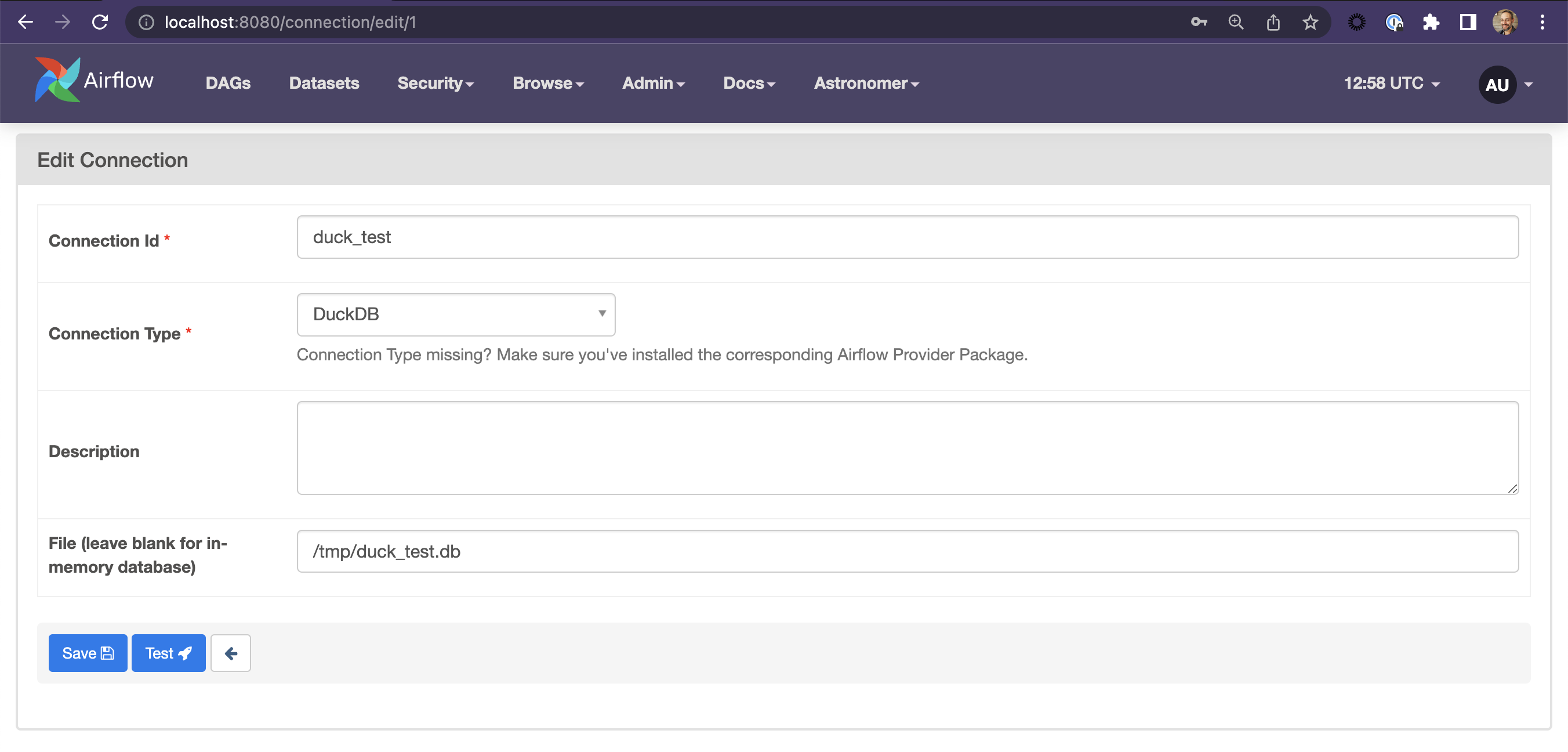

Password:adminThe first is to create the DuckDB connection. In the UI, go to Admin > Connections and create at DuckDB connection with the following details:

Connection Id :

duck_test

Connection Type :DuckDB

File :/tmp/duck_test.dbClick Save when you are done. Then go to the main DAGs page.

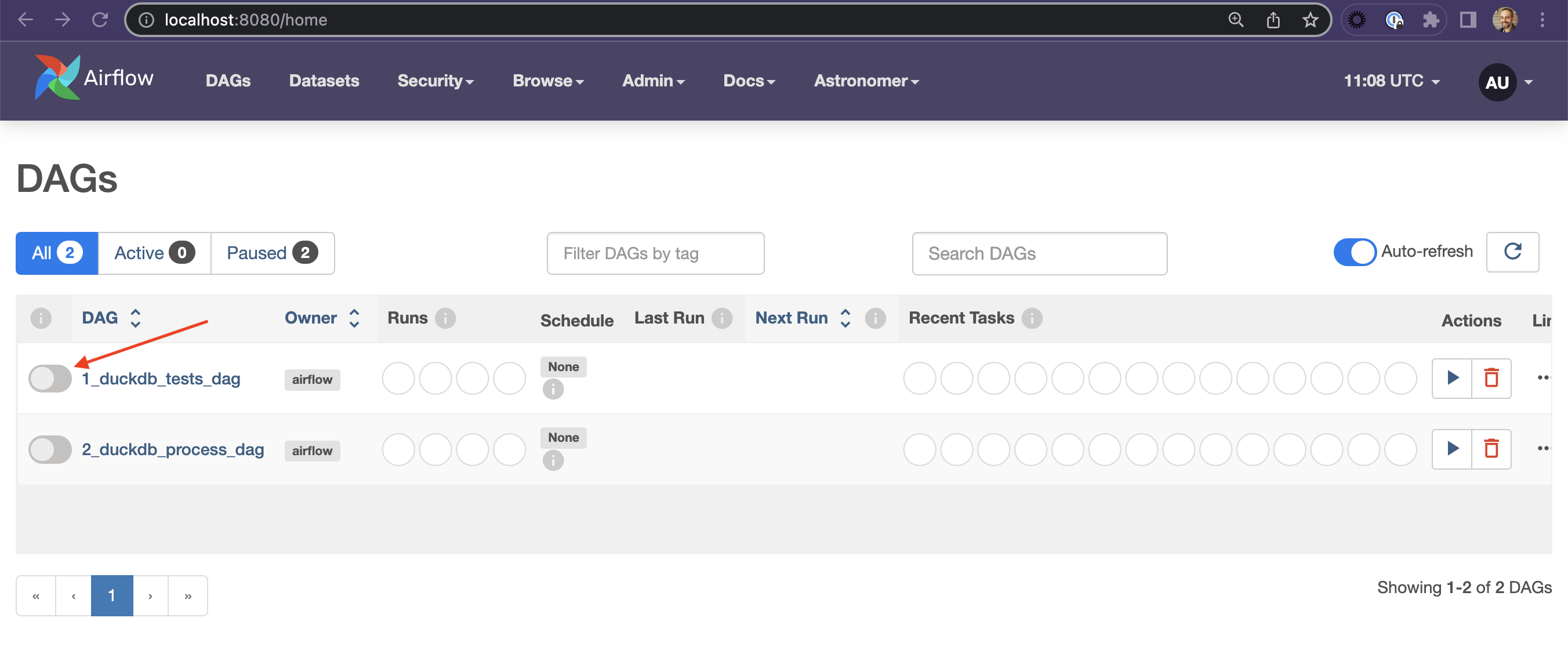

You should see 2 DAGs in the DAGs list, called

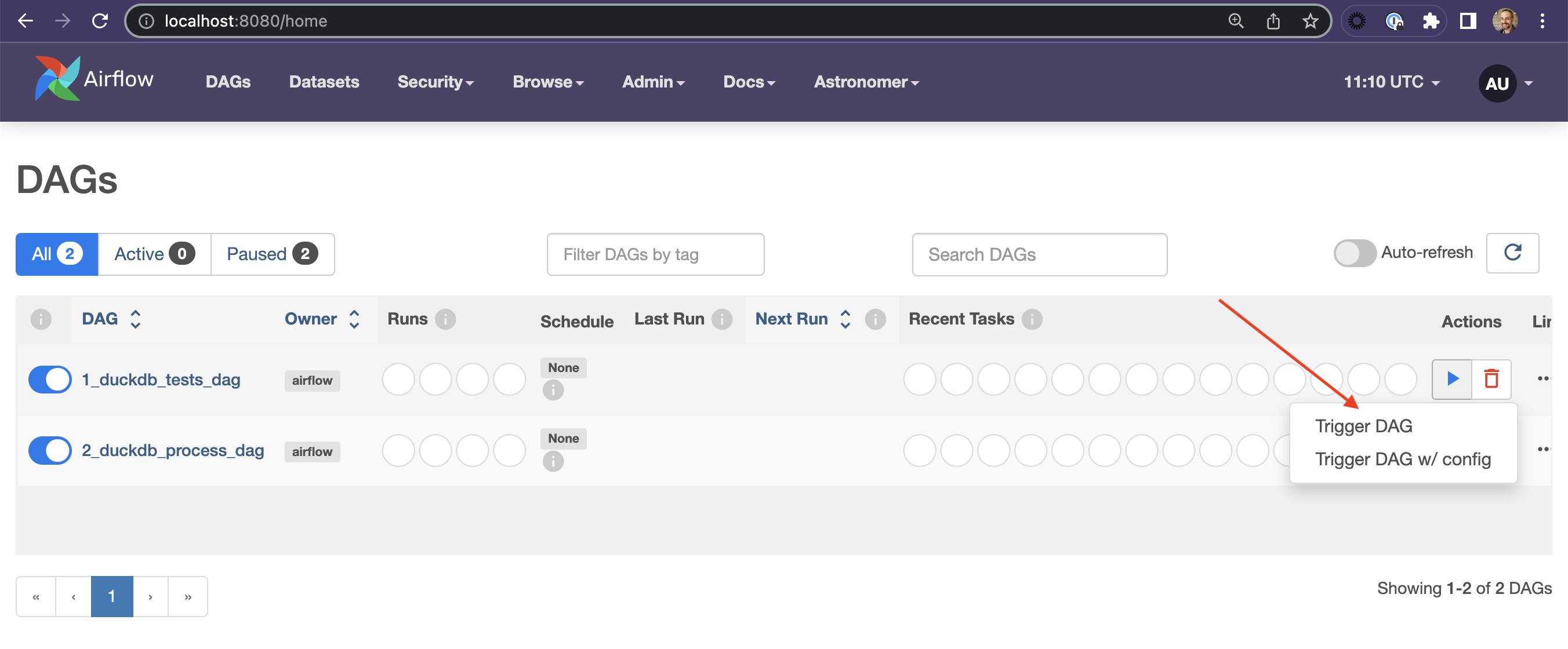

1_duckdb_test_dagand2_duckdb_process_dag. Make them both active by clicking the slider next to the name:You first need to run the

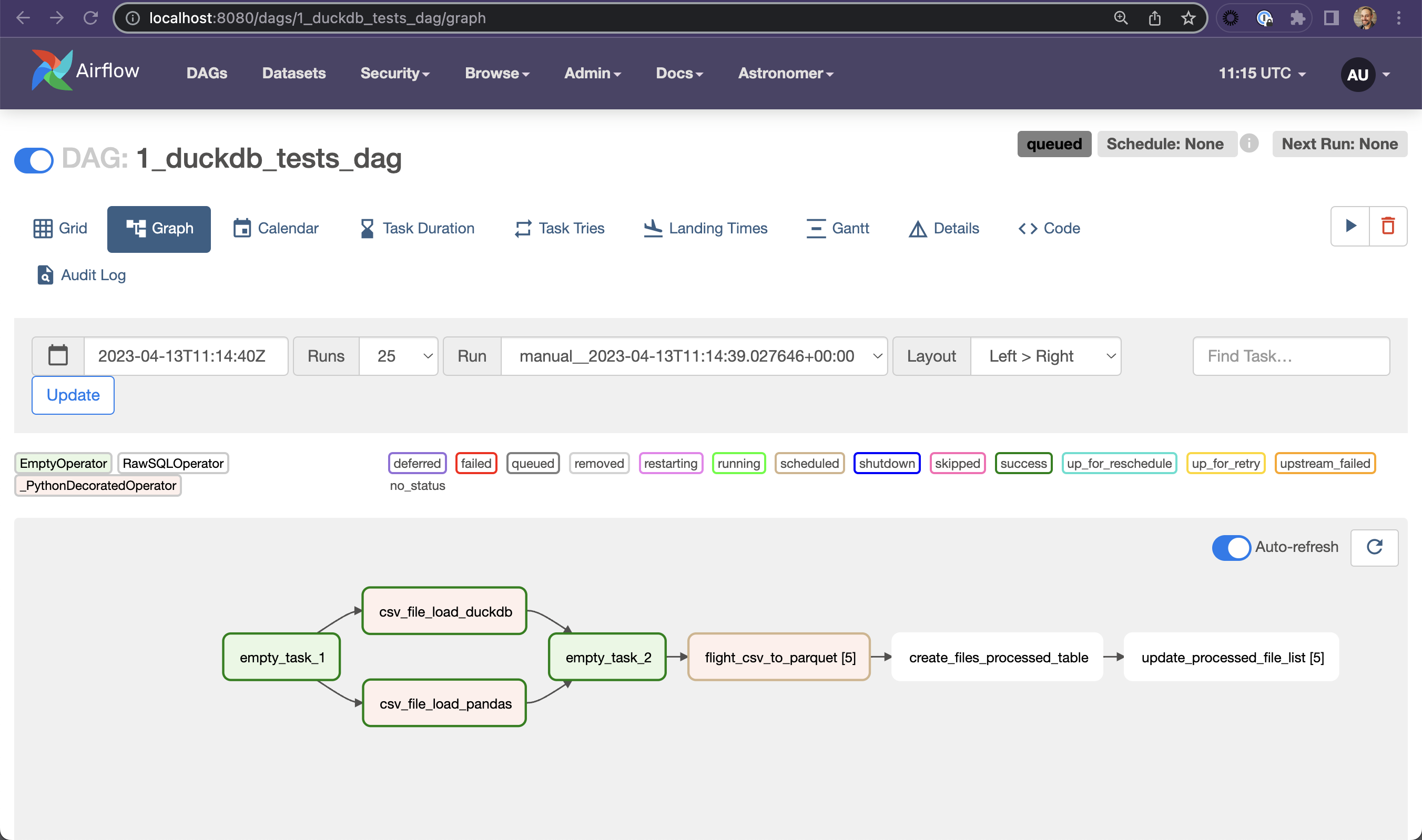

1_duckdb_test_dagDAG to create the parquet files used by the second DAG. Click the play button next to the1_duckdb_test_dagDAG's name to trigger the DAG run:From there you can click the DAG's name and then the Graph icon to see how it ran in the Graph view: