This is where I'm keeping track of everything I learn about Large Language Models. It's straightforward – notes, code, and links to useful resources.

- Notes: Quick thoughts, summaries, and explanations I've written down to better understand LLM concepts.

- Code: The actual code I've written while experimenting with LLMs. It's not always pretty, but it works (mostly).

- Resources: Links to articles, papers, and tutorials that have cleared things up for me. No fluff, just the good stuff.

This is where I keep my experiments and code snippets.

- 001-pytorch-tensors.ipynb - Basic experiments with PyTorch tensors.

- 002-pytorch-neuron.ipynb - Implementation of a single neuron from scratch and training it on the Iris dataset

- 003-pytorch-transformers.ipynb - Implementation of a transformer model from scratch

- 004-pytorch-data-science-basics.ipynb - Basic data science operations and ML with PyTorch

I needed somewhere to dump my brain as I dive into LLMs. Maybe it'll help someone else, maybe not. But it's helping me keep track of my progress and organize my thoughts.

Feel free to look around if you're into LLMs or just curious about what I'm learning. No promises, but you might find something useful.

This is the curriculum I'm following to learn about Large Language Models. It's a mix of PyTorch basics, LLM concepts, and real-world applications. The first draft of the study plan was generated by an LLM, and I'll be updating it as I go along.

Resources:

| Category | Title+Link | Comment |
|---|---|---|
| Study | How I got into deep learning | Vikas Paruchuri's journey into deep learning and AI. |

Most of my notes will be in the form of notebooks, and I will link them in each section. I will also write a short summary of the key points I've learned in each section.

At the moment I prefer to use PyCharm Pro as my dev environment. Its benefits are support for virtual environments (venv) and notebooks, plus full IDE support (with GitHub Copilot). If you want to run any of my code, set up and activate a virtual environment, then install the required packages with:

`pip install -r requirements.txt`

Alternatively, follow these installation guides.

I am a software engineer and already know how to code. But I am new to the PyTorch library, and I want to become familiar and fluent with it before I dive deeper into LLMs. If you don't know how to program, I would recommend taking at least a short introductory course in Python before continuing.

If you look at the tools and libraries used to build neural networks, you'll quickly discover that there are many choices. You will also see that PyTorch is one of the most popular libraries, and its popularity is still growing. So that is the library I picked to start with. For now I am not going to worry about the other choices or whether I need to know them. I'll focus on PyTorch and expand later when I need to.

Vikas Paruchuri said this about proficiency: "You should get to a point where you can code up any of the main neural networks architectures in plain numpy". Since PyTorch tensors are very similar to NumPy arrays, this will be my goal. Now let's get good with PyTorch tensors.

I did a bit of searching to find out how PyTorch tensors and NumPy arrays differ and how they are similar. Here is what I found:

PyTorch tensors and NumPy arrays are both powerful tools widely used in the field of data science and machine learning, especially for array computing and handling large datasets. Despite their similarities, there are fundamental differences, especially in how they are used within the deep learning context.

- Data Structure: Both PyTorch tensors and NumPy arrays provide efficient data structures for storing and manipulating numerical data in multi-dimensional arrays. They offer a wide range of functionality for array manipulation, such as reshaping, slicing, and broadcasting.

- API Overlap and Interoperability: The PyTorch and NumPy APIs overlap significantly. This makes it relatively easy to switch between the two or to use both in the same project. PyTorch tensors can be easily converted to and from NumPy arrays. Functions for operations like addition, multiplication, and transposition have similar calling conventions.

- Memory Sharing: PyTorch can interoperate with NumPy through memory sharing. CPU tensors can be converted to NumPy arrays and vice versa (for example with `torch.from_numpy()` and `Tensor.numpy()`) without copying the data, so a change made through one is visible through the other. This allows efficient memory usage when moving between the two during preprocessing or analysis.

- Computation Graphs and Backpropagation: PyTorch tensors are integrated with a powerful automatic differentiation engine, Autograd. This makes them suitable for building neural networks, where gradients are computed for optimization. NumPy, on the other hand, does not support automatic differentiation. It is typically used for more straightforward numerical computations that don't need to track gradients.

- GPU Support: PyTorch tensors are designed to switch easily between CPU and GPU operations, which is crucial for training deep learning models efficiently. NumPy primarily operates on the CPU, so operations on NumPy arrays do not benefit from GPU acceleration.

- Mutability and In-place Operations: Both PyTorch tensors and NumPy arrays are mutable. In both libraries, ordinary operations like `a + b` create a new result, and in-place operators such as `+=` modify the existing data. PyTorch additionally offers in-place methods with a trailing underscore (e.g. `add_()`), and many NumPy functions accept an `out=` argument for writing the result into an existing array.

- Designed for Deep Learning: PyTorch is inherently designed for deep learning applications. It provides functionality like tensor operations on GPUs and distributed computing, which is specifically tailored for training neural networks. NumPy is versatile in handling numerical data, but it lacks these deep learning-specific enhancements.

- Dynamic vs Static Computing: PyTorch uses dynamic computational graphs, meaning the graph is built at runtime. This is beneficial for models whose computation cannot be completely described as a static graph beforehand. NumPy doesn't involve computational graphs at all; it simply executes array computations.
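
To check a few of these points for myself, here is a small sketch. It assumes a recent PyTorch and NumPy install; the values are arbitrary. It shows four things:

- memory sharing via `torch.from_numpy()` and `Tensor.numpy()`
- in-place updates in both libraries
- a gradient computed by Autograd
- moving a tensor to a GPU only if one is available

```python
import numpy as np
import torch

# NumPy -> PyTorch: torch.from_numpy shares memory with the array (CPU only)
arr = np.array([1.0, 2.0, 3.0])
t = torch.from_numpy(arr)
arr[0] = 10.0                 # change the array ...
print(t)                      # ... and the tensor sees the change

# PyTorch -> NumPy: Tensor.numpy() on a CPU tensor shares memory as well
t2 = torch.zeros(3)
view = t2.numpy()
t2.add_(1.0)                  # trailing underscore = in-place method
print(view)                   # [1. 1. 1.]

# NumPy supports in-place updates too
a = np.ones(3)
a *= 2.0                      # in-place operator
np.multiply(a, 2.0, out=a)    # in-place via the out= argument, a is now [4. 4. 4.]

# Autograd records the operations and computes gradients
x = torch.tensor(3.0, requires_grad=True)
y = x ** 2 + 2 * x            # dy/dx = 2x + 2
y.backward()
print(x.grad)                 # tensor(8.)

# Move a tensor to the GPU only if one is available
device = "cuda" if torch.cuda.is_available() else "cpu"
t_on_device = t2.to(device)
print(t_on_device.device)
```

The memory sharing only holds for CPU tensors. Calling `.numpy()` on a GPU tensor raises an error, so the tensor has to be moved back with `.cpu()` first.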

NumPy is excellent for tasks that require straightforward numerical computation in science and engineering but do not need gradients or massive parallelism offered by GPUs. PyTorch is preferable when developing complex models that require gradients, need to run on GPUs for performance, or when the models involve dynamic changes in the computation process.

While PyTorch tensors and NumPy arrays share many similarities in terms of their core functionality as n-dimensional arrays, PyTorch tensors are specifically designed for deep learning and machine learning applications, with features like automatic differentiation and GPU support, which make them more suitable for these tasks compared to the more general-purpose NumPy arrays.

Since we are going to get good with LLMs, PyTorch sounds like just what we need. Let's get into it in the next section.

I created a Jupyter Notebook 001-pytorch-tensors.ipynb that contains all of my basic experiments with PyTorch tensors.

I like to keep my notes in a question answering format because it helps with retrieval and interview preparation at the same time.

| Question | Answer |
|---|---|
| What is a tensor? | A tensor is a multi-dimensional array that stores numerical data for computations (very similar to a NumPy array or a TensorFlow tensor). |
| What is a tensor with rank 0? | A 0-dimensional tensor is a scalar that represents a single numerical value. |
| What is a tensor with rank 1? | A 1-dimensional tensor is a vector that represents a list of numerical values. |
| What is a tensor with rank 2? | A 2-dimensional tensor is a matrix that represents a table of numerical values. |
| What is broadcasting? | Broadcasting is a technique in PyTorch that allows element-wise operations between tensors of different (but compatible) shapes, without manually reshaping or duplicating data. |
| When is a PyTorch tensor "broadcastable"? | Rule 1: Each tensor has at least one dimension. Rule 2: When iterating over the dimension sizes, starting at the trailing dimension, the dimension sizes must be equal, or one of them must be 1, or one of them must not exist. |
| Why does the choice of data type for a tensor matter? | Choosing the right one is important because it influences memory usage, performance, and numerical precision. |
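
The broadcasting rules and the data type question can both be checked with a few lines of PyTorch. This is a minimal sketch with made-up shapes:

- `torch.broadcast_shapes()` computes the resulting shape without allocating any data.
- `element_size()` returns the number of bytes per element.

```python
import torch

# Shapes are compared from the trailing dimension backwards:
#   a: (3, 1)
#   b:    (4,)   -> treated as (1, 4)
# every pair is equal or contains a 1, so the result has shape (3, 4)
a = torch.arange(3).reshape(3, 1)
b = torch.arange(4)
c = a + b
print(c.shape)                                # torch.Size([3, 4])
print(torch.broadcast_shapes((3, 1), (4,)))   # torch.Size([3, 4])

# (2, 3) and (2,) are not broadcastable: trailing sizes 3 and 2 differ and neither is 1
try:
    torch.ones(2, 3) + torch.ones(2)
except RuntimeError as err:
    print("not broadcastable:", err)

# Memory per element depends on the data type
for dtype in (torch.float64, torch.float32, torch.float16, torch.int8):
    x = torch.zeros(1000, dtype=dtype)
    print(dtype, x.element_size() * x.nelement(), "bytes")   # 8000, 4000, 2000, 1000
```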

| Category | Title | Comment |
|---|---|---|
| Coding | 4.3 Vectors, Matrices, and Broadcasting | A YouTube video by Sebastian Raschka |
| Coding | Broadcasting Semantics in PyTorch | Explains how/when broadcasting happens |
| Coding | PyTorch Tensor Basics | Basic tensor operations in PyTorch with explanations |

- Transformer models

  - Architecture of Transformer Models: Attention mechanisms, multi-head attention, positional encoding, feed-forward networks.

  - Pre-trained Models Overview: GPT (Generative Pre-trained Transformer), BERT (Bidirectional Encoder Representations from Transformers), and variants (RoBERTa, T5, etc.).

  - Tokenization and Embeddings: WordPiece, SentencePiece, BPE (Byte Pair Encoding), contextual embeddings.

  - Language Modeling: Unsupervised learning, predicting the next word, understanding context.

  - Evaluation Metrics: Perplexity, BLEU score, ROUGE, F1 score, accuracy, precision, recall.

Q: What are homogenized models, and why are transformers homogenized models?

A: Homogenized models are designed to be highly adaptable across a wide range of tasks without needing specific task-oriented tuning. Transformers are considered homogenized models because they use the same model architecture to perform various NLP tasks effectively. They leverage their ability to process sequences of data in parallel and to understand context without task-specific adjustments.

Q: What are foundation models in the context of transformers?

A: A foundation model is a model that has been trained on very large, broad datasets (e.g. billions of records) and has a very large number of parameters (often billions). It can then be adapted to a wide range of tasks, often with little or no further fine-tuning.

Q: When should one use traditional NLP methods, and when are transformers the better choice for NLP tasks?

A: Traditional NLP methods are useful when working with smaller datasets or when computational resources are limited. They are also beneficial when the task calls for simpler models that are easier to interpret. Transformers are better in three cases: when dealing with large datasets, when the task requires an understanding of context, or when it benefits from deeper, more complex patterns in the data.

Q: What are transformer models in the context of Industry 4.0?

A: In Industry 4.0, transformer models are used to automate complex decision-making processes. They do this by analyzing vast amounts of data from various sources, such as sensors, machines, and production lines. They enhance predictive maintenance, quality control, and supply chain management through advanced NLP and machine learning techniques.

Q: Why do we say that a feature of transformers is a high level of homogenization?

A: Transformers exhibit a high level of homogenization because they apply the same architecture to process various types of data across multiple tasks. This enables consistent performance and facilitates machine-to-machine connections in dynamic environments like Industry 4.0.

Q: What are some examples of foundation models?

A: Examples of foundation models include GPT-3 by OpenAI, Google's BERT, Facebook’s RoBERTa, and Microsoft’s Turing-NLG.

Q: Why can it be that some models do not reach the homogenization level of foundation models?

A: Some models may not reach the homogenization level of foundation models because of limitations that keep them from generalizing well across different tasks. Typical limitations are training data that lacks diversity, limited computational resources, or insufficient training methodologies.

Q: What is a stochastic model, and how does that relate to LLMs?

A: A stochastic model is one whose output involves randomness. LLMs (large language models) like Codex are stochastic in this sense. They predict a probability distribution over the next token and sample from it, so the same prompt can produce different outputs. This can be useful for tasks like code generation, where multiple correct solutions can exist.

Q: What is a sequence model?

A: A sequence model is a type of AI model that processes sequences of data where the order of the inputs is important, such as sentences or time series. It learns to predict elements in the sequence, to understand context, or to generate new sequences based on learned patterns.

Q: In the context of NLP, what are Markov Chains and Markov (decision) processes, and what are they used for?

A: In NLP, Markov Chains are used to model the probabilities of sequences of words or phrases. They assume that the probability of each item depends only on the previous item. Markov decision processes extend this concept to decision-making: transitions between states depend not only on the current state but also on the action taken. This makes them useful in conversational agents and other sequential decision-making tasks.
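
A first-order Markov chain over words is easy to sketch in plain Python. This toy version uses a made-up corpus and function names chosen for illustration. It records which words follow each word. It then generates text by repeatedly picking a random successor of the current word only.

```python
import random
from collections import defaultdict

def build_bigram_chain(text):
    """Map each word to the list of words observed right after it (repeats encode frequency)."""
    words = text.split()
    chain = defaultdict(list)
    for current, following in zip(words, words[1:]):
        chain[current].append(following)
    return chain

def generate_text(chain, start, length, seed=None):
    """Random walk over the chain: the next word depends only on the current word."""
    rng = random.Random(seed)
    word, output = start, [start]
    for _ in range(length - 1):
        followers = chain.get(word)
        if not followers:            # no observed successor: stop early
            break
        word = rng.choice(followers)
        output.append(word)
    return output

corpus = "the cat sat on the mat the cat ate the fish"   # toy corpus
chain = build_bigram_chain(corpus)
print(chain["the"])                                        # ['cat', 'mat', 'cat', 'fish']
print(" ".join(generate_text(chain, "the", 6, seed=42)))
```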

Q: What are RNNs good for or used for? Give examples.

A: RNNs (Recurrent Neural Networks) are particularly good for tasks where the order and context of the input data matter, such as text generation, speech recognition, and time series prediction. They excel at handling sequences where the current input depends on the previous one.
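
This sketch shows the "current input depends on the previous one" idea in code, using PyTorch's `nn.RNN` with arbitrary sizes and random data. The loop at the end recomputes the final hidden state by hand with the update rule documented for `nn.RNN` (default tanh nonlinearity). It shows that each step combines the current input with the previous hidden state.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
rnn = nn.RNN(input_size=8, hidden_size=16, batch_first=True)   # tanh nonlinearity by default
x = torch.randn(2, 5, 8)            # (batch, seq_len, features), hypothetical data
output, h_n = rnn(x)
print(output.shape)                 # torch.Size([2, 5, 16]): hidden state at every step
print(h_n.shape)                    # torch.Size([1, 2, 16]): final hidden state

# The same recurrence written out by hand: h_t depends on x_t AND on h_{t-1}
h = torch.zeros(2, 16)
for t in range(x.shape[1]):
    h = torch.tanh(x[:, t] @ rnn.weight_ih_l0.T + rnn.bias_ih_l0
                   + h @ rnn.weight_hh_l0.T + rnn.bias_hh_l0)
print(torch.allclose(h, h_n[0], atol=1e-5))   # True
```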

Q: Can CNNs be applied to text? How?

A: Yes, CNNs (Convolutional Neural Networks) can be applied to text by treating segments of words or characters as spatial dimensions, similar to how they treat regions in an image. This allows them to identify patterns like word groupings and sentence fragments, which is useful in tasks like sentiment analysis and topic classification.
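
One common way to apply a CNN to text is to embed the tokens and slide a 1-D convolution over the sequence. This sketch shows the shapes involved; the vocabulary size, dimensions, and random token ids are all hypothetical. With a kernel size of 3, each feature only sees a window of 3 neighboring tokens. That local window is what makes long-range dependencies hard for CNNs.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
vocab_size, embed_dim, num_classes = 100, 32, 2      # hypothetical sizes
tokens = torch.randint(0, vocab_size, (4, 20))       # batch of 4 sequences, 20 token ids each

embedding = nn.Embedding(vocab_size, embed_dim)
conv = nn.Conv1d(in_channels=embed_dim, out_channels=64, kernel_size=3)  # window of 3 tokens
classifier = nn.Linear(64, num_classes)

x = embedding(tokens)                  # (4, 20, 32)
x = x.transpose(1, 2)                  # Conv1d expects (batch, channels, length): (4, 32, 20)
features = torch.relu(conv(x))         # (4, 64, 18): one feature vector per 3-token window
pooled = features.max(dim=2).values    # max over all positions: (4, 64)
logits = classifier(pooled)            # (4, 2), e.g. sentiment classes
print(logits.shape)
```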

Q: What is LeNet-5 from Yann LeCun, and why is it well known?

A: LeNet-5, developed by Yann LeCun, is one of the earliest convolutional neural networks, and it significantly influenced the development of deep learning. It was initially designed for digit recognition. It is well known for demonstrating the effectiveness of CNNs in practical applications, which led to the broader adoption of deep learning in many fields.

Q: Why can't CNNs deal well with long-term dependencies in long and/or complex sequences of text?

A: CNNs struggle with long-term dependencies in text because their convolutional filters typically capture local patterns within a fixed window size. This makes it difficult to maintain contextual information over longer text sequences without extensive layering or large receptive fields, which can be computationally inefficient.

Q: Are there recurrences in transformer models? A: No, recurrence has been abandoned in the transformer architecture.

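Q: What type of architecture is a transformer? A: The original transformer is an encoder-decoder architecture. Many later models use only one half, e.g. BERT is encoder-only and GPT is decoder-only.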

Q: What is the encoder, and what is the decoder responsible for in a transformer? A: The encoder is responsible for creating a rich context representation of the input text, and the decoder is responsible for using that to generate the next output based on the previous outputs.

Q: What replaced the recurrence of RNNs and LSTMs (and the convolutions of CNNs) in transformers? A: The attention mechanism.

Q: How many layers did the encoder stack of the original transformer have (from the "Attention Is All You Need" paper)? A: The encoder consisted of 6 layers. Each layer had a multi-head self-attention sublayer and a feed-forward sublayer. Each sublayer was wrapped in a residual connection followed by layer normalization.
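
An encoder layer like this can be sketched with PyTorch building blocks. This is my own minimal version, built on three assumptions:

- the post-norm arrangement LayerNorm(x + Sublayer(x))
- the sizes from the paper (d_model = 512, 8 heads, feed-forward size 2048)
- no embedding or positional encoding step

PyTorch also ships `nn.TransformerEncoderLayer` and `nn.TransformerEncoder`, which are the better choice for real use.

```python
import torch
import torch.nn as nn

class EncoderLayer(nn.Module):
    """One post-norm encoder layer: each sublayer is wrapped as LayerNorm(x + Sublayer(x))."""

    def __init__(self, d_model=512, num_heads=8, d_ff=2048, dropout=0.1):
        super().__init__()
        self.self_attn = nn.MultiheadAttention(d_model, num_heads, dropout=dropout, batch_first=True)
        self.feed_forward = nn.Sequential(
            nn.Linear(d_model, d_ff), nn.ReLU(), nn.Linear(d_ff, d_model)
        )
        self.norm1 = nn.LayerNorm(d_model)
        self.norm2 = nn.LayerNorm(d_model)
        self.dropout = nn.Dropout(dropout)

    def forward(self, x):                                # x: (batch, seq_len, d_model)
        attn_out, _ = self.self_attn(x, x, x)            # query, key, value all come from x
        x = self.norm1(x + self.dropout(attn_out))       # residual connection + layer norm
        x = self.norm2(x + self.dropout(self.feed_forward(x)))
        return x

# Six identically structured layers, each with its own parameters
encoder = nn.Sequential(*[EncoderLayer() for _ in range(6)])
out = encoder(torch.randn(2, 10, 512))                   # hypothetical embedded input
print(out.shape)                                         # torch.Size([2, 10, 512])
```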

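Q: What is a difference between the encoder and decoder stack? A: Each decoder layer has an additional sublayer. Its self-attention sublayer is masked, so a position cannot attend to later positions. The extra multi-head attention sublayer attends over the output of the encoder stack.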

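Q: What is multi-head attention? A: Instead of a single attention function, the transformer runs multiple attention heads in parallel (8 in the original transformer). Each head has its own learned projections of the queries, keys, and values. The outputs of all heads are concatenated and projected back to the model dimension.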

Q: What do the attention heads learn? A: Each attention head can learn a different perspective on the same input sequence, for example by focusing on different relationships between tokens.

Q: With what has recurrence been replaced in transformer models? A: The recurrence we know from RNNs and LSTMs has been replaced by the attention mechanism in transformers.

Q: Are the layers in the encoder stack of the original transformer identical? A: Yes, in structure. Each layer consists of the same sublayers (multi-head attention + feed-forward sublayer), although each layer learns its own weights. Before the first layer, the input embedding is combined with the positional encoding of the inputs, and the result is fed into the 1st layer.
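
The positional encoding that gets combined with the input embeddings before layer 1 can be sketched as follows. This assumes the sinusoidal encoding from the original paper (learned positional embeddings are a common alternative) and an even `d_model`. The token embeddings are random placeholders.

```python
import math
import torch

def sinusoidal_positional_encoding(seq_len, d_model):
    """PE(pos, 2i) = sin(pos / 10000^(2i/d_model)), PE(pos, 2i+1) = cos(same angle)."""
    position = torch.arange(seq_len, dtype=torch.float32).unsqueeze(1)      # (seq_len, 1)
    div_term = torch.exp(
        torch.arange(0, d_model, 2, dtype=torch.float32) * (-math.log(10000.0) / d_model)
    )                                                                      # 1 / 10000^(2i/d_model)
    pe = torch.zeros(seq_len, d_model)
    pe[:, 0::2] = torch.sin(position * div_term)    # even dimensions
    pe[:, 1::2] = torch.cos(position * div_term)    # odd dimensions
    return pe

token_embeddings = torch.randn(10, 512)             # hypothetical embeddings for 10 tokens
layer1_input = token_embeddings + sinusoidal_positional_encoding(10, 512)
print(layer1_input.shape)                           # torch.Size([10, 512])
```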

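Q: Why do we speak of self-attention when we talk about transformers? A: Because the queries, keys, and values are all computed from the same sequence, so each token attends to the other tokens of its own input. In the decoder's masked self-attention, a query is only matched against the keys of earlier positions.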
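
Here is a minimal single-sequence sketch of scaled dot-product self-attention, softmax(QK^T / sqrt(d_k)) V. The queries, keys, and values are all projected from the same input `x`. The projection matrices are random placeholders rather than trained weights. The optional `causal` flag adds the decoder-style mask that hides later positions.

```python
import math
import torch

def self_attention(x, w_q, w_k, w_v, causal=False):
    """Scaled dot-product self-attention for one sequence x of shape (seq_len, d_model)."""
    q, k, v = x @ w_q, x @ w_k, x @ w_v            # queries, keys and values all come from x
    scores = q @ k.T / math.sqrt(k.shape[-1])      # (seq_len, seq_len) query-key similarities
    if causal:
        # decoder-style mask: position i may only attend to positions <= i
        seq_len = x.shape[0]
        mask = torch.triu(torch.ones(seq_len, seq_len), diagonal=1).bool()
        scores = scores.masked_fill(mask, float("-inf"))
    weights = torch.softmax(scores, dim=-1)        # each row sums to 1
    return weights @ v, weights

torch.manual_seed(0)
seq_len, d_model, d_k = 4, 8, 8
x = torch.randn(seq_len, d_model)                                  # hypothetical embedded tokens
w_q, w_k, w_v = (torch.randn(d_model, d_k) for _ in range(3))      # hypothetical projections
out, weights = self_attention(x, w_q, w_k, w_v, causal=True)
print(out.shape)    # torch.Size([4, 8])
print(weights)      # entries above the diagonal are 0: no token looks at a later token
```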

Q: What is the motivation for the architecture of the transformer model? A: To enable an industrial approach to deep learning. For a start, the architecture fits hardware optimization requirements very well. For example, the stacked structure of transformers allows for domain-specific optimized hardware that requires less floating-point precision.

Q: What is a stack in the context of transformer architectures? A: A stack consists of n layers of the neural network, and it can be either an encoder or a decoder. A stack runs from the bottom (layer 1) to the top (layer n). During that process, each layer learns something that it passes on to the next layer, similar to how human memory works.

Q: What are sublayers? A: Each layer in a stack contains sublayers, and their structure is the same in every layer (great for hardware optimization). In the original transformer paper, the encoder sublayers were a self-attention sublayer and a feed-forward network sublayer, processed in that order. The self-attention sublayer was specifically designed for NLP and hardware optimizations.

Q: What are attention heads? A: Each self-attention sublayer is divided into n independent heads with identical structure (but separately learned weights). The original transformer architecture contained 8 heads in the self-attention sublayer of every layer. The heads can be computed independently of each other, which is ideal for parallelization.
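
Splitting attention into heads can be sketched like this. It's an illustrative module of my own, not PyTorch's `nn.MultiheadAttention`. It leaves out masking and dropout. It works in four steps:

1. Compute the Q, K, and V projections for all heads with one linear layer (equivalent to separate per-head projections).
2. Reshape `d_model = 512` into 8 heads of size 64.
3. Let every head compute its own attention pattern in parallel.
4. Concatenate the head outputs.

```python
import torch
import torch.nn as nn

class MultiHeadSelfAttention(nn.Module):
    """Illustrative multi-head self-attention (no masking, no dropout)."""

    def __init__(self, d_model=512, num_heads=8):
        super().__init__()
        assert d_model % num_heads == 0, "d_model must be divisible by num_heads"
        self.num_heads = num_heads
        self.d_head = d_model // num_heads
        self.qkv = nn.Linear(d_model, 3 * d_model)   # Q, K, V projections for all heads at once
        self.out = nn.Linear(d_model, d_model)       # mixes the concatenated head outputs

    def forward(self, x):                            # x: (batch, seq_len, d_model)
        batch, seq_len, d_model = x.shape
        q, k, v = self.qkv(x).chunk(3, dim=-1)
        # split d_model into num_heads slices: (batch, num_heads, seq_len, d_head)
        q, k, v = (
            t.reshape(batch, seq_len, self.num_heads, self.d_head).transpose(1, 2)
            for t in (q, k, v)
        )
        scores = q @ k.transpose(-2, -1) / self.d_head ** 0.5
        weights = torch.softmax(scores, dim=-1)      # one attention pattern per head
        heads = weights @ v                          # (batch, num_heads, seq_len, d_head)
        concat = heads.transpose(1, 2).reshape(batch, seq_len, d_model)
        return self.out(concat)

mhsa = MultiHeadSelfAttention(d_model=512, num_heads=8)
y = mhsa(torch.randn(2, 10, 512))                    # hypothetical batch of 2 sequences
print(y.shape)                                       # torch.Size([2, 10, 512])
```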

Q: What is an autoregressive language model? A: An autoregressive language model is a type of artificial intelligence model that generates text by predicting one word at a time, based on the previous words in the sequence. This approach is called "autoregressive" because it uses its own previous outputs as inputs for future predictions.

Q: What is an example of an autoregressive language model? A: GPT-3 (Generative Pre-trained Transformer 3) by OpenAI is an example of an autoregressive language model that generates human-like text by predicting the next word in a sequence based on the context of the previous words.
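
The autoregressive loop itself doesn't depend on the model, so here it is with a toy stand-in for an LLM. The `toy_model` function is made up for illustration; a real model would return logits computed from the whole prefix. Each generated token is appended to the input for the next step.

```python
import torch

def generate(next_token_logits, prompt_ids, max_new_tokens):
    """Greedy autoregressive decoding: each new token is appended and fed back in."""
    ids = list(prompt_ids)
    for _ in range(max_new_tokens):
        logits = next_token_logits(ids)          # the model sees the whole prefix so far
        ids.append(int(torch.argmax(logits)))    # greedy pick; sampling would also work here
    return ids

VOCAB_SIZE = 10

def toy_model(ids):
    """Hypothetical stand-in for an LLM: always predicts (last token + 1) mod VOCAB_SIZE."""
    logits = torch.zeros(VOCAB_SIZE)
    logits[(ids[-1] + 1) % VOCAB_SIZE] = 1.0
    return logits

print(generate(toy_model, [7], max_new_tokens=5))   # [7, 8, 9, 0, 1, 2]
```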

Q: What is the difference between autoregressive and non-autoregressive language models?

Q: What is the MMLU Benchmark? A: MMLU (Massive Multitask Language Understanding) is a challenging benchmark designed to measure a text model's multitask accuracy in zero-shot and few-shot settings. It serves as a standardized way to assess AI performance on tasks that range from simple math to complex legal reasoning. MMLU contains 57 tasks across topics including elementary mathematics, US history, computer science, and law. It requires models to demonstrate a broad knowledge base and problem-solving skills.

This section covers the foundational and advanced mathematical concepts that underpin the workings of Large Language Models (LLMs), especially those based on the Transformer architecture.

- Linear Algebra:

  - Vectors and Matrices: Understanding the basic building blocks of neural networks, including operations like addition, multiplication, and transformation.

  - Eigenvalues and Eigenvectors: Importance in understanding how neural networks learn and how data can be transformed.

  - Special Matrices: Identity matrices, diagonal matrices, and their properties relevant to neural network optimizations.

This is an overview and/or review of some of the basic concepts in linear algebra.

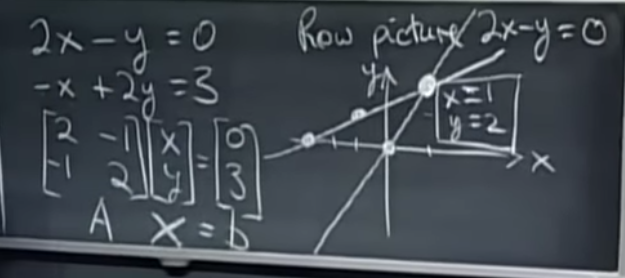

3.1.1. The Geometry of Linear Equations

Idea: We are looking for a solution to a system of linear equations. We write the coefficients of the equations as the rows of a matrix A and the right-hand sides as a vector b. The solution to all equations at once is then the vector x in Ax = b.

There are different ways of looking at the matrix and the vectors involved in the Ax = b equation:

Row picture of a matrix

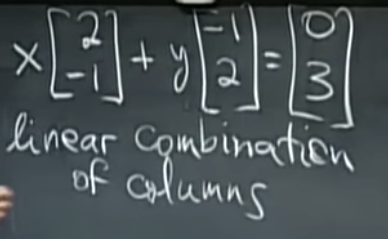

Linear combinations

Column picture of a matrix

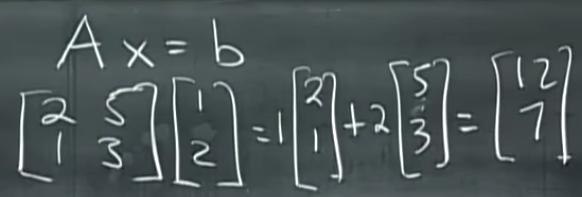

Matrix-Vector multiplication

You can do it column based: take 1 times the first column and add 2 times the second column (for x = (1, 2)).

You can do it row based (dot products): the first entry of Ax is the dot product of the first row of A with x, and the second entry is the dot product of the second row of A with x.
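
Both ways of computing Ax can be checked in NumPy. The matrix below is an example I chose for illustration, not necessarily the one in the image. The vector is x = (1, 2), matching "take 1 times the first column and 2 times the second".

```python
import numpy as np

A = np.array([[2, 5],
              [1, 3]])                 # example matrix (assumed for illustration)
x = np.array([1, 2])

# Column picture: Ax is a linear combination of the columns of A
column_based = x[0] * A[:, 0] + x[1] * A[:, 1]

# Row picture: each entry of Ax is the dot product of one row of A with x
row_based = np.array([A[0] @ x, A[1] @ x])

print(column_based, row_based, A @ x)  # all three are the vector (12, 7)
```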

| Question | Answer |
|---|---|
| Do the linear combinations of the columns fill n-dimensional space? | This is the same question as: Does Ax=b have a solution x for every b? |
| Is there always a solution for x in Ax=b? | Yes, if A is invertible. Yes, if A is non-singular. |
| Are invertible matrices always non-singular matrices? | Yes. |
| What is the definition of a singular matrix? | A square matrix is singular if it does not have an inverse. |
| What can you tell about a matrix if its determinant is zero? | That the matrix has linearly dependent row or column vectors. |
| When is a matrix not invertible? | When it has linearly dependent row or column vectors. |
| What does the determinant tell us about a matrix? | When it is zero, the matrix is not invertible. When it is not zero, the row and column vectors are linearly independent. |
| What is the definition of an invertible matrix? | A is invertible if A^-1^ exists such that A*A^-1^=I. |
| What are some methods that can be used to find the inverse of a matrix? | a) Gaussian Elimination (Row Reduction) b) Matrix Decomposition techniques: LU decomposition, QR decomposition, singular value decomposition (SVD). |
| When a matrix is invertible, how many solutions can exist for x in Ax=b? | Always exactly one solution, x = A^-1^b. |
| When a matrix is singular, how many solutions can exist for x in Ax=b? | Either 0 or infinitely many (depending on b), but never exactly one. |
| How can Gaussian Elimination fail? | It can fail primarily due to zero pivots that cannot be replaced by row swaps. This often occurs when there is linear dependence among the rows. The result is either no solution (inconsistent system) or infinitely many solutions (underdetermined system). |
| What does it mean when we find a zero pivot during Gaussian Elimination? | If no row swap can replace it, the rows (and columns) are linearly dependent. That means there are either zero or infinitely many solutions to the system of equations. |
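
To connect the table to code, here is a small Gaussian elimination with row swaps (partial pivoting) and back substitution in NumPy. It's a teaching sketch that assumes a square system; in practice `np.linalg.solve` is the tool to use. The example system 2x - y = 0, -x + 2y = 3 has the single solution (1, 2). The singular matrix has a second row that is 2 times the first. It produces a zero pivot that no row swap can fix.

```python
import numpy as np

def gaussian_elimination(A, b, tol=1e-12):
    """Solve Ax = b by forward elimination with row swaps, then back substitution.

    Raises ValueError when no usable (non-zero) pivot exists, i.e. A is singular.
    """
    A = np.array(A, dtype=float)
    b = np.array(b, dtype=float)
    n = len(b)
    for col in range(n):
        # Row swap: move the row with the largest candidate pivot into position
        pivot_row = col + np.argmax(np.abs(A[col:, col]))
        if abs(A[pivot_row, col]) < tol:
            raise ValueError(f"zero pivot in column {col}: matrix is singular")
        A[[col, pivot_row]] = A[[pivot_row, col]]
        b[[col, pivot_row]] = b[[pivot_row, col]]
        # Eliminate the entries below the pivot
        for row in range(col + 1, n):
            factor = A[row, col] / A[col, col]
            A[row, col:] -= factor * A[col, col:]
            b[row] -= factor * b[col]
    # Back substitution on the upper-triangular system
    x = np.zeros(n)
    for row in range(n - 1, -1, -1):
        x[row] = (b[row] - A[row, row + 1:] @ x[row + 1:]) / A[row, row]
    return x

A = [[2, -1], [-1, 2]]
b = [0, 3]
print(gaussian_elimination(A, b))        # approximately [1. 2.]
print(np.linalg.solve(A, b))             # NumPy's solver agrees
print(np.linalg.det(A))                  # about 3.0: non-zero, so A is invertible

singular = [[1, 2], [2, 4]]              # second row = 2 * first row
print(np.linalg.det(singular))           # 0 (up to floating-point rounding)
try:
    gaussian_elimination(singular, [1, 2])
except ValueError as err:
    print(err)
```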

| Category | Title | Comment |
|---|---|---|
| Math | MIT OCW Linear Algebra, Fall 2011 | by Prof. Gilbert Strang |
| Math | MIT OCW Lecture 1: The Geometry of Linear Equations | by Prof. Gilbert Strang |
| Math | MIT OCW Lecture 2: Elimination with Matrices | by Prof. Gilbert Strang |
| Math | MIT OCW Lecture 3: Multiplication and Inverse Matrices | by Prof. Gilbert Strang |

- Fine-Tuning Techniques: Transfer learning, learning rate adjustment, layer freezing/unfreezing, gradual unfreezing.

- Optimization Algorithms: Adam, RMSprop, SGD, learning rate schedulers.

- Regularization and Generalization: Dropout, weight decay, batch normalization, early stopping.

- Efficiency and Scalability: Mixed precision training, model parallelism, data parallelism, distributed training.

- Model Size Reduction: Quantization, pruning, knowledge distillation.

- Introduction to RAG: Concept, architecture, comparison with traditional LLMs.

- Retrieval Mechanisms: Dense Vector Retrieval, BM25, using external knowledge bases.

- Integrating RAG with LLMs: Fine-tuning RAG models, customizing retrieval components.

- Applications of RAG: Question answering, fact checking, content generation with external references.

- Challenges and Solutions: Handling out-of-date knowledge, bias in retrieved documents, improving retrieval relevance.

- Integrating LLMs into Applications: API development, deploying models with Flask/Django for web applications, mobile app integration.

- User Interface and Experience: Chatbots, virtual assistants, generating human-like text, handling user inputs.

- Security and Scalability: Authentication, authorization, load balancing, caching.

- Monitoring and Maintenance: Logging, error handling, continuous integration and deployment (CI/CD) pipelines.

- Case Studies and Project Ideas: Content generation, summarization, translation, sentiment analysis, automated customer service.

| Keyword | Explanation | Links |
|---|---|---|
| Temperature | affects the randomness of the model's output by scaling the logits before applying softmax, influencing the model's "creativity" or certainty in its predictions. Lower temperatures lead to more deterministic outputs, while higher temperatures increase diversity and creativity. | Peter Chng |
| Top P (Nucleus Sampling) | selects the smallest set of most likely tokens whose cumulative probability exceeds a threshold p and samples only from that set, allowing for adaptive and context-sensitive text generation. This method focuses on covering a certain amount of probability mass. | Peter Chng |
| Top K | limits the selection pool to the K most probable next tokens, reducing randomness by excluding less likely predictions from consideration. The probabilities of the top K tokens are renormalized before the next token is sampled. | Peter Chng |
| Q (Query) | represents the input tokens being compared against key-value pairs in attention mechanisms, letting the model focus on different parts of the input sequence for its predictions. | |
| K (Key) | represents the tokens used to compute how much attention each input token should pay to the corresponding values, which determines the focus areas in the model's attention mechanism. | |
| V (Value) | is the content being attended to: the attention output for each query is a weighted sum of the values, with the weights computed from the similarity between the query and the keys. | |
| Embeddings | are high-dimensional representations of tokens that capture semantic meaning, allowing models to process words or tokens by encapsulating both syntactic and semantic information. | |
| Tokenizers | are tools that segment text into manageable pieces for processing by models, with different algorithms affecting model performance and output quality. | |
| Rankers | are algorithms used to order documents or predict their relevance to a query, influencing the selection of next words or sentences based on certain criteria in NLP applications. | |
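
Temperature, top K, and top P can be combined in one sampling step. This is a sketch of my own: `sample_next_token` is a hypothetical helper with made-up logits, not the API of any particular library. The steps are:

1. Divide the logits by the temperature.
2. Mask tokens outside the top K and/or outside the nucleus.
3. Renormalize with softmax.
4. Sample with `torch.multinomial`.

This kind of sampling is also what makes LLM output stochastic.

```python
import torch

def sample_next_token(logits, temperature=1.0, top_k=None, top_p=None, generator=None):
    """Pick the next token id from a 1-D tensor of logits (illustrative sketch)."""
    if temperature == 0:
        return int(torch.argmax(logits))              # T = 0 treated as greedy decoding
    logits = logits / temperature                     # T < 1 sharpens, T > 1 flattens the distribution
    if top_k is not None:
        kth_largest = torch.topk(logits, top_k).values[-1]
        logits = logits.masked_fill(logits < kth_largest, float("-inf"))   # keep only the top K
    if top_p is not None:
        sorted_logits, sorted_idx = torch.sort(logits, descending=True)
        sorted_probs = torch.softmax(sorted_logits, dim=-1)
        mass_before = sorted_probs.cumsum(dim=-1) - sorted_probs   # probability of higher-ranked tokens
        logits[sorted_idx[mass_before >= top_p]] = float("-inf")   # drop tokens once p is covered
    probs = torch.softmax(logits, dim=-1)             # renormalize over the surviving tokens
    return int(torch.multinomial(probs, num_samples=1, generator=generator))

logits = torch.tensor([2.0, 1.0, 0.5, -1.0])          # hypothetical scores for a 4-token vocabulary
for t in (0.5, 1.0, 2.0):
    print(t, torch.softmax(logits / t, dim=-1))       # lower T concentrates mass on token 0

gen = torch.Generator().manual_seed(0)
print([sample_next_token(logits, temperature=0.8, top_k=2, generator=gen) for _ in range(10)])
```

With `temperature=0.8, top_k=2`, the returned ids can only be 0 or 1, because tokens 2 and 3 are masked out before sampling.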

A collection of quotes, advice, and tips that I've found helpful in my learning journey.

| Category | Advice | Source |
|---|---|---|
| Study | Study like there is nothing else to do in your life. Create a plan and stick to it no matter what. No change of directions and no second thoughts. | DL Insider |
| Study | Join Discord communities where the latest (state of the art) papers and models are discussed | Vikas Paruchuri |
| Study | Despite transformers, CNNs are still widely used, and everything old is new again with RNNs. | Vikas Paruchuri |
| Study | Learn from examples and create things along the path. | DL Insider |
| Study | It can take years of hard study to master ML/DL math. And in the end it will help you only in 15% of the cases ... or less. | DL Insider |
| Study | It is much easier to understand the models from an engineering perspective and then fill the gaps with math. | DL Insider |
| Study | It is much easier to learn ML as an SWE than the other way around | Greg Brockman |
| Coding | You should get to a point where you can code up any of the main neural networks architectures in plain numpy (forward and backward passes) | Vikas Paruchuri |
| Training LLMs | The easiest entrypoint for training models these days is fine-tuning a base model. Huggingface transformers is great for finetuning because it implements a lot of models already, and uses PyTorch. | Vikas Paruchuri |
| Training LLMs | The easiest way to finetune is to pick a small model (7B or fewer params), and try fine-tuning with LoRA. | Vikas Paruchuri |
| Training LLMs | Understanding the fundamentals is important to training good models | Vikas Paruchuri |
| Training LLMs | You don’t need a lot of GPUs for fine-tuning | Vikas Paruchuri |
| Impact | Finetuning is a very crowded space, and it’s hard to make an impact when the state of the art changes every day. | Vikas Paruchuri |
| Impact | Finding interesting problems to solve is the best way to make an impact with what you build | Vikas Paruchuri |
| Impact | There are many niches in AI where you can make a big impact, even as a relative outsider. | Vikas Paruchuri |
| Impact | Focus on the system you need, not the one you like. You will have to be able to use many different resources (Google, HuggingFace, OpenAI, etc.) | |

In this section I keep track of all the articles, papers, and tutorials I am reading to learn about LLMs.

Next Up:

Inbox:

- 18.06SC | Fall 2011 | Linear Algebra lectures by Gilbert Strang

- by Chip Huyen

- A curated list of resources dedicated to Natural Language Processing

- A prompt for your thoughts: Prompt-based learning

- AllenNLP A library and platform that provides transformer models for various NLP tasks. (Has a free EDU API)

- An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale: Vision Transformer

- An Overview for Text Representations in NLP

- Applied AI: Building NLP Apps with Hugging Face Transformers

- Attention in transformers, visually explained: Chapter 6, Deep Learning

- Attention Is All You Need: Transformers

- Awesome Resource for NLP

- Awesome-LLM: A curated list of resources for Large Language Models.

- Beyond Chinchilla-Optimal: Accounting for Inference in Language Model Scaling Laws

- bigscience/bloom · Hugging Face

- brexhq/prompt-engineering

- Broadcasting in Python (C1W2L15): YT Video by Andrew Ng

- Building a news aggregator from scratch: news filtering, classification, grouping in threads and ranking

- Building a semantic search engine in Python by Vikas Paruchuri

- Building a sentence embedding index with fastText and BM25

- But what is a GPT? Visual intro to transformers: Chapter 5, Deep Learning

- Can LLMs learn from a single example?

- Central Limit Theorem by 3Blue1Brown

- Chip Huyen - Blog

- CNNs are still widely used: Tweet by Sebastian Raschka

- CS224N: Natural Language Processing with Deep Learning by Stanford University

- Curated papers, articles, and blogs on data science & machine learning in production

- Curated papers/blogs, guides, and interviews with ML practitioners.

- dair-ai/ML-Papers-Explained

- Day 1 of 60 Days of Deep Learning with Projects Series

- Decoupled Weight Decay Regularization: AdamW

- Deep Learning Book: a book by Ian Goodfellow, Yoshua Bengio, and Aaron Courville. TIP: Read only the first 2 parts, skip the 3rd.

- Deep Learning Tuning Playbook by Google

- Deep Learning with torch:: CHEAT SHEET by rstudio.com

- Designing Machine Learning Systems - Book by Chip Huyen

- Efficient Transformers: A Survey

- Efficiently Scaling Transformer Inference

- eugeneyan/applied-ml

- Evaluating LLMs Trained on Code

- Extending Context Length in Large Language Models

- fast.ai course(s) by Jeremy Howard

- Few-shot learning in practice: GPT-Neo and the 🤗 Accelerated Inference API by Huggingface

- From zero to GPT: A course by Vikas Paruchuri

- Getting Started With Embeddings by Huggingface

- Getting started with Transformers and TPU using PyTorch

- GPT-2: Language Models are Unsupervised Multitask Learners: The original paper that introduced the GPT-2 model.

- GPT-3: Language Models are Few-Shot Learners: The original paper that introduced the GPT-3 model.

- Griffin: Mixing Gated Linear Recurrences with Local Attention for Efficient Language Models: RNNs

- How to Build a Semantic Search Engine With Transformers and Faiss

- How to Build & Understand GPT-7's Mind: Sholto Douglas & Trenton Bricken with Dwarkesh Patel

- How to Differentiate Between Scaling, Normalization, and Log Transformations

- How to implement Q&A against your documentation with GPT3, embeddings and Datasette

- How to scale LLM workloads to 20B+ with Amazon SageMaker using Hugging Face and PyTorch FSDP

- How Transformers work in deep learning and NLP: an intuitive introduction

- Hugging Face Transformers: A library of pre-trained models for NLP tasks.

- Implemented Deep Learning Projects

- Improving LoRA: Implementing Weight-Decomposed Low-Rank Adaptation (DoRA) from Scratch

- Intro to Deep Learning and Generative Models Course: by Sebastian Raschka

- Andrej Karpathy's YouTube videos

- Language Identification from Very Short Strings

- Language Models and Contextualised Word Embeddings

- Language Models are Unsupervised Multitask Learners: GPT-2

- Let's build GPT: from scratch, in code, spelled out.: by Andrej Karpathy

- Let's build the GPT Tokenizer by Andrej Karpathy

- LLM Numbers Everyone Should know

- LLM Visualised: an interactive/animated LLM visualisation

- LoRA: Low-Rank Adaptation of Large Language Models: LoRA

- manuelyhvh/nlp-with-transformers

- Mathematics for Machine Learning by Marc Peter Deisenroth, A. Aldo Faisal, and Cheng Soon Ong

- Measuring Massive Multitask Language Understanding

- Meet Claude: Anthropic’s Rival to ChatGPT

- Mixture of Experts Explained

- Modern Deep Learning Techniques Applied to Natural Language Processing

- Mooler0410/LLMsPracticalGuide

- Multimodal Chain-of-Thought Reasoning in Language Models by Amazon Science

- Natural Language Processing with Transformers Book

- Neural Machine Translation by Jointly Learning to Align and Translate: RNN attention

- Neural Networks (incl Transformers) by 3Blue1Brown

- Neural Networks - From the ground up: YouTube series from 3Blue1Brown

- NLP Best Practices

- NLP's ImageNet moment has arrived

- OLMo: Accelerating the Science of Language Models

- On the Opportunities and Risks of Foundation Models

- OpenELM: An Efficient Language Model Family with Open-source Training and Inference Framework

- Papers with Code

- PEFT: Parameter-Efficient Fine-Tuning of Billion-Scale Models on Low-Resource Hardware by Huggingface

- Post-processing in automatic speech recognition systems

- Probabilities of Probabilities by 3Blue1Brown

- Prompt Engineering And Why It Matters To The AI Revolution

- PyTorch Cheat Sheet by PyTorch

- PyTorch DL Cheat Sheet by DataCamp

- Q&A with GPT-Index

- Quantization Fundamentals with Hugging Face by Huggingface

- Retrieval-Augmented Generation (RAG) Made Simple & 2 How To Tutorials

- RLHF Automatic Prompt Engineer for Stable Diffusion 2

- Scaling Up AI Research to Production with PyTorch and MLFlow

- Scattertext

- Self-Attention clearly explained

- Semantic search with embeddings: index anything

- Semantic Search with S-BERT is all you need

- spaCy 101: Everything you need to know

- Stanford MLSys Seminars on YouTube

- Super Easy Way to Get Sentence Embedding using fastText in Python Medium Article

- Switch Transformers: Scaling to Trillion Parameter Models with Simple and Efficient Sparsity: Switch transformer

- swyxio/ai-notes

- Text Similarities: Estimate the degree of similarity between two texts

- The 2024 MAD (ML, AI & Data) Landscape

- The Annotated GPT-2: A detailed explanation of the GPT-2 model architecture.

- The Annotated Transformer Alt: A detailed explanation of the Transformer model architecture.

- The Essence of Linear Algebra (videos and text): YouTube playlist/course by 3Blue1Brown

- The fall of RNN / LSTM

- The Illustrated GPT-2 (Visualizing Transformer Language Models)

- The Illustrated GPT-2: A visual guide to the GPT-2 model architecture.

- The Illustrated Transformer: A visual guide to the Transformer model architecture.

- The Principles of Deep Learning Theory - An Effective Theory Approach to Understanding Neural Networks by Daniel A. Roberts and Sho Yaida

- The Transformer: Attention is All You Need: The original paper that introduced the Transformer model.

- Thinking Like Transformers

- Token Selection Strategies: Top-k, Top-p, and Temperature: by Peter Chng

- torchtune - Easily fine-tune LLMs using PyTorch on pytorch.org

- Tracking Progress in Natural Language Processing

- Transformers

- Turing-NLG: A 17-billion-parameter language model by Microsoft

- Understanding and Coding the Self-Attention Mechanism of Large Language Models From Scratch by Sebastian Raschka

- Understanding text with BERT

- Vaclav Kosar's Software & Machine Learning Blog

- Vector databases (4): Analyzing the trade-offs

- What are the recent trends in machine learning, deep learning, and AI: by Sebastian Raschka

- What I learned from looking at 900 most popular open source AI tools by Chip Huyen

- Where does AI come from, and where is it heading? - Data Big and Small

- Yann LeCun & Alfredo Canziani - Deep Learning, NYU Center for Data Science

- Yann LeCun’s Deep Learning Course at CDS

Paper - Training Compute-Optimal Large Language Models by Google DeepMind

DeepMind's "Chinchilla" paper presents several key results and findings centered around the scaling laws in language model training:

- The paper argues that the large language models of the time (2022) were significantly under-trained: they had too many parameters for the number of tokens they were trained on (assuming the datasets were of high quality).

- The paper discusses how to estimate the optimal model size and number of tokens for training dense autoregressive models.

- The paper tests these predictions by training Chinchilla, a 70B-parameter model, on 1.4 trillion tokens, using the same compute budget as the 280B-parameter Gopher. Chinchilla outperforms larger but under-trained models such as Gopher and GPT-3 on a wide range of natural language reasoning and understanding tasks.

- The authors conclude that, for a fixed compute budget, model size and the number of training tokens should be scaled in equal proportion: every doubling of model size should be matched by a doubling of training tokens.

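- The paper presents three approaches for estimating the compute-optimal model size and number of training tokens for a fixed compute budget (FLOPs):

- Fix a set of model sizes and vary the number of training tokens, then take the lowest loss reached at each compute budget.

- Fix a set of compute budgets (IsoFLOP profiles) and vary the model size, and with it the number of tokens, to find the model size with the lowest loss for each budget.

- Fit a parametric loss function of model size and training tokens to all training runs and minimize it under the compute constraint.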
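A minimal sketch of what equal scaling means in practice. It assumes the common approximation that training compute is C ≈ 6·N·D FLOPs (N parameters, D training tokens) and a fixed ratio of about 20 tokens per parameter, which is the ratio Chinchilla itself used (1.4T tokens / 70B parameters). Both are rules of thumb rather than the paper's exact fitted results, and `compute_optimal_allocation` is a hypothetical helper, not something from the paper.

```python
import math

# Assumption: training compute C (FLOPs) is approximated as C ≈ 6 * N * D,
# where N = number of parameters and D = number of training tokens.
FLOPS_PER_PARAM_PER_TOKEN = 6

# Assumption: ~20 training tokens per parameter, the ratio implied by
# Chinchilla (70B parameters, 1.4T tokens). A rule of thumb, not a paper constant.
TOKENS_PER_PARAM = 20


def training_flops(n_params: float, n_tokens: float) -> float:
    """Approximate training compute for N parameters trained on D tokens."""
    return FLOPS_PER_PARAM_PER_TOKEN * n_params * n_tokens


def compute_optimal_allocation(compute_flops: float,
                               tokens_per_param: float = TOKENS_PER_PARAM) -> tuple[float, float]:
    """Split a fixed compute budget into (parameters, tokens) with D = r * N.

    From C = 6 * N * D and D = r * N we get C = 6 * r * N**2, so
    N = sqrt(C / (6 * r)) and D = r * N. Both grow with sqrt(C).
    """
    n_params = math.sqrt(compute_flops / (FLOPS_PER_PARAM_PER_TOKEN * tokens_per_param))
    n_tokens = tokens_per_param * n_params
    return n_params, n_tokens


if __name__ == "__main__":
    budget = training_flops(70e9, 1.4e12)  # roughly Chinchilla's budget under 6ND
    n, d = compute_optimal_allocation(budget)
    print(f"budget={budget:.2e} FLOPs -> N={n / 1e9:.0f}B params, D={d / 1e12:.2f}T tokens")

    # Quadrupling the compute budget doubles both N and D.
    n4, d4 = compute_optimal_allocation(4 * budget)
    print(f"4x budget -> N={n4 / 1e9:.0f}B params, D={d4 / 1e12:.2f}T tokens")
```

Because N and D both scale with √C, quadrupling the budget doubles both, which matches the paper's advice to grow the number of tokens together with model size.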

Key Takeaways:

- Under a fixed compute budget, the large models of the time would have gained more from additional training data than from additional parameters.

- It's more effective to train a smaller model on more data than a larger model on less data:

- Smaller models are cheaper per training token, so the same compute budget covers more data

- Smaller models are cheaper to run during inference

- The resulting model performs better on a range of tasks.
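To see why the smaller model wins, here is a sketch using the parametric loss function from the paper's third approach, L(N, D) = E + A/N^α + B/D^β. The constants are the fitted values as reported in the paper (E = 1.69, A = 406.4, B = 410.7, α = 0.34, β = 0.28); treat them as assumptions and double-check them against the paper. The configurations are Gopher (280B parameters, 300B tokens) and Chinchilla (70B parameters, 1.4T tokens), which the paper trained with the same compute budget.

```python
# Assumption: parametric loss fit from the Chinchilla paper ("Approach 3"):
#   L(N, D) = E + A / N**alpha + B / D**beta
# Constants as reported in the paper; verify before relying on them.
E, A, B = 1.69, 406.4, 410.7
ALPHA, BETA = 0.34, 0.28


def predicted_loss(n_params: float, n_tokens: float) -> float:
    """Predicted pre-training loss for n_params parameters trained on n_tokens tokens."""
    parameter_term = A / n_params**ALPHA  # shrinks as the model gets bigger
    data_term = B / n_tokens**BETA        # shrinks as the model sees more data
    return E + parameter_term + data_term


gopher = predicted_loss(280e9, 300e9)      # larger model, fewer tokens
chinchilla = predicted_loss(70e9, 1.4e12)  # smaller model, more tokens

print(f"Gopher-like:     L = {gopher:.3f}")
print(f"Chinchilla-like: L = {chinchilla:.3f}")
```

With these constants the Chinchilla-like configuration gets the lower predicted loss: shrinking the model raises the parameter term a little, but the extra tokens shrink the data term by much more.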

Free ML Training Resources:

Discord Servers: