The RAPIDS cuDF library is a GPU DataFrame library, based on Apache Arrow, that accelerates loading, filtering, and manipulating data when preparing it for model training. The RAPIDS GPU DataFrame provides a pandas-like API that will be familiar to data scientists, so they can build GPU-accelerated workflows more easily.

Please see the Demo Docker Repository, choosing a tag based on the NVIDIA CUDA version you're running. It provides a ready-to-run Docker container with example notebooks and data showcasing how to use cuDF.

You can get a minimal conda installation with Miniconda or get the full installation with Anaconda.

You can install and update cuDF using the conda command:

```bash
$ conda install -c nvidia -c rapidsai -c numba -c conda-forge -c defaults cudf=0.3.0
```

Note: This conda installation applies only to Linux and Python versions 3.5 and 3.6.
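Before running the install, a quick pre-flight check can confirm that the host matches the note above. The sketch below is a hypothetical helper (`conda_package_supported` is not part of cuDF or conda) that uses only the standard library's `platform` and `sys` modules.

```python
import platform
import sys


def conda_package_supported(system=None, version_info=None):
    """Return True if this host matches the documented targets of the
    cuDF 0.3.0 conda package: Linux with Python 3.5 or 3.6.

    Both arguments default to the running interpreter's values, so the
    function can also be called with explicit values for testing.
    """
    system = platform.system() if system is None else system
    version_info = sys.version_info if version_info is None else version_info
    # Only the (major, minor) part of the version matters here.
    return system == "Linux" and tuple(version_info[:2]) in {(3, 5), (3, 6)}


if __name__ == "__main__":
    print("supported" if conda_package_supported() else "not supported")
```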

You can create and activate a development environment using the conda commands:

```bash
# create the conda environment (assuming in base `cudf` directory)
$ conda env create --name cudf_dev --file conda/environments/dev_py35.yml
# activate the environment
$ source activate cudf_dev
# when not using the default Arrow version 0.10.0, run
$ conda install -c nvidia -c rapidsai -c numba -c conda-forge -c defaults pyarrow=$ARROW_VERSION
```

This installs the required cmake, nvstrings, pyarrow, and other dependencies into the `cudf_dev` conda environment and activates it.
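To confirm the environment is actually active before building, you can check the `CONDA_DEFAULT_ENV` variable that conda sets when an environment is activated. The helpers below (`active_conda_env`, `require_env`) are hypothetical illustrations, not part of conda or cuDF; they use `str.format` instead of f-strings so they also run on Python 3.5.

```python
import os


def active_conda_env(environ=None):
    """Return the name of the active conda environment, or None if none is active.

    Relies on the CONDA_DEFAULT_ENV variable that conda sets on activation.
    """
    environ = os.environ if environ is None else environ
    return environ.get("CONDA_DEFAULT_ENV")


def require_env(expected="cudf_dev", environ=None):
    """Raise RuntimeError unless `expected` is the active conda environment."""
    name = active_conda_env(environ)
    if name != expected:
        raise RuntimeError(
            "expected conda env {!r} to be active, found {!r}; "
            "run: source activate {}".format(expected, name, expected)
        )
    return name
```

Calling `require_env()` at the top of a build script makes it fail early, with the activation command in the message, instead of building against the wrong Python.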

Support is coming soon; please use conda for the time being.

The following instructions for building from source and for development have been tested on Ubuntu 16.04 and 18.04. Other operating systems may be compatible but are not currently supported.

Compiler requirements:

- `gcc` version 5.4
- `nvcc` version 9.2
- `cmake` version 3.12

CUDA/GPU requirements:

- CUDA 9.2+

- NVIDIA driver 396.44+

- Pascal architecture or better

You can obtain CUDA from https://developer.nvidia.com/cuda-downloads
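The CUDA/GPU minimums above can be checked by comparing versions as tuples of integers, so that, for example, CUDA 10.0 correctly counts as newer than 9.2 (a plain string comparison gets this wrong, because `"10.0" < "9.2"`). This is a sketch under stated assumptions: `check_gpu_requirements` is a hypothetical helper, the versions are passed in by hand rather than parsed from `nvidia-smi` or `nvcc --version` output, and "Pascal architecture or better" is taken to mean CUDA compute capability 6.0 or higher.

```python
def parse_version(text):
    """Turn a dotted version string such as '396.44' or '9.2' into a tuple of ints."""
    return tuple(int(part) for part in text.split("."))


# Minimums taken from the CUDA/GPU requirement list.
MIN_CUDA = parse_version("9.2")
MIN_DRIVER = parse_version("396.44")
MIN_COMPUTE_CAPABILITY = (6, 0)  # assumption: Pascal GPUs report compute capability 6.x


def check_gpu_requirements(cuda, driver, compute_capability):
    """Return a list of problems; an empty list means every check passed.

    cuda and driver are version strings; compute_capability is a (major, minor) tuple.
    """
    problems = []
    if parse_version(cuda) < MIN_CUDA:
        problems.append("CUDA {} is older than 9.2".format(cuda))
    if parse_version(driver) < MIN_DRIVER:
        problems.append("driver {} is older than 396.44".format(driver))
    if tuple(compute_capability) < MIN_COMPUTE_CAPABILITY:
        problems.append(
            "compute capability {}.{} is older than Pascal (6.0)".format(*compute_capability)
        )
    return problems
```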

Since cmake will download and build Apache Arrow (version 0.7.1 or 0.8+), you may need to install Boost C++ (version 1.58+) before running cmake:

```bash
# Install Boost C++ for Ubuntu 16.04/18.04
$ sudo apt-get install libboost-all-dev
```

or

```bash
# Install Boost C++ for Conda
$ conda install -c conda-forge boost
```

To install cuDF from source, ensure the dependencies are met and follow the steps below:

- Clone the repository:

  ```bash
  $ git clone --recurse-submodules https://github.com/rapidsai/cudf.git
  $ cd cudf
  ```

- Create and activate the `cudf_dev` conda development environment as detailed above.

- Build and install `libcudf`:

  ```bash
  $ cd /path/to/cudf/cpp   # navigate to the C/C++ CUDA source root directory
  $ mkdir build            # make a build directory
  $ cd build               # enter the build directory
  $ cmake .. -DCMAKE_INSTALL_PREFIX=/install/path   # configure cmake; use $CONDA_PREFIX if you're using Anaconda
  $ make -j                # compile librmm.so and libcudf.so; '-j' with no number puts no limit on parallel jobs (pass a count, e.g. -j4, to cap it)
  $ make install           # install librmm.so and libcudf.so to '/install/path'
  ```

  To run tests (optional):

  ```bash
  $ make test
  ```

  Build and install the CFFI bindings:

  ```bash
  $ make python_cffi          # build CFFI bindings for librmm.so, libcudf.so
  $ make install_python       # install Python bindings into site-packages
  $ cd python && py.test -v   # optional: run Python tests on the low-level Python bindings
  ```

- Build the `cudf` Python package in the `python` folder:

  ```bash
  $ cd ../../python
  $ python setup.py build_ext --inplace
  ```

  To run Python tests (optional):

  ```bash
  $ py.test -v   # run Python tests on the cudf Python bindings
  ```

- Finally, install the Python package to your Python path:

  ```bash
  $ python setup.py install   # install the cudf Python bindings
  ```

A Dockerfile is provided with a preconfigured conda environment for building and installing cuDF from source based on the master branch.

- Install nvidia-docker2 for Docker + GPU support
- Verify that the NVIDIA driver is 396.44 or higher
- Ensure CUDA 9.2+ is installed

From the cudf project root, run the following to build with the defaults:

```bash
$ docker build --tag cudf .
```

After the container is built, run it:

```bash
$ docker run --runtime=nvidia -it cudf bash
```

Activate the `cudf` conda environment to use the newly built cuDF and libcudf libraries:

```
root@3f689ba9c842:/# source activate cudf
(cudf) root@3f689ba9c842:/# python -c "import cudf"
(cudf) root@3f689ba9c842:/#
```

Several build arguments are available to customize how the container is built. They are specified with Docker's `--build-arg` flag. Below is a list of the available arguments and their purpose:

| Build Argument | Default Value | Other Value(s) | Purpose |
|---|---|---|---|
| `CUDA_VERSION` | 9.2 | 10.0 | set CUDA version |
| `LINUX_VERSION` | ubuntu16.04 | ubuntu18.04 | set Ubuntu version |
| `CC` & `CXX` | 5 | 7 | set gcc/g++ version; NOTE: gcc7 requires Ubuntu 18.04 |
| `CUDF_REPO` | This repo | Forks of cuDF | set git URL to use for `git clone` |
| `CUDF_BRANCH` | master | Any branch name | set git branch to check out from `CUDF_REPO` |
| `NUMBA_VERSION` | 0.40.0 | Not supported | set numba version |
| `NUMPY_VERSION` | 1.14.3 | Not supported | set numpy version |
| `PANDAS_VERSION` | 0.20.3 | Not supported | set pandas version |
| `PYARROW_VERSION` | 0.10.0 | 0.8.0+ | set pyarrow version |
| `PYTHON_VERSION` | 3.5 | 3.6 | set python version |
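When scripting container builds, the table can be turned into a `docker build` invocation. The sketch below is a hypothetical helper, not part of the cuDF repository: it passes only the arguments you override as `--build-arg NAME=VALUE` flags, rejects names not in the table, and refuses the gcc 7 / Ubuntu 16.04 combination the table warns about. For example, `docker_build_command(CUDA_VERSION="10.0")` corresponds to running `docker build --build-arg CUDA_VERSION=10.0 --tag cudf .`.

```python
# Build-argument names from the table (CC and CXX are separate arguments).
KNOWN_BUILD_ARGS = (
    "CUDA_VERSION", "LINUX_VERSION", "CC", "CXX", "CUDF_REPO", "CUDF_BRANCH",
    "NUMBA_VERSION", "NUMPY_VERSION", "PANDAS_VERSION", "PYARROW_VERSION",
    "PYTHON_VERSION",
)


def docker_build_command(tag="cudf", **overrides):
    """Return a `docker build` argument list overriding only the given build args.

    Arguments that are left out keep the Dockerfile defaults listed in the table.
    """
    unknown = sorted(set(overrides) - set(KNOWN_BUILD_ARGS))
    if unknown:
        raise ValueError("unknown build argument(s): {}".format(", ".join(unknown)))
    linux = overrides.get("LINUX_VERSION", "ubuntu16.04")  # table default
    for compiler in ("CC", "CXX"):
        if str(overrides.get(compiler)) == "7" and linux != "ubuntu18.04":
            raise ValueError("gcc 7 requires LINUX_VERSION=ubuntu18.04")
    cmd = ["docker", "build"]
    for name in sorted(overrides):  # sorted for a stable, reproducible command line
        cmd += ["--build-arg", "{}={}".format(name, overrides[name])]
    cmd += ["--tag", tag, "."]
    return cmd
```

The returned list can be passed to `subprocess.run(cmd, check=True)` from the cudf project root.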

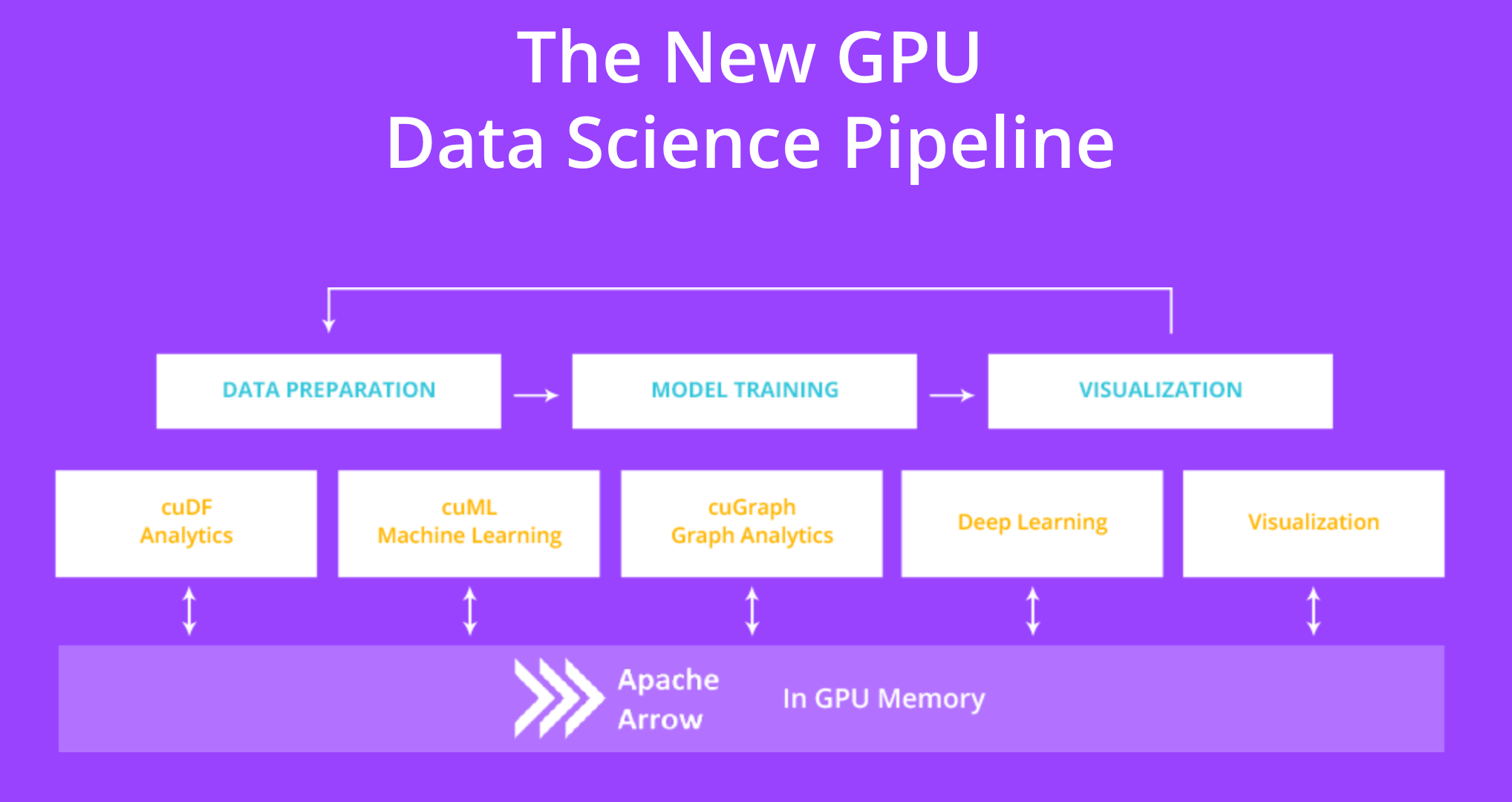

The RAPIDS suite of open source software libraries aims to enable execution of end-to-end data science and analytics pipelines entirely on GPUs. It relies on NVIDIA® CUDA® primitives for low-level compute optimization and exposes that GPU parallelism and high-bandwidth memory speed through user-friendly Python interfaces.

The GPU version of Apache Arrow is a common API that enables efficient interchange of tabular data between processes running on the GPU. End-to-end computation on the GPU avoids unnecessary copying and converting of data off the GPU, reducing compute time and cost for high-performance analytics common in artificial intelligence workloads. cuDF uses the Apache Arrow columnar data format on the GPU. Currently, a subset of the features in Apache Arrow is supported.
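To make "columnar format" concrete: an Arrow column keeps its values in contiguous buffers plus a validity bitmap recording which slots are null, and variable-length types such as strings add an offsets buffer. The pure-Python sketch below builds these buffers in host memory for a small string column, using the least-significant-bit ordering of the Apache Arrow columnar specification. It illustrates the layout only: it is not how cuDF allocates GPU memory, the function names are hypothetical, and it skips the buffer padding and alignment the specification recommends.

```python
def validity_bitmap(values):
    """Pack the non-None flags of `values` into an Arrow-style validity bitmap.

    Bit i (least-significant-bit first within each byte) is 1 when slot i holds a value.
    """
    bitmap = bytearray((len(values) + 7) // 8)
    for i, value in enumerate(values):
        if value is not None:
            bitmap[i // 8] |= 1 << (i % 8)
    return bytes(bitmap)


def is_valid(bitmap, i):
    """Return True if slot i is marked valid (non-null) in the bitmap."""
    return bool((bitmap[i // 8] >> (i % 8)) & 1)


def string_column(values):
    """Lay out a nullable string column as (validity, offsets, data) buffers.

    offsets has len(values) + 1 entries, and slot i spans data[offsets[i]:offsets[i + 1]].
    In this sketch, null slots occupy zero bytes of data.
    """
    offsets = [0]
    data = bytearray()
    for value in values:
        if value is not None:
            data += value.encode("utf-8")
        offsets.append(len(data))
    return validity_bitmap(values), offsets, bytes(data)
```

Because every value of a column sits in one contiguous buffer, a GPU kernel can process a column with coalesced memory reads, which is part of why the columnar layout suits GPUs.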