By Chu Zhou, Minggui Teng, Youwei Lyu, Si Li, Chao Xu, Boxin Shi

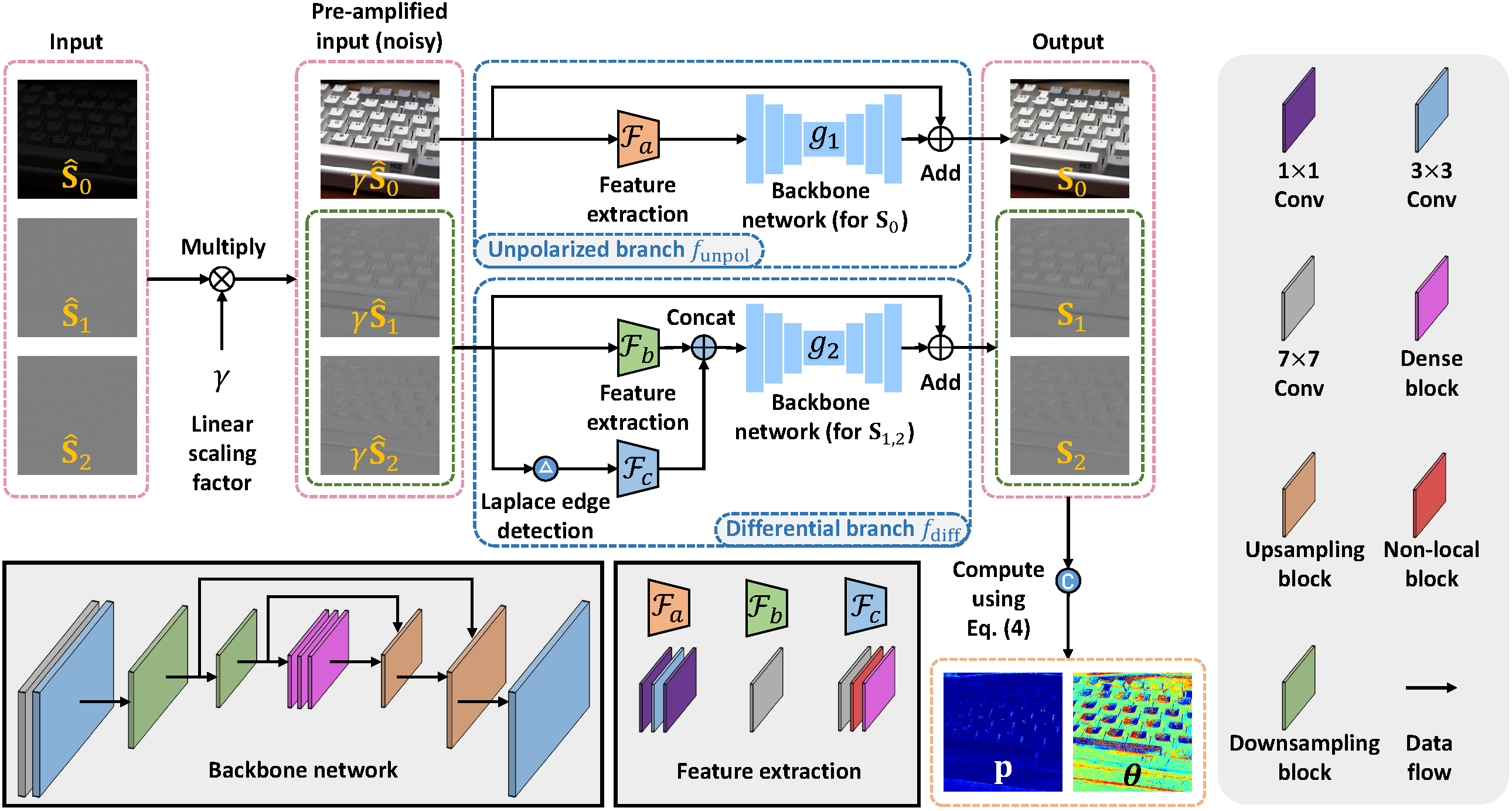

Polarization-based vision algorithms are used in a variety of applications because polarization provides additional physical constraints. In low-light conditions, however, their performance degrades severely: the captured polarized images are noisy, which noticeably degrades the degree of polarization (DoP) and the angle of polarization (AoP). Existing low-light image enhancement methods do not handle polarized images well because they operate in the intensity domain and do not effectively exploit the information provided by polarization. In this paper, we propose a Stokes-domain enhancement pipeline along with a dual-branch neural network to handle the problem in a polarization-aware manner. Two application scenarios (reflection removal and shape from polarization) are presented to show how our enhancement can improve their results.
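For readers new to the quantities named above, the following is a minimal sketch of the textbook relation between four polarizer-angle images and the linear Stokes parameters, DoP, and AoP. It is not taken from this repository: the function name `stokes_from_polarized`, the `eps` guard, and the exact conventions (scaling of S0, range of the AoP) are assumptions and may differ from the ones used in the code here.

```python
import numpy as np


def stokes_from_polarized(i0, i45, i90, i135, eps=1e-8):
    """Textbook linear Stokes parameters from images at 0/45/90/135 degrees.

    Hypothetical helper for illustration; conventions may differ from the repo.
    """
    i0, i45, i90, i135 = (
        np.asarray(x, dtype=np.float64) for x in (i0, i45, i90, i135)
    )
    s0 = 0.5 * (i0 + i45 + i90 + i135)  # total intensity
    s1 = i0 - i90                       # horizontal vs. vertical component
    s2 = i45 - i135                     # diagonal components
    # Degree of linear polarization; eps avoids division by zero in dark pixels.
    dop = np.sqrt(s1 ** 2 + s2 ** 2) / np.maximum(s0, eps)
    # Angle of polarization in radians, in (-pi/2, pi/2].
    aop = 0.5 * np.arctan2(s2, s1)
    return s0, s1, s2, dop, aop
```

Because DoP and AoP are ratios and angles of S1 and S2, noise in dark pixels is amplified in them, which is why the abstract singles out these two quantities.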

- Linux Distributions (tested on Ubuntu 20.04).

- NVIDIA GPU with CUDA and cuDNN

- Python >= 3.7

- PyTorch >= 1.10.0

- cv2

- numpy

- tqdm

- tensorboardX (for training visualization)
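A quick way to confirm that the Python-side requirements listed above are present is sketched below. It only checks that the modules can be located and that the interpreter is recent enough; it does not verify the PyTorch version, CUDA, or cuDNN. The function name `check_environment` is made up for this example.

```python
import importlib.util
import sys

# Import names of the packages listed in the requirements above.
REQUIRED_MODULES = ["torch", "cv2", "numpy", "tqdm", "tensorboardX"]


def check_environment(min_python=(3, 7), modules=REQUIRED_MODULES):
    """Return a dict mapping each check to True (ok) or False (missing)."""
    report = {"python_ok": sys.version_info[:2] >= tuple(min_python)}
    for name in modules:
        # find_spec locates a top-level module without importing it.
        report[name] = importlib.util.find_spec(name) is not None
    return report


if __name__ == "__main__":
    for key, ok in check_environment().items():
        print(f"{key:>14}: {'ok' if ok else 'MISSING'}")
```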

- We provide the pre-trained models for inference

- Run `mkdir checkpoint` first

- Please put the downloaded files (`Ours.pth` and `Ours_gray.pth`) into the `checkpoint` folder

- RGB images:

`python execute/infer_subnetwork2.py -r checkpoint/Ours.pth --data_dir <path_to_input_data> --result_dir <path_to_result_data> --data_loader_type InferDataLoader --verbose_output 1 default`

- Grayscale images (converted from the RGB images):

`python execute/infer_subnetwork2.py -r checkpoint/Ours_gray.pth --data_dir <path_to_input_data> --result_dir <path_to_result_data> --data_loader_type GrayInferDataLoader --verbose_output 1 gray`

- Real grayscale images captured by a polarization camera (used for applications):

`python execute/infer_subnetwork2.py -r checkpoint/Ours_gray.pth --data_dir <path_to_input_data> --result_dir <path_to_result_data> --data_loader_type RealGrayInferDataLoader --verbose_output 1 gray`
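The three commands differ only in the checkpoint, the data loader type, and the trailing argument. The sketch below collects those differences in one table and assembles the argument list, which can help when scripting batch runs. The mode labels (`rgb`, `gray`, `real_gray`), the helper name `build_infer_command`, and the example paths are hypothetical; the script path and flags are copied from the commands above.

```python
import sys

# mode label (hypothetical) -> (checkpoint, data loader type, trailing argument)
MODES = {
    "rgb": ("checkpoint/Ours.pth", "InferDataLoader", "default"),
    "gray": ("checkpoint/Ours_gray.pth", "GrayInferDataLoader", "gray"),
    "real_gray": ("checkpoint/Ours_gray.pth", "RealGrayInferDataLoader", "gray"),
}


def build_infer_command(mode, data_dir, result_dir):
    """Assemble the inference command for one of the three documented modes."""
    checkpoint, loader, variant = MODES[mode]
    return [
        sys.executable, "execute/infer_subnetwork2.py",
        "-r", checkpoint,
        "--data_dir", str(data_dir),
        "--result_dir", str(result_dir),
        "--data_loader_type", loader,
        "--verbose_output", "1",
        variant,
    ]


if __name__ == "__main__":
    # Example paths are placeholders; replace them with your own directories.
    cmd = build_infer_command("rgb", "data/test", "results/rgb")
    print(" ".join(cmd))
```

The returned list can be passed to `subprocess.run(cmd, check=True)` from the repository root.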

Since the file format we use is `.npy`, we provide scripts for visualization:

- use `notebooks/visualize_aop.py` to visualize the AoP

- use `notebooks/visualize_dop.py` to visualize the DoP

- use `notebooks/visualize_S0.py` to visualize S0

- use `notebooks/visualize_S1_S2.py` to visualize S1 or S2
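For a quick look at a result without the provided scripts, a `.npy` map can be rescaled to an 8-bit image. This is a rough sketch, not a reimplementation of the scripts above. It assumes each file holds a 2D floating-point array, that the DoP lies in [0, 1], and that the AoP is stored in radians; none of these assumptions is confirmed by this README, and the helper names are hypothetical.

```python
import numpy as np


def to_uint8(array, lo, hi):
    """Linearly map values in [lo, hi] to 0..255, clipping anything outside."""
    scaled = (np.asarray(array, dtype=np.float64) - lo) / (hi - lo)
    return np.round(np.clip(scaled, 0.0, 1.0) * 255.0).astype(np.uint8)


def preview_dop(path):
    """Load a DoP map (assumed to lie in [0, 1]) as an 8-bit image."""
    return to_uint8(np.load(path), 0.0, 1.0)


def preview_aop(path):
    """Load an AoP map (assumed to be in radians) as an 8-bit image."""
    aop = np.mod(np.load(path), np.pi)  # wrap the angle into [0, pi)
    return to_uint8(aop, 0.0, np.pi)
```

The returned arrays can be written with `cv2.imwrite`, for example `cv2.imwrite("dop.png", preview_dop("some_dop.npy"))`, where both file names are placeholders.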

- About the PLIE dataset

  - The first open-source dataset for polarized low-light image enhancement

  - Containing pairwise polarized low- and normal-light images

  - Details can be found in the supplementary materials

- We provide the raw images captured by a Lucid Vision Phoenix polarization camera, including two folders:

  - `for_train`: containing the raw images used for training

  - `for_test`: containing the raw images used for testing

- Run `mkdir -p raw_images data/{test,train}` first

- Please put the downloaded folders (`for_train` and `for_test`) into the `raw_images` folder

- After downloading, you can use our scripts to preprocess them for obtaining the dataset:

  - For obtaining the dataset for testing:

    - run `scripts/preprocess_dataset.py` after setting the `in_dir` and `out_base_dir` parameters in it to `'../raw_images/for_test'` and `'../raw_images/data_test_temp'` respectively

    - run `scripts/make_dataset_test.py`

    - now you can find the dataset for testing in `data/test`

  - For obtaining the dataset for training:

    - run `scripts/preprocess_dataset.py` after setting the `in_dir` and `out_base_dir` parameters in it to `'../raw_images/for_train'` and `'../raw_images/data_train_temp'` respectively

    - run `scripts/make_dataset_train.py`

    - now you can find the dataset for training in `data/train`
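After the preprocessing steps, it can be useful to confirm that `data/test` and `data/train` were actually populated. The sketch below counts files per extension in each split. It makes no assumption about the file layout the scripts produce beyond the two folder names given above, and the function name `summarize_dataset` is hypothetical.

```python
from pathlib import Path


def summarize_dataset(root="data"):
    """Count files by extension under <root>/test and <root>/train.

    A split maps to None when its folder does not exist.
    """
    summary = {}
    for split in ("test", "train"):
        split_dir = Path(root) / split
        if not split_dir.is_dir():
            summary[split] = None
            continue
        counts = {}
        for f in split_dir.rglob("*"):
            if f.is_file():
                key = f.suffix or "<none>"
                counts[key] = counts.get(key, 0) + 1
        summary[split] = counts
    return summary


if __name__ == "__main__":
    for split, counts in summarize_dataset().items():
        print(split, counts if counts is not None else "folder missing")
```

An empty dictionary for a split means the folder exists but contains no files, which usually indicates that the corresponding `make_dataset_*.py` step has not been run yet.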

- RGB:

`python execute/train.py -c config/subnetwork2.json`

- Grayscale:

`python execute/train.py -c config/subnetwork2_gray.json`

Note that all config files (`config/*.json`) and the learning rate schedule function used with `MultiplicativeLR` (`get_lr_lambda` in `utils/util.py`) can be edited.
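When editing the schedule, it helps to remember that PyTorch's `torch.optim.lr_scheduler.MultiplicativeLR` multiplies the current learning rate by the factor that the lambda returns for each epoch, starting from epoch 1, so the factors compound. The pure-Python sketch below mimics that behaviour so a candidate lambda can be previewed without starting a training run. The example lambda and its parameters are hypothetical and are not the `get_lr_lambda` shipped in `utils/util.py`.

```python
def example_lr_lambda(epoch, decay=0.95, hold_epochs=10):
    """Hypothetical factor function: hold the LR, then decay it every epoch."""
    return 1.0 if epoch < hold_epochs else decay


def simulate_multiplicative_lr(initial_lr, lr_lambda, num_epochs):
    """Return the learning rate used in each epoch under a multiplicative schedule.

    Epoch 0 uses initial_lr; for epoch e >= 1 the previous learning rate is
    multiplied by lr_lambda(e).
    """
    lrs = [initial_lr]
    for epoch in range(1, num_epochs):
        lrs.append(lrs[-1] * lr_lambda(epoch))
    return lrs


if __name__ == "__main__":
    for epoch, lr in enumerate(simulate_multiplicative_lr(1e-3, example_lr_lambda, 15)):
        print(f"epoch {epoch:2d}: lr = {lr:.6f}")
```

Note the difference from `LambdaLR`, where the lambda's value scales the initial learning rate directly instead of compounding from epoch to epoch.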

If you find this work helpful to your research, please cite:

@inproceedings{zhou2023polarization,

title={Polarization-Aware Low-Light Image Enhancement},

author={Zhou, Chu and Teng, Minggui and Lyu, Youwei and Li, Si and Xu, Chao and Shi, Boxin},

booktitle={Proceedings of the AAAI Conference on Artificial Intelligence},

volume={37},

number={3},

pages={3742--3750},

year={2023}

}