This repository contains the official implementation of tinyCLAP.

First of all, let's clone the repo and install the requirements:

git clone https://github.com/fpaissan/tinyCLAP && cd tinyCLAP

pip install -r extra_requirements.txt

You can distill your tinyCLAP model(s) using this command:

MODEL_NAME=phinet_alpha_1.50_beta_0.75_t0_6_N_7

./run_tinyCLAP.sh $MODEL_NAME $DATASET_LOCATION

Note that MODEL_NAME is formatted such that the script will automatically parse the configuration for the student model.

You can change the hyperparameters of the student model by changing the model name.
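The example name appears to encode the PhiNet hyperparameters as underscore-separated key/value pairs after the `phinet` prefix. The following is a minimal sketch of how such a name could be parsed and rebuilt. `parse_phinet_name` and `build_phinet_name` are hypothetical helpers, not the repository's own parser, and the two-decimal formatting of `alpha` and `beta` is an assumption based on the example name above.

```python
def parse_phinet_name(model_name: str) -> dict:
    """Hypothetical parser: 'phinet_alpha_1.50_beta_0.75_t0_6_N_7' -> dict.

    Assumes the name is 'phinet' followed by alternating key/value tokens.
    """
    tokens = model_name.split("_")
    if tokens[0] != "phinet":
        raise ValueError(f"not a PhiNet model name: {model_name!r}")
    pairs = tokens[1:]
    if len(pairs) % 2 != 0:
        raise ValueError(f"unbalanced key/value tokens in {model_name!r}")
    config = {}
    for key, value in zip(pairs[0::2], pairs[1::2]):
        # Pure digit strings (e.g. '6') become ints; others (e.g. '1.50') floats.
        config[key] = int(value) if value.isdigit() else float(value)
    return config


def build_phinet_name(alpha: float, beta: float, t0: int, N: int) -> str:
    """Hypothetical inverse; assumes alpha/beta use two decimals, as in the example."""
    return f"phinet_alpha_{alpha:.2f}_beta_{beta:.2f}_t0_{t0}_N_{N}"


if __name__ == "__main__":
    print(parse_phinet_name("phinet_alpha_1.50_beta_0.75_t0_6_N_7"))
    # {'alpha': 1.5, 'beta': 0.75, 't0': 6, 'N': 7}
```

Which combinations of values correspond to trained or valid configurations is not stated here; the sketch only illustrates the naming pattern.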

Please note:

- To use the original CLAP encoder in the distillation setting, replace the model name with Cnn14;
- To reproduce the variants of PhiNet from the manuscript, refer to the hyperparameters listed in Table 1.

The command to evaluate the model varies slightly from one dataset to another. The commands for each dataset are listed below.

ESC-50:

python tinyclap.py hparams/distill_clap.yaml --experiment_name tinyCLAP_$MODEL_NAME --zs_eval True --esc_folder $PATH_TO_ESC

UrbanSound8K:

python tinyclap.py hparams/distill_clap.yaml --experiment_name tinyCLAP_$MODEL_NAME --zs_eval True --us8k_folder $PATH_TO_US8K

TUT17:

python tinyclap.py hparams/distill_clap.yaml --experiment_name tinyCLAP_$MODEL_NAME --zs_eval True --tut17_folder $PATH_TO_TUT17

You can download pretrained models from the tinyCLAP Hugging Face (HF) repository.

Note: The checkpoints contain only the student model, so the text encoder will be downloaded separately.

To run inference using the pretrained models, use:

python tinyclap.py hparams/distill_clap.yaml --pretrained_clap fpaissan/tinyCLAP/$MODEL_NAME.ckpt --zs_eval True --esc_folder $PATH_TO_ESC

This command automatically downloads the checkpoint if it is available in the zoo of pretrained models. Make sure to change the dataset configuration according to the dataset you are evaluating on. Please refer to the HF repo for a list of available tinyCLAP models.
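Because only the dataset flag changes between evaluations, the inference command can be generated per dataset. The following is a minimal sketch, assuming the dataset flags shown in the evaluation commands above and assuming each checkpoint is stored as `<MODEL_NAME>.ckpt` at the root of the `fpaissan/tinyCLAP` Hugging Face repository. `build_eval_command`, `split_hf_path`, and `download_checkpoint` are hypothetical helpers, and the `/data/...` paths are placeholders.

```python
from __future__ import annotations

import shlex

# Dataset-specific flags, as used by the evaluation commands in this README.
DATASET_FLAGS = {
    "esc50": "--esc_folder",
    "us8k": "--us8k_folder",
    "tut17": "--tut17_folder",
}


def split_hf_path(path: str) -> tuple[str, str]:
    """Hypothetical helper: 'owner/repo/file.ckpt' -> ('owner/repo', 'file.ckpt')."""
    owner, repo, filename = path.split("/", 2)
    return f"{owner}/{repo}", filename


def build_eval_command(model_name: str, dataset: str, dataset_path: str,
                       hparams: str = "hparams/distill_clap.yaml") -> list[str]:
    """Hypothetical helper: argv for zero-shot evaluation of a pretrained checkpoint."""
    return [
        "python", "tinyclap.py", hparams,
        "--pretrained_clap", f"fpaissan/tinyCLAP/{model_name}.ckpt",
        "--zs_eval", "True",
        DATASET_FLAGS[dataset], dataset_path,
    ]


def download_checkpoint(model_name: str) -> str:
    """Optional manual download; needs huggingface_hub and network access.

    Assumes the checkpoint file sits at the root of the HF repo (unverified).
    """
    from huggingface_hub import hf_hub_download
    repo_id, filename = split_hf_path(f"fpaissan/tinyCLAP/{model_name}.ckpt")
    return hf_hub_download(repo_id=repo_id, filename=filename)


if __name__ == "__main__":
    name = "phinet_alpha_1.50_beta_0.75_t0_6_N_7"
    for dataset in DATASET_FLAGS:
        # Placeholder dataset paths; replace them with your local folders.
        print(shlex.join(build_eval_command(name, dataset, f"/data/{dataset}")))
```

The script only prints the commands; `download_checkpoint` is not needed for evaluation, since the command itself fetches the checkpoint, but it can be useful for inspecting the `.ckpt` file locally.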

@article{paissan2023tinyclap,

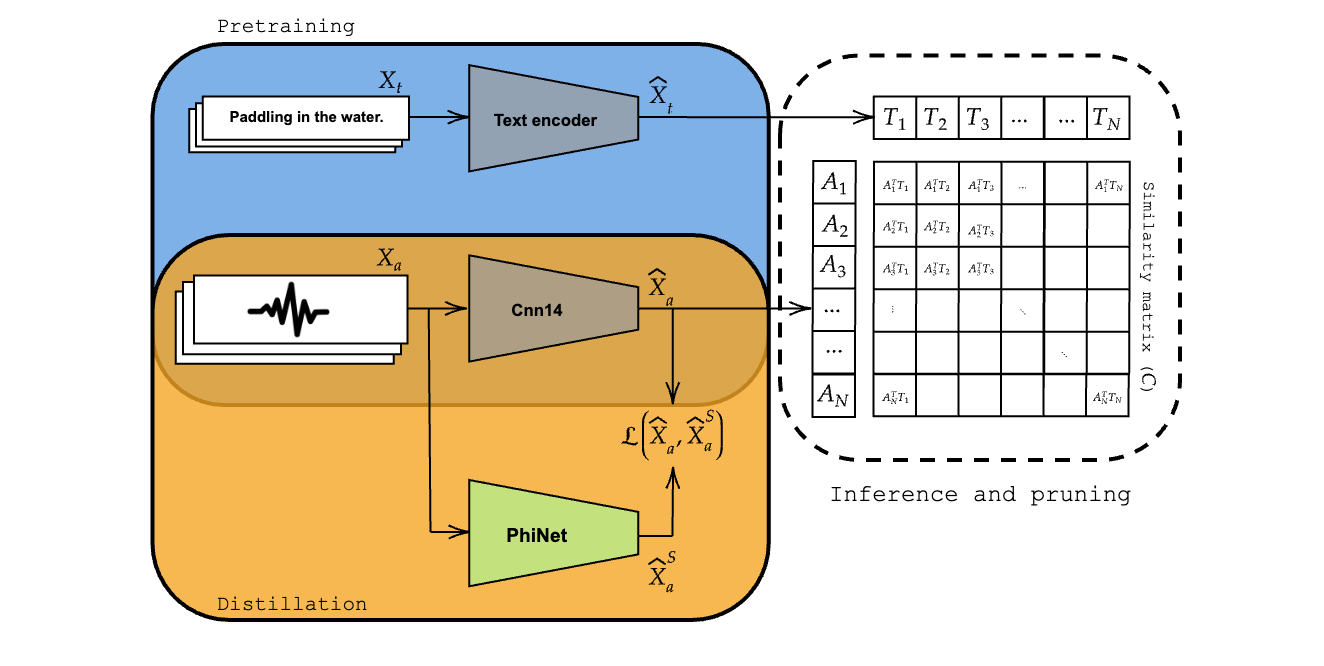

title={tinyCLAP: Distilling Constrastive Language-Audio Pretrained Models},

author={Paissan, Francesco and Farella, Elisabetta},

journal={arXiv preprint arXiv:2311.14517},

year={2023}

}

To promote reproducibility and follow-up research on the topic, we publicly release all code and pretrained weights under the Apache 2.0 License. Please be mindful that the Microsoft CLAP weights are released under a different license, the MS-Public License. This can affect the license of the BERT weights used during inference (which are automatically downloaded by our code).