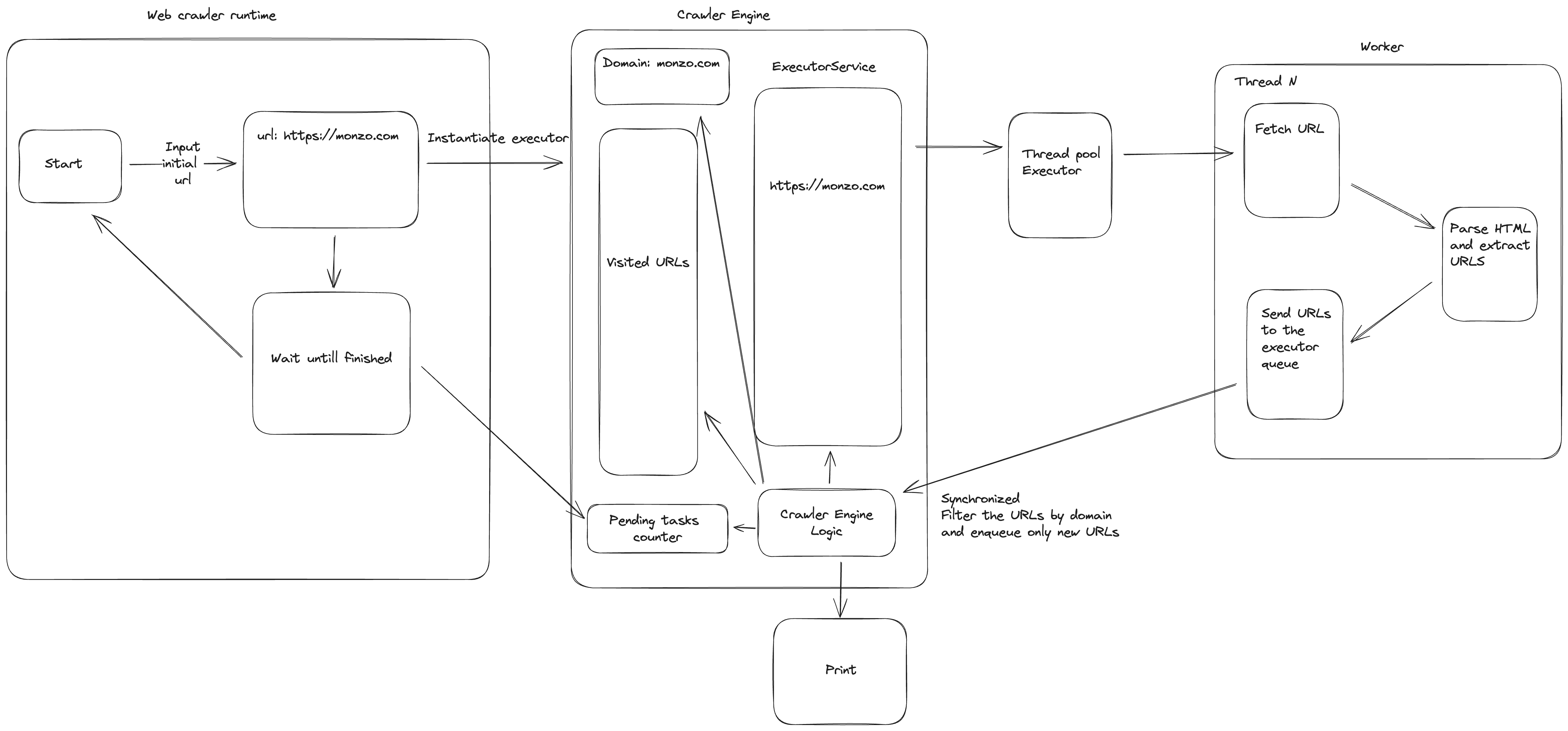

Starting from an initial URL, this web crawler fetches a web page and prints all the links it finds in it. It then enqueues and processes every URL that has not been visited yet and belongs to the same domain as the initial one.

We have three main components:

- A Web Crawler runtime that interacts with the user to get the initial URL, starts the engine and waits for the process to finish

- A Web Crawler Engine that enqueues the new URLs to be processed, prints the results and keeps track of the state of the whole process

- A Worker that fetches the page at a URL, parses it and extracts the links
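The interaction between the three components can be sketched roughly as follows. This is a hypothetical, simplified version, not the project's actual code. The `Engine` class, the use of a `Phaser` to detect when all work is done, and a Worker modelled as a plain `Function` from a URL to the hrefs on that page are all assumptions. The sketch also expects absolute URLs.

```java
import java.net.URI;
import java.util.List;
import java.util.Objects;
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Phaser;
import java.util.function.Function;

// Hypothetical sketch of the Engine/Worker split; names and structure are illustrative only.
final class Engine {
    private final Set<String> enqueued = ConcurrentHashMap.newKeySet(); // URLs already scheduled
    // Party #1 is the thread calling crawl(); each running task adds one party
    // (a Phaser supports at most 65535 registered parties, fine for a sketch).
    private final Phaser inFlight = new Phaser(1);
    private final String startUrl;
    private final String host;                            // only links on this host are followed
    private final Function<String, List<String>> worker;  // Worker role: URL -> hrefs found on that page
    private final ExecutorService pool;

    Engine(String startUrl, Function<String, List<String>> worker, ExecutorService pool) {
        this.startUrl = startUrl;
        this.host = Objects.requireNonNull(URI.create(startUrl).getHost(), "start URL must have a host");
        this.worker = worker;
        this.pool = pool;
    }

    /** Crawls from the start URL and blocks until no task is left running. */
    Set<String> crawl() {
        submit(startUrl);
        inFlight.arriveAndAwaitAdvance(); // returns once every task party has deregistered
        return enqueued;
    }

    private void submit(String url) {
        if (!enqueued.add(url)) {
            return; // visited (or already queued) before
        }
        inFlight.register(); // register before submitting so completion is never observed too early
        pool.submit(() -> {
            try {
                List<String> links = worker.apply(url);
                System.out.println(url + " -> " + links); // the Engine prints each page's results
                for (String link : links) {
                    if (sameHost(link)) {
                        submit(link); // children register before this task deregisters
                    }
                }
            } finally {
                inFlight.arriveAndDeregister(); // always runs, even if the worker throws
            }
        });
    }

    private boolean sameHost(String link) {
        try {
            // getHost() is null for mailto: and relative links, so they are not followed
            return host.equalsIgnoreCase(URI.create(link).getHost());
        } catch (IllegalArgumentException e) {
            return false; // malformed href: printed, but not followed
        }
    }
}
```

In the runtime, the pool could be `Executors.newVirtualThreadPerTaskExecutor()` (available since Java 21), and the worker function would fetch and parse the page. If you pass in a function backed by an in-memory map instead, you can test the engine without network access.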

This service requires Java 21 to run.

NOTE: if you don't want to install Java, you can build and run the application with Docker (see below).

If Java is not already installed on your machine, you can easily install it with SDKMAN!.

First, install SDKMAN! by running the following commands:

```shell
curl -s "https://get.sdkman.io" | bash
source "$HOME/.sdkman/bin/sdkman-init.sh"
```

Then install Java using the SDKMAN! `env` command:

```shell
sdk env install
# after the first installation, "sdk env" is enough
```

A Makefile is provided to simplify building and running the application.

To build and run the application, open a terminal and run:

```shell
make
# or: make run
```

If this does not work for any reason, try:

```shell
make jar
```

A Dockerfile is provided so you can build and run the application without installing Java.

Open a terminal in the root directory of the project and run the following commands:

```shell
docker build -t webcrawler .
docker run -it --rm webcrawler
```

Once started, the application asks for the initial URL.

If you don't enter anything, it defaults to https://www.google.com.

If no protocol is specified, https is used by default.

If you type `exit`, the application terminates.
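The input rules above could be implemented along these lines. This is a hypothetical sketch. The `StartUrlInput` class, treating end of input like `exit`, and detecting a missing protocol by looking for `://` are assumptions, and the runtime may handle these cases differently.

```java
import java.util.Optional;

// Hypothetical helper mirroring the input rules above; not the project's actual code.
final class StartUrlInput {
    static final String DEFAULT_URL = "https://www.google.com";

    /** Returns the URL to crawl, or an empty Optional when the user wants to quit. */
    static Optional<String> parse(String line) {
        if (line == null) {
            return Optional.empty(); // end of input (assumption): treat it like "exit"
        }
        String input = line.strip();
        if (input.equals("exit")) {
            return Optional.empty();
        }
        if (input.isEmpty()) {
            return Optional.of(DEFAULT_URL); // nothing entered: use the default
        }
        if (!input.contains("://")) {
            input = "https://" + input; // no protocol given: assume https
        }
        return Optional.of(input);
    }
}
```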

After you enter the initial URL, the application prints the URL of each inspected page, followed by the list of all the hrefs found in that page. This includes every kind of reference, including `mailto:` links.

Then only the URLs that have the same domain as the initial URL and have not been visited yet are inspected.

Once processing is complete, the application asks for a new URL.
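The extraction and filtering steps can be illustrated with a hypothetical `LinkExtractor`. It assumes that a simple regular expression is good enough to find `href` attributes; a real HTML parser is far more robust. It also assumes that relative links are resolved against the page URL with `java.net.URI.resolve` before the same-domain check. The project may do both steps differently.

```java
import java.net.URI;
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical, regex-based link handling; a sketch, not the project's parser.
final class LinkExtractor {
    // href="..." or href='...' (case-insensitive); group 2 is the attribute value
    private static final Pattern HREF =
            Pattern.compile("href\\s*=\\s*([\"'])(.*?)\\1", Pattern.CASE_INSENSITIVE);

    /** Every href on the page exactly as written: mailto:, #anchors, relative paths... */
    static List<String> hrefs(String html) {
        List<String> result = new ArrayList<>();
        Matcher m = HREF.matcher(html);
        while (m.find()) {
            result.add(m.group(2));
        }
        return result;
    }

    /** True if the href, resolved against the page URL, is an http(s) link on the same host. */
    static boolean followable(URI page, String href) {
        try {
            URI target = page.resolve(href); // absolute or opaque hrefs (mailto:) come back unchanged
            String scheme = target.getScheme();
            return ("http".equals(scheme) || "https".equals(scheme))
                    && page.getHost() != null
                    && page.getHost().equalsIgnoreCase(target.getHost());
        } catch (IllegalArgumentException e) {
            return false; // the href is not a valid URI
        }
    }
}
```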

First of all, I used Java because it is the language I am most comfortable with, and I thought a crawler would be a good use case for virtual threads, hence Java 21. Go, with goroutines and channels, could have been another good fit, but I don't have enough experience with it.

Everything is kept in memory, which works because the application runs as a single instance. A distributed implementation would need some external persistence for shared state such as the visited URLs.
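One way to prepare for that is to hide the visited-URL bookkeeping behind a small interface. The in-memory set could then be swapped for a shared store later. The `VisitedStore` interface below is hypothetical. What matters is that marking a URL is a single atomic "add if absent" operation. A shared store would need the same guarantee; for example, Redis `SADD` reports how many members were actually added.

```java
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;

// Hypothetical seam: the engine would depend on this interface instead of a concrete Set.
interface VisitedStore {
    /** Atomically marks the URL as visited; returns true only for the first caller. */
    boolean markVisited(String url);
}

// Single-instance implementation, equivalent to keeping everything in memory.
final class InMemoryVisitedStore implements VisitedStore {
    private final Set<String> visited = ConcurrentHashMap.newKeySet();

    @Override
    public boolean markVisited(String url) {
        return visited.add(url); // atomic check-then-add, no external lock needed
    }
}
```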

To avoid concurrency issues when processing the parsing results, I made the method that handles them `synchronized`.

This creates a bit of a bottleneck, since worker threads may end up waiting to acquire the lock.

This could be mitigated by enqueuing the results on a separate ExecutorService and by using more granular locks.
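Here is a sketch of the first mitigation, assuming the synchronized work is mostly printing. Workers hand each page's results to a single-threaded executor and return immediately. They never wait on a shared lock, and each page's output still appears as one uninterrupted block. `ResultPrinter` is a hypothetical name. This matters a bit more on Java 21, where a virtual thread that blocks inside a `synchronized` method can stay pinned to its carrier thread (JEP 444).

```java
import java.util.List;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.function.Consumer;

// Hypothetical alternative to a synchronized result handler.
final class ResultPrinter implements AutoCloseable {
    // One thread consumes results in submission order, so no lock is shared with workers.
    private final ExecutorService printer = Executors.newSingleThreadExecutor();
    private final Consumer<String> out;

    ResultPrinter(Consumer<String> out) {
        this.out = out; // e.g. System.out::println
    }

    /** Called from worker threads; only enqueues the work and returns immediately. */
    void publish(String pageUrl, List<String> hrefs) {
        printer.execute(() -> {
            out.accept(pageUrl);
            hrefs.forEach(h -> out.accept("  " + h));
        });
    }

    @Override
    public void close() {
        printer.close(); // ExecutorService.close() (Java 19+) waits for queued results
    }
}
```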

The `HttpClient` sends synchronous requests on the same threads as the ExecutorService. It could be worth using two separate pools (for example, one for fetching and one for parsing) to tune the concurrency more precisely. Some websites throttle requests, so we need to be careful about how many requests we send to the same domain.
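A per-domain limit could look like the sketch below. The `HostThrottle` class and the choice of one `Semaphore` per host are assumptions. Together they cap how many requests are in flight to the same host at once, however many threads the crawler runs.

```java
import java.net.URI;
import java.util.Map;
import java.util.Objects;
import java.util.concurrent.Callable;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.Semaphore;

// Hypothetical per-host limiter: at most maxPerHost requests to one host run concurrently.
final class HostThrottle {
    private final Map<String, Semaphore> permits = new ConcurrentHashMap<>();
    private final int maxPerHost;

    HostThrottle(int maxPerHost) {
        this.maxPerHost = maxPerHost;
    }

    /** Runs the request once a permit for the URL's host is available. */
    <T> T call(URI url, Callable<T> request) throws Exception {
        String host = Objects.requireNonNull(url.getHost(), "URL must have a host");
        Semaphore s = permits.computeIfAbsent(host, h -> new Semaphore(maxPerHost));
        s.acquire(); // blocks (cheaply, on a virtual thread) while the host is saturated
        try {
            return request.call();
        } finally {
            s.release();
        }
    }
}
```

A worker would wrap its `HttpClient.send(...)` call in `throttle.call(uri, () -> ...)`. Hosts that are not saturated are unaffected.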

Error handling could be made more granular and robust. For example, it would be useful to retry when fetching a web page fails. Also, if an exception is not handled correctly, the main loop could get stuck waiting for the engine to finish processing.
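A retry could be sketched as below. `Retry.withBackoff` is a hypothetical helper, and the number of attempts and the doubling delay are illustrative choices. Whatever the outcome, the caller should still tell the engine that the URL is finished, for example in a `finally` block. Otherwise the main loop can keep waiting forever.

```java
import java.time.Duration;
import java.util.concurrent.Callable;

// Hypothetical retry helper with exponential backoff.
final class Retry {
    static <T> T withBackoff(Callable<T> action, int maxAttempts, Duration initialDelay) throws Exception {
        if (maxAttempts < 1) {
            throw new IllegalArgumentException("maxAttempts must be at least 1");
        }
        Duration delay = initialDelay;
        for (int attempt = 1; ; attempt++) {
            try {
                return action.call();
            } catch (InterruptedException e) {
                throw e; // never retry after an interrupt
            } catch (Exception e) {
                if (attempt >= maxAttempts) {
                    throw e; // out of attempts: surface the last failure to the caller
                }
                Thread.sleep(delay.toMillis()); // wait before the next attempt
                delay = delay.multipliedBy(2);  // 1x, 2x, 4x, ... the initial delay
            }
        }
    }
}
```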

While parsing a page, the application collects every href it finds. Better logic could restrict extraction to specific kinds of URLs. URLs are also not normalized ("cleaned"), so the same URL with different query parameters is treated as a different URL, which can make processing longer.
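Here is a minimal normalization sketch. It assumes that dropping the query string and fragment and lower-casing the scheme and host is acceptable. That assumption does not always hold: some sites use query parameters for genuinely different pages (pagination, for example), so an allow-list of parameters may be a better compromise. `UrlNormalizer` is a hypothetical name.

```java
import java.net.URI;
import java.net.URISyntaxException;
import java.util.Locale;

// Hypothetical normalizer: URLs differing only by query, fragment or scheme/host case become equal.
final class UrlNormalizer {
    static String normalize(String url) throws URISyntaxException {
        URI u = new URI(url);
        if (u.getScheme() == null || u.getHost() == null) {
            return url; // relative, mailto: and other host-less URLs are returned unchanged
        }
        String path = (u.getPath() == null || u.getPath().isEmpty()) ? "/" : u.getPath();
        return new URI(
                u.getScheme().toLowerCase(Locale.ROOT),
                u.getUserInfo(),
                u.getHost().toLowerCase(Locale.ROOT),
                u.getPort(),  // -1 when absent, so no port is emitted
                path,
                null,         // drop the query string
                null          // drop the fragment
        ).toString();
    }
}
```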

All configuration, for example the number of concurrent threads, is hardcoded; it would be better to move it to a properties file or to environment variables.
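For example, the thread count could be read from an environment variable with a fallback default. The variable name `CRAWLER_MAX_CONCURRENCY` and the default of 10 are made up for this sketch.

```java
import java.util.Map;

// Hypothetical configuration lookup: environment variable first, then a default.
final class CrawlerConfig {
    final int maxConcurrentRequests;

    CrawlerConfig(Map<String, String> env) {
        this.maxConcurrentRequests = positiveInt(env, "CRAWLER_MAX_CONCURRENCY", 10);
    }

    static CrawlerConfig fromEnvironment() {
        return new CrawlerConfig(System.getenv());
    }

    // Missing, blank, non-numeric or non-positive values fall back to the default.
    private static int positiveInt(Map<String, String> env, String key, int defaultValue) {
        String raw = env.get(key);
        if (raw == null || raw.isBlank()) {
            return defaultValue;
        }
        try {
            int value = Integer.parseInt(raw.strip());
            return value > 0 ? value : defaultValue;
        } catch (NumberFormatException e) {
            return defaultValue;
        }
    }
}
```

Taking the environment as a `Map` parameter keeps the lookup easy to unit test, while production code would call `CrawlerConfig.fromEnvironment()`.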

I tried to cover as much code as possible with unit tests using JUnit and Mockito. End-to-end tests covering the whole application flow would be a useful addition.

There are no metrics or monitoring; a real production application would need them.