Implementation of the explainability method for the Transformer architecture proposed in Transformer Interpretability Beyond Attention Visualization.

We derive a closed-form relevance propagation rule for each layer type, which reduces computational cost by 38% and incrementally improves performance. An analysis of the method and the results is available in our project report.
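
To give a sense of what a relevance propagation rule for a single layer looks like, here is a minimal sketch of the generic epsilon-LRP rule for a bias-free linear layer, written with NumPy. It is not the closed form derived in this project (see the project report for that); the function name `lrp_linear`, its parameters, and the toy values are hypothetical and chosen only for illustration.

```python
import numpy as np

def lrp_linear(x, weight, relevance_out, eps=1e-9):
    """Generic epsilon-LRP for a bias-free linear layer z = x @ weight.

    Illustrative only: this is the textbook rule, not this repository's closed form.
    """
    z = x @ weight                                   # pre-activations, shape (out,)
    stabilizer = eps * np.where(z >= 0, 1.0, -1.0)   # keeps the division away from zero
    s = relevance_out / (z + stabilizer)             # relevance per unit of activation
    return x * (weight @ s)                          # relevance per input, shape (in,)

# Toy example (hypothetical values).
x = np.array([1.0, 2.0, -1.0])
weight = np.array([[0.5, -1.0],
                   [1.5, 0.5],
                   [-0.5, 2.0]])
relevance_out = np.array([1.0, 0.0])   # all relevance on the first output unit

relevance_in = lrp_linear(x, weight, relevance_out)
print(relevance_in)         # per-input relevance scores
print(relevance_in.sum())   # approximately equal to relevance_out.sum()
```

Without a bias term and with a small `eps`, the rule is approximately conservative: the relevance assigned to the inputs sums to the relevance received from the outputs.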

This project was part of DD2412 - Deep Learning, Advanced at KTH.

Please refer to the ViT or BERT folder to run the different experiments.

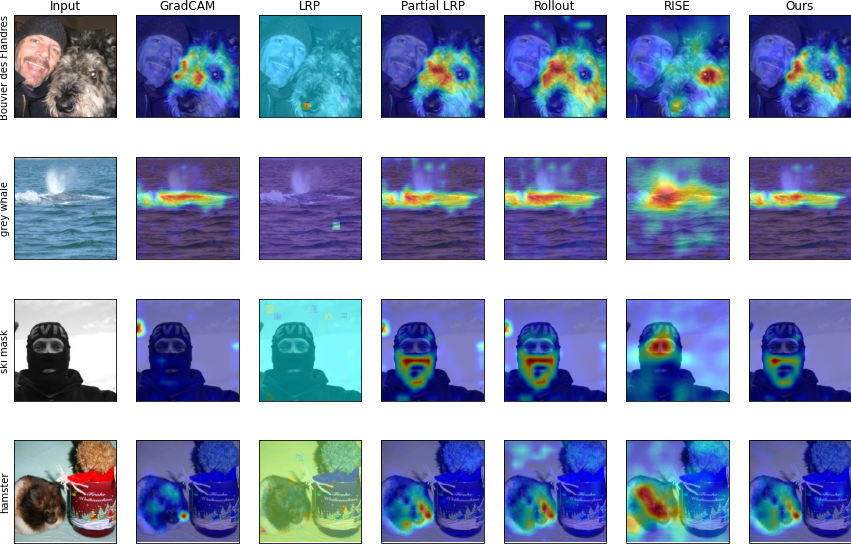

Example of saliency maps generated to explain the ViT's prediction. For further examples and quantitative results, refer to our project report.