This project is Project 1 (Navigation) of Udacity's Deep Reinforcement Learning Nanodegree. The model was trained on a 2017 MacBook Air with 8 GB of RAM and an Intel Core i5 processor.

The project involves an agent whose task is to collect as many yellow bananas as possible while avoiding the blue ones. The environment is built with Unity and is available in Unity ML Agents. The agent receives a reward of +1 for collecting a yellow banana and a reward (or punishment) of -1 for collecting a blue banana.

The state space has 37 dimensions and the agent can perform 4 different actions:

0 - move forward

1 - move backward

2 - turn left

3 - turn right

The agent's task is episodic and is considered solved when the agent achieves an average score of at least +13 over 100 consecutive episodes.
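The solve criterion above can be checked with a rolling window over the episode scores. This is a minimal sketch, not the repository's code: the function name `episodes_to_solve` and the constants are hypothetical, and it assumes the criterion is the mean score over the last 100 episodes.

```python
from collections import deque

SOLVE_SCORE = 13.0  # target average score (from the task description)
WINDOW = 100        # number of consecutive episodes averaged


def episodes_to_solve(scores, target=SOLVE_SCORE, window=WINDOW):
    """Return the 1-based episode at which the mean of the last `window`
    scores first reaches `target`, or None if that never happens."""
    recent = deque(maxlen=window)  # keeps only the most recent scores
    for episode, score in enumerate(scores, start=1):
        recent.append(score)
        if len(recent) == window and sum(recent) / window >= target:
            return episode
    return None
```

During training, the same check can be applied after each episode to decide when to stop and save the weights.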

For this task I used a Deep Q-Network that takes the current 37-dimensional state as input and passes it through two (2) fully connected hidden layers with ReLU activation, followed by an output layer that gives the action-values for all possible actions.

The thing I truly loved is how the agent recognizes when it is stuck and chooses the move backward action to get out of that state ❤️.

- Clone the repository:

user@programmer:~$ git clone https://github.com/frankhart2018/banana-collecting-agent

- Install the requirements:

user@programmer:~$ pip install -r requirements.txt

- Download the Unity environment for your OS:

- Linux: click here

- MacOS: click here

- Windows (32 bit): click here

- Windows (64 bit): click here

- Unzip the downloaded environment file.

- Update the banana app location for your OS in the indicated places.

- If you prefer using Jupyter Notebook, launch a notebook instance:

user@programmer:~$ jupyter-notebook

➡️ For re-training the agent use Banana Collecting Agent.ipynb

➡️ For testing the agent use Banana Agent Tester.ipynb

- If you prefer to run a Python script instead:

➡️ For re-training the agent type:

user@programmer:~$ python train.py

➡️ For testing the agent use:

user@programmer:~$ python test.py

- Unity ML Agents

- PyTorch

- NumPy

- Matplotlib

- Multi Layered Perceptron.

- Deep Q-Network. To learn more about this algorithm you can read the original paper by DeepMind: Human-level control through deep reinforcement learning

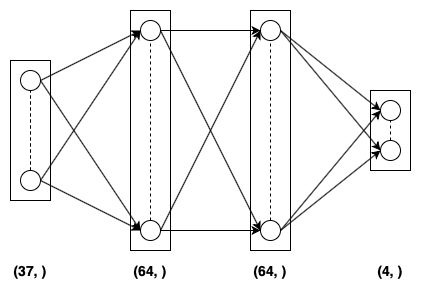

The Q-Network has three dense (fully connected) layers. The first two layers have 64 nodes each and use the ReLU activation function. The final (output) layer has 4 nodes and uses a linear activation (that is, no activation at all). The network takes the 37-dimensional current state as input and outputs 4 action-values, one for each action the agent can take.
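The architecture described above could be written in PyTorch roughly as follows. This is a sketch under stated assumptions: the class name `QNetwork` and its argument names are illustrative and are not taken from the repository; only the layer sizes (37, 64, 64, 4) and the ReLU activations come from the description.

```python
import torch
import torch.nn as nn


class QNetwork(nn.Module):
    """Maps a state vector to one action-value per action (illustrative)."""

    def __init__(self, state_size=37, action_size=4, hidden_size=64):
        super().__init__()
        self.layers = nn.Sequential(
            nn.Linear(state_size, hidden_size),   # first hidden layer
            nn.ReLU(),
            nn.Linear(hidden_size, hidden_size),  # second hidden layer
            nn.ReLU(),
            nn.Linear(hidden_size, action_size),  # linear output layer
        )

    def forward(self, state):
        return self.layers(state)


# Usage: a batch of 8 dummy states yields an 8 x 4 tensor of action-values.
net = QNetwork()
q_values = net(torch.zeros(8, 37))
greedy_actions = q_values.argmax(dim=1)  # index of the best action per state
```

The greedy action for a state is the index of the largest output, which corresponds to the action numbering 0 to 3 listed earlier.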

The network is trained with the Adam optimizer, using Mean Squared Error (MSE) as the loss function.
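To make the loss concrete, here is a small plain-Python sketch of the quantity the MSE is typically computed against in DQN: the one-step target built from the reward and the discounted best next action-value. The function names are hypothetical, and the assumption that the next-state values come from the target network follows the standard DQN setup rather than the repository's code.

```python
GAMMA = 0.99  # discount factor (value taken from the hyperparameter table)


def td_target(reward, next_q_values, done, gamma=GAMMA):
    """One-step DQN target: r + gamma * max_a' Q_target(s', a').
    For a terminal transition the target is just the reward."""
    if done:
        return reward
    return reward + gamma * max(next_q_values)


def mse_loss(predictions, targets):
    """Mean squared error between predicted Q(s, a) values and targets."""
    return sum((p - t) ** 2 for p, t in zip(predictions, targets)) / len(predictions)


# Example: reward +1 for a yellow banana, best next action-value of 2.0.
target = td_target(1.0, [0.5, 2.0, -1.0, 0.0], done=False)
loss = mse_loss([2.5], [target])
```

In a batched PyTorch implementation the same computation would run over all 64 sampled transitions at once.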

The following image provides a pictorial representation of the Q-Network model:

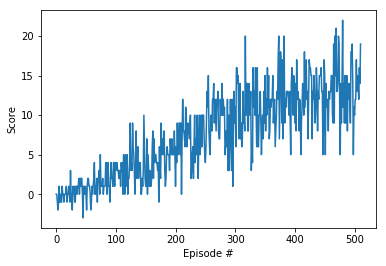

The following image plots the score against the episode number:

| Hyperparameter | Value | Description |
|---|---|---|
| Buffer size | 100000 | Maximum size of the replay buffer |
| Batch size | 64 | Batch size for sampling from replay buffer |
| Gamma (γ) | 0.99 | Discount factor for calculating return |
| Tau (τ) | 0.001 | Hyperparameter for soft update of target parameters |
| Learning Rate (α) | 0.0005 | Learning rate for the neural networks |
| Update Every (C) | 4 | Number of time steps after which soft update is performed |
| Epsilon (ε) | 1.0 | For epsilon-greedy action selection |
| Epsilon decay rate | 0.995 | Rate by which epsilon decays after every episode |
| Epsilon minimum | 0.01 | The minimum value of epsilon |
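The sketch below illustrates how three of these hyperparameters are commonly used: epsilon-greedy action selection, the per-episode epsilon decay, and the soft update of target parameters with τ. It is written in plain Python for readability; the function names are hypothetical, parameters are modelled as flat lists of floats, and the exact update rule in the repository may differ.

```python
import random

EPS_START, EPS_DECAY, EPS_MIN = 1.0, 0.995, 0.01  # values from the table
TAU = 0.001                                       # soft update rate


def select_action(q_values, epsilon, rng=random):
    """Epsilon-greedy: random action with probability epsilon, else greedy."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))
    return max(range(len(q_values)), key=lambda a: q_values[a])


def decay_epsilon(epsilon, decay=EPS_DECAY, minimum=EPS_MIN):
    """Multiply epsilon by the decay rate, never going below the minimum."""
    return max(minimum, epsilon * decay)


def soft_update(local_params, target_params, tau=TAU):
    """Blend parameters: target <- tau * local + (1 - tau) * target."""
    return [tau * l + (1.0 - tau) * t for l, t in zip(local_params, target_params)]


# Epsilon after each of the first 2000 episodes, starting from EPS_START.
epsilon = EPS_START
schedule = []
for _ in range(2000):
    epsilon = decay_epsilon(epsilon)
    schedule.append(epsilon)
```

With a decay rate of 0.995 the exploration rate falls slowly, so the agent still explores substantially during the first few hundred episodes before settling at the minimum.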