Databricks Terraform Provider

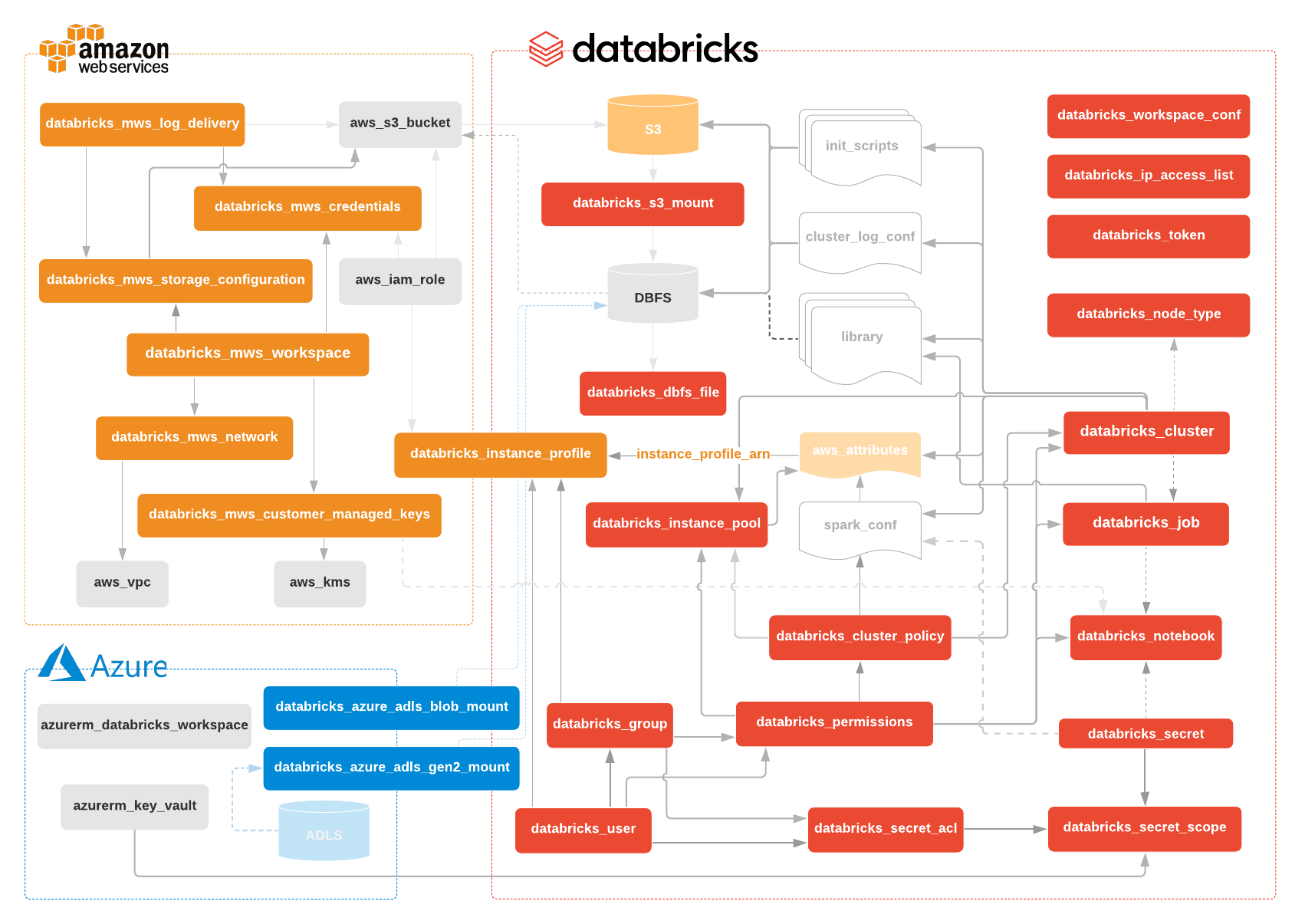

If you use Terraform 0.13 or newer, refer to the instructions on the registry page. If you use an older version of Terraform or want to build the provider from source, refer to the contributing guidelines page.

    terraform {
      required_providers {
        databricks = {
          source  = "databrickslabs/databricks"
          version = "0.3.3"
        }
      }
    }

Then create a small sample file named main.tf with approximately the following contents. Replace <your PAT token> with a newly created personal access token (PAT).

    provider "databricks" {
      host  = "https://abc-defg-024.cloud.databricks.com/"
      token = "<your PAT token>"
    }

    # The user that the provider authenticates as
    data "databricks_current_user" "me" {}

    data "databricks_spark_version" "latest" {}

    data "databricks_node_type" "smallest" {
      local_disk = true
    }

    # Python notebook created in the current user's home folder
    resource "databricks_notebook" "this" {
      path     = "${data.databricks_current_user.me.home}/Terraform"
      language = "PYTHON"
      content_base64 = base64encode(<<-EOT
        # created from ${abspath(path.module)}
        display(spark.range(10))
        EOT
      )
    }

    # Job that runs the notebook on a new single-worker cluster
    resource "databricks_job" "this" {
      name = "Terraform Demo (${data.databricks_current_user.me.alphanumeric})"

      new_cluster {
        num_workers   = 1
        spark_version = data.databricks_spark_version.latest.id
        node_type_id  = data.databricks_node_type.smallest.id
      }

      notebook_task {
        notebook_path = databricks_notebook.this.path
      }

      email_notifications {}
    }

    output "notebook_url" {
      value = databricks_notebook.this.url
    }

    output "job_url" {
      value = databricks_job.this.url
    }

Then run terraform init followed by terraform apply to apply the HCL code to your Databricks workspace.
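
The sample main.tf writes the PAT token directly into the provider block, which is convenient for a first run but easy to commit to version control by accident. One possible alternative, shown here as a minimal sketch, is to pass the host and token in as input variables. The variable names databricks_host and databricks_token are hypothetical and chosen only for illustration; the provider block below would replace the one in the sample, not sit alongside it.

```hcl
# Hypothetical variable names; neither appears in the sample main.tf.
variable "databricks_host" {
  type        = string
  description = "Workspace URL, e.g. https://abc-defg-024.cloud.databricks.com/"
}

variable "databricks_token" {
  type        = string
  description = "Personal access token used to authenticate"
  sensitive   = true # needs Terraform 0.14 or newer; remove this line on 0.13
}

# Replaces the provider block with hardcoded values from the sample.
provider "databricks" {
  host  = var.databricks_host
  token = var.databricks_token
}
```

With this layout the values can be supplied at run time, for example through the TF_VAR_databricks_host and TF_VAR_databricks_token environment variables, so the token never appears in main.tf. The provider can also read the DATABRICKS_HOST and DATABRICKS_TOKEN environment variables when host and token are omitted from the provider block.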

Project Support

Important: Projects in the databrickslabs GitHub account, including the Databricks Terraform Provider, are not formally supported by Databricks. They are maintained by Databricks Field teams and provided as-is. There is no service level agreement (SLA). Databricks makes no guarantees of any kind. If you discover an issue with the provider, please file a GitHub Issue on the repo, and it will be reviewed by project maintainers as time permits.