This document describes the processes and considerations for anyone who wants to implement Red Hat Platform 10 Director or TripleO ("OpenStack-On-OpenStack") together with the Juniper Contrail Networking plugin for Neutron to achieve a scalable network for an OpenStack installation. With Juniper Contrail Networking, your OpenStack installation can easily grow beyond the 4K limit of traditional VLANs, and OpenStack gains networking options such as service chaining. These options are not part of the original OpenStack VIM design but are demanded by telcos who follow the ETSI NFV specifications.

This document is intended to be used as a blueprint to help those who:

- Have an OpenStack background but are not that familiar with Contrail and the changes it causes in a combined installation.

- Have a moderate Contrail Networking background and need to understand the process for integrating it into a Red Hat Platform 10 Director environment to have a combined solution.

The examples in this document are intended to enable you to create a full production grade environment.

Much effort has been put into providing practical advice to obtain the highest performance and resiliency from the suggested designs. These designs are all validated. We have also included the steps taken, and outputs of the various commands, to enable you to verify your own results.

Good luck with your installation.

Before you install anything: Make a Plan!

Please review the information below for a successful installation of your OpenStack Fabric with Contrail Networking enabled.

The Contrail Networking functions are usually split into three different entities that together provide the Contrail Neutron plugin function used by OpenStack:

- Controller (in TripleO installation, called contrail-controller)

- Analytics Node (in TripleO installation, called contrail-analytics)

- Analytics DB Node (in TripleO installation, called contrail-analytics-database)

Note: In the TripleO documentation, a “Controller” refers to a fourth instance, which contains the OpenStack functions that use the three plugin entities. Don’t confuse the OpenStack Controller, which is the controller for the entire VIM, with the Contrail Controller, which is the SDN controller behind the Neutron networking plugin.

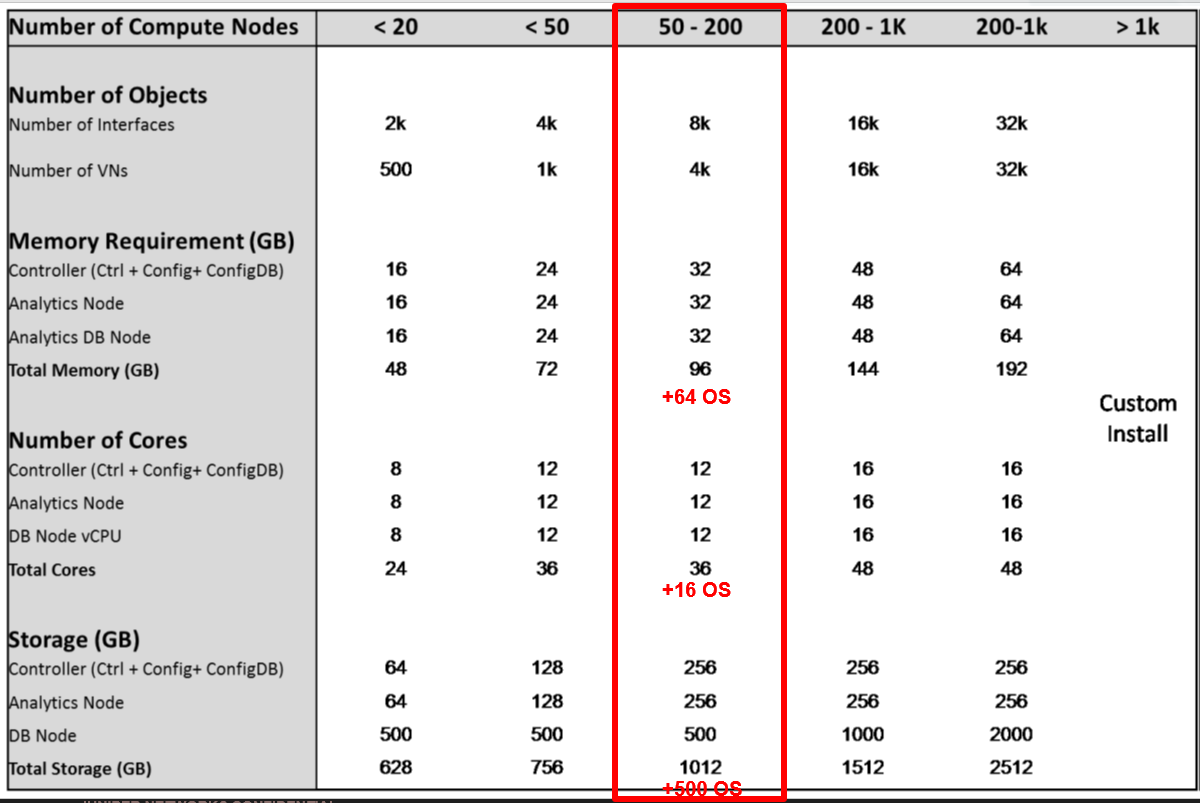

Consult the following table when you set up the fabric to size the Contrail entities according to the number of Compute nodes they need to manage:

The redundancy scheme of Contrail is similar to that of OpenStack itself: 2*N + 1 nodes are needed to tolerate the loss of N nodes. For real high availability (HA) systems you must deploy a minimum of three copies of every entity, so that the remaining nodes still form a quorum (and can elect a master) when one node is lost.
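The 2*N + 1 rule is plain majority-quorum arithmetic. As an illustration (our own sketch, not Contrail or TripleO code), the following Python helpers compute the quorum size and the number of failures a cluster of a given size survives. They show why two nodes tolerate no failure at all and why a fourth node adds no tolerance over three.

```python
def quorum_size(nodes: int) -> int:
    """Smallest strict majority of a cluster with `nodes` members."""
    return nodes // 2 + 1


def tolerated_failures(nodes: int) -> int:
    """Members that can fail while the survivors still form a majority."""
    return (nodes - 1) // 2


def nodes_for_failures(failures: int) -> int:
    """Cluster size needed to survive `failures` lost members (2*N + 1)."""
    return 2 * failures + 1


if __name__ == "__main__":
    for n in range(1, 6):
        print(f"{n} node(s): quorum={quorum_size(n)}, "
              f"survives {tolerated_failures(n)} failure(s)")
```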

Depending on the hardware capacity of your servers, you may group these functions together (in form of VMs) so you can install:

- Contrail Controller

- Contrail Analytics

- Contrail Analytics Database

all together on a single physical node. However, for HA you must have three of those physical nodes with the same functions installed.

Note: Installing and operating any of the three entities/functions more than once on the SAME PHYSICAL server will result in a non-resilient installation and will not provide the planned HA.
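You can check a placement plan for this mistake before rollout. The sketch below is a hypothetical helper, and the VM and host names are made up. It flags any Contrail function that is placed more than once on the same physical host.

```python
from collections import defaultdict

FUNCTIONS = ("contrail-controller", "contrail-analytics",
             "contrail-analytics-database")

# Hypothetical plan: one VM per function on each of three physical hosts.
GOOD_PLAN = {f"{fn}-{i}": (fn, f"host-{i}")
             for fn in FUNCTIONS for i in (1, 2, 3)}

# Same plan, but the second controller VM was put on host-1 by mistake.
BAD_PLAN = {**GOOD_PLAN,
            "contrail-controller-2": ("contrail-controller", "host-1")}


def colocated_duplicates(plan):
    """Return {(function, host): [vm, ...]} for functions placed more than
    once on the same physical host; an empty dict means the plan is OK."""
    seen = defaultdict(list)
    for vm, (function, host) in plan.items():
        seen[(function, host)].append(vm)
    return {key: vms for key, vms in seen.items() if len(vms) > 1}


if __name__ == "__main__":
    print(colocated_duplicates(GOOD_PLAN))   # {}
    print(colocated_duplicates(BAD_PLAN))
```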

The following rules apply to the installation of the four needed Control instances and the various compute node flavours:

| Function | Supported as VM | Supported as Bare-Metal |
|---|---|---|
| OpenStack Controller | No | Yes |
| Contrail Controller | Yes | Yes |
| Contrail Analytics | Yes | Yes |
| Contrail Analytics DB | Yes | Yes |
| Compute Node | No | Yes |
| Compute Node w. DPDK | No | Yes |
| Contrail TSN Node | Yes | Yes |
| CEPH Storage Nodes | No | Yes |
| RH Director (undercloud) | Yes | Yes |

The “not supported” statement for Compute nodes as VMs in the table above must be respected: Juniper does not support this option! The reason is that this setup requires nested hypervisor support, which generally results in unpredictable performance.

TripleO has the ability to automatically install all functions on bare-metal hosts. Keep in mind that only one function per bare-metal host will be installed and supported.

Note that if you intend to use VMs, TripleO (in typical cases) does not help you install the host OS onto the server, set up the KVM hypervisor, configure the networking interfaces and bridges for the VMs, or create and connect the VMs. You must perform these steps manually (for now), but you only need to set up blank VMs on those hosts. TripleO can then treat the prepared blank VMs the same way as bare-metal hosts and automatically install the guest OS together with the function the VM implements. Since you will typically do this for only three to four hosts with Control functions, this should not be a big deal, but you need to include it in your planning.

Note: The term “bare-metal” is used in different contexts to mean different things:

- In the OpenStack community, it typically is connected to a host that is installed and maintained through OpenStack Ironic.

- In the Contrail community, it refers to one of the three ways to connect servers that have no Contrail vRouter to the overlay network through a top-of-rack switch with VXLAN gateway support. This is described in Using Contrail with OVSDB in Top-of-Rack Switches, which also explains the function of a Contrail TSN node.

This document uses “bare-metal” to refer to the first option above, and “Contrail bare-metal” to refer to the second option.

Note: The TripleO undercloud VM should be on a separate node for production environments as it also serves as an independent entity to perform upgrades.

Inside the data center fabric, Contrail uses an overlay technology to abstract the network traffic of the VMs from the physical network. Traditionally, VLANs are used to segment traffic into virtual networks inside a single OpenStack project/tenant, and again to isolate the projects/tenants from each other. This presents scale issues. It also requires OpenStack to integrate (via a plugin) with the physical switches in the data center fabric to program the VLAN setup. In short, Contrail removes the need for VLANs to isolate VM traffic. For further implementation details, see Contrail Architecture.

Please note that Director-10 has a limitation wherein all the overcloud networks must be stretched at Layer 2 to all the overcloud nodes. If the overcloud nodes are physical servers that are present in different racks/subnets of an IP fabric, then you’ll have to first stretch all the overcloud networks to the physical servers. One way of doing this is using EVPN. If you have a traditional data center topology (non IP fabric), then you can extend VLANs across the physical compute nodes to extend all the overcloud networks.

Deploying an overcloud using TripleO/Director across multiple subnets is an upstream feature and a work-in-progress at the time of writing this document. Upstream developers (mostly from Red Hat) are driving this effort. You can check the status of this feature on its blueprint page.

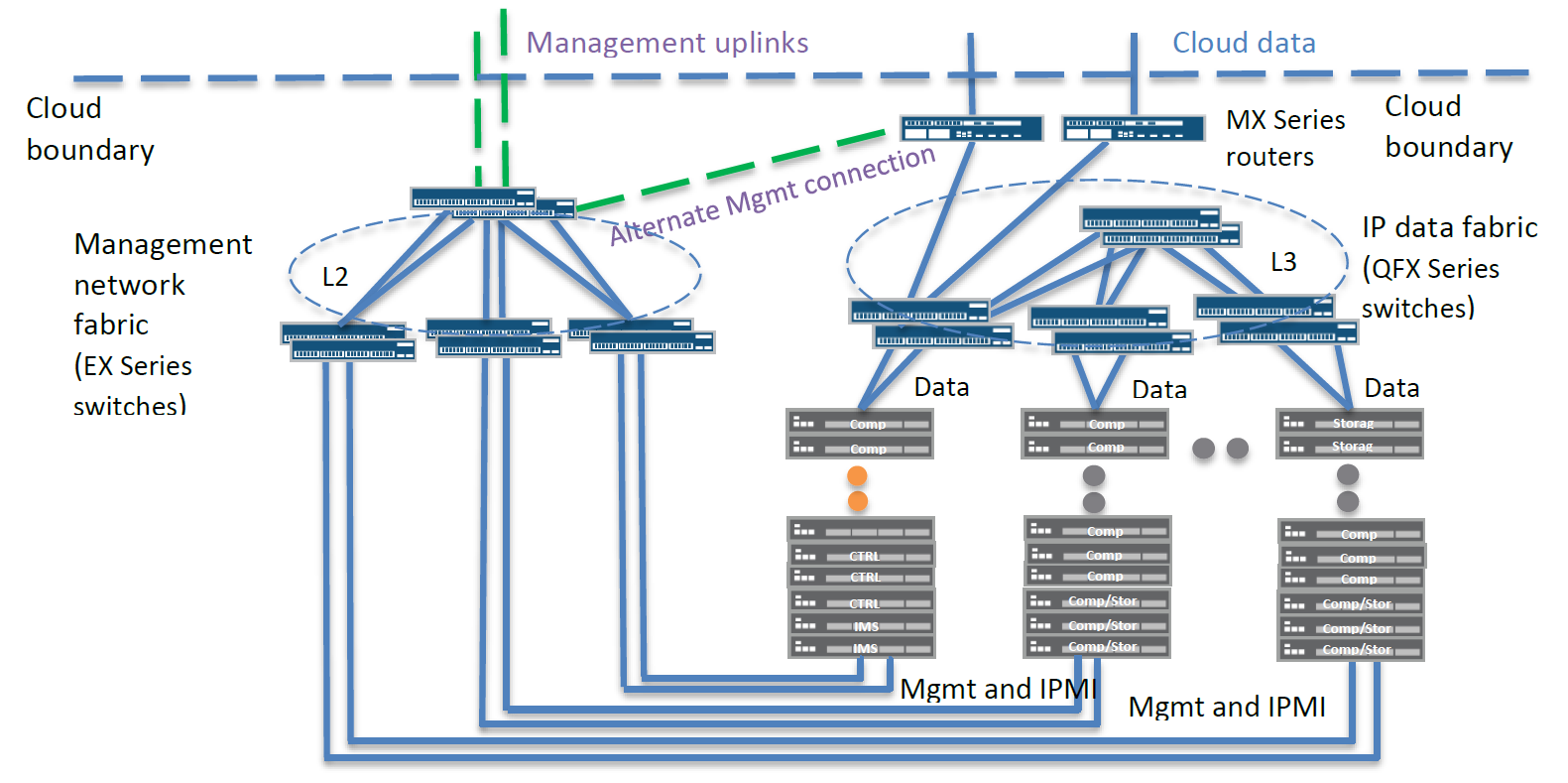

The diagram below illustrates a data center network design where the fabric is Layer 3 from the Compute nodes (attached to the leaf switches) to the spine layer (between the leaf layer and the DC router), and finally to the DC router that connects the fabric to the WAN. As mentioned above, this design still requires Layer 2 connectivity among the leaf devices to stretch the OpenStack Internal API network across them. If the fabric is built from Juniper leaf and spine switches, you might also consider options such as Juniper Virtual Chassis Fabric, Juniper Fusion DC, or Juniper IP fabric. Please contact Juniper if you are unsure which option would be the best design for you.

You can remove and redesign the DC fabric later to a full Layer 3 fabric when appropriate.

As part of the network planning and topology design process, consider the following guidelines:

- Do NOT change the IP address range of the provisioning network 192.0.2.0/24. It’s not only the undercloud.conf file that will need to be changed; you also need to adapt various Heat templates later, which increases the risk of error.

- All Controller nodes and entities must be in the same Layer 2 network. This is not expected to change, even in future designs where TripleO intends to support multiple subnets between racks towards the upstream network. The reason is that the API endpoints are made redundant by sharing a single virtual IP address (using VRRP) towards the outside and inside networks, and this is set up automatically during the installation. If those entities were segmented into different subnets, the physical underlay network would have to monitor the health of those entities and change the routing dynamically in case of failure.

- Carefully choose the overlay tunnel transport protocol from the data center router towards the Compute nodes:

  - Between Compute nodes (for east-west traffic), MPLSoGRE, MPLSoUDP, and VXLAN are supported and can be changed on the fly at any time.

  - For north-south traffic between the DC router and Compute nodes, in the typical case (excluding Contrail bare-metal support for the moment), you must choose between MPLSoGRE and MPLSoUDP (you can change this later, but not on the fly).

  - If the DC router is not a Juniper device, it likely does not support MPLSoUDP, and you will need to use MPLSoGRE instead.

  - If the DC router is a Juniper MX Series device, we recommend at least Junos OS Release 17.2R1 with MPLSoUDP as the transport. This has the additional advantage that you can use multipath inside your data center fabric and 802.3ad link bundles to distribute traffic among the bundled links. MPLSoGRE packets carry no Layer 4 header, so they don't provide enough entropy to fully utilize the links. Note that regardless of which transport protocol you choose on a Juniper MX Series device, you must have a Trio-based PFE (and enable tunneling on the FPC/PIC).
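To illustrate the entropy argument, the following Python sketch uses a simplified hash loosely modeled on the `layer3+4` transmit hash policy described in the Linux kernel bonding documentation. It is not the hash used by Junos or by any particular switch. The tunnel endpoint addresses and the UDP destination port are assumptions. With MPLSoGRE, all flows between one Compute node and the DC router share the same outer header and therefore land on the same link. With MPLSoUDP, the sender varies the outer source port per inner flow, as RFC 7510 recommends, which spreads flows across both links of a two-link bundle.

```python
import ipaddress

MPLS_UDP_DST_PORT = 6635   # RFC 7510 MPLS-in-UDP port; assumed for this sketch


def layer34_hash(src_ip, dst_ip, src_port=None, dst_port=None):
    """Simplified Layer 3+4 hash, loosely modeled on the Linux bonding
    'layer3+4' formula. Ports are mixed in only when the outer header
    has them (UDP); GRE packets contribute IP addresses only."""
    h = 0
    if src_port is not None and dst_port is not None:
        h = (src_port << 16) | dst_port
    h ^= int(ipaddress.ip_address(src_ip)) ^ int(ipaddress.ip_address(dst_ip))
    h ^= h >> 16
    h ^= h >> 8
    return h


def links_used(hashes, link_count):
    """Set of member links (0..link_count-1) selected by the given hashes."""
    return {h % link_count for h in hashes}


COMPUTE, ROUTER = "10.0.0.20", "10.0.0.1"   # hypothetical tunnel endpoints
FLOWS = range(100)                           # 100 different VM flows

# MPLSoGRE: every flow has the same outer header, hence the same hash.
gre = links_used((layer34_hash(COMPUTE, ROUTER) for _ in FLOWS), 2)

# MPLSoUDP: outer UDP source port varies per inner flow
# (modeled here as 49152 + flow index).
udp = links_used((layer34_hash(COMPUTE, ROUTER, 49152 + f, MPLS_UDP_DST_PORT)
                  for f in FLOWS), 2)

if __name__ == "__main__":
    print("MPLSoGRE uses links:", sorted(gre))
    print("MPLSoUDP uses links:", sorted(udp))
```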

Below is a generic example of the resulting networking design.

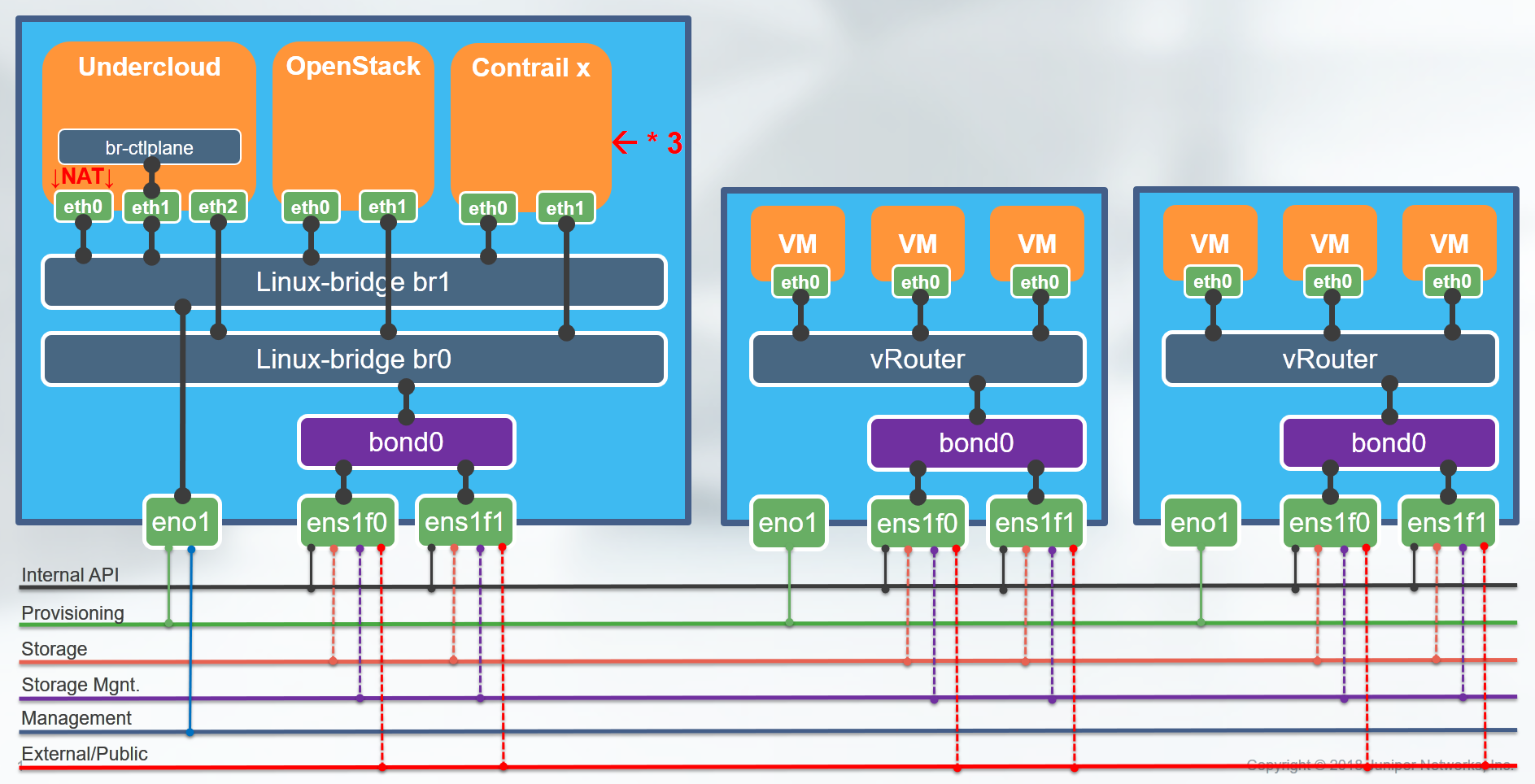

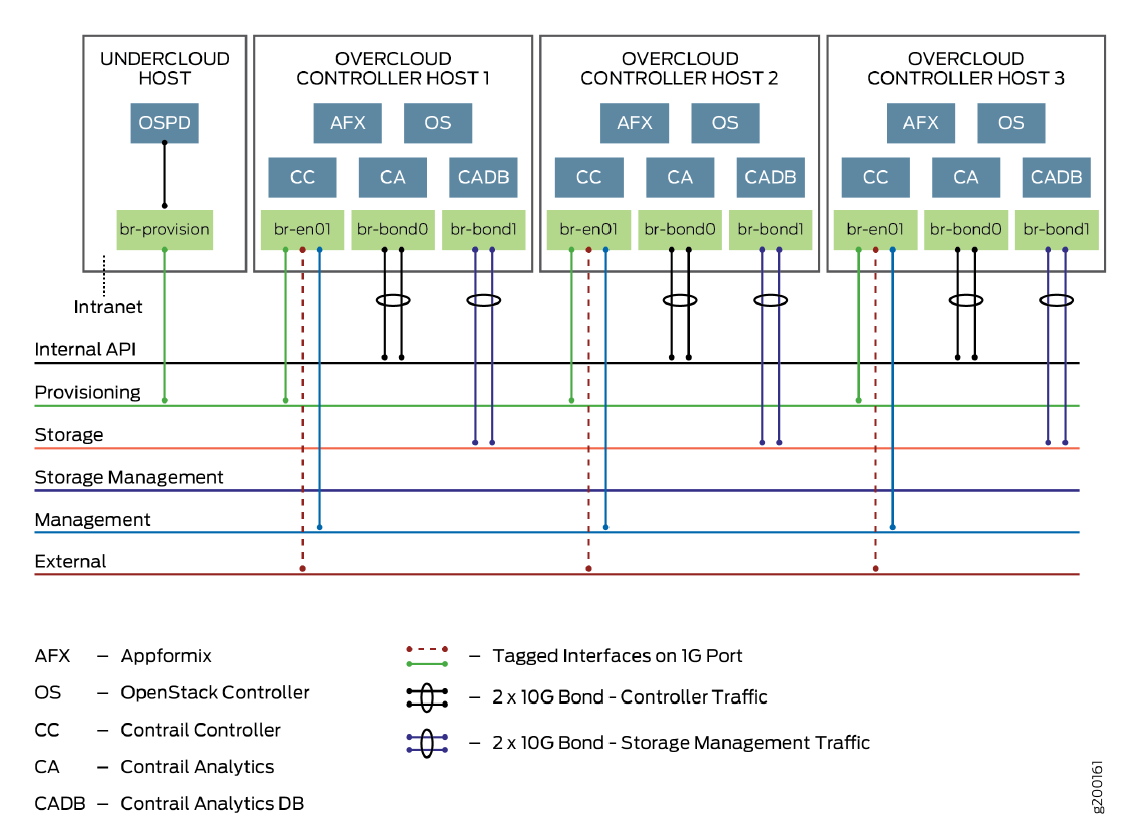

First, the Controller entities:

Note: Appformix is an optionally integrated Juniper product for telemetry and cloud performance, monitoring/optimization. You can find more information here.

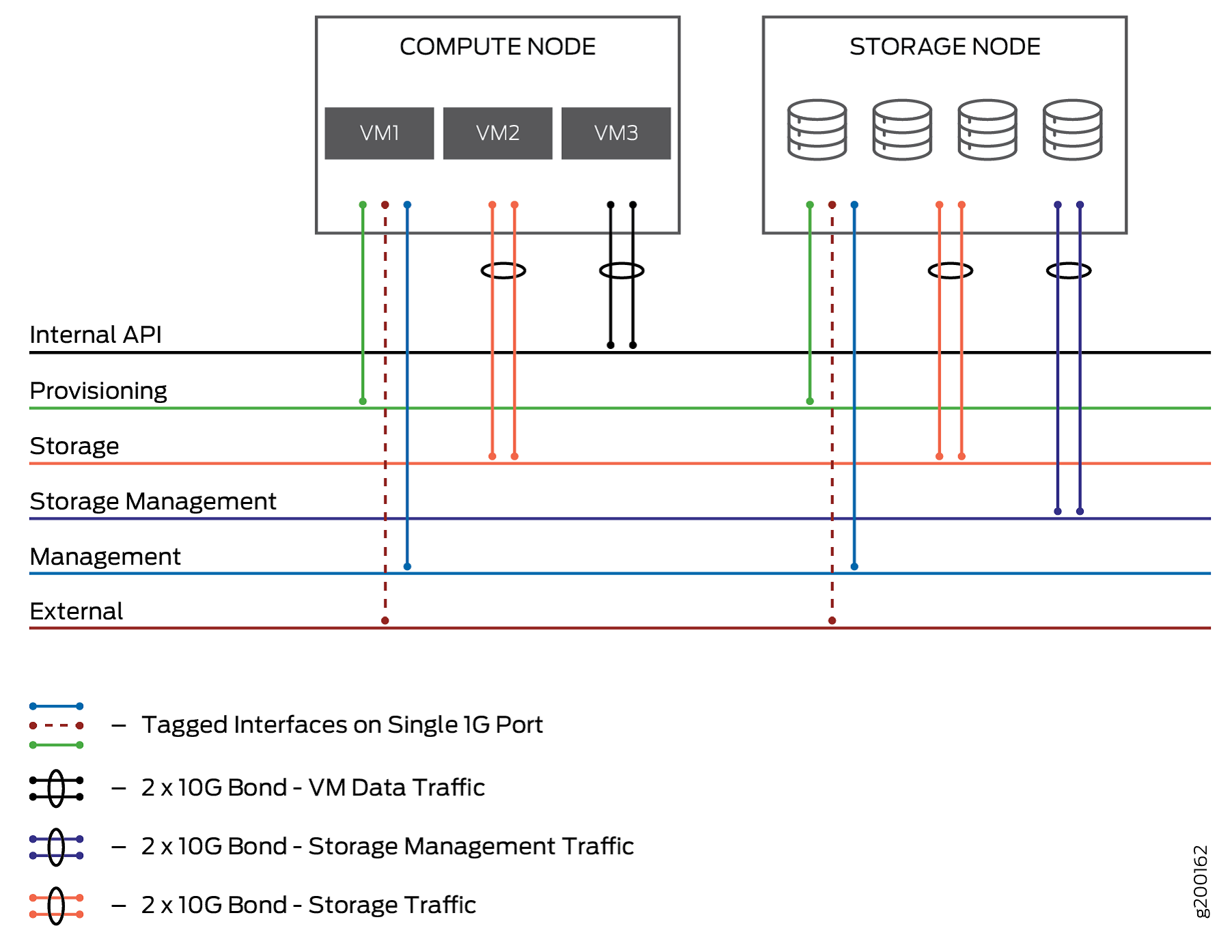

Next, the Compute and Storage nodes:

Note: Your networking setup must be complete before you can install the Overcloud. During the rollout, the system will send pings to the various nodes on the particular VLANs to test the environment.

Only the DC router can be configured at a later time.

Review the following Red Hat article as part of planning your network design: Isolating_networks

Due to the nature of the Contrail overlay design, however, the design becomes much simpler with Contrail, and fewer networks and functions need to be considered.

For example, the Contrail design completely eliminates the need for Neutron gateway nodes, because the vRouter is distributed to every Compute node and performs this function there.

The following table lists the networks commonly used in an OpenStack installation and how they are used with Contrail Networking.

| Network Name | Purpose | Needed when |
|---|---|---|
| Provisioning | Used by OpenStack Ironic so the undercloud can set up and control the overcloud VMs and bare-metal hosts | Always (for installation) |
| Internal API | OpenStack API network, Contrail API network, and Contrail data network | Always |
| Tenant Networks | Tenant traffic separation | No longer needed; Contrail replaces this with the Contrail data network |
| Storage | Storage data network | When optional external storage is used |
| Storage Management | Storage API network | When optional external storage is used |
| Management | Node management | Not strictly needed, but can be used for IPMI access to the bare-metal hosts |
| External & Floating IP | Exposes the OpenStack and Contrail REST APIs and GUIs towards the WAN | Recommended for production designs, but no longer needed for floating IPs, which are now part of the Contrail data network. Note: Plan for enough public IP addresses, or NAT somewhere. |

Note: The provisioning network must ALWAYS arrive untagged (as the native VLAN) on the respective virtual and physical interfaces of the VMs and bare-metal hosts. This is because OpenStack Ironic uses PXE boot to install the host OS on them, and PXE boot has no knowledge of VLAN tags at that stage.

Also keep in mind that all your bare-metal servers must support IPMI (or a similar supported function like iDRAC) to allow the undercloud OpenStack Ironic to power on and off the nodes and make them boot (from PXE or HD) during the installation. Today, this should not be a big concern anymore.

It may sound a bit odd that, in its current state, Contrail multiplexes the API and the data transport onto the same network. The reason is to detect, easily and without demanding any additional features from the network, a complete loss of ALL interfaces on a Compute node that transport networking data in the fabric. Because the heartbeats of the XMPP control protocol travel on the same interfaces, the SDN controller detects such an outage and signals it to OpenStack, where the admin can then take appropriate action, such as VM evacuation. If you do not want this behavior, you can configure the Contrail data network traffic to use a separate network, such as the tenant network (in that case, use only a single network for all tenants), and then use your own methods to monitor the link status of your network.

In the previous section, the Red Hat article described various options for NIC configuration and explained how bridging options inside the host are used. Choosing from all these options can be rather confusing, so here are some simple guidelines. Keep in mind that the Red Hat article doesn’t cover any Contrail options, so some of the recommendations here may contradict the article because they are based on newer information.

- Do NOT use/setup Linux bridges and/or OVS support on any vRouter-enabled Compute node! The Contrail vRouter kernel module acts as a complete replacement, and if you configure both methods they will interfere with each other. Unfortunately, OVS will still be installed on the system by TripleO, but it should never receive any traffic.

- If you use VLANs on any vRouter-enabled Compute node, add them either to the physical NIC interface or, if using interface bonding, to the bond* interface.

- If you create VMs to host your various Controller entities on a KVM host, use either standard Linux bridges or OVS to create the internal bridges that attach the VMs to the NICs. It doesn’t really matter which option you pick, as this is control traffic; choose whichever option you are most familiar with.

- If you use NIC bundling, do NOT use the OVS direct bundle support. OVS is fine for managing VLANs and internal bridges (on non-Compute nodes, as stated above), but it is not a good option for handling interface bundles, because its current implementation lacks needed features such as Layer 3+4 policy support. Also, review the Red Hat statement here. Instead, always use the native bonding support of the Linux host operating system, and then put OVS (or Linux bridges) on top of it if needed.

- If you use link bundling, enable IEEE 802.3ad and LACP on the leaf switches (every vendor should support this now). On the Linux host side, use bond mode 4 (802.3ad) and transmit hash policy 1 (layer3+4, which distributes traffic across the links based on Layer 3 and Layer 4 header fields). This provides true active/active interface support and higher throughput, and it distributes the flows over the links based on the outer headers of the tunnel overlay protocol, which carry Layer 4 entropy when the protocol is MPLSoUDP or VXLAN.

- Link bundles should always be created and handled by the native Linux OS; the vRouter, Linux bridges, or OVS may then be created on top of them as needed.

- If using link bundles, to achieve leaf layer redundancy, you can either use MC-LAG or, if using two Juniper switches you can pool them together to form a Virtual Chassis, which makes them behave like a single switch. The Virtual Chassis option is required if you intend to use these Juniper switches for Contrail bare-metal support and require HA. MC-LAG is NOT supported for Contrail bare-metal setups.
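After deployment, you can confirm on a Compute node that the kernel actually applied bond mode 4 with the Layer 3+4 hash policy. In kernel bonding option terms, that is `mode=4 xmit_hash_policy=1`, also written `mode=802.3ad xmit_hash_policy=layer3+4`. The Linux bonding driver reports its state in `/proc/net/bonding/<bond>`, and the Python sketch below parses that output. The sample text is an abbreviated illustration rather than a capture from a real system. The bond name `bond0` in the usage note is an assumption.

```python
SAMPLE = """\
Ethernet Channel Bonding Driver: v3.7.1 (April 27, 2011)

Bonding Mode: IEEE 802.3ad Dynamic link aggregation
Transmit Hash Policy: layer3+4 (1)
MII Status: up
"""


def bond_settings(text):
    """Collect 'Key: value' lines; the first occurrence wins, so bond-level
    values are not overwritten by the per-slave sections further down."""
    settings = {}
    for line in text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() not in settings:
            settings[key.strip()] = value.strip()
    return settings


def check_bond(text):
    """Return a list of problems; an empty list means mode 4 + layer3+4."""
    s = bond_settings(text)
    problems = []
    if not s.get("Bonding Mode", "").startswith("IEEE 802.3ad"):
        problems.append("bond is not in mode 4 (IEEE 802.3ad / LACP)")
    if not s.get("Transmit Hash Policy", "").startswith("layer3+4"):
        problems.append("transmit hash policy is not layer3+4 (1)")
    return problems


if __name__ == "__main__":
    print(check_bond(SAMPLE) or "bond settings OK")
```

On a node, you would pass the real file contents instead, for example `check_bond(open("/proc/net/bonding/bond0").read())`.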

Note: If you have Compute nodes in which you want to run the DPDK-based vRouter instead of the standard vRouter in kernel mode, be sure to check the Juniper release notes for qualified NICs and host operating systems.

This section describes how to configure all these options so that TripleO/Director can make the appropriate configurations.

TripleO provides the flexibility to have different NIC templates for different overcloud roles. For example, there might be differences between the NIC/networking layout for the overcloud-compute-nodes and the overcloud-contrail-controller-nodes.

These NIC templates provide data to the backend scripts that take care of provisioning the network on the overcloud nodes. The templates are written in YAML, in the Heat Orchestration Template format.

The ‘resources’ section within the template includes all the networking information for the corresponding overcloud role. This information includes:

- Number of NICs

- Network associated with each NIC

- Static routes associated with each NIC

- Any VLAN configuration which is tied to a particular NIC

- Network associated with each VLAN interface

- Static routes associated with each VLAN

More information on what each of these sections looks like can be found here.

The link mentioned above has great examples on how to define a NIC within the template. We’ll use this information in subsequent sections.

Note: One of the most common topologies for a TripleO deployment consists of three NICs:

- NIC-1: Carries these networks:

  - Provisioning: Untagged

  - Management: Tagged

  - External: Tagged

- NIC-2: Carries the internal API network

- NIC-3: Carries the tagged storage-related networks (storage and storage management)

As the first step of the deployment, you must provide a net template. Note: The base directory for the files shown in this chapter is usually /home/stack/tripleo-heat-templates/environments/contrail/

The net template files are also available at the same directory location:

```
[stack@undercloud contrail]$ ls -lrt | grep contrail-net
-rw-rw-r--. 1 stack stack 1866 Sep 19 17:10 contrail-net-storage-mgmt.yaml
-rw-rw-r--. 1 stack stack 894 Sep 19 17:10 contrail-net-single.yaml
-rw-rw-r--. 1 stack stack 1528 Sep 19 17:10 contrail-net-dpdk.yaml
-rw-rw-r--. 1 stack stack 1504 Sep 19 17:10 contrail-net-bond-vlan.yaml
-rw-rw-r--. 1 stack stack 1450 Sep 19 17:12 contrail-net.yaml
```

These files are pre-populated examples that are included with a Contrail package. Note that the file names match the use case that they’re trying to solve. For example, use the contrail-net-dpdk.yaml file if your use-case includes a DPDK compute. Similarly, use the contrail-net-bond-vlan.yaml file if your topology uses bond interfaces and VLAN sub-interfaces that need to be created on top of these bond interfaces.

Note that these are example files; you will need to modify them to match your topology.

The ‘resource_registry’ section of the net template (here, contrail-net.yaml) specifies which NIC template is used for each role:

```
[stack@undercloud contrail]$ cat contrail-net.yaml
resource_registry:
  OS::TripleO::Compute::Net::SoftwareConfig: contrail-nic-config-compute.yaml
  OS::TripleO::ContrailDpdk::Net::SoftwareConfig: contrail-nic-config-compute-dpdk-bond-vlan.yaml
  OS::TripleO::Controller::Net::SoftwareConfig: contrail-nic-config.yaml
  OS::TripleO::ContrailController::Net::SoftwareConfig: contrail-nic-config.yaml
  OS::TripleO::ContrailAnalytics::Net::SoftwareConfig: contrail-nic-config.yaml
  OS::TripleO::ContrailAnalyticsDatabase::Net::SoftwareConfig: contrail-nic-config.yaml
  OS::TripleO::ContrailTsn::Net::SoftwareConfig: contrail-nic-config-compute.yaml

parameter_defaults:
  ControlPlaneSubnetCidr: '24'
  InternalApiNetCidr: 10.0.0.0/24
  InternalApiAllocationPools: [{'start': '10.0.0.10', 'end': '10.0.0.200'}]
  InternalApiDefaultRoute: 10.0.0.1
  ManagementNetCidr: 10.1.0.0/24
  ManagementAllocationPools: [{'start': '10.1.0.10', 'end': '10.1.0.200'}]
  ManagementInterfaceDefaultRoute: 10.1.0.1
  ExternalNetCidr: 10.2.0.0/24
  ExternalAllocationPools: [{'start': '10.2.0.10', 'end': '10.2.0.200'}]
  EC2MetadataIp: 192.0.2.1 # Generally the IP of the Undercloud
  DnsServers: ["8.8.8.8","8.8.4.4"]
  VrouterPhysicalInterface: vlan20
  VrouterGateway: 10.0.0.1
  VrouterNetmask: 255.255.255.0
  ControlVirtualInterface: eth0
  PublicVirtualInterface: vlan10
  VlanParentInterface: eth1 # If VrouterPhysicalInterface is a vlan interface using vlanX notation
```

In this example, all the OpenStack controller and Contrail control plane roles use the NIC template named ‘contrail-nic-config.yaml’. Note that the compute roles and the DPDK roles use different NIC templates.
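Typos in these address parameters often surface only later in the deployment. The following Python sketch is a pre-flight check of our own, not a TripleO tool. It uses the standard `ipaddress` module on the example values from contrail-net.yaml to confirm that each allocation pool is a valid range inside its network's CIDR, and that the default route lies inside the CIDR but outside the pool. Treating a gateway inside a pool as an error is our assumption, since the pool could otherwise hand the gateway address to a node.

```python
import ipaddress

# Example values from contrail-net.yaml.
PARAMS = {
    "InternalApiNetCidr": "10.0.0.0/24",
    "InternalApiAllocationPools": [{"start": "10.0.0.10", "end": "10.0.0.200"}],
    "InternalApiDefaultRoute": "10.0.0.1",
    "ManagementNetCidr": "10.1.0.0/24",
    "ManagementAllocationPools": [{"start": "10.1.0.10", "end": "10.1.0.200"}],
    "ManagementInterfaceDefaultRoute": "10.1.0.1",
    "ExternalNetCidr": "10.2.0.0/24",
    "ExternalAllocationPools": [{"start": "10.2.0.10", "end": "10.2.0.200"}],
}

# (network name prefix, parameter holding its default route or None)
CHECKS = [("InternalApi", "InternalApiDefaultRoute"),
          ("Management", "ManagementInterfaceDefaultRoute"),
          ("External", None)]


def check_network(params, name, route_key=None):
    """Return a list of problems found for the network called `name`."""
    net = ipaddress.ip_network(params[f"{name}NetCidr"])
    problems, pools = [], []
    for pool in params.get(f"{name}AllocationPools", []):
        start = ipaddress.ip_address(pool["start"])
        end = ipaddress.ip_address(pool["end"])
        if start not in net or end not in net or start > end:
            problems.append(f"{name}: pool {start}-{end} is not a valid range in {net}")
        pools.append((start, end))
    if route_key is not None:
        gateway = ipaddress.ip_address(params[route_key])
        if gateway not in net:
            problems.append(f"{name}: gateway {gateway} is outside {net}")
        elif any(start <= gateway <= end for start, end in pools):
            problems.append(f"{name}: gateway {gateway} lies inside an allocation pool")
    return problems


if __name__ == "__main__":
    for name, route_key in CHECKS:
        print(name, check_network(PARAMS, name, route_key) or "OK")
```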

These NIC template files can be accessed at this directory location:

```
[stack@undercloud contrail]$ ls -lrt | grep contrail-nic-config-
-rw-rw-r--. 1 stack stack 5615 Sep 19 17:10 contrail-nic-config-vlan.yaml
-rw-rw-r--. 1 stack stack 5568 Sep 19 17:10 contrail-nic-config-storage-mgmt.yaml
-rw-rw-r--. 1 stack stack 3861 Sep 19 17:10 contrail-nic-config-single.yaml
-rw-rw-r--. 1 stack stack 5669 Sep 19 17:10 contrail-nic-config-compute-storage-mgmt.yaml
-rw-rw-r--. 1 stack stack 3864 Sep 19 17:10 contrail-nic-config-compute-single.yaml
-rw-rw-r--. 1 stack stack 5385 Sep 19 17:10 contrail-nic-config-compute-dpdk.yaml
-rw-rw-r--. 1 stack stack 5839 Sep 19 17:10 contrail-nic-config-compute-bond-vlan.yaml
-rw-rw-r--. 1 stack stack 5666 Sep 19 17:10 contrail-nic-config-compute-bond-vlan-dpdk.yaml
-rw-rw-r--. 1 stack stack 5538 Sep 19 17:10 contrail-nic-config-compute-bond-dpdk.yaml
-rw-rw-r--. 1 stack stack 5132 Sep 19 17:13 contrail-nic-config-compute.yaml
-rw-r--r--. 1 stack stack 5503 Sep 19 17:13 contrail-nic-config-compute-dpdk-bond-vlan.yaml
```

Just like the network template files, these NIC template files are examples included with the Contrail package. Again, their names match the use case they’re trying to solve. Note that these NIC template files are only examples; you may need to modify them according to your cluster’s topology.

Also, these examples refer to NICs by names in the format nic1, nic2, nic3, etc. (nicN). Think of these as variables. Director’s backend scripts translate these NIC numbers into actual interface names based on the interface boot order. So if you specify nic1, nic2, and nic3 in the template, and the boot order of the interfaces is eth0, eth1, and eth2, the mapping of these nic variables to actual interfaces looks like this:

- nic1 mapped to eth0

- nic2 mapped to eth1

- nic3 mapped to eth2
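The nicN-to-interface mapping described above can be sketched in Python. This only models the rule as described here. The ordered interface list is taken as given, because the real order is determined on the node itself. The helper names are hypothetical.

```python
import re

NIC_ALIAS = re.compile(r"nic\d+")


def map_nic_aliases(ordered_interfaces):
    """nic1 -> first interface, nic2 -> second, and so on."""
    return {f"nic{i}": name
            for i, name in enumerate(ordered_interfaces, start=1)}


def resolve(network_config, mapping):
    """Return a copy of network_config with nicN aliases in 'name' and
    'device' replaced; real names such as vhost0 are left untouched.
    A KeyError means the template uses more NICs than the node has."""
    resolved = []
    for entry in network_config:
        entry = dict(entry)
        for key in ("name", "device"):
            if NIC_ALIAS.fullmatch(str(entry.get(key, ""))):
                entry[key] = mapping[entry[key]]
        resolved.append(entry)
    return resolved


mapping = map_nic_aliases(["eth0", "eth1", "eth2"])
config = [
    {"type": "interface", "name": "nic1"},
    {"type": "vlan", "device": "nic2", "vlan_id": 20},   # hypothetical VLAN ID
    {"type": "interface", "name": "vhost0"},
]

if __name__ == "__main__":
    for entry in resolve(config, mapping):
        print(entry)
```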

TripleO also provides the flexibility to use actual NIC names (e.g. eth0, em1, ens2f1, etc.) in the NIC templates instead of using nic1, nic2, nic3, etc.

NOTE: A common mistake when defining NIC templates is an incorrectly set NIC boot order. In that case, your deployment might get past the network configuration stage, but you may see connectivity issues because the IP/subnet/route information is not configured on the intended NICs of the overcloud nodes.

As a next step, let’s look at the network_config NIC template used by the Controllers: contrail-nic-config.yaml:

```yaml
network_config:
  -
    type: interface
    name: nic1
    use_dhcp: false
    dns_servers: {get_param: DnsServers}
    addresses:
      -
        ip_netmask:
          list_join:
            - '/'
            - - {get_param: ControlPlaneIp}
              - {get_param: ControlPlaneSubnetCidr}
    routes:
      -
        ip_netmask: 169.254.169.254/32 # Mandatory!
        next_hop: {get_param: EC2MetadataIp} # Mandatory!
  -
    type: vlan
    use_dhcp: false
    vlan_id: {get_param: InternalApiNetworkVlanID}
    device: nic2
    addresses:
      -
        ip_netmask: {get_param: InternalApiIpSubnet}
    routes:
      -
        default: true
        next_hop: {get_param: InternalApiDefaultRoute}
  -
    type: vlan
    vlan_id: {get_param: ManagementNetworkVlanID}
    device: nic2
    addresses:
      -
        ip_netmask: {get_param: ManagementIpSubnet}
  -
    type: vlan
    vlan_id: {get_param: ExternalNetworkVlanID}
    device: nic2
    addresses:
      -
        ip_netmask: {get_param: ExternalIpSubnet}
  -
    type: vlan
    vlan_id: {get_param: StorageNetworkVlanID}
    device: nic2
    addresses:
      -
        ip_netmask: {get_param: StorageIpSubnet}
  -
    type: vlan
    vlan_id: {get_param: StorageMgmtNetworkVlanID}
    device: nic2
    addresses:
      -
        ip_netmask: {get_param: StorageMgmtIpSubnet}
```

Let’s look at the sub-sections of this template:

Definition for NIC-1:

```yaml
-
  type: interface
  name: nic1
  use_dhcp: false
  dns_servers: {get_param: DnsServers}
  addresses:
    -
      ip_netmask:
        list_join:
          - '/'
          - - {get_param: ControlPlaneIp}
            - {get_param: ControlPlaneSubnetCidr}
  routes:
    -
      ip_netmask: 169.254.169.254/32 # Mandatory!
      next_hop: {get_param: EC2MetadataIp} # Mandatory!
```

Observations:

- This is the definition for an interface called ‘nic1’.

- The DNS server is defined. Make sure that this parameter has a valid value. Most commonly, this variable is assigned a value in the contrail-services.yaml file.

- An IP address and subnet are provided under the ‘addresses’ section. Note that these values are again variables, in the format $(Network_Name)Ip and $(Network_Name)SubnetCidr. Here they are ControlPlaneIp and ControlPlaneSubnetCidr, which means that this NIC is on the ‘ControlPlane’ network. In the background, this NIC might be connected to an access port on a switch for the ‘ControlPlane’ VLAN.

- In the ‘routes’ section, there’s a /32 route for this NIC that sends traffic for the metadata address 169.254.169.254 to EC2MetadataIp (generally the IP of the undercloud). When planning the networking for your cluster, you may need to provision static routes on the overcloud roles. Use the format shown in the ‘routes’ section to specify any such static routes.
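Heat resolves the `list_join` intrinsic function before the network configuration is applied, so `ip_netmask` ends up as a plain `address/prefix` string. The Python sketch below mimics that step for a flat list. The control plane address 192.0.2.10 is a hypothetical value from the provisioning range. `ControlPlaneSubnetCidr` is the `'24'` prefix length from contrail-net.yaml.

```python
import ipaddress


def list_join(delimiter, items):
    """Mimic Heat's list_join for a flat list of string values."""
    return delimiter.join(str(item) for item in items)


control_plane_ip = "192.0.2.10"      # hypothetical ControlPlaneIp
control_plane_subnet_cidr = "24"     # ControlPlaneSubnetCidr (a prefix length)

ip_netmask = list_join("/", [control_plane_ip, control_plane_subnet_cidr])
interface = ipaddress.ip_interface(ip_netmask)   # raises ValueError if malformed

if __name__ == "__main__":
    print(ip_netmask, interface.network)   # 192.0.2.10/24 192.0.2.0/24
```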

Definition for NIC-2:

```yaml
-
  type: vlan
  use_dhcp: false
  vlan_id: {get_param: InternalApiNetworkVlanID}
  device: nic2
  addresses:
    -
      ip_netmask: {get_param: InternalApiIpSubnet}
  routes:
    -
      default: true
      next_hop: {get_param: InternalApiDefaultRoute}
-
  type: vlan
  vlan_id: {get_param: ManagementNetworkVlanID}
  device: nic2
  addresses:
    -
      ip_netmask: {get_param: ManagementIpSubnet}
-
  type: vlan
  vlan_id: {get_param: ExternalNetworkVlanID}
  device: nic2
  addresses:
    -
      ip_netmask: {get_param: ExternalIpSubnet}
-
  type: vlan
  vlan_id: {get_param: StorageNetworkVlanID}
  device: nic2
  addresses:
    -
      ip_netmask: {get_param: StorageIpSubnet}
-
  type: vlan
  vlan_id: {get_param: StorageMgmtNetworkVlanID}
  device: nic2
  addresses:
    -
      ip_netmask: {get_param: StorageMgmtIpSubnet}
```

Observations:

- In this example, NIC-2 has multiple VLANs defined on it.

- In the background, NIC-2 might be connected to a switch’s trunk port, and all of these VLANs must be allowed on that trunk.

- Given that Director-based deployments require the administrator to use many networks, it’s a very common requirement/design to use VLAN interfaces on the overcloud nodes. This way administrators do not have to worry about having 6-7 physical NICs on each overcloud node.

- For each VLAN interface, the ‘vlan_id’ is defined. Note that the ‘vlan_id’ again points to a variable. These variables can be assigned values in the contrail-net.yaml file discussed earlier.

- Another very important observation here is setting the default route. In this example, the default route was provisioned on the VLAN interface in the ‘InternalApiNetwork’. Note that the next hop points to a variable. This variable can also be set in the contrail-net.yaml file. Here’s the snippet that shows the default route information:

```yaml
-
  type: vlan
  use_dhcp: false
  vlan_id: {get_param: InternalApiNetworkVlanID}
  device: nic2
  addresses:
    -
      ip_netmask: {get_param: InternalApiIpSubnet}
  routes:
    -
      default: true
      next_hop: {get_param: InternalApiDefaultRoute}
```
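As a rule of thumb, only one interface per node should carry the default route. This is an assumption on our part, because several `default: true` routes on one node make the outgoing path ambiguous. A quick check before deploying can therefore help. The sketch below models the controller's network_config as Python data, with the `get_param` values replaced by the parameter names as plain strings. The helper is hypothetical.

```python
def default_route_owners(network_config):
    """List the interfaces/VLANs that carry a `default: true` route."""
    owners = []
    for entry in network_config:
        for route in entry.get("routes", []):
            if route.get("default") is True:
                owners.append(entry.get("name")
                              or f"vlan {entry.get('vlan_id')} on {entry.get('device')}")
    return owners


# Controller layout from contrail-nic-config.yaml (trimmed; get_param
# values shown as parameter names).
CONTROLLER = [
    {"type": "interface", "name": "nic1",
     "routes": [{"ip_netmask": "169.254.169.254/32", "next_hop": "EC2MetadataIp"}]},
    {"type": "vlan", "vlan_id": "InternalApiNetworkVlanID", "device": "nic2",
     "routes": [{"default": True, "next_hop": "InternalApiDefaultRoute"}]},
    {"type": "vlan", "vlan_id": "ManagementNetworkVlanID", "device": "nic2"},
]

if __name__ == "__main__":
    owners = default_route_owners(CONTROLLER)
    print(owners)   # ['vlan InternalApiNetworkVlanID on nic2']
    if len(owners) != 1:
        raise SystemExit(f"expected exactly one default route, found {len(owners)}")
```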

The NIC definitions for compute roles are slightly different from those for Control nodes. This is because Contrail provisions a logical interface called ‘vhost0’ on all Compute nodes, and this interface must be included in the NIC definition file for a Compute node. vhost0 is the logical interface that gets attached to the control/data network (or the internal API network in a TripleO-based installation).

Note: In a TripleO 10-based installation, keep in mind that you always have to configure TWO files for the Compute hosts:

- contrail-nic-config-compute.yaml: This is similar to the Controller nodes above.

- contrail-net.yaml: Here you must supply ADDITIONAL information for the NIC configuration, because the TripleO integration requires these values to set up the vhost0 interface correctly. Without this information, the installation will fail!

Improved integration is expected in TripleO 13, but for now you must keep both files in sync and replicate some of the information manually between them.
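Which values must be replicated depends on your templates. In the contrail-net.yaml example values, VrouterGateway matches InternalApiDefaultRoute, and VrouterNetmask matches the prefix length of InternalApiNetCidr. This fits the fact that vhost0 sits on the Internal API network. Assuming that pairing holds for your topology, a small Python check (a hypothetical helper, not part of TripleO) can catch the two files drifting apart.

```python
import ipaddress

# Example values from contrail-net.yaml.
PARAMS = {
    "InternalApiNetCidr": "10.0.0.0/24",
    "InternalApiDefaultRoute": "10.0.0.1",
    "VrouterGateway": "10.0.0.1",
    "VrouterNetmask": "255.255.255.0",
}


def vrouter_mismatches(params):
    """Compare the vRouter parameters with the Internal API network they
    are assumed to describe; an empty list means they agree."""
    net = ipaddress.ip_network(params["InternalApiNetCidr"])
    problems = []
    gateway = ipaddress.ip_address(params["VrouterGateway"])
    if gateway != ipaddress.ip_address(params["InternalApiDefaultRoute"]):
        problems.append("VrouterGateway differs from InternalApiDefaultRoute")
    if ipaddress.ip_address(params["VrouterNetmask"]) != net.netmask:
        problems.append(f"VrouterNetmask should be {net.netmask} for {net}")
    return problems


if __name__ == "__main__":
    print(vrouter_mismatches(PARAMS) or "vRouter parameters consistent")
```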

In the contrail-net.yaml example provided above, the NIC template used for the Compute nodes is ‘contrail-nic-config-compute.yaml’. Let’s look at the contents of the ‘resources’ section of this file:

```yaml
resources:
  OsNetConfigImpl:
    type: OS::Heat::StructuredConfig
    properties:
      group: os-apply-config
      config:
        os_net_config:
          network_config:
            -
              type: interface
              name: nic1
              use_dhcp: false
              dns_servers: {get_param: DnsServers}
              addresses:
                -
                  ip_netmask:
                    list_join:
                      - '/'
                      - - {get_param: ControlPlaneIp}
                        - {get_param: ControlPlaneSubnetCidr}
              routes:
                -
                  ip_netmask: 169.254.169.254/32 # Mandatory!
                  next_hop: {get_param: EC2MetadataIp} # Mandatory!
            -
              type: vlan
              vlan_id: {get_param: InternalApiNetworkVlanID}
              device: nic2
            -
              type: interface
              name: vhost0
              use_dhcp: false
              addresses:
                -
                  ip_netmask: {get_param: InternalApiIpSubnet}
              routes:
                -
                  default: true
                  next_hop: {get_param: InternalApiDefaultRoute}
            -
              type: vlan
              vlan_id: {get_param: ManagementNetworkVlanID}
              device: nic2
              addresses:
                -
                  ip_netmask: {get_param: ManagementIpSubnet}
            -
              type: vlan
              vlan_id: {get_param: ExternalNetworkVlanID}
              device: nic2
              addresses:
                -
                  ip_netmask: {get_param: ExternalIpSubnet}
            -
              type: vlan
              vlan_id: {get_param: StorageNetworkVlanID}
              device: nic2
              addresses:
                -
                  ip_netmask: {get_param: StorageIpSubnet}
            -
              type: vlan
              vlan_id: {get_param: StorageMgmtNetworkVlanID}
              device: nic2
              addresses:
                -
                  ip_netmask: {get_param: StorageMgmtIpSubnet}
```

Details of each NIC:

NIC-1:

```yaml
-
  type: interface
  name: nic1
  use_dhcp: false
  dns_servers: {get_param: DnsServers}
  addresses:
    -
      ip_netmask:
        list_join:
          - '/'
          - - {get_param: ControlPlaneIp}
            - {get_param: ControlPlaneSubnetCidr}
  routes:
    -
      ip_netmask: 169.254.169.254/32 # Mandatory!
      next_hop: {get_param: EC2MetadataIp} # Mandatory!
```

This looks very similar to the NIC definition template for the Control nodes mentioned earlier; in this example topology, the first NIC for all the Compute nodes is connected to the ‘ControlPlane’ network. Note that this is again untagged, so this NIC might be connected to an access port on the underlay switch.

NIC-2:

```yaml
-
  type: interface
  name: vhost0
  use_dhcp: false
  addresses:
    -
      ip_netmask: {get_param: InternalApiIpSubnet}
  routes:
    -
      default: true
      next_hop: {get_param: InternalApiDefaultRoute}
-
  type: vlan
  vlan_id: {get_param: InternalApiNetworkVlanID}
  device: nic2
-
  type: vlan
  vlan_id: {get_param: ManagementNetworkVlanID}
  device: nic2
  addresses:
    -
      ip_netmask: {get_param: ManagementIpSubnet}
-
  type: vlan
  vlan_id: {get_param: ExternalNetworkVlanID}
  device: nic2
  addresses:
    -
      ip_netmask: {get_param: ExternalIpSubnet}
-
  type: vlan
  vlan_id: {get_param: StorageNetworkVlanID}
  device: nic2
  addresses:
    -
      ip_netmask: {get_param: StorageIpSubnet}
-
  type: vlan
  vlan_id: {get_param: StorageMgmtNetworkVlanID}
  device: nic2
  addresses:
    -
      ip_netmask: {get_param: StorageMgmtIpSubnet}
```

This also looks very similar to the parameters defined in the NIC definition template for the Control plane nodes. There are two major differences, though:

- The VLAN sub-interface for ‘InternalApiNetwork’ does not have an IP address.

- The vhost0 interface holds the IP address for ‘InternalApiNetwork’.

In a standard TripleO-based installation, the IP address for ‘InternalApiNetwork’ is always configured on the vhost0 interface.

Additional parameters are required to successfully provision Compute nodes. These values are normally specified in the contrail-net.yaml file:

parameter_defaults:
  ControlPlaneSubnetCidr: '24'
  InternalApiNetCidr: 10.0.0.0/24
  InternalApiAllocationPools: [{'start': '10.0.0.10', 'end': '10.0.0.200'}]
  InternalApiDefaultRoute: 10.0.0.1
  ManagementNetCidr: 10.1.0.0/24
  ManagementAllocationPools: [{'start': '10.1.0.10', 'end': '10.1.0.200'}]
  ManagementInterfaceDefaultRoute: 10.1.0.1
  ExternalNetCidr: 10.2.0.0/24
  ExternalAllocationPools: [{'start': '10.2.0.10', 'end': '10.2.0.200'}]
  EC2MetadataIp: 192.0.2.1 # Generally the IP of the Undercloud
  DnsServers: ["8.8.8.8","8.8.4.4"]
  VrouterPhysicalInterface: vlan20
  VrouterGateway: 10.0.0.1
  VrouterNetmask: 255.255.255.0
  ControlVirtualInterface: eth0
  PublicVirtualInterface: vlan10

Details of these variables:

- Network-related parameters:
  - Subnet CIDR: You can set the subnet mask of each overcloud network in this file.
  - Allocation Pool Range: If set, the overcloud nodes are allocated IP addresses from the specified range.
  - Default Route: Sets the next hop for the default route. In this example, the default route is set for ‘InternalApiNetwork’, with 10.0.0.1 as the next hop.

- VrouterPhysicalInterface: The interface to which the vhost0 interface is attached and on which the Contrail vRouter runs. This may be a physical NIC (e.g. eth2 or enps0f0), a bond* interface, or a VLAN interface (e.g. vlan20).

- VrouterGateway: The IP address of the vRouter's gateway towards the DC fabric. The vRouter uses it “blindly” to send traffic towards the DC router (the other Compute nodes are all in the same subnet currently). The SDN controller also uses BGP-based signaling towards the DC router, so it uses this gateway for the control plane as well. In most cases, this is the IP address of the local leaf switch in the underlay where the Internal API network is attached.
  Note: “Blindly” refers to the known behavior of the vRouter (a kernel module) of NOT inspecting the kernel routing table, for performance reasons: every packet that is not destined to the locally attached subnet is sent to the programmed gateway IP address, and any additional route in the routing table is ignored. On the Controllers, the routing table is used as normal. It is best to keep both in sync to avoid confusion.

- VrouterNetmask: The subnet mask for the vhost0 interface (provisioned in the Compute node’s config files).

- VlanParentInterface: Optional; needed only if the vhost0 interface is attached to a VLAN interface.
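The “blind” forwarding rule for VrouterGateway can be illustrated with a small POSIX shell sketch. The helper names (ip_to_int, prefix_to_netmask, next_hop) are hypothetical and not part of Contrail or TripleO. The sketch only models the decision described above: a destination inside the vhost0 subnet is delivered directly, and anything else goes to VrouterGateway with no routing-table lookup. It also shows how a prefix length such as ControlPlaneSubnetCidr '24' maps to a dotted netmask like VrouterNetmask.

```shell
#!/bin/sh
# Sketch only: models the vRouter's "blind" next-hop choice (IPv4 only).
# Helper names are hypothetical, not Contrail tooling.

# Dotted quad -> 32-bit integer.
ip_to_int() {
  local saved_ifs="$IFS"
  IFS=.
  set -- $1
  IFS="$saved_ifs"
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# Prefix length (e.g. 24) -> dotted netmask (e.g. 255.255.255.0).
prefix_to_netmask() {
  local m=$(( 0xFFFFFFFF ^ ((1 << (32 - $1)) - 1) ))
  echo "$(( (m >> 24) & 255 )).$(( (m >> 16) & 255 )).$(( (m >> 8) & 255 )).$(( m & 255 ))"
}

# next_hop <vhost0 IP> <VrouterNetmask> <VrouterGateway> <destination IP>
# Prints "direct" for destinations in the vhost0 subnet, otherwise the gateway.
# No routing table is consulted, mirroring the behavior described above.
next_hop() {
  local mask net_local net_dest
  mask=$(ip_to_int "$2")
  net_local=$(( $(ip_to_int "$1") & mask ))
  net_dest=$(( $(ip_to_int "$4") & mask ))
  if [ "$net_local" -eq "$net_dest" ]; then
    echo direct
  else
    echo "$3"
  fi
}
```

For example, assume a vhost0 address of 10.0.0.20 (a hypothetical value from the allocation pool above) and VrouterGateway 10.0.0.1. With those values, `next_hop 10.0.0.20 255.255.255.0 10.0.0.1 10.0.0.30` prints `direct`. By contrast, `next_hop 10.0.0.20 255.255.255.0 10.0.0.1 169.254.169.15` prints `10.0.0.1`, regardless of any other routes configured on the host.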

The topology used in this document has the following constraints:

- The first NIC must be connected to the ControlPlane network.

- The second NIC must have separate VLAN interfaces for every other network.

Keeping these constraints in mind, we specified 'eth1' as the VlanParentInterface. Note that ‘nic2’ was specified as the interface with multiple VLAN sub-interfaces in the NIC definition template. In RHEL 7.3/7.4 (current at the time of writing), the NICs appear as eth0, eth1, and so on, so nic2 translates to eth1.

As part of the development process, several NIC templates have been made available to the user. They are named after the topology they are intended to enable and are located in the environments/contrail/ directory. Use and adapt these templates to your topology before deploying Contrail with TripleO/Red Hat Director.

Below is a complete list of the additional Contrail-specific items that you can (or must) configure in the contrail-net.yaml file so that the undercloud installer applies the right NIC configuration. Inline comments explain the individual items. If your Compute nodes do not use the usual kernel-module vRouter (that is, DPDK or TSN nodes), use the corresponding Dpdk and Tsn variables instead:

  # ...
  EC2MetadataIp: 192.0.2.1 # Generally the IP of the Undercloud
  DnsServers: ["9.9.9.9"]
  #
  # Fill this section with values for the COMPUTE NODES with Contrail vRouter.
  # This is MANDATORY for the modified deployment scripts.
  #
  # This must be the PHYSICAL interface or bondX of the vRouter, OR a vlanXX (with a parent, see below)
  VrouterPhysicalInterface: eth1
  # VrouterDpdkPhysicalInterface: eth1
  # VrouterTsnPhysicalInterface: eth1
  VrouterGateway: 10.0.0.1
  VrouterNetmask: 255.255.255.0
  # This must be the PHYSICAL interface of the Compute node
  ControlVirtualInterface: eth0
  # Public means ExternalNetwork
  PublicVirtualInterface: vlan10
  ## If the PhysicalInterface is a VLAN interface using vlanX notation, you need to set the parent
  # VlanParentInterface: eth1
  # VlanDpdkParentInterface: eth1
  # VlanTsnParentInterface: eth1
  ## If vhost0 is based on a bond,
  ## check https://www.kernel.org/doc/Documentation/networking/bonding.txt
  # BondInterface: bond0
  # BondDpdkInterface: bond0
  # BondTsnInterface: bond0
  ## NOTE: NIC members have to be separated by commas, and the whole string
  ## needs to be enclosed in single quotes (') to declare a single string
  # BondInterfaceMembers: 'eth0,eth1'
  # BondDpdkInterfaceMembers: 'eth0,eth1'
  # BondTsnInterfaceMembers: 'eth0,eth1'
  # BondMode: 4
  # BondDpdkMode: 4
  # BondTsnMode: 4
  ## https://access.redhat.com/solutions/71883
  ## use layer3+4 even if it's not 802.3ad compliant
  # BondPolicy: 1
  # BondDpdkPolicy: 1
  # BondTsnPolicy: 1
  ## DPDK parameters
  # ContrailDpdkHugePages: 2048
  # ContrailDpdkCoremask: 0xf
  # ContrailDpdkDriver: uio_pci_generic
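The numeric BondMode and BondPolicy values above correspond to the kernel bonding driver's `mode` and `xmit_hash_policy` settings, which are documented in bonding.txt (linked in the file). The sketch below uses two hypothetical helper functions to translate those numbers into the names used in an ifcfg `BONDING_OPTS` line.

```shell
#!/bin/sh
# Sketch: map numeric bonding values (as used in contrail-net.yaml) to the
# names from the kernel's bonding.txt. Helper names are hypothetical.

bond_mode_name() {
  case "$1" in
    0) echo "balance-rr" ;;
    1) echo "active-backup" ;;
    2) echo "balance-xor" ;;
    3) echo "broadcast" ;;
    4) echo "802.3ad" ;;
    5) echo "balance-tlb" ;;
    6) echo "balance-alb" ;;
    *) echo "unknown bond mode: $1" >&2; return 1 ;;
  esac
}

xmit_hash_policy_name() {
  case "$1" in
    0) echo "layer2" ;;
    1) echo "layer3+4" ;;
    2) echo "layer2+3" ;;
    3) echo "encap2+3" ;;
    4) echo "encap3+4" ;;
    *) echo "unknown hash policy: $1" >&2; return 1 ;;
  esac
}
```

For example, `echo "mode=$(bond_mode_name 4) xmit_hash_policy=$(xmit_hash_policy_name 1)"` prints `mode=802.3ad xmit_hash_policy=layer3+4`. This is the same combination used in the BONDING_OPTS of bond0 on the KVM host later in this chapter.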

Nowadays, it is hard to find a switch that doesn’t support frames larger than 1500 bytes; almost every vendor supports 9000 bytes or more.

In production networks, we recommend that you USE LARGE MTUs. This requires only a small amount of additional configuration on the switching side and a few additional lines in your Heat templates, and it can boost performance (depending on the traffic type) at no additional cost.

The increased MTU value must be configured everywhere applicable to your environment: on each relevant physical NIC interface, on every bond* interface (where the slaves have already been changed), and if used, on the vRouter virtual-interface. Note that VLANs and bridges attached to native or bond* interfaces will automatically inherit the increased value.

It is highly recommended that you test this setup BEFORE you start the overcloud installation. A simple test is to set up a host with just the bare host OS, without KVM, OpenStack, or Contrail. Create native and/or bond interfaces with the increased MTU. Then send a LARGE ping from this host to the loopback interface of the DC router. The ping must be clearly larger than any jumbo frame size supported by the switches (typically 20,000 bytes), and you must NOT set the “don’t fragment” option. You WANT to see how the destination leaf switch handles fragmentation, and to verify that the DC router sends a return packet from its loopback interface to the host. Do the same in reverse, from the DC router towards the host. For example:

ping 169.254.169.15 -s 20000

If possible, use tools like “tcpdump” on your host to monitor the traffic.

Note: When using Juniper QFX5100 switches as ToR, the MTU configured on the switch interface must be 14 bytes larger than the MTU configured on the physical NIC of the host.
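You can complement the large ping above with a pass/fail check of the exact jumbo size. To do this, send a ping that sets the “don't fragment” bit and just fits the host MTU. The minimal sketch below makes the following assumptions:

- It uses the Linux iputils ping, where `-M do` sets DF and `-s` sets the ICMP payload size.
- The host NIC MTU is 9100, the value used later in this document.
- 169.254.169.15 is the DC router loopback from the lab tables.

The helper names are hypothetical. The script only prints the commands and does not run them.

```shell
#!/bin/sh
# Sketch: sizes for a "don't fragment" jumbo test (Linux iputils ping).
IPV4_HDR=20
ICMP_HDR=8
ETH_HDR=14

# Largest ICMP payload (ping -s) that fits unfragmented into an IPv4 MTU.
icmp_payload_for_mtu() {
  echo $(( $1 - IPV4_HDR - ICMP_HDR ))
}

# QFX5100 interface MTU for a given host NIC MTU (host MTU + 14, per the note above).
qfx_mtu_for_host_mtu() {
  echo $(( $1 + ETH_HDR ))
}

host_mtu=9100
payload=$(icmp_payload_for_mtu "$host_mtu")
echo "ping -M do -s $payload 169.254.169.15    # should succeed end to end"
echo "ping -M do -s $((payload + 1)) 169.254.169.15    # should fail: exceeds the host MTU"
echo "QFX5100 interface MTU: $(qfx_mtu_for_host_mtu "$host_mtu")"
```

With a host MTU of 9100, the DF ping uses a 9072-byte payload, and the matching QFX5100 interface MTU is 9114.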

Wrapping an overlay tunnel protocol around the original traffic from/to the VM, whenever it is sent to another Compute node or the DC router, adds bytes to the transport MTU. Let’s use ICMP-based ping packets as an example. To reach the maximum MTU of 1500 bytes, the VM sends a 1472-byte payload (Linux syntax shown; the Windows equivalent is “ping -f -l 1472 10.0.0.1”):

# ping -M do -s 1472 10.0.0.1

  1472 bytes  Payload/Data of the ICMP protocol
+    8 bytes  ICMP header
+   20 bytes  IPv4 header
-------------
= 1500 bytes  (Layer 3 packet = your MTU)
+   14 bytes  (Layer 2 header)
+    4 bytes  (FCS checksum)
-------------
= 1518 bytes  (complete frame on the wire)
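The same byte count can be recomputed for any payload size. This is a minimal sketch: the function name is hypothetical, and it assumes an untagged Ethernet II frame, as in the example above.

```shell
#!/bin/sh
# Sketch: recompute the ICMP example above for any payload size.
# Prints "<IP packet size> <frame size on the wire>".
icmp_frame_sizes() {
  local l3=$(( $1 + 8 + 20 ))    # + ICMP header + IPv4 header
  local wire=$(( l3 + 14 + 4 ))  # + Ethernet II header + FCS
  echo "$l3 $wire"
}

icmp_frame_sizes 1472   # prints "1500 1518"
icmp_frame_sizes 8972   # prints "9000 9018"
```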

The overhead added by each of the three tunnel protocols in the nominal case is:

| MPLSoGRE | MPLSoUDP | VXLAN |
|---|---|---|
| IPv4 = 20 bytes | IPv4 = 20 bytes | IPv4 = 20 bytes |
| GRE = 4 bytes | UDP = 8 bytes | UDP = 8 bytes |
| MPLS = 4 bytes | MPLS = 4 bytes | VXLAN = 8 bytes |
| N/A | N/A | Ethernet II = 14 bytes |
| Total = 28 bytes | Total = 32 bytes | Total = 50 bytes |
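As a sketch, the table translates into the largest inner IP packet that fits into a given underlay MTU without fragmentation. The overhead values are taken directly from the table. The function names are hypothetical.

```shell
#!/bin/sh
# Sketch: tunnel overhead per encapsulation (values from the table above) and the
# largest inner IP packet that fits into a given underlay MTU without fragmenting.
tunnel_overhead() {
  case "$1" in
    mplsogre) echo $(( 20 + 4 + 4 )) ;;       # IPv4 + GRE + MPLS
    mplsoudp) echo $(( 20 + 8 + 4 )) ;;       # IPv4 + UDP + MPLS
    vxlan)    echo $(( 20 + 8 + 8 + 14 )) ;;  # IPv4 + UDP + VXLAN + inner Ethernet II
    *) echo "unknown encapsulation: $1" >&2; return 1 ;;
  esac
}

# inner_mtu <underlay MTU> <encapsulation>
inner_mtu() {
  local overhead
  overhead=$(tunnel_overhead "$2") || return 1
  echo $(( $1 - overhead ))
}

for encap in mplsogre mplsoudp vxlan; do
  echo "$encap: overhead $(tunnel_overhead "$encap"), inner MTU at 9000 = $(inner_mtu 9000 "$encap")"
done
```

For a 9000-byte underlay, the results are 8972 (MPLSoGRE), 8968 (MPLSoUDP), and 8950 (VXLAN). The MPLSoUDP value matches the “need to frag (mtu 8968)” that the vRouter reports in the capture below.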

What happens if the VM is not aware of this overhead and keeps sending packets that no longer fit into the underlay once they are encapsulated? The vRouter detects the problem and signals the required MTU to the VM with an ICMP “need to frag” message. In the example below, we configured the same MTU (9000 bytes) for both the VM and the physical interface to which the vRouter was attached:

Running “ping 1.1.1.1 -s 8941 -c1” on the VM results in the following capture on the VM's tap interface on the Compute node:

[root@overcloud-novacompute-0 heat-admin]# tcpdump -n -i tape38befe5-a0

tcpdump: verbose output suppressed, use -v or -vv for full protocol decode

listening on tape38befe5-a0, link-type EN10MB (Ethernet), capture size 262144 bytes

14:53:45.789095 ARP, Request who-has 10.10.10.3 tell 10.10.10.2, length 28

14:53:45.789234 ARP, Reply 10.10.10.3 is-at 02:e3:8b:ef:e5:a0, length 28

14:53:47.575058 IP 10.10.10.3 > 1.1.1.1: ICMP echo request, id 50433, seq 0, length 8949

14:53:47.576421 IP 10.10.10.1 > 10.10.10.3: ICMP 1.1.1.1 unreachable - need to frag (mtu 8968), length 136

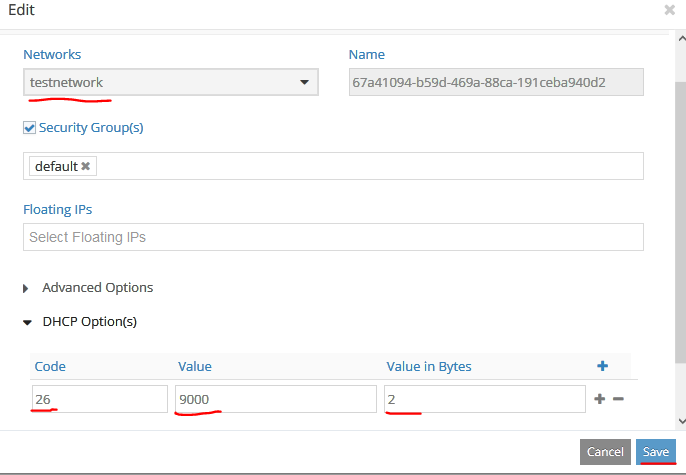

Now we need a way to tell newly launched VMs that they can use a higher MTU. You can always configure a higher MTU inside the guest VM and wait until the vRouter corrects it, but it is better to do this automatically. Since most VMs are configured as DHCP clients, the best approach is to add the MTU as a DHCP option (option 26, Interface MTU) on the DHCP server that the vRouter implements.

Here is an example of how to do this:

In the Contrail GUI, go to Configure -> Networking -> Ports -> Add (+), select a network to configure, add the relevant values in the DHCP Options section, and click Save.

Then you only need to launch the VM attached to this pre-created neutron port:

neutron port-list

+------------------+------------------+------------------+--------------------+

| id | name | mac_address | fixed_ips |

+------------------+------------------+------------------+--------------------+

| 67a41094-b59d- | 67a41094-b59d- | 02:67:a4:10:94:b | {"subnet_id": "d44 |

| 469a-88ca- | 469a-88ca- | 5 | 75877-0645-402d-98 |

| 191ceba940d2 | 191ceba940d2 | | 13-3ed89a18458a", |

| | | | "ip_address": |

| | | | "10.10.10.3"} |

+------------------+------------------+------------------+--------------------+

nova boot --image cirros --flavor testflavor --nic port-id=67a41094-b59d-469a-88ca-191ceba940d2 testvm1

Then log in to the VM (here from the DC router, through the routing instance of the VM's network) and verify that the procedure was successful:

admin@DC90-CE-MX80-Lab-2-R8> ssh cirros@10.10.10.3 routing-instance testvrf

cirros@10.10.10.3's password: gocubsgo

$ ifconfig

eth0 Link encap:Ethernet HWaddr 02:67:A4:10:94:B5

inet addr:10.10.10.3 Bcast:10.10.10.255 Mask:255.255.255.0

inet6 addr: fe80::67:a4ff:fe10:94b5/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:9000 Metric:1

RX packets:243 errors:0 dropped:0 overruns:0 frame:0

TX packets:254 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:23482 (22.9 KiB) TX bytes:21849 (21.3 KiB)

Finally, plan how you implement your large MTU environment in your data center fabric.

To cause the least amount of fragmentation and reassembly, and to be able to change the tunnel protocol used in the underlay without updating any MTU values, we suggest the following configuration:

- For all VMs, use MTU 9000 (via DHCP as above, if desired). This allows 9000-byte packets between the VMs and the vRouter on the dynamically created internal Linux tap-* interfaces. This is your base MTU setting, from which all others are derived.

- The NICs or bond interfaces to which the vRouter is attached get an MTU 100 bytes higher than the base MTU (e.g. 9100 bytes). This leaves room for the tunneling overhead (at most 50 bytes, for VXLAN), so traffic from the VM never forces fragmentation between the physical and virtual environments, regardless of tunnel type. It also spares the vRouter from reassembling fragments for traffic back to the VM, because the underlay always has more MTU capacity than the VM will ever send.

- Between the Compute node (physical interface) and leaf switch, use the higher MTU of 9100 bytes.

- Between leaf switch and spine switches, use the higher MTU of 9100 bytes.

- Between spine switches, use the higher MTU of 9100 bytes. With this configuration, east-west traffic between Compute nodes never needs to be fragmented and reassembled when traveling over leaf/spine devices.

- The MTU between spine switches and the DC router can be either the base MTU or the higher MTU. The WAN connected to the DC router can be assumed to use a lower MTU on its WAN links.
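The plan can be checked quickly against the tunnel overheads from the table earlier in this section. The function name is hypothetical. It simply verifies that the base VM MTU plus each encapsulation's overhead still fits into the underlay MTU.

```shell
#!/bin/sh
# Sketch: check an MTU plan against the tunnel overheads from the table earlier
# (MPLSoGRE 28, MPLSoUDP 32, VXLAN 50 bytes).
# check_mtu_plan <base VM MTU> <underlay MTU>; returns non-zero if any tunnel type would fragment.
check_mtu_plan() {
  local base=$1 underlay=$2 rc=0 entry name overhead
  for entry in MPLSoGRE:28 MPLSoUDP:32 VXLAN:50; do
    name=${entry%%:*}
    overhead=${entry#*:}
    if [ $(( base + overhead )) -le "$underlay" ]; then
      echo "$name: OK ($(( underlay - base - overhead )) bytes spare)"
    else
      echo "$name: fragments (needs underlay MTU >= $(( base + overhead )))"
      rc=1
    fi
  done
  return "$rc"
}

check_mtu_plan 9000 9100
```

With the suggested values (9000/9100), all three encapsulations pass, and VXLAN still has 50 bytes to spare. With both MTUs at 9000, as in the earlier capture, every tunnel type fails the check. That failure is the reason for the extra 100 bytes.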

Note: If you intend to use DPDK-enabled Compute nodes, make sure to use a version (>= V3.6.8) that supports jumbo frames.

If you want to use options like:

- DPDK support for Compute nodes (a way to achieve higher packets-per-second throughput, if the application needs it).

- A Compute node as ToR services node (TSN) for Contrail bare-metal

- Other Contrail Releases, such as >= Contrail 4.0

See the following page on GitHub.

Note: This is a living document that can change.

In this chapter, we install a fully working setup, including ALL configuration steps.

This environment meets the following guidelines and should be easy to re-implement:

- Reduced number of servers to the minimum allowable combination. As a result, this setup works with three servers. If you wish, you can remove one more server; however, you will then not be able to see Compute node-to-Compute node traffic.

- As a result of this reduction, this setup does not provide HA and requires more nodes to be production grade (which can be added easily).

- We did not set up Ceph storage and only used ephemeral storage for the VMs, which is fine for most simple VMs and NFVIs.

- Mixed setup of VMs and bare-metal hosts, so that you can see the individual changes.

- Bond interfaces and large MTUs are used, to demonstrate their usage.

- The DC router uses MPLSoUDP towards the Compute nodes (and vice versa).

- The provisioning and management networks are multiplexed onto the same LAN, to keep things simple.

- The vRouter bond interface also has other VLANs attached, but is kept native, as in the design suggestion in Chapter 2.3.

In our example, we have used:

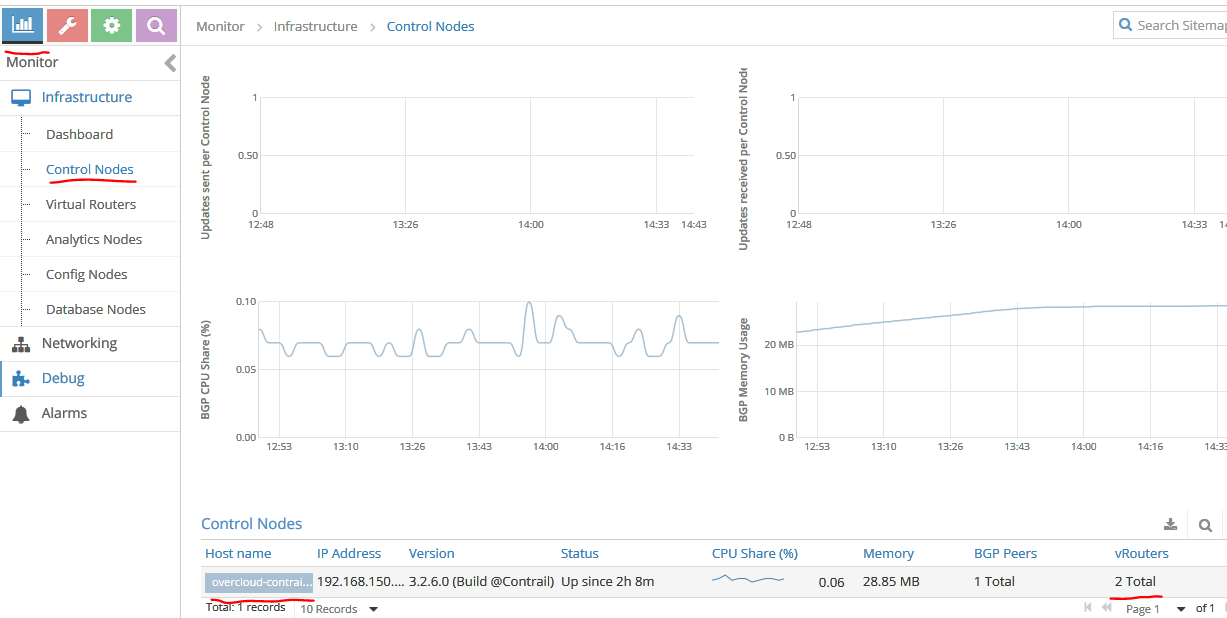

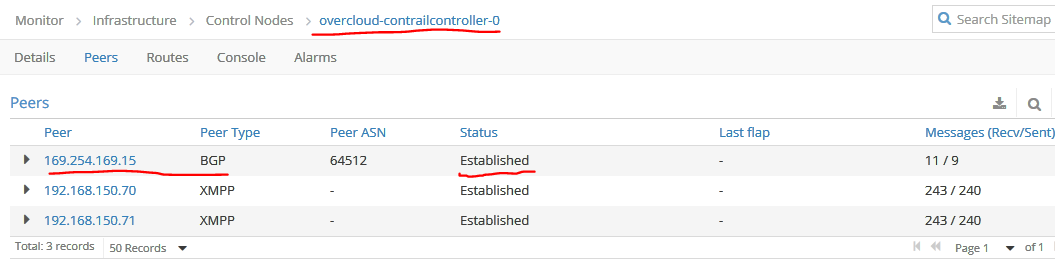

- Contrail Networking: V3.2.6 newton

- Host OS: RedHat Enterprise Linux V7.4

- Director/Tripleo: V10

In our lab, we used the following assignment:

| Network Name | IP-Subnet | VLAN-ID |
|---|---|---|
| Provisioning | 192.0.2.0/24 | 1 |
| Management/IPMI | 10.10.16.20/22 | 1 |
| Internal-Api | 192.168.150.0/24 | 150 |
| External/Public | 192.168.10.0/24 | 20 |
| Storage | 192.168.30.0/24 | 30 |
| Storage_mgmt. | 192.168.40.0/24 | 40 |
| Leaf_to_Spine | 192.168.140.0/24 | N/A |
| Spine_to_DCR | 192.168.130.0/24 | N/A |

| Network w. Route | Range | Gateway | Used for |
|---|---|---|---|
| Provisioning | 0.0.0.0/0 | 192.0.2.1 | Software Download |
| Provisioning | 169.254.169.254/32 | 192.0.2.1 | Undercloud Metadata |
| Internal-Api | 169.254.169.15/32 | 192.168.150.1 | Loopback DC-R |
| Internal-Api | 169.254.169.12/32 | 192.168.150.1 | Loopback Leaf |
| Management | 0.0.0.0/0 | 10.10.16.2 | Lab Internet Access |

Note: In a production environment, the default gateway should be in the external/public network, which is exposed to the outside and accessible over the WAN, and not in the Provisioning network as in this example.

Note: If the software is installed via a local Red Hat Satellite Server, and not via Internet as in this example, you will need to add an additional appropriate route to reach this network.

| Name | Type | 1st Address | 2nd Address | Other Address |

|---|---|---|---|---|

| server2 | Host | 10.10.16.22/24 | ||

| undercloud | VM | 10.10.16.55/24 | 192.0.2.1/24 | 169.254.169.254/32 |

| controller | VM | 192.168.150.60/24 | 192.0.2.x/24 | 192.168.10+30+40.x/24 |

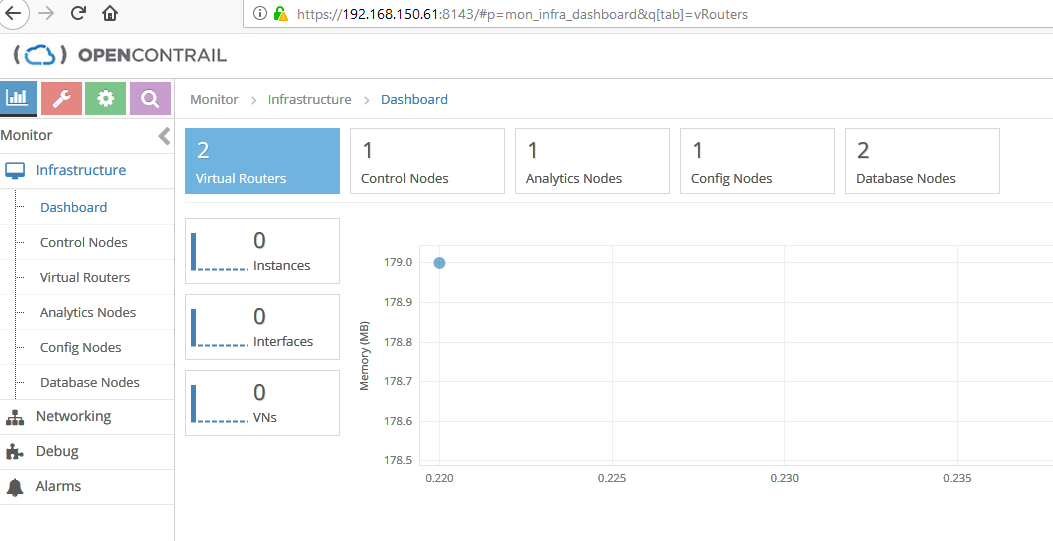

| contrailcontroller | VM | 192.168.150.61/24 | 192.0.2.x/24 | 192.168.10+30+40.x/24 |

| contrailanalytics | VM | 192.168.150.62/24 | 192.0.2.x/24 | 192.168.10+30+40.x/24 |

| contrailanalyticsdb | VM | 192.168.150.63/24 | 192.0.2.x/24 | 192.168.10+30+40.x/24 |

| novacompute-0 | Host | 192.168.150.70/24 | 192.0.2.x/24 | 192.168.10+30+40.x/24 |

| novacompute-1 | Host | 192.168.150.71/24 | 192.0.2.x/24 | 192.168.10+30+40.x/24 |

| server3 | IPMI | 10.10.16.25/24 | ||

| server4 | IPMI | 10.10.16.27/24 | ||

| MX80 | DC-R | 10.10.16.15/24 | 169.254.169.15 | 192.168.130.1/24 |

| Leaf-VC | Leaf | 10.10.16.12/24 | 169.254.169.12 | 192.168.150.1/24 + 192.168.140.2/24 |

| Spine | Spine | 10.10.16.14/24 | 192.168.140.1/24 | 192.168.130.2/24 |

The undercloud VM needs a MINIMUM of two interfaces; most designs use three, so we follow this practice here. Configure only the first interface, “eth0” (and optionally “eth2”). During the installation process, OpenStack always creates a br-ctlplane OVS bridge and attaches it to the second interface (“eth1” in this case). It binds the DHCP and PXE boot server to the br-ctlplane bridge and listens on 192.0.2.1/24 (and on 169.254.169.254/32 for the metadata server) over this interface. Ensure that this interface can reach the other VMs and bare-metal hosts UNTAGGED, so that PXE boot works. AFTER completing the undercloud OpenStack installation, you may also attach eth2 to the internal API and/or public IP networks, though this is not required.

In this setup, it is assumed that you have installed Red Hat Linux V7.4 onto the first server (server2 in our lab), which is used to launch the various VMs and acts as the Linux KVM hypervisor.

Allow the root user to access this host over SSH with a password. This is needed on every host where OpenStack Ironic has to start and shut down the VMs. Restart sshd so that the change takes effect.

sed -i "s/PasswordAuthentication no/PasswordAuthentication yes/g" /etc/ssh/sshd_config
systemctl restart sshd

Register your server with Red Hat, if you haven’t done so already.

# register server
subscription-manager register --username RedHatCustomerAccount --password RedHatCustomerPassword --auto-attach
subscription-manager list --available | egrep "Subscription Name|Pool" | grep Linux -A1
# use the Pool ID printed by the previous command
subscription-manager attach --pool=8a85f9875cce764f015ccefafa4516c7
#subscription-manager repos --disable=*
#subscription-manager repos --enable=rhel-7-server-rpms --enable=rhel-7-server-extras-rpms --enable=rhel-7-server-rh-common-rpms --enable=rhel-ha-for-rhel-7-server-rpms --enable=rhel-7-server-openstack-10-rpms --enable=rhel-7-server-openstack-10-tools-rpms --enable=rhel-7-server-openstack-10-optools-rpms --enable=rhel-7-server-openstack-10-devtools-rpms
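Instead of reading the Pool ID off the egrep/grep output by eye, you can extract it in a script. This sketch assumes the “Subscription Name:” and “Pool ID:” field labels printed by `subscription-manager list --available`. `first_pool_id` is a hypothetical helper that prints the Pool ID of the first subscription whose name matches a regular expression.

```shell
#!/bin/sh
# Sketch: read "subscription-manager list --available" output on stdin and print
# the Pool ID of the first subscription whose name matches the regex in $1.
first_pool_id() {
  awk -v pat="$1" '
    /^[ \t]*Subscription Name:/ { name = $0; sub(/^[ \t]*Subscription Name:[ \t]*/, "", name) }
    /^[ \t]*Pool ID:/ && name ~ pat { print $NF; exit }
  '
}

# Usage on a registered host (not executed here):
#   POOL=$(subscription-manager list --available | first_pool_id 'Linux')
#   subscription-manager attach --pool="$POOL"
```

The same helper with the pattern 'OpenStack' can be used later, when the undercloud VM needs the OpenStack pool ID.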

Update all installed packages.

yum update -y

Install the basic packages for the KVM hypervisor and any other needed packages.

yum install -y libguestfs libguestfs-tools openvswitch virt-install kvm libvirt libvirt-python python-virtinst

Start libvirtd to enable KVM for the VMs.

systemctl start libvirtd

Create virtual switches (Linux bridges) for the VMs. Note: This may differ according to your setup.

# reload the bonding driver; loading it creates an empty bond0
rmmod bonding
modprobe bonding
# mode 4 = 802.3ad (LACP), xmit_hash_policy 1 = layer3+4
echo 4 > /sys/class/net/bond0/bonding/mode
echo 1 > /sys/class/net/bond0/bonding/xmit_hash_policy
ifconfig bond0 0.0.0.0 mtu 9100 up
ifenslave bond0 ens1f0 ens1f1
# give LACP time to negotiate, then check the bond
sleep 10
ethtool bond0
# br0 carries the jumbo-frame bond, br1 the management/provisioning NIC
brctl addbr br0
brctl addbr br1
brctl addif br0 bond0 ; ifconfig br0 up
# get parameters of current ssh interface
DEVICE=eno1
HOSTNAME=`uname -n`
IPADDR=`ifconfig $DEVICE|grep 'inet '|awk '{sub(/addr:/,""); print $2}'`
NETMASK=`ifconfig $DEVICE|grep 'netmask '|awk '{sub(/Mask:/,""); print $4}'`
GATEWAY=`route -n|grep '^0.0.0.0'|awk '{print $2}'`
# this HAS to be on a single line not to lose the SSH connection
ifconfig $DEVICE 0.0.0.0 up; brctl addif br1 $DEVICE ; ifconfig br1 $IPADDR netmask $NETMASK up ; route add default gw $GATEWAY
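ifconfig and route come from the deprecated net-tools package. If they are not installed, you can capture the same values with iproute2. This sketch assumes the one-line output formats of `ip -4 -o addr show` and `ip -4 route show default`. `parse_addr` and `parse_gateway` are hypothetical helpers. Note that this approach yields a prefix length (e.g. 22) rather than a dotted netmask.

```shell
#!/bin/sh
# Sketch: parse iproute2 output instead of ifconfig/route.
# parse_addr reads "ip -4 -o addr show dev <dev>" and prints "<address> <prefix length>".
parse_addr() {
  awk '$3 == "inet" { split($4, a, "/"); print a[1], a[2]; exit }'
}

# parse_gateway reads "ip -4 route show default" and prints the next hop.
parse_gateway() {
  awk '$1 == "default" && $2 == "via" { print $3; exit }'
}

# On the KVM host, before moving eno1 into br1 (not executed here):
#   ADDR=$(ip -4 -o addr show dev eno1 | parse_addr)
#   IPADDR=${ADDR% *}; PREFIX=${ADDR#* }
#   GATEWAY=$(ip -4 route show default | parse_gateway)
```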

Review the created bridges and interfaces.

brctl show

bridge name bridge id STP enabled interfaces

br0 8000.90e2bad9ce50 no bond0

br1 8000.ac1f6b01e688 no eno1

virbr0 8000.5254000fd076 yes virbr0-nic

ifconfig

bond0: flags=5187<UP,BROADCAST,RUNNING,MASTER,MULTICAST> mtu 9100

inet6 fe80::92e2:baff:fed9:ce50 prefixlen 64 scopeid 0x20<link>

ether 90:e2:ba:d9:ce:50 txqueuelen 1000 (Ethernet)

RX packets 95 bytes 19348 (18.8 KiB)

RX errors 0 dropped 12 overruns 0 frame 0

TX packets 391 bytes 47850 (46.7 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

br0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 9100

inet6 fe80::92e2:baff:fed9:ce50 prefixlen 64 scopeid 0x20<link>

ether 90:e2:ba:d9:ce:50 txqueuelen 1000 (Ethernet)

RX packets 58 bytes 10672 (10.4 KiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 8 bytes 648 (648.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

br1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 10.10.16.22 netmask 255.255.252.0 broadcast 10.10.19.255

inet6 fe80::ae1f:6bff:fe01:e688 prefixlen 64 scopeid 0x20<link>

ether ac:1f:6b:01:e6:88 txqueuelen 1000 (Ethernet)

RX packets 102 bytes 9648 (9.4 KiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 32 bytes 3452 (3.3 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

eno1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet6 fe80::ae1f:6bff:fe01:e688 prefixlen 64 scopeid 0x20<link>

ether ac:1f:6b:01:e6:88 txqueuelen 1000 (Ethernet)

RX packets 2071 bytes 308414 (301.1 KiB)

RX errors 0 dropped 2 overruns 0 frame 0

TX packets 819 bytes 129321 (126.2 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

device memory 0xc7120000-c713ffff

eno2: flags=4099<UP,BROADCAST,MULTICAST> mtu 1500

ether ac:1f:6b:01:e6:89 txqueuelen 1000 (Ethernet)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

device memory 0xc7100000-c711ffff

ens1f0: flags=6211<UP,BROADCAST,RUNNING,SLAVE,MULTICAST> mtu 9100

ether 90:e2:ba:d9:ce:50 txqueuelen 1000 (Ethernet)

RX packets 57 bytes 13822 (13.4 KiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 203 bytes 24538 (23.9 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

ens1f1: flags=6211<UP,BROADCAST,RUNNING,SLAVE,MULTICAST> mtu 9100

ether 90:e2:ba:d9:ce:50 txqueuelen 1000 (Ethernet)

RX packets 38 bytes 5526 (5.3 KiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 188 bytes 23312 (22.7 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

ens2f0: flags=4099<UP,BROADCAST,MULTICAST> mtu 1500

ether 90:e2:ba:d9:da:8c txqueuelen 1000 (Ethernet)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

ens2f1: flags=4099<UP,BROADCAST,MULTICAST> mtu 1500

ether 90:e2:ba:d9:da:8d txqueuelen 1000 (Ethernet)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536

inet 127.0.0.1 netmask 255.0.0.0

inet6 ::1 prefixlen 128 scopeid 0x10<host>

loop txqueuelen 1 (Local Loopback)

RX packets 2 bytes 208 (208.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 2 bytes 208 (208.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

virbr0: flags=4099<UP,BROADCAST,MULTICAST> mtu 1500

inet 192.168.122.1 netmask 255.255.255.0 broadcast 192.168.122.255

ether 52:54:00:0f:d0:76 txqueuelen 1000 (Ethernet)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

Define the bridges as libvirt networks, so that the KVM hypervisor can attach VMs to them.

cat << EOF > br0.xml

<network>

<name>br0</name>

<forward mode='bridge'/>

<bridge name='br0'/>

</network>

EOF

cat << EOF > br1.xml

<network>

<name>br1</name>

<forward mode='bridge'/>

<bridge name='br1'/>

</network>

EOF

virsh net-define br0.xml

virsh net-start br0

virsh net-autostart br0

virsh net-define br1.xml

virsh net-start br1

virsh net-autostart br1

virsh net-list

Name State Autostart Persistent

----------------------------------------------------------

br0 active yes yes

br1 active yes yes

default active yes yes

In the following step, we make our network configuration changes permanent so that if you ever happen to reboot the host, the configuration will be re-applied.

cat <<EOF >/etc/sysconfig/network-scripts/ifcfg-eno1

DEVICE=eno1

ONBOOT=yes

HOTPLUG=no

NM_CONTROLLED=no

BRIDGE=br1

EOF

cat <<EOF >/etc/sysconfig/network-scripts/ifcfg-bond0

DEVICE=bond0

ONBOOT=yes

HOTPLUG=no

NM_CONTROLLED=no

PEERDNS=no

BONDING_OPTS="mode=802.3ad xmit_hash_policy=layer3+4 lacp_rate=fast miimon=100"

MTU=9100

BRIDGE=br0

EOF

cat <<EOF >/etc/sysconfig/network-scripts/ifcfg-ens1f0

DEVICE=ens1f0

ONBOOT=yes

HOTPLUG=no

NM_CONTROLLED=no

PEERDNS=no

MASTER=bond0

SLAVE=yes

BOOTPROTO=none

MTU=9100

EOF

cat <<EOF >/etc/sysconfig/network-scripts/ifcfg-ens1f1

DEVICE=ens1f1

ONBOOT=yes

HOTPLUG=no

NM_CONTROLLED=no

PEERDNS=no

MASTER=bond0

SLAVE=yes

BOOTPROTO=none

MTU=9100

EOF

cat <<EOF >/etc/sysconfig/network-scripts/ifcfg-br0

DEVICE=br0

TYPE=Bridge

ONBOOT=yes

BOOTPROTO=none

NM_CONTROLLED=no

DELAY=0

EOF

cat <<EOF >/etc/sysconfig/network-scripts/ifcfg-br1

DEVICE=br1

TYPE=Bridge

IPADDR=10.10.16.22

NETMASK=255.255.252.0

GATEWAY=10.10.16.2

PEERDNS=yes

DNS1=8.8.8.8

ONBOOT=yes

BOOTPROTO=none

NM_CONTROLLED=no

DELAY=0

EOF

systemctl restart network

If desired, reboot the host at this time to verify that your network setup is indeed permanent and the settings are recreated as expected.
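After the reboot, a quick scripted check can confirm that the MTU and bond settings came back as configured. This sketch assumes two standard kernel interfaces: the sysfs MTU file (/sys/class/net/<if>/mtu) and the “Bonding Mode:” line in /proc/net/bonding/bond0. `check_mtu` and `check_bond_mode` are hypothetical helpers. SYSFS and PROCFS are overridable only so that the functions can be tested against a copy.

```shell
#!/bin/sh
# Sketch: post-reboot checks for the MTU and bond settings configured above.
# SYSFS/PROCFS default to the real mount points; override only for testing.
SYSFS=${SYSFS:-/sys}
PROCFS=${PROCFS:-/proc}

# check_mtu <interface> <expected MTU>
check_mtu() {
  local actual
  actual=$(cat "$SYSFS/class/net/$1/mtu" 2>/dev/null) || { echo "$1: not found"; return 1; }
  if [ "$actual" = "$2" ]; then
    echo "$1: mtu $actual OK"
  else
    echo "$1: mtu $actual, expected $2"
    return 1
  fi
}

# check_bond_mode <bond> <text expected on the "Bonding Mode:" line>
check_bond_mode() {
  grep -q "^Bonding Mode:.*$2" "$PROCFS/net/bonding/$1" 2>/dev/null
}

# On the KVM host (not executed here):
#   for i in ens1f0 ens1f1 bond0 br0; do check_mtu "$i" 9100; done
#   check_bond_mode bond0 802.3ad && echo "bond0: 802.3ad OK"
```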

Here we use a script to create the four needed Controller VMs with an empty disk and the necessary vCPU and RAM requirements. Review Chapter 2.1 for the requirements of your production environment. Also, note the order in which we attach the networking bridges in accordance with Chapter 3.1.2, as this attaches them to eth0 and eth1 of the VM. As mentioned earlier: The first interface of the VM (eth0) must contain the ‘ProvisionNetwork’ in an untagged manner.

num=0

for i in control contrail-controller contrail-analytics contrail-analytics-database

do

num=$(expr $num + 1)

qemu-img create -f qcow2 /var/lib/libvirt/images/${i}_${num}.qcow2 100G

virsh define /dev/stdin <<EOF

$(virt-install --name ${i} --disk /var/lib/libvirt/images/${i}_${num}.qcow2 --vcpus=6 --ram=25000 --network network=br1,model=virtio --network network=br0,model=virtio --virt-type kvm --import --os-variant rhel7 --graphics vnc,listen=0.0.0.0 --noautoconsole --serial pty --console pty,target_type=virtio --print-xml)

EOF

done

Stop and disable the firewall service (on this host ONLY) for the VNC remote connection. Do not disable any other firewall (especially the one in the undercloud VM).

service firewalld stop

systemctl disable firewalld

The VMs should all now be in shutdown state, with empty virtual disks and dynamic MAC addresses pre-generated for them (KVM does this during install).

virsh list --all

Id Name State

----------------------------------------------------

- contrail-analytics shut off

- contrail-analytics-database shut off

- contrail-controller shut off

- control shut off

This script captures the MAC address of the FIRST interface (eth0) of each VM, on which the PXE boot has to happen. OpenStack Ironic needs to know this MAC address to install the host OS into the VM later.

rm -f ironic_list

for i in control contrail-controller contrail-analytics contrail-analytics-database

do

prov_mac=`virsh dumpxml ${i} | grep "mac address" | head -n1 | sed 's/ //g' | sed 's/<macaddress=//g' | sed 's/\/>//g' | sed "s/'//g"`

echo ${prov_mac} ${i} >> ironic_list

done

Then review the assigned MAC addresses that are extracted in this list. We will need this generated list again later.

cat ironic_list

52:54:00:b1:b5:5c control

52:54:00:46:e2:cc contrail-controller

52:54:00:e5:4a:8a contrail-analytics

52:54:00:87:64:2e contrail-analytics-database
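The sed chain above works, but the extraction can be written more compactly. In the sketch below, `first_mac` is a hypothetical helper that prints the first `<mac address='...'/>` value from libvirt domain XML read on stdin. Like the sed chain, it assumes that libvirt writes the attribute in single quotes.

```shell
#!/bin/sh
# Sketch: print the first <mac address='...'/> found in libvirt domain XML on stdin.
first_mac() {
  grep -o "<mac address='[0-9A-Fa-f:]*'" | head -n 1 | cut -d "'" -f 2
}

# Equivalent of the loop above (not executed here):
#   rm -f ironic_list
#   for i in control contrail-controller contrail-analytics contrail-analytics-database; do
#     echo "$(virsh dumpxml "$i" | first_mac) $i" >> ironic_list
#   done
```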

Set password and subscription information. Note: You must use the pool ID for OpenStack, as the Undercloud VM is built on OpenStack packages.

subscription-manager register --username RedHatCustomerAccount --password RedHatCustomerPassword --auto-attach

subscription-manager list --available | egrep "Subscription Name|Pool" | grep OpenStack -A1

export USER=RedHatCustomerAccount

export PASSWORD=RedHatCustomerPassword

export POOLID=8a85f9895cce2f3a015ccf0eb79749f8

export ROOTPASSWORD=c0ntrail123

export STACKPASSWORD=c0ntrail123

Create the stack user, which will be used later for all undercloud commands.

useradd -G libvirt stack

echo $STACKPASSWORD |passwd stack --stdin

echo "stack ALL=(root) NOPASSWD:ALL" | sudo tee -a /etc/sudoers.d/stack

chmod 0440 /etc/sudoers.d/stack

Adjust the permissions for KVM.

chgrp -R libvirt /var/lib/libvirt/images

chmod g+rw /var/lib/libvirt/images

Download the Red Hat Enterprise Linux 7.4 KVM guest image, which is usually available at the following URL: https://access.redhat.com/downloads/content/69/ver=/rhel---7/7.4/x86_64/product-software

scp root@10.10.16.20://root/RHOSP/rhel-server-7.4-x86_64-kvm.qcow2 /root

Remove any previously created undercloud VM. This step is only needed when you repeat the installation and is otherwise optional.

virsh destroy undercloud

virsh snapshot-delete undercloud undercloud-save

virsh undefine undercloud

This snippet creates the undercloud VM image, registers it with Red Hat, and customizes it (users, repositories, packages, SSH). The image is then copied to the libvirt image directory so that it can be launched in KVM.

export LIBGUESTFS_BACKEND=direct
qemu-img create -f qcow2 undercloud.qcow2 100G
virt-resize --expand /dev/sda1 /root/rhel-server-7.4-x86_64-kvm.qcow2 undercloud.qcow2
# grow the file system, set passwords and hostname, subscribe, create the stack user,
# enable repositories, install the TripleO client, and adjust SSH
virt-customize -a undercloud.qcow2 \
  --run-command 'xfs_growfs /' \
  --root-password password:$ROOTPASSWORD \
  --hostname undercloud.local \
  --sm-credentials $USER:password:$PASSWORD --sm-register --sm-attach auto --sm-attach pool:$POOLID \
  --run-command 'useradd stack' \
  --password stack:password:$STACKPASSWORD \
  --run-command 'echo "stack ALL=(root) NOPASSWD:ALL" | tee -a /etc/sudoers.d/stack' \
  --chmod 0440:/etc/sudoers.d/stack \
  --run-command 'subscription-manager repos --enable=rhel-7-server-rpms --enable=rhel-7-server-extras-rpms --enable=rhel-7-server-rh-common-rpms --enable=rhel-ha-for-rhel-7-server-rpms --enable=rhel-7-server-openstack-10-rpms --enable=rhel-7-server-openstack-10-devtools-rpms' \
  --install python-tripleoclient \
  --run-command 'sed -i "s/PasswordAuthentication no/PasswordAuthentication yes/g" /etc/ssh/sshd_config' \
  --run-command 'systemctl enable sshd' \
  --run-command 'yum remove -y cloud-init' \
  --selinux-relabel
cp undercloud.qcow2 /var/lib/libvirt/images/undercloud.qcow2

cp undercloud.qcow2 /var/lib/libvirt/images/undercloud.qcow2

Define the undercloud VM and launch it in KVM. Again, make sure that the second interface (eth1) of the undercloud VM is connected to a bridge or interface on the host OS that carries the untagged ‘ProvisionNetwork’. In this example, the first two interfaces are both attached to br1, so the provisioning network is multiplexed with the Management network.

virt-install --name undercloud \
--disk /var/lib/libvirt/images/undercloud.qcow2 \
--vcpus=8 \
--ram=32696 \
--network network=br1,model=virtio \
--network network=br1,model=virtio \
--network network=br0,model=virtio \
--virt-type kvm \
--import \
--os-variant rhel7 \
--graphics vnc,listen=0.0.0.0 \
--serial pty \
--noautoconsole \
--console pty,target_type=virtio
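Because of --noautoconsole, virt-install returns without waiting for the guest. The following is a minimal sketch that polls `virsh domstate` until the domain reports running. Note that running only means the guest has been started, not that RHEL has finished booting. wait_for_running and its default 60-second timeout are hypothetical choices.

```shell
# Hypothetical helper: poll libvirt until the domain reports "running" or a timeout expires.
wait_for_running() {
  local vm="${1:-undercloud}" timeout="${2:-60}" waited=0 state
  while [ "$waited" -lt "$timeout" ]; do
    # command substitution strips the trailing blank line that virsh domstate prints
    state=$(virsh domstate "$vm" 2>/dev/null)
    if [ "$state" = "running" ]; then
      echo "$vm is running"
      return 0
    fi
    sleep 1
    waited=$((waited + 1))
  done
  echo "ERROR: $vm not running after ${timeout}s (last state: ${state:-unknown})" >&2
  return 1
}

# Usage:
# wait_for_running undercloud 120 && virsh console undercloud
```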

Once the VM has started, connect to its console from the host.

virsh console undercloud

Apply the network configuration inside the undercloud VM. Do NOT configure eth1 or eth2; the OpenStack installation configures them later.

cat <<EOF >/etc/sysconfig/network-scripts/ifcfg-eth0

DEVICE=eth0

BOOTPROTO=none

IPADDR=10.10.16.55

NETMASK=255.255.252.0

GATEWAY=10.10.16.2

PEERDNS=yes

DNS1=8.8.8.8

ONBOOT=yes

USERCTL=no

EOF
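The NETMASK 255.255.252.0 is a /22, so eth0 sits in 10.10.16.0/22 (10.10.16.0 to 10.10.19.255), and the GATEWAY 10.10.16.2 must fall inside that range; otherwise installing the default route will most likely fail. The following is a minimal sketch, in pure bash arithmetic, of a sanity check you could run before restarting the network. ip_to_int, same_subnet and mask_to_prefix are hypothetical helpers.

```shell
# Hypothetical helper: convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local IFS=.
  local a b c d
  read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Hypothetical helper: succeed if two addresses are in the same subnet for the given netmask.
same_subnet() {
  local addr gw mask
  addr=$(ip_to_int "$1")
  gw=$(ip_to_int "$2")
  mask=$(ip_to_int "$3")
  [ $(( addr & mask )) -eq $(( gw & mask )) ]
}

# Hypothetical helper: count the 1 bits of a contiguous netmask to get its prefix length.
mask_to_prefix() {
  local m bits=0
  m=$(ip_to_int "$1")
  while [ "$m" -gt 0 ]; do
    bits=$(( bits + (m & 1) ))
    m=$(( m >> 1 ))
  done
  echo "$bits"
}

# Usage with the values from ifcfg-eth0:
# same_subnet 10.10.16.55 10.10.16.2 255.255.252.0 && echo "gateway is on-link"
# mask_to_prefix 255.255.252.0   # prints 22
```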

hostnamectl set-hostname undercloud.example.com

hostnamectl set-hostname --transient undercloud.example.com

echo "10.10.16.55 undercloud undercloud.example.com" >> /etc/hosts

sleep 10

systemctl restart network
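Running the echo command above a second time appends a duplicate line to /etc/hosts. The following is a minimal sketch of an idempotent alternative that appends the entry only when the address is not already the first field of a line. add_hosts_entry is a hypothetical helper.

```shell
# Hypothetical helper: append "IP NAME..." to a hosts file only if the IP is not already listed.
add_hosts_entry() {
  local file="$1" ip="$2"
  shift 2
  # awk compares the first field exactly, so 10.10.16.5 does not match 10.10.16.55
  if awk -v ip="$ip" '$1 == ip { found = 1 } END { exit !found }' "$file" 2>/dev/null; then
    echo "$ip already present in $file"
    return 0
  fi
  echo "$ip $*" >> "$file"
}

# Usage:
# add_hosts_entry /etc/hosts 10.10.16.55 undercloud undercloud.example.com
```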

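Create the undercloud configuration file and set the required options, either with the sed commands below or by editing the file manually with “vi /home/stack/undercloud.conf”. Because these commands still run as root, write the file into the home directory of the stack user, which runs the undercloud installation in the next step.

cp /usr/share/instack-undercloud/undercloud.conf.sample /home/stack/undercloud.conf

sed -i -e 's/#undercloud_hostname = <None>/undercloud_hostname = undercloud.example.com/g' /home/stack/undercloud.conf

sed -i -e 's/#local_interface = eth1/local_interface = eth1/g' /home/stack/undercloud.conf

sed -i -e 's/#undercloud_admin_password = <None>/undercloud_admin_password = c0ntrail123/g' /home/stack/undercloud.conf

chown stack:stack /home/stack/undercloud.conf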
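The sed commands above only work if the sample file contains exactly the default text they search for (for example `#undercloud_admin_password = <None>`). The following is a minimal sketch, assuming GNU sed, of a helper that sets an option whether or not it is commented out, whatever its current value. set_conf_option is a hypothetical helper; it does not escape `|`, `&` or `\` in the value.

```shell
# Hypothetical helper: set "key = value" in an INI-style file, uncommenting the key if needed.
set_conf_option() {
  local file="$1" key="$2" value="$3"
  if grep -qE "^#?${key}[[:space:]]*=" "$file"; then
    # GNU sed: the 0,/re/ range ends at the first match, and the empty regex in s||...|
    # reuses that same pattern, so only the first commented or active occurrence changes
    sed -i -E "0,/^#?${key}[[:space:]]*=.*/s||${key} = ${value}|" "$file"
  else
    # key not present at all: append it (this lands in the last INI section)
    echo "${key} = ${value}" >> "$file"
  fi
}

# Usage:
# set_conf_option /home/stack/undercloud.conf local_interface eth1
# set_conf_option /home/stack/undercloud.conf undercloud_hostname undercloud.example.com
```

Appending a missing key to the end of the file puts it in whatever section comes last, so review the file afterwards if a key was not found.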

Switch to the stack user, which is used to run all subsequent installations and changes, and install the undercloud.

su - stack

openstack undercloud install

(output omitted)

Apply the following mandatory dnsmasq fix so that the ironic-inspector dnsmasq service still works after a reboot. For background, see https://bugzilla.redhat.com/show_bug.cgi?id=1348700 and https://bugs.launchpad.net/tripleo/+bug/1615996.

To check the state of this dnsmasq instance, use “sudo journalctl -u openstack-ironic-inspector-dnsmasq”.

sudo sed -i 's/bind-interfaces/bind-dynamic/g' /etc/ironic-inspector/dnsmasq.conf
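After the sed, a quick check confirms that the option really changed. The following is a minimal sketch, assuming the option sits on a line of its own, which is how dnsmasq expects options in a configuration file. check_bind_mode is a hypothetical helper. The dnsmasq process that is already running read its configuration at start-up, so the change only takes effect when the service is next restarted, for example at the next reboot.

```shell
# Hypothetical helper: confirm the ironic-inspector dnsmasq config uses bind-dynamic.
check_bind_mode() {
  local conf="${1:-/etc/ironic-inspector/dnsmasq.conf}"
  if grep -qE '^[[:space:]]*bind-interfaces[[:space:]]*$' "$conf"; then
    echo "ERROR: $conf still contains bind-interfaces" >&2
    return 1
  fi
  if ! grep -qE '^[[:space:]]*bind-dynamic[[:space:]]*$' "$conf"; then
    echo "ERROR: bind-dynamic not found in $conf" >&2
    return 1
  fi
  echo "$conf: bind-dynamic set"
}

# Usage:
# sudo bash -c "$(declare -f check_bind_mode); check_bind_mode"
```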

ps aux | grep dnsmasq

nobody 872 0.0 0.0 15580 416 ? S 09:56 0:00 /sbin/dnsmasq --conf-file=/etc/ironic-inspector/dnsmasq.conf

nobody 3572 0.0 0.0 15608 904 ? S 09:58 0:00 dnsmasq --no-hosts --no-resolv --strict-order --except-interface=lo --pid-file=/var/lib/neutron/dhcp/ef61bf10-ca64-474f-ade8-61b27ca2cf42/pid --dhcp-hostsfile=/var/lib/neutron/dhcp/ef61bf10-ca64-474f-ade8-61b27ca2cf42/host --addn-hosts=/var/lib/neutron/dhcp/ef61bf10-ca64-474f-ade8-61b27ca2cf42/addn_hosts --dhcp-optsfile=/var/lib/neutron/dhcp/ef61bf10-ca64-474f-ade8-61b27ca2cf42/opts --dhcp-leasefile=/var/lib/neutron/dhcp/ef61bf10-ca64-474f-ade8-61b27ca2cf42/leases --dhcp-match=set:ipxe,175 --bind-interfaces --interface=tapeff6e358-cb --dhcp-range=set:tag0,192.0.2.0,static,86400s --dhcp-option-force=option:mtu,1500 --dhcp-lease-max=256 --conf-file=/etc/dnsmasq-ironic.conf --domain=openstacklocal

MANDATORY: Save the iptables state. A copy of the original rules may help you troubleshoot problems later.

sudo iptables-save > /home/stack/undercloud.fw
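The saved file is most useful for comparison with the rules at a later point, but the packet and byte counters and the timestamp comments change constantly, so a plain diff is noisy. The following is a minimal sketch, assuming GNU sed, that strips comment lines and resets the counters so that only real rule changes show up. normalize_iptables is a hypothetical helper.

```shell
# Hypothetical helper: print an iptables-save dump without comment lines or counters,
# so that two dumps can be compared with diff. Reads stdin when no file is given.
normalize_iptables() {
  # drop "# Generated/Completed" lines and reset "[packets:bytes]" counters to [0:0]
  sed -E -e '/^#/d' -e 's/\[[0-9]+:[0-9]+\]/[0:0]/g' "${1:--}"
}

# Usage: compare the saved state with the current rules
# diff <(normalize_iptables /home/stack/undercloud.fw) <(sudo iptables-save | normalize_iptables)
```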

cat /home/stack/undercloud.fw

# Generated by iptables-save v1.4.21 on Mon Feb 5 04:53:44 2018

*mangle

:PREROUTING ACCEPT [77964:33241977]

:INPUT ACCEPT [77964:33241977]

:FORWARD ACCEPT [0:0]

:OUTPUT ACCEPT [77457:33094370]

:POSTROUTING ACCEPT [77457:33094370]

COMMIT

# Completed on Mon Feb 5 04:53:44 2018

# Generated by iptables-save v1.4.21 on Mon Feb 5 04:53:44 2018

*raw

:PREROUTING ACCEPT [77703:33127952]

:OUTPUT ACCEPT [77196:32980345]

:neutron-openvswi-OUTPUT - [0:0]

:neutron-openvswi-PREROUTING - [0:0]

-A PREROUTING -j neutron-openvswi-PREROUTING

-A OUTPUT -j neutron-openvswi-OUTPUT

COMMIT

# Completed on Mon Feb 5 04:53:44 2018

# Generated by iptables-save v1.4.21 on Mon Feb 5 04:53:44 2018

*nat

:PREROUTING ACCEPT [22:1866]

:INPUT ACCEPT [41:3006]

:OUTPUT ACCEPT [1840:110937]

:POSTROUTING ACCEPT [1840:110937]

:BOOTSTACK_MASQ - [0:0]

:DOCKER - [0:0]

-A PREROUTING -d 169.254.169.254/32 -i br-ctlplane -p tcp -m tcp --dport 80 -j REDIRECT --to-ports 8775

-A PREROUTING -m addrtype --dst-type LOCAL -j DOCKER

-A OUTPUT ! -d 127.0.0.0/8 -m addrtype --dst-type LOCAL -j DOCKER

-A POSTROUTING -j BOOTSTACK_MASQ

-A POSTROUTING -s 172.17.0.0/16 ! -o docker0 -j MASQUERADE

-A POSTROUTING -s 192.0.2.0/24 -o eth0 -j MASQUERADE

-A BOOTSTACK_MASQ -s 192.0.2.0/24 -d 192.168.122.1/32 -j RETURN

-A BOOTSTACK_MASQ -s 192.0.2.0/24 ! -d 192.0.2.0/24 -j MASQUERADE

-A DOCKER -i docker0 -j RETURN

COMMIT

# Completed on Mon Feb 5 04:53:44 2018

# Generated by iptables-save v1.4.21 on Mon Feb 5 04:53:44 2018

*filter

:INPUT ACCEPT [0:0]

:FORWARD ACCEPT [0:0]

:OUTPUT ACCEPT [77196:32980345]

:DOCKER - [0:0]

:DOCKER-ISOLATION - [0:0]

:ironic-inspector - [0:0]

:neutron-filter-top - [0:0]

:neutron-openvswi-FORWARD - [0:0]

:neutron-openvswi-INPUT - [0:0]

:neutron-openvswi-OUTPUT - [0:0]

:neutron-openvswi-local - [0:0]

:neutron-openvswi-sg-chain - [0:0]

:neutron-openvswi-sg-fallback - [0:0]

-A INPUT -j neutron-openvswi-INPUT

-A INPUT -i br-ctlplane -p udp -m udp --dport 67 -j ironic-inspector

-A INPUT -m comment --comment "000 accept related established rules" -m state --state RELATED,ESTABLISHED -j ACCEPT

-A INPUT -p icmp -m comment --comment "001 accept all icmp" -m state --state NEW -j ACCEPT

-A INPUT -i lo -m comment --comment "002 accept all to lo interface" -m state --state NEW -j ACCEPT

-A INPUT -p tcp -m multiport --dports 22 -m comment --comment "003 accept ssh" -m state --state NEW -j ACCEPT

-A INPUT -p tcp -m multiport --dports 27019 -m comment --comment "101 mongodb_config" -m state --state NEW -j ACCEPT

-A INPUT -p tcp -m multiport --dports 27018 -m comment --comment "102 mongodb_sharding" -m state --state NEW -j ACCEPT

-A INPUT -p tcp -m multiport --dports 27017 -m comment --comment "103 mongod" -m state --state NEW -j ACCEPT

-A INPUT -p udp -m multiport --dports 123 -m comment --comment "105 ntp" -m state --state NEW -j ACCEPT

-A INPUT -p vrrp -m comment --comment "106 vrrp" -m state --state NEW -j ACCEPT

-A INPUT -p tcp -m multiport --dports 1993 -m comment --comment "107 haproxy stats" -m state --state NEW -j ACCEPT

-A INPUT -p tcp -m multiport --dports 6379,26379 -m comment --comment "108 redis" -m state --state NEW -j ACCEPT

-A INPUT -p tcp -m multiport --dports 6789,6800:6810 -m comment --comment "110 ceph" -m state --state NEW -j ACCEPT

-A INPUT -p tcp -m multiport --dports 5000,13000,35357,13357 -m comment --comment "111 keystone" -m state --state NEW -j ACCEPT

-A INPUT -p tcp -m multiport --dports 9292,9191,13292 -m comment --comment "112 glance" -m state --state NEW -j ACCEPT

-A INPUT -p tcp -m multiport --dports 6080,13080,8773,13773,8774,13774,8775,13775 -m comment --comment "113 nova" -m state --state NEW -j ACCEPT

-A INPUT -p tcp -m multiport --dports 9696,13696 -m comment --comment "114 neutron server" -m state --state NEW -j ACCEPT

-A INPUT -p udp -m multiport --dports 67 -m comment --comment "115 neutron dhcp input" -m state --state NEW -j ACCEPT

-A INPUT -p udp -m multiport --dports 4789 -m comment --comment "118 neutron vxlan networks" -m state --state NEW -j ACCEPT

-A INPUT -p tcp -m multiport --dports 8776,13776 -m comment --comment "119 cinder" -m state --state NEW -j ACCEPT

-A INPUT -p tcp -m multiport --dports 3260 -m comment --comment "120 iscsi initiator" -m state --state NEW -j ACCEPT

-A INPUT -p tcp -m multiport --dports 11211 -m comment --comment "121 memcached" -m state --state NEW -j ACCEPT

-A INPUT -p tcp -m multiport --dports 8080,13808 -m comment --comment "122 swift proxy" -m state --state NEW -j ACCEPT

-A INPUT -p tcp -m multiport --dports 873,6000,6001,6002 -m comment --comment "123 swift storage" -m state --state NEW -j ACCEPT

-A INPUT -p tcp -m multiport --dports 8777,13777 -m comment --comment "124 ceilometer" -m state --state NEW -j ACCEPT

-A INPUT -p tcp -m multiport --dports 8000,13800,8003,13003,8004,13004 -m comment --comment "125 heat" -m state --state NEW -j ACCEPT

-A INPUT -p tcp -m multiport --dports 80,443 -m comment --comment "126 horizon" -m state --state NEW -j ACCEPT

-A INPUT -p udp -m multiport --dports 161 -m comment --comment "127 snmp" -m state --state NEW -j ACCEPT

-A INPUT -p tcp -m multiport --dports 8042,13042 -m comment --comment "128 aodh" -m state --state NEW -j ACCEPT

-A INPUT -p tcp -m multiport --dports 8041,13041 -m comment --comment "129 gnocchi-api" -m state --state NEW -j ACCEPT

-A INPUT -p udp -m multiport --dports 69 -m comment --comment "130 tftp" -m state --state NEW -j ACCEPT

-A INPUT -p tcp -m multiport --dports 5900:5999 -m comment --comment "131 novnc" -m state --state NEW -j ACCEPT

-A INPUT -p tcp -m multiport --dports 8989,13989 -m comment --comment "132 mistral" -m state --state NEW -j ACCEPT

-A INPUT -p tcp -m multiport --dports 8888,13888 -m comment --comment "133 zaqar" -m state --state NEW -j ACCEPT

-A INPUT -p tcp -m multiport --dports 9000 -m comment --comment "134 zaqar websockets" -m state --state NEW -j ACCEPT

-A INPUT -p tcp -m multiport --dports 6385,13385 -m comment --comment "135 ironic" -m state --state NEW -j ACCEPT

-A INPUT -p tcp -m multiport --dports 8779,13779 -m comment --comment "136 trove" -m state --state NEW -j ACCEPT

-A INPUT -p tcp -m multiport --dports 5050 -m comment --comment "137 ironic-inspector" -m state --state NEW -j ACCEPT

-A INPUT -p tcp -m multiport --dports 8787 -m comment --comment "138 docker registry" -m state --state NEW -j ACCEPT

-A INPUT -p tcp -m multiport --dports 8088 -m comment --comment "139 apache vhost" -m state --state NEW -j ACCEPT

-A INPUT -p tcp -m multiport --dports 3000 -m comment --comment "142 tripleo-ui" -m state --state NEW -j ACCEPT

-A INPUT -m comment --comment "998 log all" -m state --state NEW -j LOG

-A INPUT -m comment --comment "999 drop all" -m state --state NEW -j DROP

-A FORWARD -j neutron-filter-top

-A FORWARD -j neutron-openvswi-FORWARD

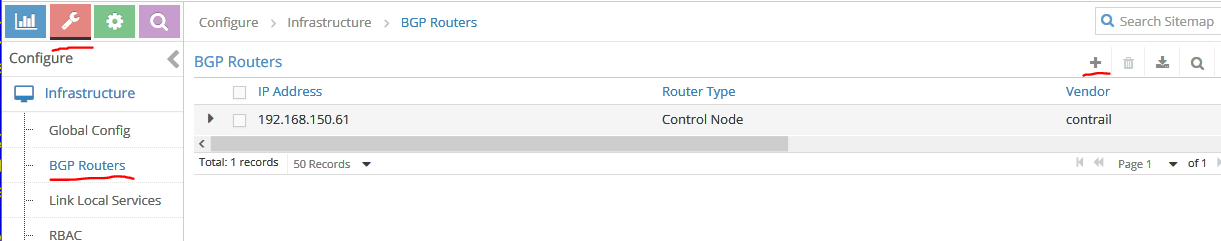

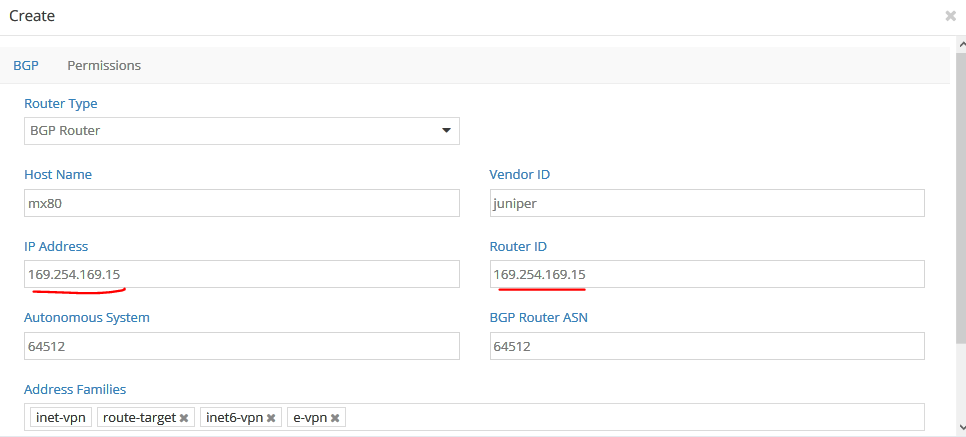

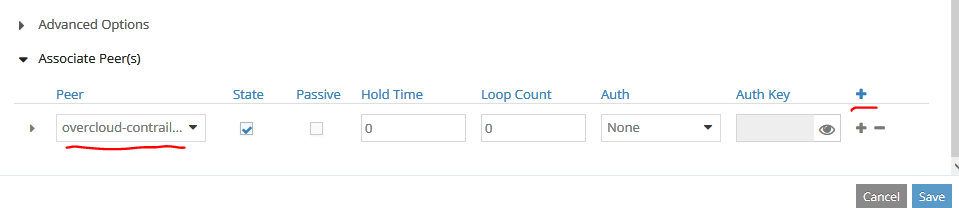

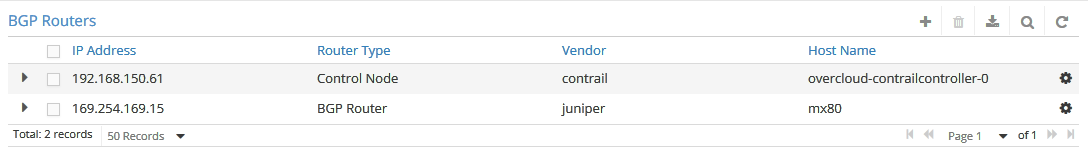

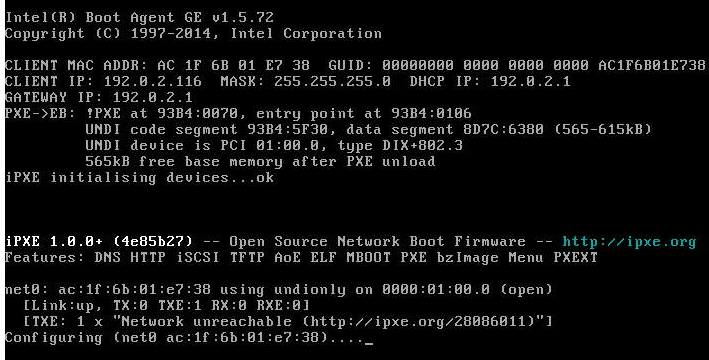

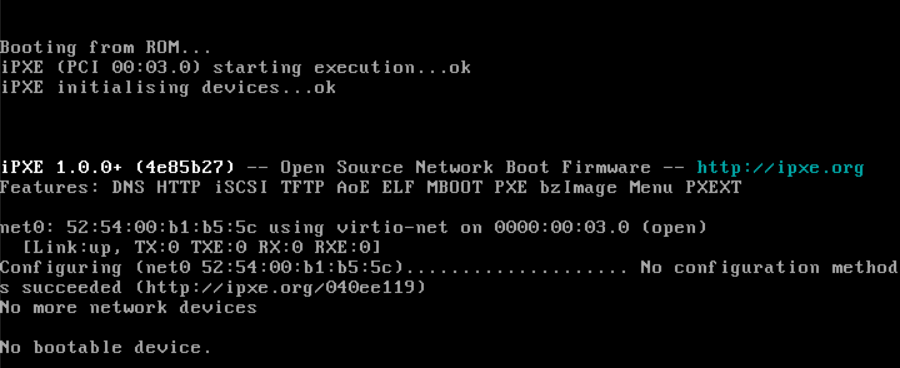

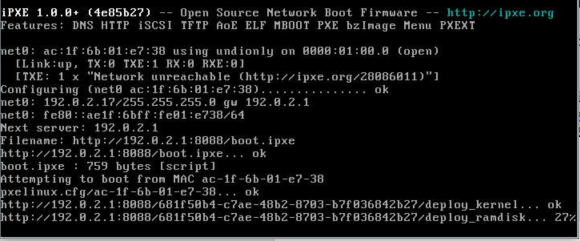

-A FORWARD -d 192.0.2.0/24 -p tcp -m comment --comment "140 network cidr nat" -m state --state NEW -j ACCEPT