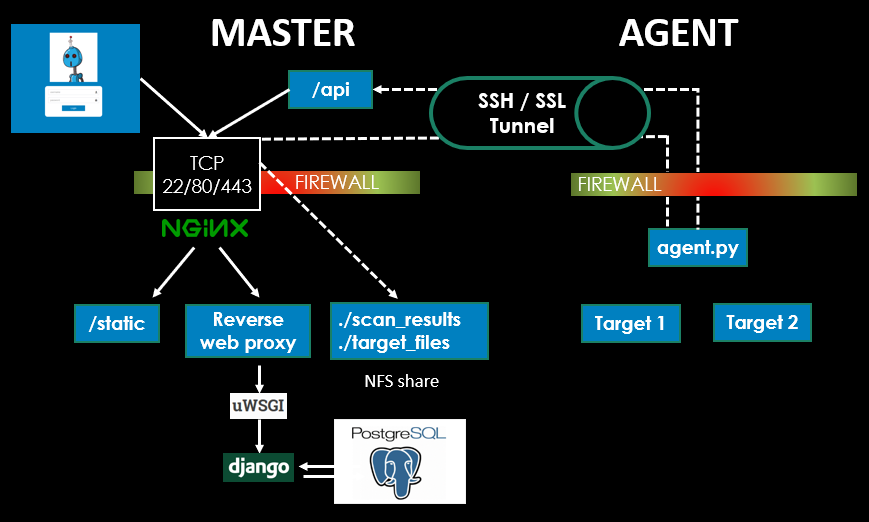

Scantron is a distributed nmap scanner composed of two components. The first is a Master node that consists of a web front end used for scheduling scans and storing nmap scan targets and results. The second component is an agent that pulls scan jobs from Master and conducts the actual nmap scanning. The majority of the application's logic is purposely placed on Master to make the agent(s) as "dumb" as possible. All nmap target files and nmap results reside on Master and are shared through a Network File System (NFS) share leveraging SSH tunnels. The agents call back to Master periodically using a REST API to check for scan tasks and provide scan status updates.

Scantron is coded exclusively for Python 3.6+ and leverages Django for the web front end, Django REST Framework as the API endpoint, and PostgreSQL as the database. It comes complete with Ubuntu-focused Ansible playbooks for smooth deployments. Scantron has been tested on Ubuntu 18.04 and may be compatible with other operating systems. Scantron's inspiration comes from:

Scantron relies heavily on SSH port forwards (-R / -L) as an umbilical cord to the agents. Either an SSH connection from Master --> agent or agent --> Master is acceptable and may be required depending on firewall rules, but tweaking the port forwards and autossh commands will be necessary. If you are unfamiliar with these concepts, there are some great overviews and tutorials out there:

- https://help.ubuntu.com/community/SSH/OpenSSH/PortForwarding

- https://www.systutorials.com/39648/port-forwarding-using-ssh-tunnel/

- https://www.everythingcli.org/ssh-tunnelling-for-fun-and-profit-autossh/

Scantron is not engineered to be quickly deployed to a server to scan for a few minutes, then torn down and destroyed. It's better suited for having a set of static scanners (e.g., "internal-scanner", "external-scanner") with a relatively static set of assets to scan.

- Agent: If you plan on compiling masscan on an agent, you'll need at least 1024 MB of memory; it fails to build with only 512 MB. If you do not want to build masscan, set install_masscan_on_agent to False in ansible-playbooks/group_vars/all.

- Master: 512 MB of memory was the smallest amount successfully tested.

This is your local box, preferably Linux. Ansible >= 2.4.0.0 is the minimum version required for utilizing ufw comments.

Clone the project and execute initial_setup.sh.

# Clone scantron project.

git clone https://github.com/rackerlabs/scantron.git

cd scantron

./initial_setup.sh # Run as non-root user.

Installation requires a general knowledge of Python, pip, and Ansible. Every attempt has been made to keep the deployment as simple as possible.

If the Master server's address is actually an RFC1918 IP and not the public IP (because of NAT), the NAT'd RFC1918 IP (e.g., 10.1.1.2) will have to be added to ALLOWED_HOSTS in ansible-playbooks/roles/master/templates/production.py.j2. This is common in AWS and GCP environments.
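If you are unsure whether an address is one of the RFC1918 ranges, Python's standard ipaddress module can tell you. The sketch below is a hypothetical helper (not part of Scantron) for working out which values belong in ALLOWED_HOSTS; it lists the three RFC1918 networks explicitly because ipaddress's is_private property also covers other reserved ranges.

```python
import ipaddress

# The three private IPv4 ranges defined by RFC 1918.
RFC1918_NETWORKS = [
    ipaddress.ip_network("10.0.0.0/8"),
    ipaddress.ip_network("172.16.0.0/12"),
    ipaddress.ip_network("192.168.0.0/16"),
]


def is_rfc1918(address):
    """Return True if address falls inside one of the RFC 1918 ranges."""
    ip = ipaddress.ip_address(address)
    return ip.version == 4 and any(ip in network for network in RFC1918_NETWORKS)


def allowed_hosts(public_ip, nat_ip=None):
    """Hypothetical helper: the public IP plus the NAT'd RFC1918 IP, if any."""
    hosts = [public_ip]
    if nat_ip and is_rfc1918(nat_ip) and nat_ip not in hosts:
        hosts.append(nat_ip)
    return hosts
```

For example, allowed_hosts("203.0.113.10", "10.1.1.2") returns both addresses, which is the pair you would place in the template.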

Per https://github.com/0xtavian: For the Ansible workload to work on IBM Cloud, edit the file /boot/grub/menu.lst by changing

# groot=LABEL...

to

# groot=(hd0)

Edit the hosts in this file:

ansible-playbooks/hosts

The recommendation is to deploy Master first.

Edit any variables in these files before running playbook:

ansible-playbooks/group_vars/all

If you plan on utilizing the same API key across all agents (not recommended, but easier for automated deployments), change utilize_static_api_token_across_agents to True. This saves you from having to log in to each agent and update agent_config.json with the corresponding API key. The group_vars/static_api_key file will be created by the Master Ansible playbook, and the Ansible agent playbook will autofill the agent_config.json.j2 template with the API key found in group_vars/static_api_key.

WARNING: The agent_config.json.j2 template will generate a random scan_agent name (e.g., agent-847623), so if you deploy more than 1 agent, you won't run into agent name collisions. You will, however, need to create the corresponding user on Master, since Master returns scheduled jobs to the agent based on the agent's name!
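A name in the agent-847623 style can be produced with a few lines of Python. This is a hypothetical illustration only: the real name comes from the agent_config.json.j2 Jinja template, whose exact logic is not shown here. With six random digits there are 10^6 possible suffixes, so collisions among a handful of agents are unlikely but not impossible.

```python
import random


def random_agent_name(prefix="agent", digits=6):
    """Hypothetical helper: build a name such as 'agent-847623'."""
    suffix = "".join(random.choices("0123456789", k=digits))
    return "{}-{}".format(prefix, suffix)
```

Whatever name an agent ends up with, a user with exactly that name must exist on Master.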

Rename master/scantron_secrets.json.empty to master/scantron_secrets.json (this should be done for you by initial_setup.sh).

Update all the values in master/scantron_secrets.json if you do not like the ones generated by initial_setup.sh. Only the production values are used.

-

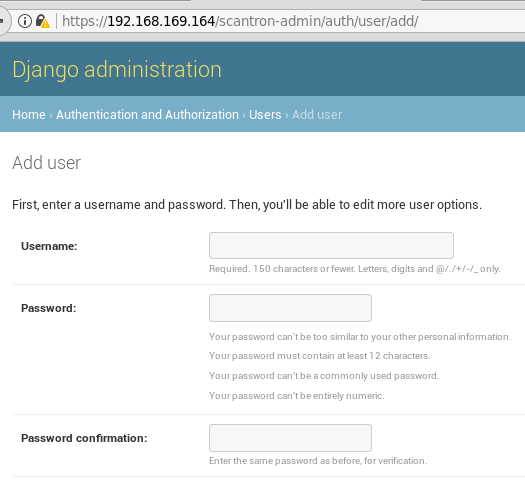

All Scantron Django passwords have a minimum password length of 12.

-

For the "SECRET_KEY", per Django's documentation: The secret key must be a large random value and it must be kept secret.
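If you prefer to generate your own values for master/scantron_secrets.json, Python's secrets module is a reasonable source of randomness. The sketch below is an assumption-laden example, not what initial_setup.sh does: the 50-character length and character set mirror, to our knowledge, what Django's get_random_secret_key() uses, and generate_password simply enforces the 12-character minimum mentioned above.

```python
import secrets
import string

# Assumed to match the alphabet Django's get_random_secret_key() draws from.
SECRET_KEY_CHARS = string.ascii_lowercase + string.digits + "!@#$%^&*(-_=+)"
MIN_PASSWORD_LENGTH = 12  # Scantron's minimum Django password length


def generate_secret_key(length=50):
    """Return a random SECRET_KEY-style string from the OS CSPRNG."""
    return "".join(secrets.choice(SECRET_KEY_CHARS) for _ in range(length))


def generate_password(length=MIN_PASSWORD_LENGTH + 8):
    """Return a random alphanumeric password of at least 12 characters."""
    if length < MIN_PASSWORD_LENGTH:
        raise ValueError("passwords must be at least {} characters".format(MIN_PASSWORD_LENGTH))
    alphabet = string.ascii_letters + string.digits
    return "".join(secrets.choice(alphabet) for _ in range(length))
```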

The scantron operating system user password is not really leveraged and is populated with a salted hash of a random password generated using Python's passlib library. If you want to change the password, you will have to generate a hash for the desired password and update the temp_user_pass variable in scantron/ansible-playbooks/roles/add_users/vars/main.yml.

pip3 install passlib

python3 -c "from passlib.hash import sha512_crypt; import getpass; print(sha512_crypt.hash(getpass.getpass()))"

Ensure you have an SSH key (or username/password) to access the Master box, specified by --private-key in the Ansible command. The user must also have password-less sudo privileges.

cd ansible-playbooks

# non-root user with password-less sudo capabilities.

ansible-playbook master.yml -u ubuntu --become --private-key=<Master SSH key>

# root user.

ansible-playbook master.yml -u root --private-key=<Master SSH key>

cd into the master directory, scantron/master, and run the following to change the admin user's password (or that of whichever user needs it changed).

python3 manage.py changepassword admin

Edit any variables in these files before running the playbook:

ansible-playbooks/group_vars/all

ansible-playbooks/roles/agent/vars/main.yml

Ensure you have an SSH key (or username/password) to access the agent box, specified by --private-key in the Ansible command. The user must also have password-less sudo privileges. If you are creating the boxes on AWS, the user is ubuntu for Ubuntu distros and already has password-less sudo capabilities. If you need to add password-less sudo capability to a user, create a /etc/sudoers.d/<USERNAME> file, where <USERNAME> is the actual user, and populate it with:

<USERNAME> ALL=(ALL) NOPASSWD: ALL

SSH-ing in as root will also work for the Ansible deployment, but is not generally recommended.

cd ansible-playbooks

# non-root user with password-less sudo capabilities.

ansible-playbook agent.yml -u ubuntu --become --private-key=<agent SSH key>

# root user.

ansible-playbook agent.yml -u root --private-key=<agent SSH key>

A Scantron agent is synonymous with a user.

Agents <--> Users

Users / agents are added through the webapp, and once a user / agent is added, an API token is automatically generated for it. The user's / agent's password is not necessary for Scantron to function, since all user / agent authentication is done using the API token. The username and password can be used to log in to the webapp to test API functionality. More API testing information can be found in the Test Agent API section of this README.

This is done automatically for one agent through Ansible. You may have to add additional lines and update SSH keys for each agent if they are different. These commands are for Master connecting to the agents.

In this example:

- Master - 192.168.1.99

- agent1 - 192.168.1.100

- agent2 - 192.168.1.101
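The autossh commands below differ only in the forward flag (-R when run on Master, -L when run on the agent) and the destination host. If you manage many agents, a hypothetical Python helper like this one could assemble them; the option list and port pairs are copied from the commands in this README, while the function names are illustrative assumptions.

```python
import shlex

# SSH options copied from the autossh commands in this README.
SSH_OPTIONS = [
    "-o", "StrictHostKeyChecking no",
    "-o", "ServerAliveInterval 60",
    "-o", "ServerAliveCountMax 3",
]
# (listening port, port on Master): API via nginx, then NFS.
FORWARDS = [(4430, 443), (2049, 2049)]


def autossh_command(destination, key_path, reverse=True, ssh_port=22):
    """Return the autossh argv for one tunnel.

    reverse=True  -> run on Master; ports open on the agent (-R).
    reverse=False -> run on the agent; ports open locally (-L).
    """
    flag = "-R" if reverse else "-L"
    argv = ["autossh", "-M", "0", "-f", "-N", *SSH_OPTIONS, "-p", str(ssh_port)]
    for listen_port, master_port in FORWARDS:
        argv += [flag, "{}:127.0.0.1:{}".format(listen_port, master_port)]
    argv += ["-i", key_path, "autossh@{}".format(destination)]
    return argv


def render(argv):
    """Quote an argv for a shell (shlex.join needs 3.8+, so quote manually)."""
    return " ".join(shlex.quote(arg) for arg in argv)


def as_autossh_user(argv):
    """Wrap the command as this README does: su - autossh -s /bin/bash -c '...'."""
    return ["su", "-", "autossh", "-s", "/bin/bash", "-c", render(argv)]
```

For example, render(as_autossh_user(autossh_command("192.168.1.100", "/home/scantron/master/autossh.key"))) yields a Master --> agent 1 line with the nested quoting handled by shlex.quote.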

# Master --> Agent 1

su - autossh -s /bin/bash -c 'autossh -M 0 -f -N -o "StrictHostKeyChecking no" -o "ServerAliveInterval 60" -o "ServerAliveCountMax 3" -p 22 -R 4430:127.0.0.1:443 -R 2049:127.0.0.1:2049 -i /home/scantron/master/autossh.key autossh@192.168.1.100'

# Master --> Agent 2

su - autossh -s /bin/bash -c 'autossh -M 0 -f -N -o "StrictHostKeyChecking no" -o "ServerAliveInterval 60" -o "ServerAliveCountMax 3" -p 22 -R 4430:127.0.0.1:443 -R 2049:127.0.0.1:2049 -i /home/scantron/master/autossh.key autossh@192.168.1.101'

If Master cannot SSH to an agent, then the autossh command will be run on the agent and the port forwards will be local (-L) instead of remote (-R).

# Master <-- Agent 1

su - autossh -s /bin/bash -c 'autossh -M 0 -f -N -o "StrictHostKeyChecking no" -o "ServerAliveInterval 60" -o "ServerAliveCountMax 3" -p 22 -L 4430:127.0.0.1:443 -L 2049:127.0.0.1:2049 -i /home/scantron/master/autossh.key autossh@192.168.1.99'

agent_config.json is a configuration file used by agents to provide basic settings and bootstrap communication with Master. Each agent can have a different configuration file.

The "api_token" will have to be modified on all the agents after deploying Master!

Agent settings:

scan_agent: Name of the agent. This name is also used in the agent's HTTP User-Agent string to help identify agents calling back in the nginx web logs.

api_token: Used to authenticate agents. Using a different API token per agent is recommended, but the same one could be used.

master_address: Web address of Master. Could be 127.0.0.1 if agent traffic is tunneled to Master through an SSH port forward.

master_port: Web port Master is listening on.

callback_interval_in_seconds: Number of seconds agents wait before calling back for scan jobs.

number_of_threads: Experimental! Number of threads used to execute scan jobs if multiple jobs may be required at the same time. Keep at 1 to avoid a double-scanning race condition.

target_files_dir: Name of the actual target_files directory on the agent box.

scan_results_dir: Name of the actual scan_results directory on the agent box.

log_verbosity: Desired log level for logs/agent.log

# Level Numeric value

# CRITICAL 50

# ERROR 40

# WARNING 30

# INFO 20

# DEBUG 10

http_useragent: HTTP User-Agent used instead of nmap's default Mozilla/5.0 (compatible; Nmap Scripting Engine; https://nmap.org/book/nse.html).
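The settings above can be sanity-checked before starting an agent. This is a hypothetical sketch, not Scantron's agent.py: it assumes every key listed above must be present and that log_verbosity holds either a number from the table or a level name such as "INFO". The table's numeric values are the same as Python's logging module constants, so they can be passed straight to logging.

```python
import json
import logging

# Keys documented above; treating all of them as required is an assumption.
EXPECTED_KEYS = (
    "scan_agent", "api_token", "master_address", "master_port",
    "callback_interval_in_seconds", "number_of_threads",
    "target_files_dir", "scan_results_dir", "log_verbosity", "http_useragent",
)


def log_level(value):
    """Accept 10/20/30/40/50 (or their string forms) or a name such as 'INFO'."""
    if isinstance(value, str) and not value.isdigit():
        level = logging.getLevelName(value.upper())  # name -> number
        if not isinstance(level, int):
            raise ValueError("unknown log level: {!r}".format(value))
        return level
    return int(value)


def parse_agent_config(text):
    """Parse agent_config.json contents and normalize log_verbosity."""
    config = json.loads(text)
    missing = [key for key in EXPECTED_KEYS if key not in config]
    if missing:
        raise KeyError("agent_config.json is missing: " + ", ".join(missing))
    config["log_verbosity"] = log_level(config["log_verbosity"])
    return config


def load_agent_config(path):
    with open(path) as fh:
        return parse_agent_config(fh.read())


def configure_logging(config, log_file="logs/agent.log"):
    logging.basicConfig(filename=log_file, level=config["log_verbosity"])
```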

Update each agent's agent_config.json file with its respective api_token. To find the tokens, log in as admin and browse to https://<HOST>/scantron-admin/authtoken/token to see the corresponding API token for each user / agent.

Enable scantron-agent service at startup.

systemctl daemon-reload # Required if scantron-agent.service changed.

systemctl enable scantron-agent

Disable scantron-agent service at startup.

systemctl disable scantron-agent

Scantron service troubleshooting commands.

systemctl status scantron-agent

systemctl start scantron-agent

systemctl stop scantron-agent

systemctl restart scantron-agent

Use screen to avoid the script dying after disconnecting from SSH.

screen -S agent1 # Create a screen session and name it agent1, if using screen.

cd agent

source .venv/bin/activate

python agent.py -c agent_config.json

CTRL + a, then d # Detach from the screen session, if using screen.

screen -ls # View screen job, if using screen.

screen -r agent1 # Resume named screen session, if using screen.

Verify that the SSH connection from Master with the reverse port forwards is up on each agent. Any traffic hitting 127.0.0.1:4430 will be tunneled back to Master; this port is for communicating with the API. Any traffic hitting 127.0.0.1:2049 will connect back to the NFS share on Master.

tcp 0 0 127.0.0.1:4430 0.0.0.0:* LISTEN 1399/sshd: autossh

tcp 0 0 127.0.0.1:2049 0.0.0.0:* LISTEN 1399/sshd: autossh

Check each agent's root crontab to ensure nfs_watcher.sh is being run every minute.

crontab -l -u root

If you need to test the API without running the agent, ensure there is a 'pending' scan set to start earlier than the current date and time. The server only returns scan jobs that have a 'pending' status and a start datetime earlier than the current datetime.
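The selection rule the server applies can be illustrated with a small filter. This is a sketch, not Scantron's server code: the field names scan_status and start_datetime are assumptions, and times are compared as timezone-aware UTC datetimes.

```python
from datetime import datetime, timezone


def due_scans(scheduled_scans, now=None):
    """Keep scans whose status is 'pending' and whose start time has passed."""
    if now is None:
        now = datetime.now(timezone.utc)
    return [
        scan
        for scan in scheduled_scans
        if scan["scan_status"] == "pending" and scan["start_datetime"] < now
    ]
```

A scan that is still in the future, or already in another status, is not returned, which is why a test scan must be both pending and in the past.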

# Not using SSH tunnels.

curl -k -X GET -H 'Authorization: Token <VALID API TOKEN>' https://192.168.1.99:443/api/scheduled_scans

# Using SSH tunnels.

curl -k -X GET -H 'Authorization: Token <VALID API TOKEN>' https://127.0.0.1:4430/api/scheduled_scans

You can also log in to the webapp using the agent name and password and browse to /api/?format=json to view any scan jobs. The username and agent name are the same from the webapp's point of view.
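An agent's callback amounts to the same authenticated GET as the curl commands above. The following is a minimal standard-library sketch, assuming the agent_config.json keys described earlier and a self-signed certificate on Master (hence the curl -k equivalent). The function names are hypothetical, and the exact User-Agent string Scantron's agent sends is not reproduced; the agent name alone is used here.

```python
import json
import ssl
import urllib.request


def scheduled_scans_request(config):
    """Build the GET request that curl performs above."""
    url = "https://{}:{}/api/scheduled_scans".format(config["master_address"], config["master_port"])
    return urllib.request.Request(
        url,
        headers={
            "Authorization": "Token {}".format(config["api_token"]),
            "User-Agent": config["scan_agent"],  # assumption: name only
        },
        method="GET",
    )


def fetch_scheduled_scans(config, verify_tls=False):
    """Send the request and decode the JSON response body."""
    context = ssl.create_default_context()
    if not verify_tls:
        # Equivalent of curl -k: accept Master's self-signed certificate.
        context.check_hostname = False
        context.verify_mode = ssl.CERT_NONE
    request = scheduled_scans_request(config)
    with urllib.request.urlopen(request, context=context, timeout=30) as response:
        return json.loads(response.read().decode("utf-8"))
```

With master_address set to 127.0.0.1 and master_port set to 4430, the request travels through the SSH tunnel. Disabling certificate checks mirrors curl -k; pass verify_tls=True if Master has a trusted certificate.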

- Place files with target IPs/hosts (fed to the nmap -iL switch) in master/target_files/. target_files is an NFS share on Master that the agent reads from through an SSH tunnel.

- nmap scan results from agents go in master/scan_results/, an NFS share on Master that the agent writes to through an SSH tunnel.

1). Ensure the SSH tunnels set up in /etc/rc.local are up.

netstat -nat | egrep "192.168.1.100|192.168.1.101"

ps -ef | egrep autossh

2). Django logs can be found here: /var/log/webapp/django_scantron.log

3). Check the nginx logs for the agent name in the User-Agent field to determine which agents are calling home.

nginx logs: tail -f /var/log/nginx/{access,error}.log

4). uwsgi logs: /home/scantron/master/logs

If you need to reboot a box, do it with the provided clean_reboot.sh script that will stop all relevant services.

Without stopping the nfs-kernel-server service gracefully, sometimes the OS will hang and get angry.

Ubuntu's nmap version pulled using apt is fairly out of date, and the recommendation for Scantron's agents is to pull the latest version.

For RPM-based distributions, the latest .rpm packages can be found here: https://nmap.org/dist/?C=M&O=D. However, for Debian-based distributions, you must use alien to convert the .rpm to a .deb file (https://nmap.org/book/inst-linux.html) or compile from source. Going down the alien route is recommended before compiling from source.

VERSION=7.70-1 # CHANGE THIS TO LATEST VERSION

apt install alien -y

wget https://nmap.org/dist/nmap-$VERSION.x86_64.rpm

alien nmap-$VERSION.x86_64.rpm

apt remove nmap -y

apt remove ndiff -y

dpkg --install nmap_*.deb

Another option is to compile nmap from source. This is dynamically compiled and must be done on the box where nmap is going to be run. Note that, in past experience, a compiled nmap version returned a different banner than the apt-provided version, so your mileage may vary.

VERSION=7.70 # CHANGE THIS TO LATEST VERSION (source tarballs have no -1 package release suffix)

wget https://nmap.org/dist/nmap-$VERSION.tar.bz2

bzip2 -cd nmap-$VERSION.tar.bz2 | tar xvf -

cd nmap-$VERSION

./configure --without-ncat --without-ndiff --without-nmap-update --without-nping --without-subversion \
--without-zenmap --with-libdnet=included --with-libpcap=included --with-libpcre=included

make

./nmap -V

This provides a list of the actual ports being scanned when the --top-ports option is used:

# TCP

nmap -sT --top-ports 1000 -v -oG -

# UDP

nmap -sU --top-ports 1000 -v -oG -

Sorted list based on frequency.

# TCP sorted list based on frequency.

egrep /tcp /usr/share/nmap/nmap-services | sort -r -k3

# UDP sorted list based on frequency.

egrep /udp /usr/share/nmap/nmap-services | sort -r -k3
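The egrep | sort pipelines above compare the third column (the open frequency) as text. A hypothetical Python equivalent that parses nmap-services and sorts the frequency numerically might look like this; it assumes the usual whitespace-separated layout of service name, port/protocol, frequency, and an optional # comment.

```python
def parse_services(lines, protocol="tcp"):
    """Return (frequency, port, service) tuples for one protocol, most frequent first."""
    entries = []
    for line in lines:
        fields = line.split("#", 1)[0].split()  # drop comments, split on whitespace
        if len(fields) < 3:
            continue  # blank line, comment, or no frequency column
        service, port_proto, frequency = fields[:3]
        port, _, proto = port_proto.partition("/")
        if proto == protocol:
            entries.append((float(frequency), int(port), service))
    entries.sort(key=lambda entry: entry[0], reverse=True)
    return entries


def top_ports(path="/usr/share/nmap/nmap-services", protocol="tcp", count=20):
    """Read the services file and return the `count` most frequent ports."""
    with open(path) as fh:
        return [port for _, port, _ in parse_services(fh, protocol)[:count]]
```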

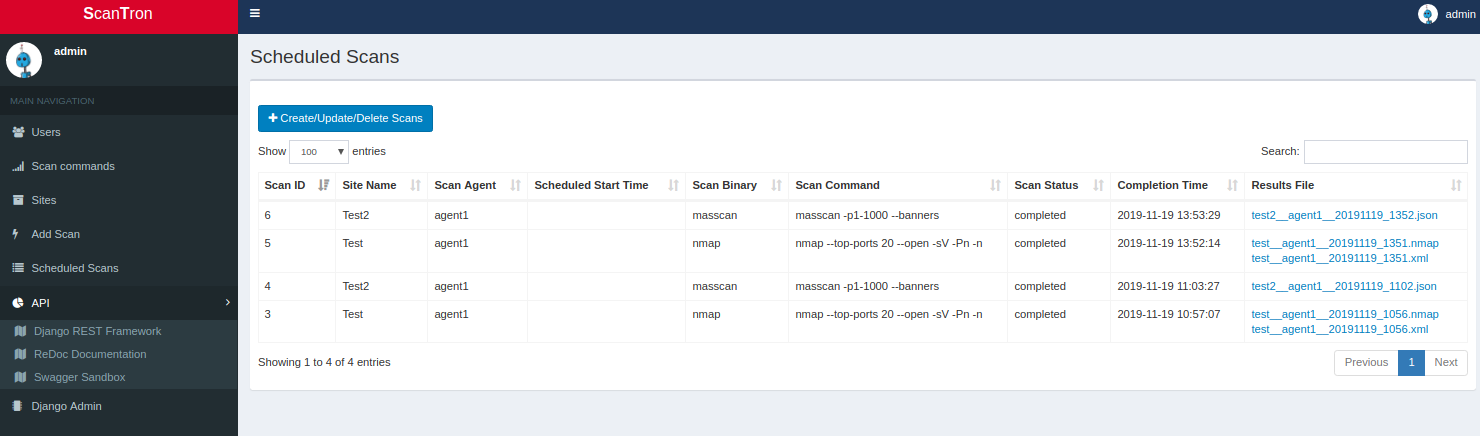

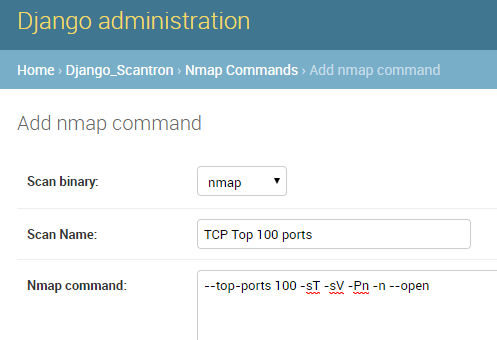

Create user/agent. By default, Ansible creates agent1.

Create nmap command


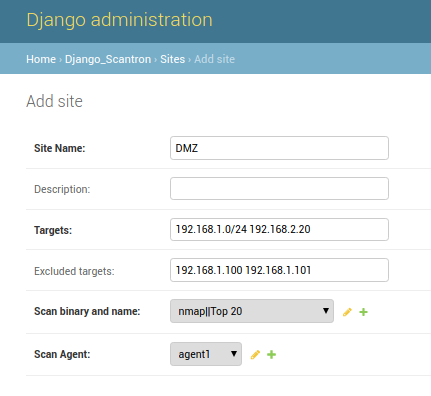

Create a site

- IPs, IP subnets, and FQDNs are allowed.

- IP ranges (e.g., 192.168.1.0-10) are not currently supported.

- The targets and excluded_targets are validated using master/extract_targets.py, which can also be used as a standalone script.
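master/extract_targets.py is the authoritative validator. Purely to illustrate the rules listed above, the following hypothetical sketch classifies a single target with Python's ipaddress module plus a simple hostname check. Its FQDN rules are assumptions and may be stricter or looser than Scantron's.

```python
import ipaddress
import re

# One DNS label: letters/digits, inner hyphens allowed, at most 63 characters.
LABEL_RE = re.compile(r"^[a-z0-9]([a-z0-9-]{0,61}[a-z0-9])?$", re.IGNORECASE)


def is_fqdn(value):
    labels = value.rstrip(".").split(".")
    return (
        len(value) <= 253
        and len(labels) >= 2
        and all(LABEL_RE.match(label) for label in labels)
        and re.search(r"[a-z]", labels[-1], re.IGNORECASE) is not None  # TLD has a letter
    )


def classify_target(value):
    """Return 'ip', 'subnet', or 'fqdn'; raise ValueError otherwise (e.g. ranges)."""
    value = value.strip()
    try:
        ipaddress.ip_address(value)
        return "ip"
    except ValueError:
        pass
    try:
        ipaddress.ip_network(value, strict=False)
        return "subnet"
    except ValueError:
        pass
    if is_fqdn(value):
        return "fqdn"
    raise ValueError("unsupported target: {!r}".format(value))
```

Requiring a letter in the last label keeps inputs like 192.168.1.0-10 from being mistaken for hostnames, so they are rejected just as the rules above describe.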


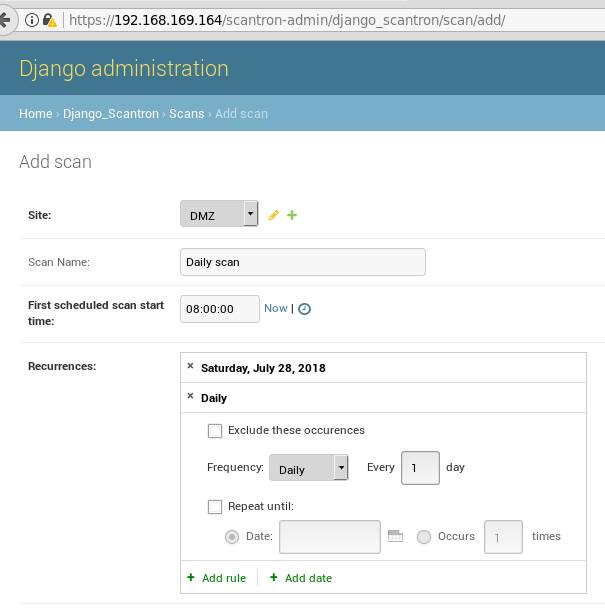

Create scan

- Select start time

- Add start date

- Add recurrence rules (if applicable)

The /home/scantron/master/scan_scheduler.sh cron job checks every minute to determine if any scans need to be queued. If scans are found, it schedules them to be picked up by the agents.

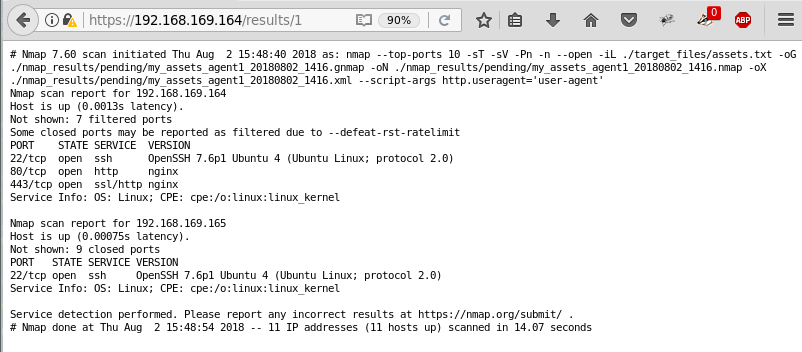

View currently executing scan results

cd /home/scantron/master/scan_results/pending

ls -lart

Completed scans are moved to the /home/scantron/master/scan_results/complete directory.

Process scans

Scan files are moved between a few folders.

- /home/scantron/master/scan_results/pending - Pending scan files from agents are stored here before being moved to scan_results/complete.

- /home/scantron/master/scan_results/complete - Completed scan files from agents are stored here before being processed by nmap_to_csv.py.

The scantron user executes a cron job (nmap_to_csv.sh, which calls nmap_to_csv.py) every 5 minutes that processes the .xml scan results found in the complete directory and moves them to the processed directory.

- /home/scantron/master/scan_results/processed - nmap scan files already processed by nmap_to_csv.py reside here.

- /home/scantron/master/for_bigdata_analytics - .csv files for big data analytics ingestion.
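The real conversion is done by Scantron's nmap_to_csv.py, whose columns and logic are not shown here. As a hedged illustration of the general idea only, the sketch below uses the standard library's xml.etree.ElementTree to flatten nmap's XML output (the host, address, port, state, and service elements) into one CSV row per port; the column choice and function names are assumptions.

```python
import csv
import io
import xml.etree.ElementTree as ET


def nmap_xml_to_rows(xml_text):
    """Yield one (ip, protocol, port, state, service) tuple per scanned port."""
    root = ET.fromstring(xml_text)
    for host in root.iter("host"):
        address = host.find("address[@addrtype='ipv4']")
        if address is None:
            address = host.find("address")  # fall back to IPv6 or MAC
        ip = address.get("addr") if address is not None else ""
        for port in host.iter("port"):
            state = port.find("state")
            service = port.find("service")
            yield (
                ip,
                port.get("protocol"),
                int(port.get("portid")),
                state.get("state") if state is not None else "",
                service.get("name") if service is not None else "",
            )


def rows_to_csv(rows):
    """Render rows as CSV text with a header line."""
    buffer = io.StringIO()
    writer = csv.writer(buffer)
    writer.writerow(["ip", "protocol", "port", "state", "service"])
    writer.writerows(rows)
    return buffer.getvalue()
```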

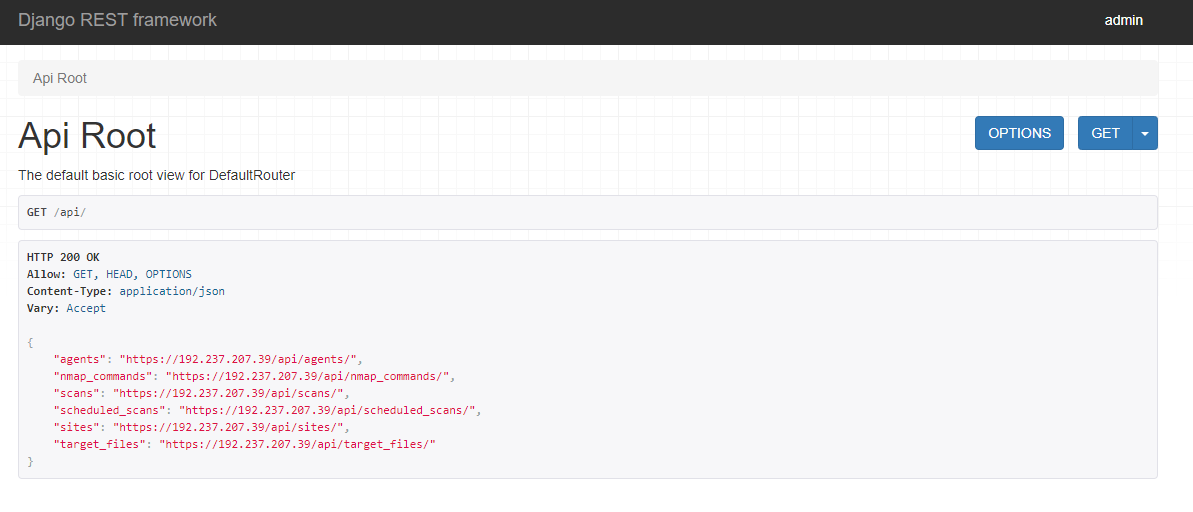

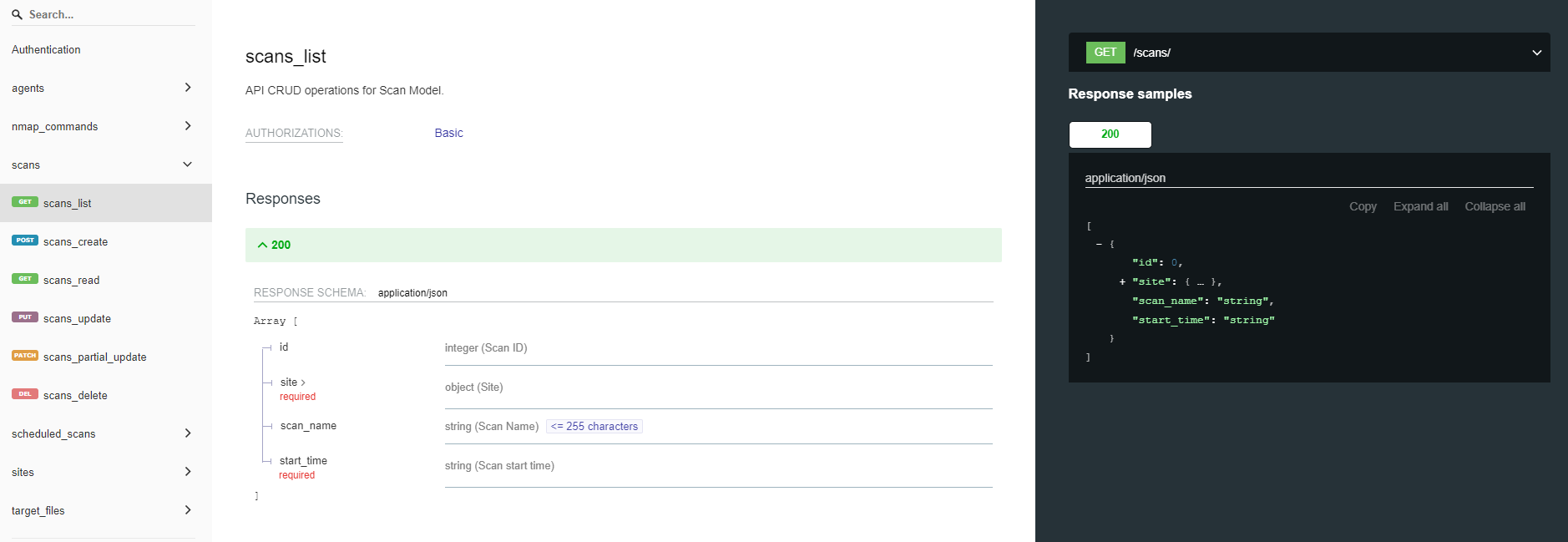

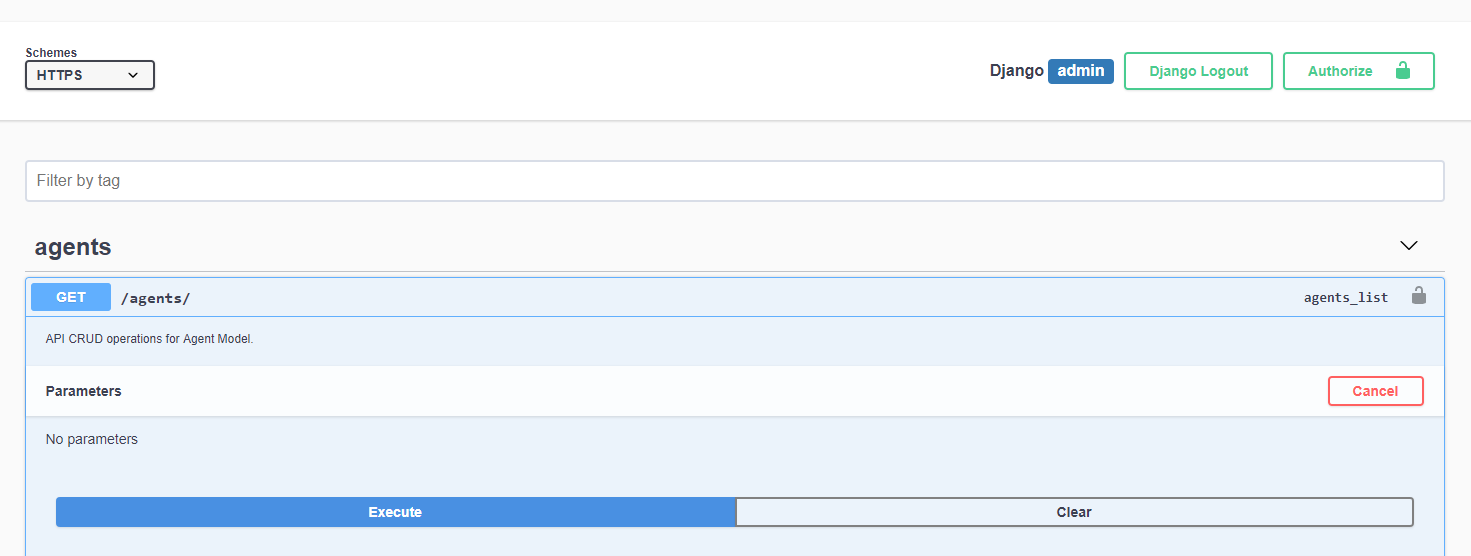

There are 3 ways to explore and play around with the API. The first is the Django REST Framework view:

You can also dig through the API documentation using ReDoc:

Lastly, you can interact with the API using Swagger:

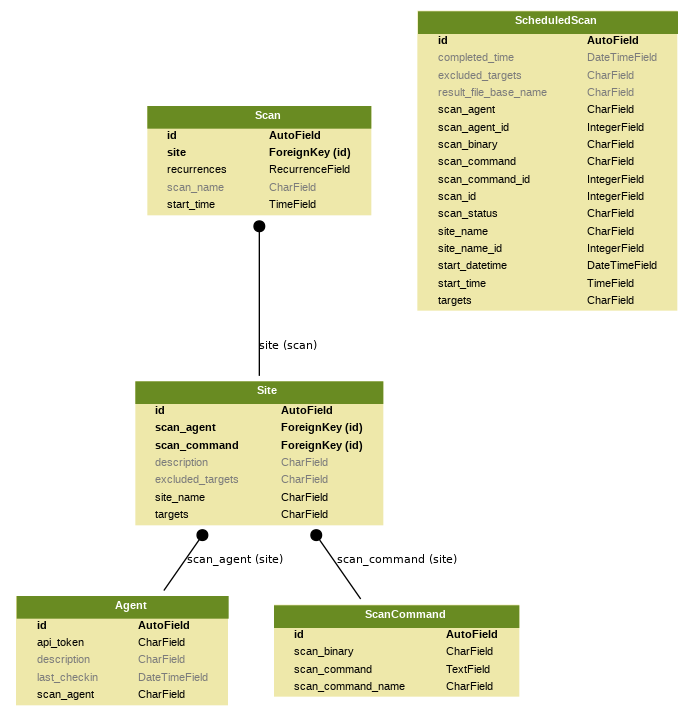

Generated using django-extensions's graph_models.

If you would like to contribute, please format Python code with the black formatter using a line length of 120.

More information about black can be found here: https://github.com/ambv/black

Robot lovingly delivered by Robohash.org (https://robohash.org)