In this module I applied several machine learning techniques that were introduced by the professor. They include:

- Feature engineering: label encoder and one-hot encoder

- Explainable AI by applying permutation importance, feature importance, and SHAP

- Trying different models to get the best accuracy
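To make the difference between the two encoders concrete, here is a minimal, dependency-free sketch. The `wind` column and both helper functions are hypothetical illustrations, not the code used in the module; a library encoder would normally do this job.

```python
def label_encode(values):
    """Map each distinct category to one integer (classes in sorted order)."""
    classes = sorted(set(values))
    index = {c: i for i, c in enumerate(classes)}
    return [index[v] for v in values], classes


def one_hot_encode(values):
    """Build one 0/1 column per distinct category (classes in sorted order)."""
    classes = sorted(set(values))
    rows = [[1 if v == c else 0 for c in classes] for v in values]
    return rows, classes


# Hypothetical categorical column, not taken from the Denpasar dataset
wind = ["north", "east", "north", "south"]

labels, label_classes = label_encode(wind)
onehot, onehot_classes = one_hot_encode(wind)

print(label_classes)  # ['east', 'north', 'south']
print(labels)         # [1, 0, 1, 2]
print(onehot)         # [[0, 1, 0], [1, 0, 0], [0, 1, 0], [0, 0, 1]]
```

Label encoding keeps a single column but implies an order between categories, while one-hot encoding avoids that order at the cost of one extra column per category.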

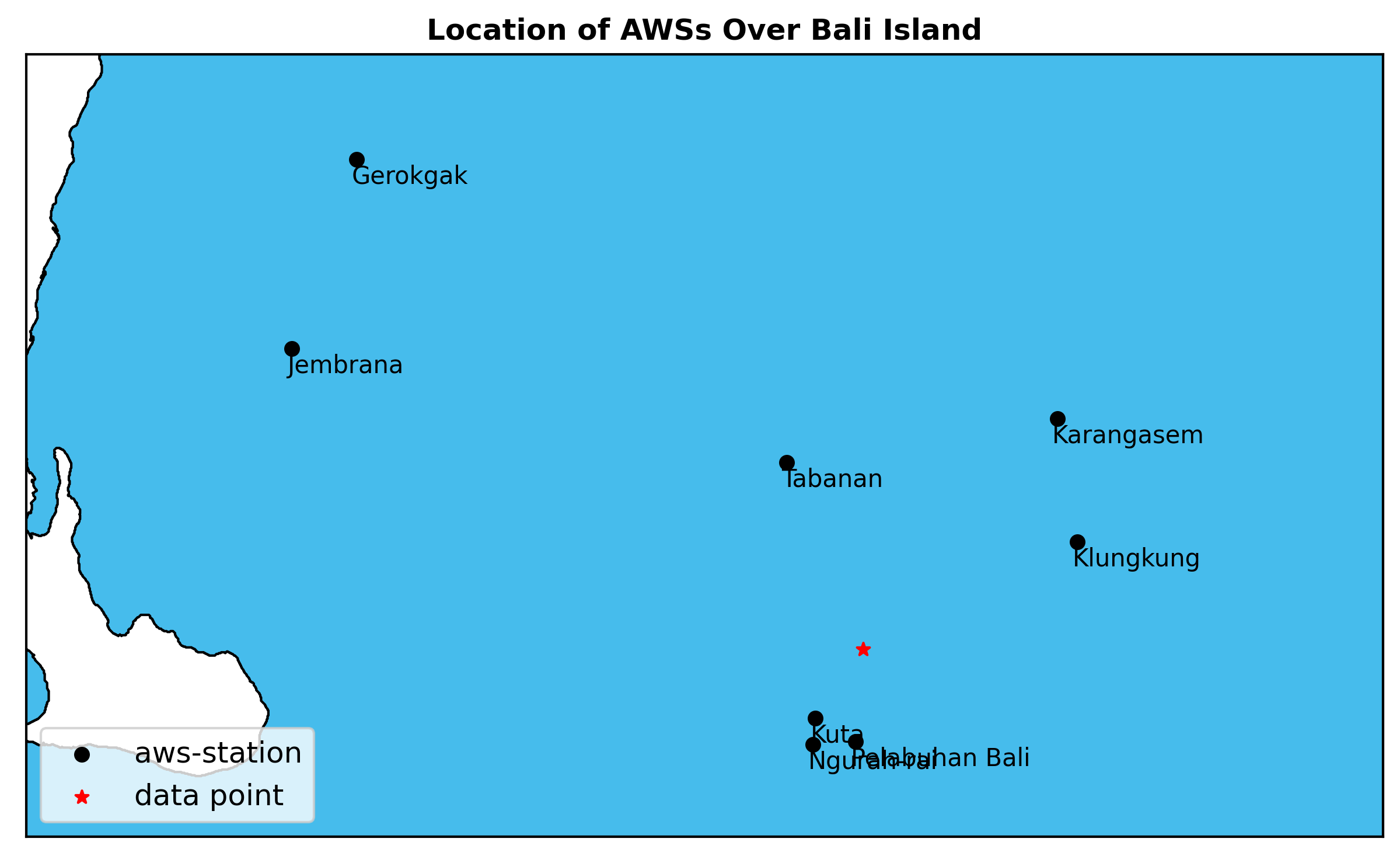

The data for this module was retrieved from Kaggle. It is weather data for the Denpasar location (-8.652497, 115.219117) and contains several weather variables for the period between 1990 and 2020. Personally, I am curious about which instruments produced this data, because it includes cloud values, which usually require dedicated equipment (a ceilometer) or otherwise manual observation. Meanwhile, judging from the odd-looking map figure (I think basemap does not have a Bali shapefile), the location is not one of the official automatic weather stations (AWS) listed by Indonesia's meteorology agency (BMKG). Alternatively, it could be model data that has been downscaled.

I did a quick EDA and decided the following:

- drop unwanted columns () because they have no data

- drop columns that hold static data and will have no effect on the model ()

- set the `weather_main` column as the target, which makes this a supervised classification problem

- make no attempt to remove the seasonality of several weather variables (pressure and all temperature variables)

- drop any variables that directly influence the target, such as `rain` and `clouds_all`
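The clean-up decisions above can be sketched without any libraries as follows. This is only an illustration on a tiny made-up table: apart from `weather_main`, `rain` and `clouds_all`, every column name (`temp`, `pressure`, `empty_col`, `static_col`) and every value is a hypothetical stand-in, and in practice a dataframe library would do the same in a few calls.

```python
# Tiny stand-in table: column name -> list of values (None marks a missing value).
table = {
    "temp": [27.1, 28.4, 26.9, 29.0],
    "pressure": [1010, 1009, 1011, 1008],
    "empty_col": [None, None, None, None],   # hypothetical column with no data
    "static_col": ["x", "x", "x", "x"],      # hypothetical column with one constant value
    "rain": [0.0, 1.2, 0.0, 3.4],
    "clouds_all": [20, 75, 40, 90],
    "weather_main": ["Clear", "Rain", "Clouds", "Rain"],
}

TARGET = "weather_main"
LEAKY = {"rain", "clouds_all"}  # variables that directly influence the target


def is_empty(values):
    """True when the column holds no data at all."""
    return all(v is None for v in values)


def is_static(values):
    """True when every non-missing value in the column is the same."""
    present = [v for v in values if v is not None]
    return len(set(present)) == 1


# The target is kept aside as y; everything else is filtered into the feature set.
y = table[TARGET]
features = {
    name: values
    for name, values in table.items()
    if name != TARGET
    and name not in LEAKY
    and not is_empty(values)
    and not is_static(values)
}

print(sorted(features))  # ['pressure', 'temp']
```

Note that pressure and temperature are kept as they are, matching the decision not to remove their seasonality.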

First, I built a GBM classifier, as I know that this type of model has several advantages:

- It can read categorical data without label encoding or conversion to numeric values

- It is not sensitive to the data distribution or to outliers

Still, I wanted to try both label encoding and one-hot encoding (OHE). Here is the accuracy of each model I built:

- GBM with plain data: 84%

- GBM after applying OHE: 85%

- XGB with applied OHE: 88%

- Random Forest Classifier with applied OHE: 89%

Some observations from these results:

- Applying feature engineering, with either the label encoder or the one-hot encoder, did not really improve the accuracy

- Each model assigns different weights to its most important features

- The models have accuracies between 84% and 89%, and the random forest classifier is the best among them

- SHAP could not be used with the GBM classifier, as it requires a binary target (0 and 1) for that model, and it took a very long time to run on the random forest model

- SHAP, permutation importance, and feature importance all help to explain how a model predicts the target, but SHAP offers more ways to inspect individual classes
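For readers unfamiliar with permutation importance, the idea can be sketched from scratch: shuffle one feature at a time and measure how much the accuracy drops. This is a simplified illustration, not the library routine used in the module; `toy_model`, the two features (read them as humidity and wind speed) and all the data values are hypothetical.

```python
import random


def accuracy(model, X, y):
    """Fraction of rows for which the model predicts the correct label."""
    return sum(model(row) == label for row, label in zip(X, y)) / len(y)


def permutation_importance(model, X, y, n_repeats=20, seed=0):
    """Mean drop in accuracy when a single feature column is shuffled."""
    rng = random.Random(seed)
    baseline = accuracy(model, X, y)
    importances = []
    for col in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            shuffled = [row[col] for row in X]
            rng.shuffle(shuffled)
            # Rebuild the table with only this one column permuted
            X_perm = [row[:col] + [v] + row[col + 1:] for row, v in zip(X, shuffled)]
            drops.append(baseline - accuracy(model, X_perm, y))
        importances.append(sum(drops) / n_repeats)
    return baseline, importances


def toy_model(row):
    """Hypothetical stand-in for a fitted classifier: it only looks at feature 0."""
    return "Rain" if row[0] > 80 else "Clear"


# Made-up rows of [humidity, wind_speed] with made-up labels
X = [[90, 3], [85, 7], [60, 1], [50, 9], [95, 4], [40, 6]]
y = ["Rain", "Rain", "Clear", "Clear", "Rain", "Clear"]

baseline, importances = permutation_importance(toy_model, X, y)
print(baseline)
print(importances)
```

Because the toy model ignores the second feature, shuffling it never changes a prediction and its importance is exactly zero, while shuffling the first feature lowers the accuracy. The same logic explains why different models rank features differently: each one relies on the inputs in its own way.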

I made no attempt to tune the hyperparameters, except for the learning rate of the GBM classifier.

The full code can be found here: App. Explainable Machine Learning.