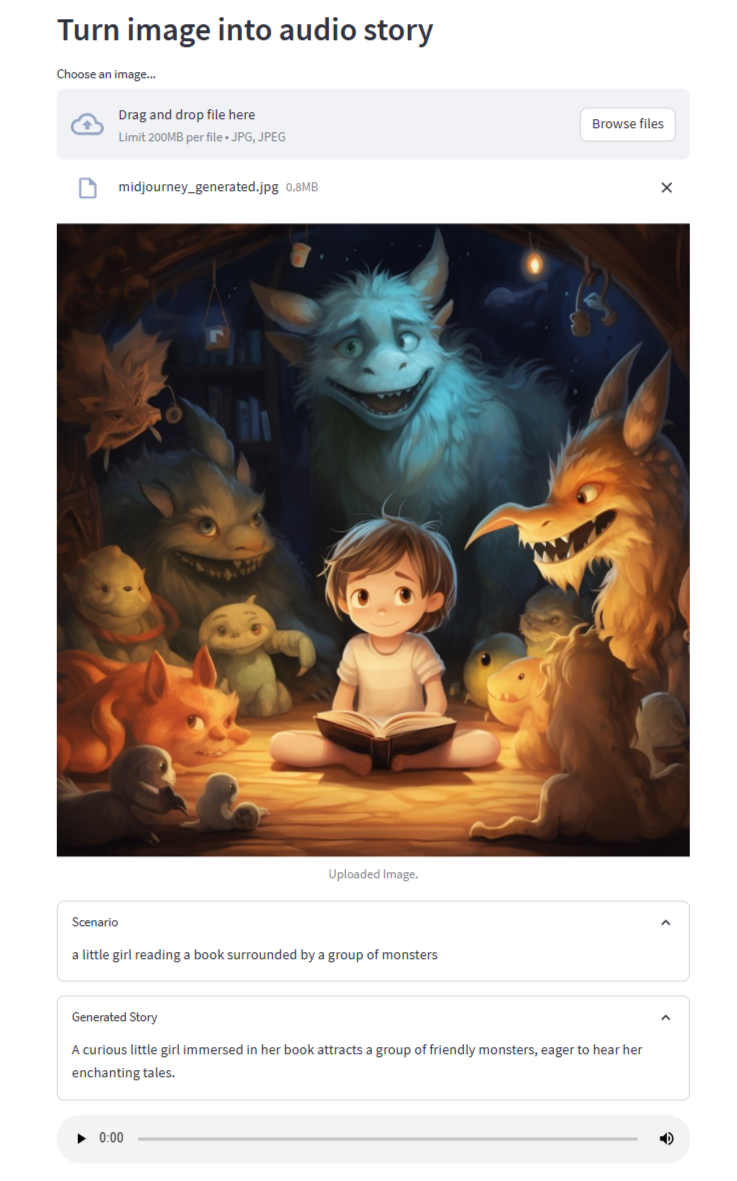

Convert images into captivating audio stories by combining image-to-text, language-model, and text-to-speech technologies.

This project turns images into audio stories in three stages: an image-to-text model extracts a text description (the scenario) from an uploaded image, a language model writes a short story based on that description, and a text-to-speech model converts the story into an audio clip you can play.
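The stages described above form a simple chain, where each stage's output is the next stage's input. The sketch below is illustrative only, not the project's code: `run_pipeline`, `StoryResult`, and the `fake_*` stand-ins are hypothetical names, and a real app would plug in actual image-to-text, language-model, and text-to-speech calls in place of the stubs.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class StoryResult:
    """Intermediate and final outputs of one run of the pipeline."""
    scenario: str  # text description from the image-to-text stage
    story: str     # short story from the language-model stage
    audio: bytes   # speech audio from the text-to-speech stage


def run_pipeline(
    image_bytes: bytes,
    image_to_text: Callable[[bytes], str],
    write_story: Callable[[str], str],
    text_to_speech: Callable[[str], bytes],
) -> StoryResult:
    """Chain the three stages: image -> scenario -> story -> audio."""
    scenario = image_to_text(image_bytes)
    story = write_story(scenario)
    audio = text_to_speech(story)
    return StoryResult(scenario=scenario, story=story, audio=audio)


# Hypothetical stand-in stages so the sketch runs without models or network access.
def fake_caption(image: bytes) -> str:
    return "a dog running on a beach"


def fake_story(scenario: str) -> str:
    return f"Once upon a time, there was {scenario}."


def fake_tts(text: str) -> bytes:
    return text.encode("utf-8")  # placeholder for real audio bytes


result = run_pipeline(b"fake image bytes", fake_caption, fake_story, fake_tts)
print(result.story)  # Once upon a time, there was a dog running on a beach.
```

Passing the stages in as callables keeps the chaining logic testable offline and lets each model be swapped independently.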

- Clone the Repository:

  ```bash
  git clone https://github.com/fshnkarimi/Image2AudioStoryConverter.git
  cd Image2AudioStoryConverter
  ```

- Install Dependencies:

  ```bash
  pip install -r requirements.txt
  ```

- Set Up Environment Variables: create a `.env` file in the project directory and add your Hugging Face API token:

  ```
  HUGGINGFACEHUB_API_TOKEN=your_token_here
  ```
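The README does not say how `app.py` reads this token; a common choice is the python-dotenv package. The stdlib-only sketch below is an assumption, not the project's code: `load_env_file` and `hf_auth_header` are hypothetical helpers that parse simple `KEY=VALUE` lines from `.env` and build the `Authorization: Bearer <token>` header that Hugging Face APIs accept.

```python
from __future__ import annotations

import os
import tempfile
from pathlib import Path


def load_env_file(path: str | Path = ".env") -> dict[str, str]:
    """Read simple KEY=VALUE lines from a .env file into os.environ.

    Blank lines, comments (#) and lines without '=' are skipped, and quotes
    around a value are stripped. Variables already set in the environment
    are left unchanged.
    """
    loaded: dict[str, str] = {}
    for raw in Path(path).read_text(encoding="utf-8").splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        key = key.strip()
        value = value.strip().strip("\"'")
        os.environ.setdefault(key, value)
        loaded[key] = value
    return loaded


def hf_auth_header() -> dict[str, str]:
    """Build the bearer-token Authorization header for Hugging Face APIs."""
    token = os.environ.get("HUGGINGFACEHUB_API_TOKEN")
    if not token:
        raise RuntimeError("HUGGINGFACEHUB_API_TOKEN is not set; check your .env file")
    return {"Authorization": f"Bearer {token}"}


# Example: write a throwaway .env file, load it, then build the header.
with tempfile.TemporaryDirectory() as tmp:
    env_path = Path(tmp) / ".env"
    env_path.write_text(
        "# Hugging Face token\nHUGGINGFACEHUB_API_TOKEN=your_token_here\n",
        encoding="utf-8",
    )
    loaded = load_env_file(env_path)

headers = hf_auth_header()
```

Failing fast with a clear message when the token is missing is friendlier than an opaque authentication error from the first API call.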

- Run the Streamlit App:

  ```bash
  streamlit run app.py
  ```

- Upload an Image:
  - Use the interface to upload an image.
  - The app processes the image, extracts a text description (the scenario), and generates a story from it.

- Experience the Story:
  - View the extracted scenario and the generated story in expandable sections.
  - Listen to the generated story as an audio clip.
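The story-generation step above turns the scenario into a prompt for the language model. The README does not show the project's actual prompt, so the template wording, the 50-word default, and the helpers `build_story_prompt` and `cap_words` below are hypothetical. The idea they illustrate is that keeping the story short also keeps the resulting audio clip short.

```python
from __future__ import annotations

# Hypothetical prompt template; wording and word limit are illustrative assumptions.
STORY_TEMPLATE = (
    "You are a storyteller. Write a short story based on the scenario below. "
    "The story should be no more than {max_words} words.\n\n"
    "Scenario: {scenario}\n"
    "Story:"
)


def build_story_prompt(scenario: str, max_words: int = 50) -> str:
    """Fill the template with the scenario from the image-to-text stage."""
    cleaned = " ".join(scenario.split())  # collapse newlines and repeated spaces
    if not cleaned:
        raise ValueError("empty scenario: the image-to-text stage returned no text")
    return STORY_TEMPLATE.format(max_words=max_words, scenario=cleaned)


def cap_words(story: str, max_words: int = 50) -> str:
    """Truncate the generated story in case the model ignores the length hint."""
    return " ".join(story.split()[:max_words])


prompt = build_story_prompt("  a dog running\n on a beach ", max_words=40)
print(prompt)
```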

Contributions are welcome! If you'd like to contribute to this project, please follow these steps:

- Fork this repository.

- Create a new branch for your feature or bug fix.

- Make your changes and submit a pull request.

Enjoy turning your images into captivating audio stories! Feel free to customize and enhance this project as you see fit. If you have any questions or ideas for improvement, please don't hesitate to get in touch.