A step-by-step tutorial to classify audio signals using continuous wavelet transform (CWT) as features.
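
As a rough illustration of what "CWT as features" means, the sketch below computes the magnitude of a continuous wavelet transform with NumPy only. The helper name `morlet_cwt`, the choice of a complex Morlet wavelet, the parameter `w0`, and the synthetic test tone are all assumptions made for this example; the tutorial's own code may use a different wavelet or a dedicated wavelet library.

```python
import numpy as np


def morlet_cwt(signal, scales, w0=6.0):
    """Return |CWT| of a 1-D signal, shape (len(scales), len(signal)).

    Hypothetical helper: a complex Morlet wavelet applied by direct convolution.
    """
    signal = np.asarray(signal, dtype=float)
    scalogram = np.empty((len(scales), signal.size))
    for i, s in enumerate(scales):
        # Sample the wavelet over +/- 4 widths of its Gaussian envelope.
        half = int(np.ceil(4 * s))
        t = np.arange(-half, half + 1) / s
        wavelet = np.exp(1j * w0 * t) * np.exp(-0.5 * t**2) / np.sqrt(s)
        # Correlating with the wavelet equals convolving with its
        # time-reversed complex conjugate.
        kernel = np.conj(wavelet[::-1])
        full = np.convolve(signal, kernel, mode="full")
        # Keep the part of the result aligned with the input samples.
        scalogram[i] = np.abs(full[half:half + signal.size])
    return scalogram


# Synthetic stand-in for a recording: a pure tone at 0.05 cycles per sample.
n = np.arange(1024)
tone = np.sin(2 * np.pi * 0.05 * n)
scales = np.arange(1, 41)
features = morlet_cwt(tone, scales)

# Average over time (away from the edges) to get one value per scale.
profile = features[:, 300:700].mean(axis=1)
best_scale = scales[np.argmax(profile)]
```

Each row of `features` corresponds to one scale and each column to one sample, so the array can be treated like an image (a scalogram) and passed to a classifier. For the test tone, the strongest response should sit roughly at scale `w0 / (2 * pi * 0.05)`, which is about 19 samples.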

- Create a virtual environment by using the command: `virtualenv venv`

- Activate the environment: `source venv/bin/activate`

- Install the libraries listed in requirements.txt by typing: `pip install -r requirements.txt`

- Extract the recordings.zip file


- recordings.zip: Contains recordings from the Free Spoken Digit Dataset (FSDD). You can also find this data here.

- training_raw_audio.npz: Only 3 speakers are classified here: george, jackson, and lucas. This NumPy zip file contains all the training data from these 3 speakers.

- testing_raw_audio.npz: This NumPy zip file contains all the testing data from the same 3 speakers.

- requirements.txt: It contains the required libraries.
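
The array names stored inside the two `.npz` files are not listed above, so a reasonable first step is to inspect them before writing any feature code. The sketch below does that with `np.load`; the helper name `summarize_npz` is hypothetical, and `allow_pickle=True` is an assumption that only matters if the recordings are stored as object arrays of varying length (use it only with files you trust).

```python
import os

import numpy as np


def summarize_npz(path):
    """Return {array_name: (shape, dtype)} for every array in a .npz file.

    Hypothetical helper for inspecting an archive whose keys are unknown.
    """
    # allow_pickle=True is an assumption: it is only required when the
    # archive holds object arrays (e.g. clips of different lengths).
    with np.load(path, allow_pickle=True) as archive:
        return {
            name: (archive[name].shape, str(archive[name].dtype))
            for name in archive.files
        }


# Print a summary for each data file that is present in the working directory.
for filename in ("training_raw_audio.npz", "testing_raw_audio.npz"):
    if os.path.exists(filename):
        for name, (shape, dtype) in summarize_npz(filename).items():
            print(f"{filename}: {name} shape={shape} dtype={dtype}")
```

Once the array names are known, the individual arrays can be read with `np.load(path)[name]` and passed on to feature extraction.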

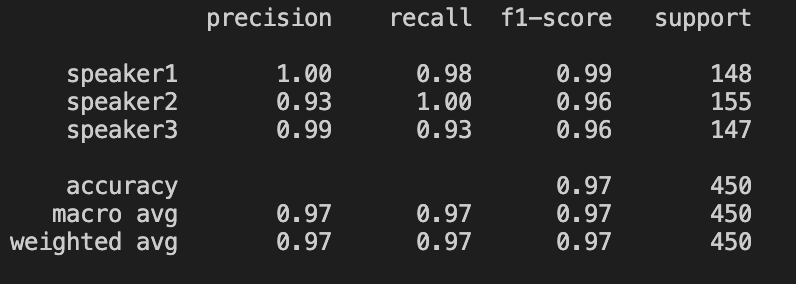

classification_report