S-NeRF: Neural Radiance Fields for Street Views

Ziyang Xie, Junge Zhang, Wenye Li, Feihu Zhang, Li Zhang

ICLR 2023

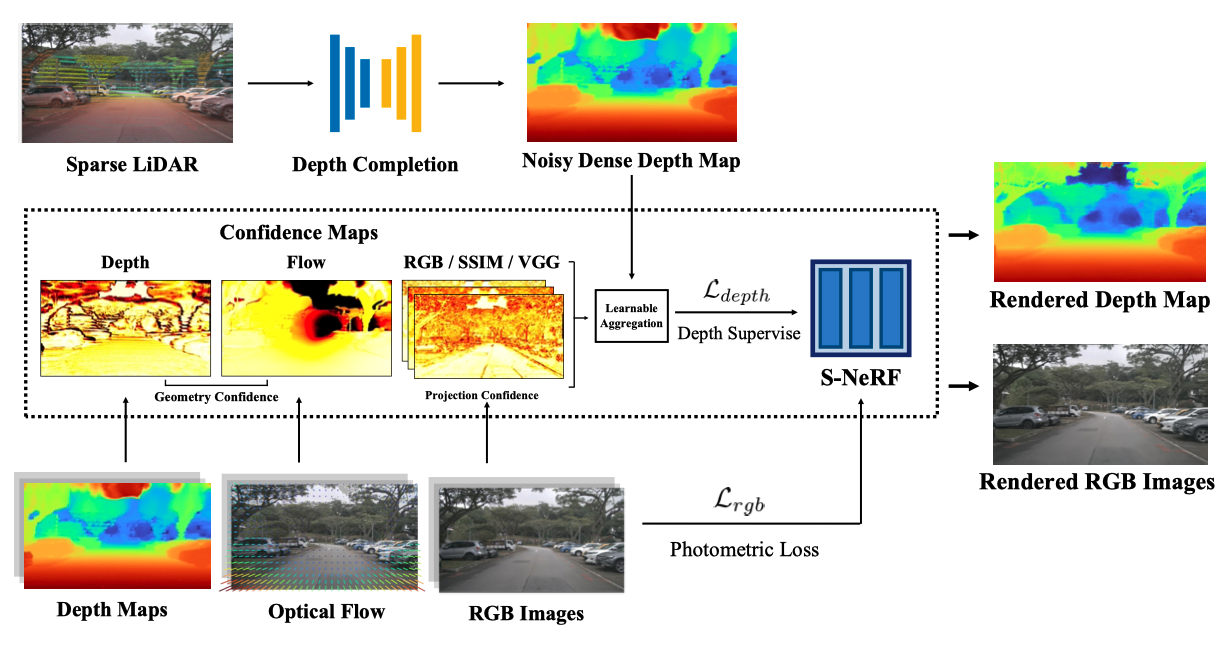

We introduce S-NeRF, a robust system for synthesizing large unbounded street views for autonomous driving using Neural Radiance Fields (NeRFs). This project aims to enhance the realism and accuracy of street view synthesis and to improve the robustness of NeRFs for real-world applications such as autonomous driving simulation, robotics, and augmented reality.

[8/06/24] We released the code for S-NeRF++: Autonomous Driving Simulation via Neural Reconstruction and Generation, a comprehensive driving simulation system based on neural reconstruction.

- Check it out 👉🏻 branch s-nerf++

- Large-scale Street View Synthesis: S-NeRF is able to synthesize large-scale street views with high fidelity and accuracy.

- Improved Realism and Accuracy: S-NeRF significantly improves the realism and accuracy of specular reflections and street view synthesis.

- Robust Geometry and Reprojection: By utilizing noisy and sparse LiDAR points, S-NeRF learns robust geometry and a reprojection-based confidence to address depth outliers.

- Foreground Moving Vehicles: S-NeRF extends its capabilities to reconstructing moving vehicles, a task that is impractical for conventional NeRFs.
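To make the idea of a reprojection-based confidence more concrete, here is a deliberately simplified sketch. It is not the confidence measure used by S-NeRF. It only illustrates the general principle: a depth value is trusted more when the pixel it reprojects to in a neighboring view has a similar color. The function name `reprojection_confidence`, the shared pinhole intrinsics `K`, nearest-neighbor sampling, and the color-difference score are all assumptions made for this illustration.

```python
import numpy as np


def reprojection_confidence(src_img, tgt_img, src_depth, K, T_src_to_tgt):
    """Per-pixel confidence in [0, 1] for a source-view depth map.

    Hypothetical helper for illustration only (not from the S-NeRF code).
    src_img, tgt_img: (H, W, 3) float arrays with values in [0, 1].
    src_depth:        (H, W) depth along the camera z-axis (0 = no depth).
    K:                (3, 3) pinhole intrinsics, assumed shared by both views.
    T_src_to_tgt:     (4, 4) rigid transform from source to target camera.
    """
    H, W = src_depth.shape
    v, u = np.mgrid[0:H, 0:W]
    pix = np.stack([u, v, np.ones_like(u)], axis=-1).reshape(-1, 3)
    pix = pix.astype(np.float64)

    # Back-project every source pixel to a 3D point in the source camera frame.
    pts_src = (np.linalg.inv(K) @ pix.T) * src_depth.reshape(1, -1)

    # Move the points into the target camera frame and project them.
    pts_tgt = T_src_to_tgt[:3, :3] @ pts_src + T_src_to_tgt[:3, 3:4]
    z = pts_tgt[2]
    in_front = z > 1e-6
    safe_z = np.where(in_front, z, 1.0)  # avoid dividing by zero
    proj = K @ pts_tgt
    u_t = np.rint(proj[0] / safe_z).astype(int)
    v_t = np.rint(proj[1] / safe_z).astype(int)

    # A pixel is usable only if it has depth and lands inside the target image.
    valid = in_front & (u_t >= 0) & (u_t < W) & (v_t >= 0) & (v_t < H)
    valid &= src_depth.reshape(-1) > 0

    # Confidence = 1 - mean absolute color difference; 0 where unusable.
    conf = np.zeros(H * W)
    src_rgb = src_img.reshape(-1, 3)
    diff = np.abs(src_rgb[valid] - tgt_img[v_t[valid], u_t[valid]]).mean(axis=-1)
    conf[valid] = np.clip(1.0 - diff, 0.0, 1.0)
    return conf.reshape(H, W)
```

With an identity transform and identical images, every pixel that has depth receives a confidence of 1, which is a convenient sanity check. A practical system would typically use sub-pixel sampling and more robust similarity measures than raw color differences.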

- Env Installation

- Pose Preparation Scripts

- Depth & Flow Preparation Scripts

- Code for training and testing

- Foreground Vehicle Reconstruction (see the foreground branch)

Create a conda environment:

conda create -n S-NeRF python=3.8

conda activate S-NeRF

Install the prerequisites:

pip install "git+https://github.com/facebookresearch/pytorch3d.git@stable"

Install the required packages:

pip install -r requirements.txt
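After installation, a quick sanity check can confirm that the key packages are visible to the active environment. The snippet below is a minimal sketch and not part of the repository. `check_environment` is a hypothetical helper, and the default module list (`torch`, `pytorch3d`) is an assumption based on the PyTorch3D install step above.

```python
import importlib.util
import sys


def check_environment(modules=("torch", "pytorch3d")):
    """Report which of the given modules can be found, without importing them."""
    return {name: importlib.util.find_spec(name) is not None for name in modules}


if __name__ == "__main__":
    print("python", sys.version.split()[0])
    for name, found in check_environment().items():
        print(f"{name}: {'ok' if found else 'MISSING'}")
```

Run it inside the activated conda environment; any module reported as missing points to an install step that did not complete.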

- Prepare the dataset according to the following file tree:

s-nerf/data/
├── nuScenes/
│   ├── mini/
│   └── trainval/
└── waymo/
    └── scenes/

- Put the scene name and its token in scene_dict.json:

{
    "scene-0916": "325cef682f064c55a255f2625c533b75",
    ...
}

- Prepare the poses, images, and depth in S-NeRF format

- nuScenes

python scripts/nuscenes_preprocess.py \
    --version [v1.0-mini / v1.0-trainval] \
    --dataroot ./data/<YOUR DATASET ROOT>

- Waymo

python scripts/waymo_preprocess.py

- Prepare the depth data

Put the first sample token of the scene in ./data/depth/sample_tokens.txt

Then follow the Depth Preparation Instruction
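Before running the preprocessing scripts, it can help to verify the data layout and generate the scene dictionary programmatically. The following is a minimal sketch and not part of the S-NeRF codebase. `check_layout` and `write_scene_dict` are hypothetical helpers, the output path for `scene_dict.json` is left as a parameter because its location is not stated above, and the token format check (32 lowercase hex characters) is an assumption based on the example entry.

```python
import json
import re
from pathlib import Path

# Sub-directories named in the file tree above, relative to s-nerf/data/.
EXPECTED_DIRS = ["nuScenes/mini", "nuScenes/trainval", "waymo/scenes"]


def check_layout(data_root):
    """Return the expected sub-directories that are missing under data_root."""
    root = Path(data_root)
    return [d for d in EXPECTED_DIRS if not (root / d).is_dir()]


def write_scene_dict(path, scenes):
    """Write a {scene name: scene token} mapping as JSON.

    Assumption: tokens are 32-character lowercase hex strings, as in the
    example entry above; adjust the check if your tokens differ.
    """
    for name, token in scenes.items():
        if not re.fullmatch(r"[0-9a-f]{32}", token):
            raise ValueError(f"unexpected token format for {name}: {token!r}")
    Path(path).write_text(json.dumps(scenes, indent=2) + "\n")


if __name__ == "__main__":
    missing = check_layout("data")
    print("missing directories:", missing or "none")
```

If you only use one dataset, some directories will be reported as missing; treat the report as a reminder rather than an error.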

cd s-nerf

python train.py --config [CONFIG FILE]

For the foreground vehicle reconstruction, please refer to branch foreground.

If you find this work useful, please cite:

@inproceedings{ziyang2023snerf,
  author = {Xie, Ziyang and Zhang, Junge and Li, Wenye and Zhang, Feihu and Zhang, Li},
  title = {S-NeRF: Neural Radiance Fields for Street Views},
  booktitle = {International Conference on Learning Representations (ICLR)},
  year = {2023}
}