Tensor parallelism is all you need. Run LLMs on weak devices or make powerful devices even more powerful by distributing the workload and dividing the RAM usage. This project proves that it's possible to split the workload of LLMs across multiple devices and achieve a significant speedup. Distributed Llama allows you to run huge LLMs in-house. The project uses TCP sockets to synchronize the state. You can easily configure your AI cluster by using a home router.

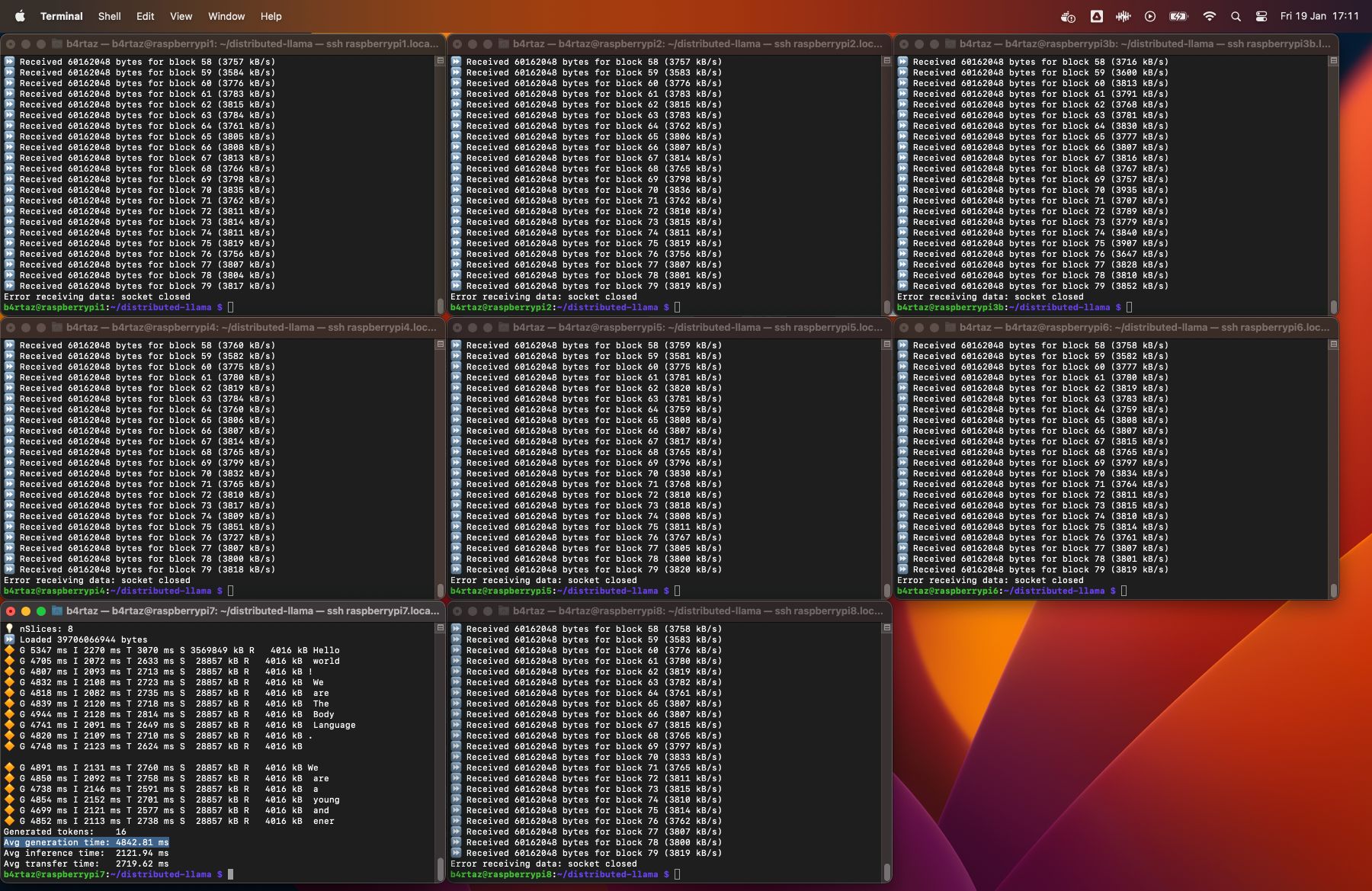

Distributed Llama running Llama 2 70B on 8 Raspberry Pi 4B devices

Python 3 and a C++ compiler are required. Each command below downloads the model and the tokenizer.

| Model | Purpose | Size | Command |
|---|---|---|---|
| TinyLlama 1.1B 3T Q40 | Benchmark | 844 MB | `python launch.py tinyllama_1_1b_3t_q40` |
| Llama 3 8B Q40 | Benchmark | 6.32 GB | `python launch.py llama3_8b_q40` |
| Llama 3 8B Instruct Q40 | Chat, API | 6.32 GB | `python launch.py llama3_8b_instruct_q40` |
| Llama 3.1 8B Instruct Q40 | Chat, API | 6.32 GB | `python launch.py llama3_1_8b_instruct_q40` |

Supported architectures: Llama, Mixtral, Grok

- You can run Distributed Llama only on 1, 2, 4... 2^n nodes.
- The maximum number of nodes is equal to the number of KV heads in the model (#70).
- CPU support only, GPU support is planned. Optimized for (weights format × buffer format):
  - ARM CPUs
    - ✅ F32 × F32
    - ❌ F16 × F32
    - ✅ Q40 × F32
    - ✅ Q40 × Q80
  - x86_64 AVX2 CPUs
    - ✅ F32 × F32
    - ❌ F16 × F32
    - ✅ Q40 × F32
    - ✅ Q40 × Q80
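The two node-count limitations above can be checked before starting a cluster. The sketch below is only an illustration: the helper names are hypothetical and not part of Distributed Llama, and the KV head count must be looked up in the configuration of the model you actually use (8 is just an example value here).

```python
def is_power_of_two(n):
    """True for 1, 2, 4, 8, ... (positive integers with a single bit set)."""
    return n > 0 and (n & (n - 1)) == 0


def allowed_node_counts(n_kv_heads):
    """Cluster sizes (root node included) that satisfy both limitations:
    a power of two, and not larger than the number of KV heads."""
    return [n for n in range(1, n_kv_heads + 1) if is_power_of_two(n)]


# Example value only: a model with 8 KV heads.
print(allowed_node_counts(8))
```

With 8 KV heads the valid cluster sizes are 1, 2, 4 and 8 nodes; a cluster of 6 devices would be rejected by the first rule.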

The project is split up into two parts:

- Root node - it's responsible for loading the model and weights and forwarding them to the workers. It also synchronizes the state of the neural network. The root node is also a worker: it processes its own slice of the neural network.

- Worker node - it processes its own slice of the neural network. It doesn't require any configuration related to the model.

You always need the root node and you can add 2^n - 1 worker nodes to speed up the inference. The RAM usage of the neural network is split up across all nodes. The root node requires a bit more RAM than worker nodes.
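As a rough planning aid, the relationship between cluster size, worker count and memory can be sketched as follows. This is a back-of-the-envelope estimate under a loud assumption: the weights are divided evenly across nodes, and the extra RAM on the root node, the KV cache and the buffers are ignored. The function names are hypothetical.

```python
def worker_count(n_nodes):
    """Workers to start next to the root node for a cluster of n_nodes (2^n) devices."""
    if n_nodes < 1 or n_nodes & (n_nodes - 1):
        raise ValueError("the number of nodes must be a power of two")
    return n_nodes - 1


def approx_weights_per_node_gb(model_size_gb, n_nodes):
    """Assumed even split of the weights; real usage is somewhat higher,
    especially on the root node."""
    return model_size_gb / n_nodes


# Llama 3 8B Q40 (6.32 GB per the model table) on a 4-node cluster.
print(worker_count(4), "workers")
print(round(approx_weights_per_node_gb(6.32, 4), 2), "GB of weights per node (estimate)")
```

Treat the result as a lower bound when deciding whether a model fits on a given device.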

- `dllama inference` - run the inference with a simple benchmark,
- `dllama chat` - run the CLI chat,
- `dllama worker` - run the worker node,
- `dllama-api` - run the API server.

Inference, Chat, API

| Argument | Description | Example |
|---|---|---|
| `--model <path>` | Path to the model. | `dllama_model_meta-llama-3-8b_q40.m` |
| `--tokenizer <path>` | Path to the tokenizer. | `dllama_tokenizer_llama3.t` |
| `--buffer-float-type <type>` | Float precision of synchronization. | `q80` |
| `--workers <workers>` | Addresses of workers (ip:port), separated by spaces. | `10.0.0.1:9991 10.0.0.2:9991` |

Inference, Chat, Worker, API

| Argument | Description | Example |
|---|---|---|
| `--nthreads <n>` | Number of threads. Don't set a higher value than the number of CPU cores. | `4` |

Worker, API

| Argument | Description | Example |
|---|---|---|
| `--port <port>` | Binding port. | `9999` |

Inference

| Argument | Description | Example |
|---|---|---|
| `--prompt <prompt>` | Initial prompt. | `"Hello World"` |
| `--steps <steps>` | Number of tokens to generate. | `256` |
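When the cluster is started from a script, the arguments listed above can be assembled programmatically. The following is a minimal sketch, not part of the project: `build_inference_cmd` is a hypothetical helper, it uses only the flags documented in the tables, and it assumes the `dllama` binary sits in the current directory. `--workers` is placed last, with every address passed as a separate argument.

```python
import shlex


def build_inference_cmd(model, tokenizer, prompt, steps, nthreads,
                        workers=(), buffer_float_type="q80", binary="./dllama"):
    """Return the argv list for `dllama inference` (hypothetical helper)."""
    cmd = [
        binary, "inference",
        "--model", model,
        "--tokenizer", tokenizer,
        "--buffer-float-type", buffer_float_type,
        "--prompt", prompt,
        "--steps", str(steps),
        "--nthreads", str(nthreads),
    ]
    if workers:
        # One ip:port entry per worker, space-separated on the command line.
        cmd += ["--workers", *workers]
    return cmd


cmd = build_inference_cmd(
    model="dllama_model_meta-llama-3-8b_q40.m",
    tokenizer="dllama_tokenizer_llama3.t",
    prompt="Hello world",
    steps=16,
    nthreads=4,
    workers=["10.0.0.2:9998", "10.0.0.3:9998", "10.0.0.4:9998"],
)
print(shlex.join(cmd))  # shell-quoted form, handy for logging
```

The returned list can be handed directly to `subprocess.run(cmd)`, which avoids shell-quoting problems with prompts that contain spaces.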

I - inference time of the root node, T - network transfer time of the root node.

Raspberry Pi 5 8GB

Weights = Q40, Buffer = Q80, nSamples = 16, switch = TP-Link LS1008G, tested on 0.3.1 version

| Model | 1 x RasPi 5 8 GB | 2 x RasPi 5 8 GB | 4 x RasPi 5 8 GB |
|---|---|---|---|
| Llama 2 7B | 441.09 ms, 2.26 t/s (I: 434.84 ms, T: 5.25 ms) | 341.46 ms, 2.92 t/s (I: 257.78 ms, T: 83.27 ms) | 219.08 ms, 4.56 t/s 🔥 (I: 163.42 ms, T: 55.25 ms) |
| Llama 3 8B | 564.31 ms, 1.77 t/s (I: 556.67 ms, T: 6.17 ms) | 444.27 ms, 2.25 t/s (I: 362.73 ms, T: 80.11 ms) | 331.47 ms, 3.01 t/s 🔥 (I: 267.62 ms, T: 62.34 ms) |
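To compare configurations, the per-token times in the table can be converted into throughput, speedup and parallel efficiency. The sketch below uses the Llama 2 7B row from the Raspberry Pi 5 table; the function names are illustrative, and "efficiency" here simply means speedup divided by the number of nodes.

```python
def tokens_per_second(ms_per_token):
    """Throughput implied by an average per-token time in milliseconds."""
    return 1000.0 / ms_per_token


def speedup(single_node_ms, cluster_ms):
    """How many times faster the cluster is than a single node."""
    return single_node_ms / cluster_ms


# Llama 2 7B on Raspberry Pi 5 8 GB: nodes -> average time per token (ms).
llama2_7b_pi5 = {1: 441.09, 2: 341.46, 4: 219.08}

for nodes, ms in llama2_7b_pi5.items():
    s = speedup(llama2_7b_pi5[1], ms)
    print(f"{nodes} node(s): {tokens_per_second(ms):.2f} t/s, "
          f"speedup {s:.2f}x, efficiency {s / nodes:.0%}")
```

The printed throughput can differ from the table in the last digit because of rounding. Four nodes give roughly a 2x speedup for this model, so the scaling is clearly sublinear.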

Raspberry Pi 4B 8 GB

Weights = Q40, Buffer = Q80, nSamples = 16, switch = TP-Link LS1008G, tested on 0.1.0 version

| Model | 1 x RasPi 4B 8 GB | 2 x RasPi 4B 8 GB | 4 x RasPi 4B 8 GB | 8 x RasPi 4B 8 GB |
|---|---|---|---|---|
| Llama 2 7B | 1312.50 ms (I: 1307.94 ms, T: 1.81 ms) | 793.69 ms (I: 739.00 ms, T: 52.50 ms) | 494.00 ms 🔥 (I: 458.81 ms, T: 34.06 ms) | 588.19 ms (I: 296.69 ms, T: 289.75 ms) |
| Llama 2 13B | Not enough RAM | 1497.19 ms (I: 1465.06 ms, T: 30.88 ms) | 848.19 ms 🔥 (I: 746.88 ms, T: 99.50 ms) | 1114.88 ms (I: 460.8 ms, T: 652.88 ms) |
| Llama 2 70B | Not enough RAM | Not enough RAM | Not enough RAM | 4842.81 ms 🔥 (I: 2121.94 ms, T: 2719.62 ms) |
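In this table, 8 devices are slower than 4 for Llama 2 7B and 13B even though the inference time (I) keeps shrinking. Computing the share of each token spent on network transfer (T) makes the reason visible. A small sketch using the Llama 2 7B row; the helper name is illustrative:

```python
def transfer_share(inference_ms, transfer_ms):
    """Fraction of the measured I + T time that is spent on network transfer."""
    return transfer_ms / (inference_ms + transfer_ms)


# Llama 2 7B on Raspberry Pi 4B 8 GB: nodes -> (I, T) in milliseconds.
llama2_7b_pi4 = {
    1: (1307.94, 1.81),
    2: (739.00, 52.50),
    4: (458.81, 34.06),
    8: (296.69, 289.75),
}

for nodes, (i_ms, t_ms) in llama2_7b_pi4.items():
    print(f"{nodes} node(s): transfer share {transfer_share(i_ms, t_ms):.1%}")
```

At 8 nodes about half of every token is spent waiting on the network, which cancels out the gain from the smaller per-node slice.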

x86_64 CPU Cloud Server

Weights = Q40, Buffer = Q80, nSamples = 16, VMs = c3d-highcpu-30, tested on 0.1.0 version

| Model | 1 x VM | 2 x VM | 4 x VM |
|---|---|---|---|
| Llama 2 7B | 101.81 ms (I: 101.06 ms, T: 0.19 ms) | 69.69 ms (I: 61.50 ms, T: 7.62 ms) | 53.69 ms 🔥 (I: 40.25 ms, T: 12.81 ms) |
| Llama 2 13B | 184.19 ms (I: 182.88 ms, T: 0.69 ms) | 115.38 ms (I: 107.12 ms, T: 7.81 ms) | 86.81 ms 🔥 (I: 66.25 ms, T: 19.94 ms) |
| Llama 2 70B | 909.69 ms (I: 907.25 ms, T: 1.75 ms) | 501.38 ms (I: 475.50 ms, T: 25.00 ms) | 293.06 ms 🔥 (I: 264.00 ms, T: 28.50 ms) |

F32 Buffer

| Model | 2 devices | 4 devices | 8 devices |
|---|---|---|---|
| Llama 3 8B | 2048 kB | 6144 kB | 14336 kB |

Q80 Buffer

| Model | 2 devices | 4 devices | 8 devices |
|---|---|---|---|
| Llama 3 8B | 544 kB | 1632 kB | 3808 kB |
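The ratio between the F32 and Q80 figures is constant, which suggests a fixed block layout. The sketch below assumes (this is not stated in this document) that a Q80 block stores 32 values as 8-bit integers plus one 16-bit scale, i.e. 34 bytes instead of the 128 bytes needed for 32 F32 values. Under that assumption the Q80 row can be reproduced from the F32 row:

```python
# Assumed Q80 block layout: 32 int8 values + one 16-bit scale factor.
Q80_BLOCK_VALUES = 32
Q80_BLOCK_BYTES = 32 * 1 + 2
F32_BYTES = 4


def q80_size_kb(f32_size_kb):
    """Size of the same number of values once stored as Q80 instead of F32."""
    return f32_size_kb * Q80_BLOCK_BYTES / (Q80_BLOCK_VALUES * F32_BYTES)


# Llama 3 8B, F32 buffer: devices -> kB (from the F32 table).
f32_kb = {2: 2048, 4: 6144, 8: 14336}

for devices, kb in f32_kb.items():
    print(f"{devices} devices: F32 {kb} kB -> Q80 {q80_size_kb(kb):.0f} kB")
```

The computed values match the Q80 table (544, 1632 and 3808 kB), so using `--buffer-float-type q80` cuts the synchronized data to roughly 27% of the F32 volume.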

1. Install `Raspberry Pi OS Lite (64 bit)` on your Raspberry Pi devices. This OS doesn't have a desktop environment.
2. Connect all devices to your switch or router.
3. Connect to all devices via SSH.

        ssh user@raspberrypi1.local
        ssh user@raspberrypi2.local

4. Install Git:

        sudo apt install git

5. Clone this repository and compile Distributed Llama on all devices:

        git clone https://github.com/b4rtaz/distributed-llama.git
        cd distributed-llama
        make dllama
        make dllama-api

6. Transfer weights and the tokenizer file to the root device.
7. Optional: assign static IP addresses.

        sudo ip addr add 10.0.0.1/24 dev eth0 # 1st device
        sudo ip addr add 10.0.0.2/24 dev eth0 # 2nd device

8. Run worker nodes on worker devices:

        sudo nice -n -20 ./dllama worker --port 9998 --nthreads 4

9. Run root node on the root device:

        sudo nice -n -20 ./dllama inference --model dllama_model_meta-llama-3-8b_q40.m --tokenizer dllama_tokenizer_llama3.t --buffer-float-type q80 --prompt "Hello world" --steps 16 --nthreads 4 --workers 10.0.0.2:9998

To add more worker nodes, just add more addresses to the `--workers` argument.

    ./dllama inference ... --workers 10.0.0.2:9998 10.0.0.3:9998 10.0.0.4:9998

You need x86_64 AVX2 CPUs or ARM CPUs. Different devices may have different CPUs.

The instructions below are for Debian-based distributions, but you can easily adapt them to your distribution or to macOS.

Linux (Debian-based):

1. Install Git and GCC:

        sudo apt install git build-essential

2. Clone this repository and compile Distributed Llama on all computers:

        git clone https://github.com/b4rtaz/distributed-llama.git
        cd distributed-llama
        make dllama
        make dllama-api

Continue to point 3.

Windows:

1. Install Git and Mingw (via Chocolatey):

        choco install mingw

2. Clone this repository and compile Distributed Llama on all computers:

        git clone https://github.com/b4rtaz/distributed-llama.git
        cd distributed-llama
        make dllama
        make dllama-api

Continue to point 3.

All systems:

3. Transfer weights and the tokenizer file to the root computer.
4. Run worker nodes on worker computers:

        ./dllama worker --port 9998 --nthreads 4

5. Run root node on the root computer:

        ./dllama inference --model dllama_model_meta-llama-3-8b_q40.m --tokenizer dllama_tokenizer_llama3.t --buffer-float-type q80 --prompt "Hello world" --steps 16 --nthreads 4 --workers 192.168.0.1:9998

To add more worker nodes, just add more addresses to the `--workers` argument.

    ./dllama inference ... --workers 192.168.0.1:9998 192.168.0.2:9998 192.168.0.3:9998

This project is released under the MIT license.

    @misc{dllama,
      author = {Bartłomiej Tadych},
      title = {Distributed Llama},
      year = {2024},
      publisher = {GitHub},
      journal = {GitHub repository},
      howpublished = {\url{https://github.com/b4rtaz/distributed-llama}},
      commit = {7eb77ca93ec0d502e28d36b6fb20039b449cbea4}
    }