KeySearchWiki is a dataset for evaluating keyword search systems over Wikidata. This dataset is particularly designed for the Type Search task (as defined by J. Pound et al. under Type Query), where the goal is to retrieve a list of entities having a specific type given by a user query (e.g., Paul Auster novels).

KeySearchWiki consists of 16,605 queries and their corresponding relevant Wikidata entities. The dataset was automatically generated by leveraging Wikidata and Wikipedia set categories (e.g., Category:American television directors) as data sources for both relevant entities and queries. Relevant entities are gathered by carefully navigating the Wikipedia set categories hierarchy in all available languages. Furthermore, those categories are refined and combined to derive more complex queries (e.g., multi-hop queries).

The dataset generation workflow is explained in detail in the paper and the steps needed to reproduce the current dataset or generate a new dataset version are described under Dataset Generation. Furthermore, a concrete use case of the dataset is demonstrated under Experiments and the steps for evaluating the accuracy of relevant entities are presented under Evaluation.

The dataset can be used to evaluate Keyword Search Systems over Wikidata, specifically over the Wikidata Dump Version of 2021-09-20. KeySearchWiki is intended for evaluating retrieval systems that answer a user keyword query by returning a list of entities given by their IRIs.

Both queries and relevant entities are provided following the format described in Format. More insights about the dataset characteristics can be found here. A concrete use case of the dataset is demonstrated in Experiments using an approach based on a document-centric information retrieval system.

Potential users can either use the provided dataset version directly or generate a new one that is in line with their target knowledge graph. The process of automatically generating a new dataset is described under Dataset Generation.

The KeySearchWiki dataset is published on Zenodo in two different formats: Standard TREC format and JSON format.

JSON Format

KeySearchWiki-JSON.json gives a detailed version of the dataset. Each data entry consists of the following properties:

| Property | Description | Example |
|---|---|---|
| queryID | Unique identifier of the query, given as prefix-number, where the prefix is one of NT (native), MK (multi-keyword), or MH (multi-hop) | NT1149, MK79540, MH161 |
| query | Natural language query in the form `<keyword1 keyword2 ... target>` | male television actor human |
| keywords | IRIs of the Wikidata entities (or literals) corresponding to the keywords, together with their labels, types, and a boolean indicator isiri (isiri = false if the keyword is a literal, isiri = true if it is an IRI) | `[{"iri":"Q10798782","label":"television actor","isiri":"true","types":[{"type":"Q28640","typeLabel":"profession"}]}]` |
| target | Type of the entities to retrieve, given by its Wikidata IRI and label | `{"iri":"Q5","label":"human"}` |
| relevantEntities | Entities that are relevant results for the query, given by their Wikidata IRI and label | `{"iri":"Q16904614","label":"Zoological Garden of Monaco"}` as a relevant result for the query Europe zoo |
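As a rough illustration of how an entry with these properties might be consumed, the Python sketch below parses one entry and pulls out the identifiers a retrieval system would need. The sample is assembled from the examples in this README; the assumption that the file holds a JSON array of entries, the empty `types` list for the first keyword, and the omitted entity labels are simplifications, and `summarize` is a hypothetical helper.

```python
import json

# Illustrative entry built from the README examples. Assumption: the real
# file is a JSON array of such entries. Entity labels and the types of the
# first keyword are omitted here for brevity.
SAMPLE = """
[
  {
    "queryID": "NT1149",
    "query": "male television actor human",
    "keywords": [
      {"iri": "Q6581097", "label": "male", "isiri": "true", "types": []},
      {"iri": "Q10798782", "label": "television actor", "isiri": "true",
       "types": [{"type": "Q28640", "typeLabel": "profession"}]}
    ],
    "target": {"iri": "Q5", "label": "human"},
    "relevantEntities": [{"iri": "Q100028"}, {"iri": "Q100293"}]
  }
]
"""


def summarize(entry):
    """Return (queryID, keyword IRIs, target IRI, relevant entity IRIs)."""
    # In the example above, isiri is stored as the string "true"/"false".
    keyword_iris = [k["iri"] for k in entry["keywords"] if k["isiri"] == "true"]
    relevant = [e["iri"] for e in entry["relevantEntities"]]
    return entry["queryID"], keyword_iris, entry["target"]["iri"], relevant


entries = json.loads(SAMPLE)
for entry in entries:
    print(summarize(entry))
```

For the real dataset, `SAMPLE` would be replaced by the contents of KeySearchWiki-JSON.json, provided the file follows the assumed layout.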

TREC Format

- KeySearchWiki-queries-label.txt: A text file containing the queries. Each line contains a space-separated queryID and query: `MK79540 programmer University of Houston human`.
- KeySearchWiki-queries-iri.txt: A text file containing the queries. Each line contains a space-separated queryID and the IRIs of the query elements: `MK79540 Q5482740 Q1472358 Q5` (can be used directly by systems that omit a preceding Entity Linking step).
- KeySearchWiki-queries-naturalized.txt: A text file containing the queries, including 1826 adjusted queries. Each line contains a space-separated queryID and query: `NT5239 diplomat Germany 20th century`. This list of queries was partially automatically adjusted (naturalized) to better reflect natural query formulation, for example by transforming the query `NT5239 diplomat Germany 20th century human` into `NT5239 diplomat Germany 20th century`. The adjustment removes the target from the query if one of its keywords is a descendant of the target via subclass of (P279). In the previous example, diplomat is in the subclass hierarchy of human.
- KeySearchWiki-qrels-trec.txt: A text file containing the relevant entities in the TREC format: `MK79540 0 Q92877 1`.
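The line layouts above are simple enough to parse with string splitting. The following Python sketch shows one possible way to do so; the function names are hypothetical, and the qrels columns are interpreted according to the usual TREC convention (query ID, iteration, document ID, relevance).

```python
def parse_query_line(line):
    """Split a query line into (queryID, remainder) at the first space."""
    query_id, _, text = line.strip().partition(" ")
    return query_id, text


def parse_qrels_line(line):
    """Parse a TREC qrels line: queryID, iteration, entity IRI, relevance."""
    query_id, iteration, entity, relevance = line.split()
    return {
        "queryID": query_id,
        "iteration": iteration,
        "entity": entity,
        "relevance": int(relevance),
    }


# Label-based query: the remainder is the keyword query text.
print(parse_query_line("MK79540 programmer University of Houston human"))
# IRI-based query: the remainder can be split into individual IRIs.
print(parse_query_line("MK79540 Q5482740 Q1472358 Q5")[1].split())
print(parse_qrels_line("MK79540 0 Q92877 1"))
```

Splitting at the first space only matters for the label files, where the query text itself contains spaces.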

| queryID | query | keywords | target | relevantEntities |
|---|---|---|---|---|
| NT1149 | male television actor human | male(Q6581097), television actor(Q10798782) | human(Q5) | e.g., Q100028, Q100293 |
| MK79540 | programmer University of Houston human | programmer(Q5482740), University of Houston(Q1472358) | human(Q5) | e.g., Q92877, Q6847972 |
| MH161 | World Music Awards album | World Music Awards(Q375990) | album(Q482994) | e.g., Q4695167, Q1152760 |

The dataset generation workflow could be used either (1) to reproduce the current dataset version or (2) to generate a new dataset using other underlying Wikidata/Wikipedia versions. We provide two options for dataset generation.

- First, two kinds of dump versions should be selected:
  - Wikidata JSON Dump (`wikidata-<version>-all.json.gz`)
  - Wikipedia SQL Dumps (available dataset versions can be checked by visiting, e.g., enwiki for English Wikipedia)

- Second, set up a MariaDB database (where the Wikipedia SQL Dumps will be imported):
  - Install MariaDB: `sudo apt-get install mariadb-server`.
  - Set the root password:

        $ sudo mysql -u root
        MariaDB [(none)]> SET PASSWORD = PASSWORD('DB_PASSWORD');
        MariaDB [(none)]> update mysql.user set plugin = 'mysql_native_password' where User='root';
        MariaDB [(none)]> FLUSH PRIVILEGES;

  - Create a database:

        $ sudo mysql -u root
        MariaDB [(none)]> create database <DB_NAME> character set binary;
        Query OK, 1 row affected (0.00 sec)
        MariaDB [(none)]> use <DB_NAME>;
        Database changed

  - Optimize the database import by setting the following parameters in `/etc/mysql/my.cnf`, then restart the database server with `service mysql restart`:

        wait_timeout = 604800
        innodb_buffer_pool_size = 8G
        innodb_log_buffer_size = 1G
        innodb_log_file_size = 512M
        innodb_flush_log_at_trx_commit = 2
        innodb_doublewrite = 0
        innodb_write_io_threads = 16

- Update the configuration file `./src/cache-population/config.js`:

      // wikidata dump version
      wdDump: path.join(__dirname,'..', '..', '..', 'wikidata-<version>-all.json.gz'),
      // wikipedia dump version
      dumpDate : <wikipedia-version>,
      // wikipedia database user
      user: 'root',
      // wikipedia database password
      password: <DB_PASSWORD>,
      // wikipedia database name
      databaseName: <DB_NAME>,

- Install Node.js (minimum v14.16.1).
- Download the repository and install the dependencies by running `npm install` in the project root folder.
- Populate the caches from the dumps: `npm run runner`.
- Continue with the steps described under Dataset Generation Workflow.

The second option does not need a local setup or prior cache population. Requests are sent directly to the public Wikidata/Wikipedia endpoints.

To generate a dataset from endpoints, the steps under Dataset Generation Workflow can directly be followed.

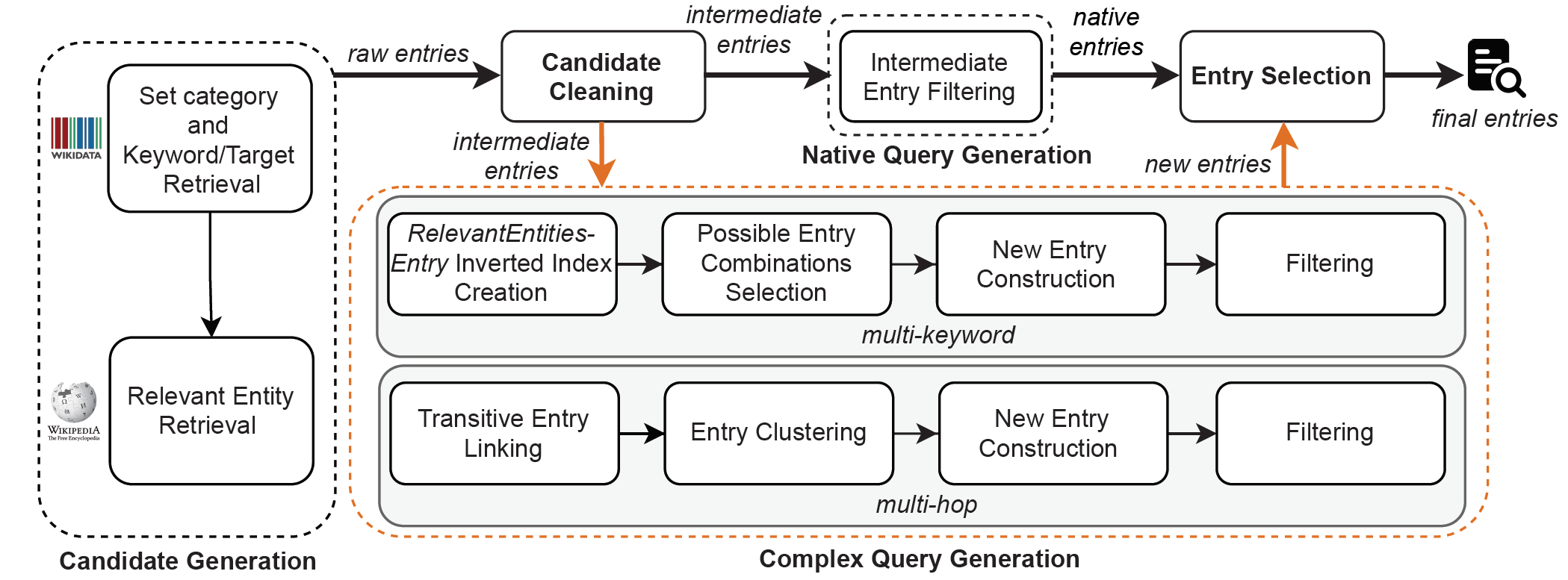

The dataset generation workflow is illustrated in the following figure (see paper for more details).

To reproduce the current KeySearchWiki version, one can make use of the already filled caches. The dataset is accompanied by cache files (KeySearchWiki-cache.zip), a collection of SQLite database files containing all data retrieved from the Wikidata JSON Dump and Wikipedia SQL Dumps of 2021-09-20.

To reproduce the current dataset or generate a new one, the steps below should be followed (start directly with step 3 if Node.js and dependencies were already installed in From Dumps):

1. Install Node.js (minimum v14.16.1).
2. Download the repository and install the dependencies by running `npm install` in the project root folder.
3. Choose one of the following setups:
   - Generate from Endpoints: set `endpointEnabled: true` in `./src/config/config.js`.
   - Generate from Dumps: set `endpointEnabled: false` in `./src/config/config.js`.
   - Reproduce the current dataset: set `endpointEnabled: false` in `./src/config/config.js`. In the root folder, create a folder `./cache/` and unzip `KeySearchWiki-cache.zip` into the `cache` folder.
4. To generate the raw entries, run `npm run generateCandidate` in the root folder. The output files can be found under `./dataset/`. In addition to log files (for debugging) and statistics files, the initial pipeline output is `./dataset/raw-data.json`.
5. To generate the intermediate entries, run `npm run cleanCandidate` in the root folder. Find the output entries under `./dataset/intermediate-dataset.json`.
6. To generate the native entries, run `npm run generateNativeEntry` in the root folder. Find the output entries under `./dataset/native-dataset.json` (together with statistics (dataset characteristics) and metrics (filtering criteria) files).
7. To generate the new multi-hop entries, first create the Keyword Index by running `npm run generateKeywordIndex`. After the process has finished, run `npm run generateNewEntryHop` to generate the entries. Find the output data under `./dataset/new-dataset-multi-hop.json` (together with statistics/metrics files).
8. To generate the new multi-keyword entries, run `npm run generateNewEntryKW`. Find the output under `./dataset/new-dataset-multi-key.json` (together with statistics/metrics files).
9. To generate the final entries, first merge all the entries by running `npm run mergeEntries`. After the process has finished, run `npm run diversifyEntries` to perform the Entry Selection step. Find the output file under `./dataset/final-dataset.json`. Generate statistics/metrics files by running `npm run generateStatFinal`.
10. Generate the files in the final formats described in Format by running `npm run generateFinalFormat`. All KeySearchWiki dataset files are also found under `./dataset`.
11. Naturalized queries are generated by running `npm run naturalizeQueries`. The output file `KeySearchWiki-queries-naturalized.txt` is found under `./dataset`.

Note that some steps take a long time. Wait until each process has finished before starting the next one.
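The naturalization rule described under TREC Format (drop the target when one of the keywords is a descendant of the target via subclass of (P279)) can be sketched in Python as follows. This is not the project's implementation: it works on labels instead of IRIs for readability, the function names are hypothetical, and the toy hierarchy uses a single direct edge to stand in for the real subclass path from diplomat to human.

```python
def is_descendant(cls, ancestor, subclass_of):
    """True if `ancestor` is reachable from `cls` via one or more subclass-of edges."""
    seen = set()
    stack = list(subclass_of.get(cls, ()))
    while stack:
        current = stack.pop()
        if current == ancestor:
            return True
        if current in seen:
            continue
        seen.add(current)
        stack.extend(subclass_of.get(current, ()))
    return False


def naturalize(keywords, target, subclass_of):
    """Build the query text, omitting the target when a keyword already implies it."""
    implied = any(is_descendant(k, target, subclass_of) for k in keywords)
    parts = list(keywords) if implied else list(keywords) + [target]
    return " ".join(parts)


# Toy hierarchy (assumption): a direct edge replaces the real P279 path.
SUBCLASS_OF = {"diplomat": ["human"]}

print(naturalize(["diplomat", "Germany", "20th century"], "human", SUBCLASS_OF))
print(naturalize(["World Music Awards"], "album", SUBCLASS_OF))
```

The first call drops the target because diplomat is below human in the toy hierarchy; the second keeps it because no keyword is a descendant of album.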

The parameters used to perform Filtering (see Workflow) could be set in the following config files depending on query type:

🔴 Note that since 6 March 2022, the Wikidata class "Wikimedia set categories (Q59542487)" has been merged with its former superclass "Wikimedia categories (Q4167836)".

This was done by redirecting "Wikimedia set categories (Q59542487)" to the latter entity.

While the generation of the current dataset version is reproducible, generating new datasets based on "Wikimedia set categories (Q59542487)" is only possible with Wikidata Dump/Endpoint versions released before 2022-03-06, when the distinction between the two types still existed.

Theoretically, to generate a new dataset using the general "Wikimedia categories (Q4167836)" from any Dump/Endpoint version, one should only adjust the entity IRI in the corresponding project global config file (categoryIRI).

However, executing the entire pipeline on the public endpoint will likely result in timeouts due to the larger number of categories compared to the previously used set categories.

A custom server/endpoint can be configured with larger timeout thresholds to account for this issue. Using the dumps does not suffer from this issue. We also plan to publish a new dataset version based on the more general Wikimedia categories.

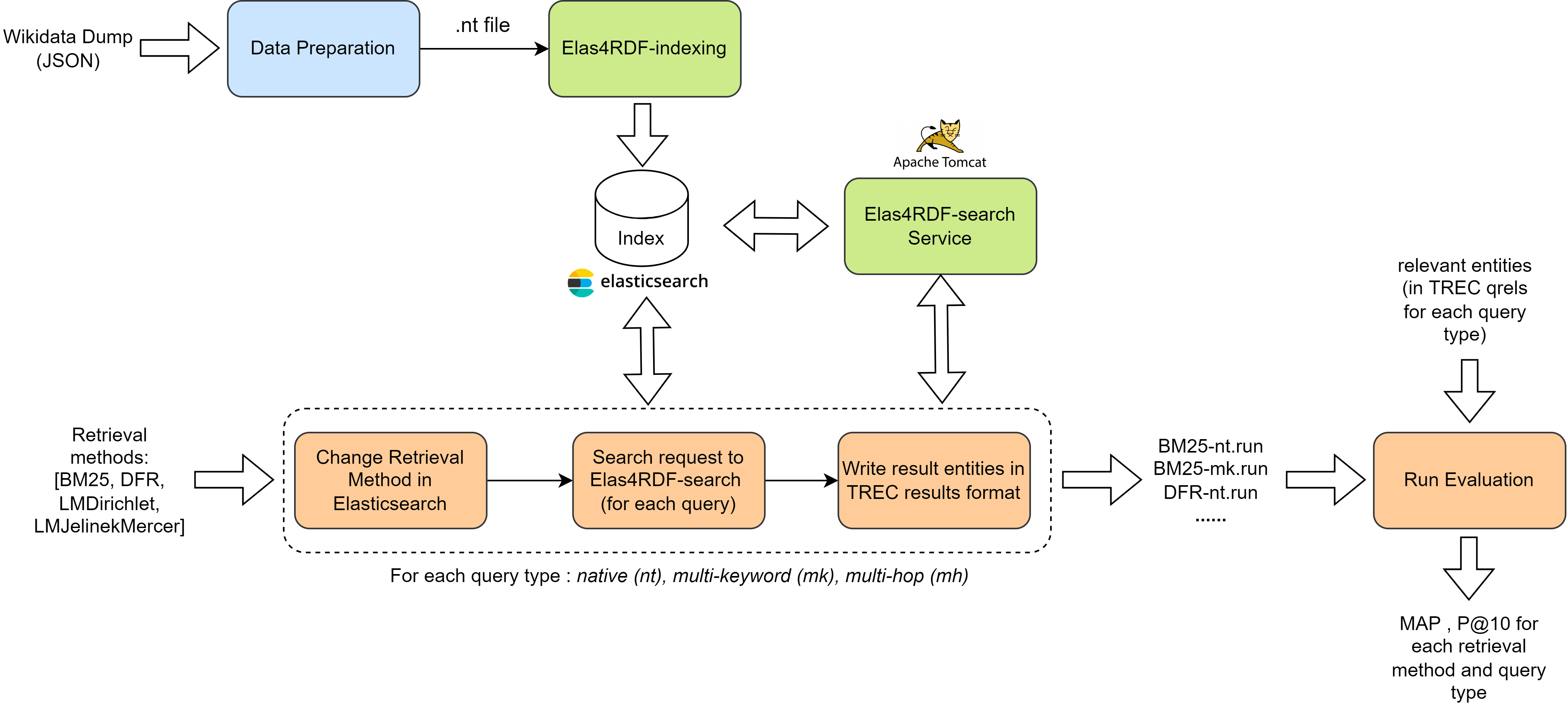

We demonstrate the usability of KeySearchWiki dataset for the task of keyword search over Wikidata by applying it on different traditional retrieval methods using the approach proposed by G. Kadilierakis et al.. This method proposes a configuration of Elasticsearch search engine for RDF.

In particular, two services are provided:

- Elas4RDF-index Service: creates an index of an RDF dataset based on a given configuration.

- Elas4RDF-search Service: performs keyword search over the indexed data and returns a list of results (triples, entities).

The following figure depicts the experiments pipeline for evaluating some retrieval methods over KeySearchWiki using both Elas4RDF-index and Elas4RDF-search services:

This step consists of preparing the data in the N-Triples format accepted by the Elas4RDF-index service. The first step is extracting a subset of triples from Wikidata JSON Dump. To avoid indexing triples involving all Wikidata entities and keep indexing time reasonable, experiments are performed on a subset of KeySearchWiki queries (having one of top-10 targets). Therefore, we index triples involving Wikidata entities that are either instance of the target itself or any of its subclasses. This way we keep 99% (only 112 queries discarded) of the queries from all the types (native: 1,037, multi-keyword: 15,343, multi-hop: 113). For each Wikidata entity of interest we store the following information (needed by indexing step):

    {
      "id": "Q23",
      "description": "1st president of the United States (1732−1799)",
      "label": "George Washington",
      "claims": {
        "P25": [{"value":"Q458119","type":"wikibase-item"}],
        "P509": [{"value":"Q1347065","type":"wikibase-item"},{"value":"Q3827083","type":"wikibase-item"}],
        ...
      }
    }
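The selection criterion described above (keep entities that are an instance of the target or of any of its subclasses) could look roughly like the Python sketch below. It assumes entity records in the shape shown above, with instance-of statements stored under `P31`, and a `subclass_of` mapping from each class to its direct superclasses (P279). All identifiers in the toy data (`C1`, `E1`, and so on) are placeholders, not real Wikidata IDs, and the function names are hypothetical.

```python
from collections import deque


def target_closure(target, subclass_of):
    """Return the target plus every class below it in the subclass hierarchy."""
    # Invert the child -> parents mapping so the hierarchy can be walked downwards.
    children = {}
    for cls, parents in subclass_of.items():
        for parent in parents:
            children.setdefault(parent, set()).add(cls)
    closure, queue = {target}, deque([target])
    while queue:
        for child in children.get(queue.popleft(), ()):
            if child not in closure:
                closure.add(child)
                queue.append(child)
    return closure


def select_entities(entities, target, subclass_of):
    """Keep the IDs of entities with a P31 value inside the target closure."""
    allowed = target_closure(target, subclass_of)
    selected = []
    for entity in entities:
        classes = {c["value"] for c in entity.get("claims", {}).get("P31", [])}
        if classes & allowed:
            selected.append(entity["id"])
    return selected


# Toy data with placeholder identifiers.
SUBCLASS_OF = {"C2": ["C1"], "C3": ["C2"], "C9": ["C8"]}
ENTITIES = [
    {"id": "E1", "claims": {"P31": [{"value": "C3", "type": "wikibase-item"}]}},
    {"id": "E2", "claims": {"P31": [{"value": "C9", "type": "wikibase-item"}]}},
    {"id": "E3", "claims": {"P31": [{"value": "C1", "type": "wikibase-item"}]}},
    {"id": "E4", "claims": {}},
]

print(select_entities(ENTITIES, "C1", SUBCLASS_OF))
```

Computing the closure once per target and then testing set intersection keeps the per-entity check cheap, which matters when scanning a full dump.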

In the second step, we get the description and label for all the objects (wikibase-item) related to the selected entities (e.g., Q1347065).

Finally, N-Triples are generated from entities and their objects description/label:

    <http://www.wikidata.org/entity/Q23> <http://www.w3.org/2000/01/rdf-schema#label> "George Washington"@en .
    <http://www.wikidata.org/entity/Q23> <http://schema.org/description> "1st president of the United States (1732−1799)"@en .
    <http://www.wikidata.org/entity/Q23> <http://www.wikidata.org/prop/direct/P25> <http://www.wikidata.org/entity/Q458119> .
    <http://www.wikidata.org/entity/Q23> <http://www.wikidata.org/prop/direct/P509> <http://www.wikidata.org/entity/Q1347065> .
    <http://www.wikidata.org/entity/Q23> <http://www.wikidata.org/prop/direct/P509> <http://www.wikidata.org/entity/Q3827083> .
    ...
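To make the mapping from the stored entity record to the triples above concrete, here is a minimal Python sketch. It is not the code behind `npm run prepareData`; it assumes English labels only, handles only `wikibase-item` claims, and leaves out the label/description triples of the objects. The helper names are hypothetical; the IRIs are the ones visible in the triples above.

```python
WD_ENTITY = "http://www.wikidata.org/entity/"
WD_DIRECT = "http://www.wikidata.org/prop/direct/"
RDFS_LABEL = "http://www.w3.org/2000/01/rdf-schema#label"
SCHEMA_DESCRIPTION = "http://schema.org/description"


def literal(text, lang="en"):
    """Render a language-tagged N-Triples literal with basic escaping."""
    escaped = (
        text.replace("\\", "\\\\")
        .replace('"', '\\"')
        .replace("\n", "\\n")
        .replace("\r", "\\r")
    )
    return f'"{escaped}"@{lang}'


def entity_to_ntriples(entity):
    """Turn one entity record into a list of N-Triples lines."""
    subject = f"<{WD_ENTITY}{entity['id']}>"
    lines = [
        f"{subject} <{RDFS_LABEL}> {literal(entity['label'])} .",
        f"{subject} <{SCHEMA_DESCRIPTION}> {literal(entity['description'])} .",
    ]
    for prop, values in entity["claims"].items():
        for claim in values:
            if claim["type"] == "wikibase-item":
                obj = f"<{WD_ENTITY}{claim['value']}>"
                lines.append(f"{subject} <{WD_DIRECT}{prop}> {obj} .")
    return lines


ENTITY = {
    "id": "Q23",
    "description": "1st president of the United States (1732−1799)",
    "label": "George Washington",
    "claims": {
        "P25": [{"value": "Q458119", "type": "wikibase-item"}],
        "P509": [
            {"value": "Q1347065", "type": "wikibase-item"},
            {"value": "Q3827083", "type": "wikibase-item"},
        ],
    },
}

for line in entity_to_ntriples(ENTITY):
    print(line)
```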

Data preparation for our experiments is reproduced by running the command npm run prepareData in the project's root folder.

We used the best-performing indexing configuration (extended (s)(p)(o)2) reported by G. Kadilierakis et al., where each triple is represented by an Elasticsearch document consisting of the following fields:

- Keywords of subject/predicate/object: Represent the literal value. If a triple component is not a literal, the IRI’s namespace part is removed and the rest is tokenized into keywords.

- Descriptions of subject/object.

- Labels of subject/object.

Example document indexed in Elasticsearch corresponding to the triple <http://www.wikidata.org/entity/Q23> <http://www.wikidata.org/prop/direct/P25> <http://www.wikidata.org/entity/Q458119>:

    {
      "subjectKeywords": "Q23",
      "predicateKeywords": "P25",
      "objectKeywords": "Q458119",
      "rdfs_comment_sub": ["1st president of the United States (1732−1799) @en"],
      "rdf_label_sub": ["George Washington @en"],
      "rdfs_comment_obj": ["mother of George Washington @en"],
      "rdf_label_obj": ["Mary Ball Washington @en"]
    }
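The shape of such a document can be reproduced with a few lines of Python. The sketch below is only an approximation of what the Elas4RDF-index service does: it assumes all three triple components are IRIs, removes the namespace by keeping whatever follows the last `/` or `#`, and does not tokenize further. The function names and the lookup-table layout are hypothetical; the field names are taken from the example document above.

```python
def local_name(iri):
    """Drop the namespace: keep what follows the last '/' or '#'."""
    return iri.rstrip("/").replace("#", "/").rsplit("/", 1)[-1]


def triple_to_document(subject, predicate, obj, labels, descriptions):
    """Build one document per triple, using the field names of the example."""

    def tagged(table, iri):
        # Values are rendered as "<text> @<lang>", as in the example document.
        return [f"{text} @{lang}" for text, lang in table.get(iri, [])]

    return {
        "subjectKeywords": local_name(subject),
        "predicateKeywords": local_name(predicate),
        "objectKeywords": local_name(obj),
        "rdfs_comment_sub": tagged(descriptions, subject),
        "rdf_label_sub": tagged(labels, subject),
        "rdfs_comment_obj": tagged(descriptions, obj),
        "rdf_label_obj": tagged(labels, obj),
    }


Q23 = "http://www.wikidata.org/entity/Q23"
Q458119 = "http://www.wikidata.org/entity/Q458119"
P25 = "http://www.wikidata.org/prop/direct/P25"

# Lookup tables: IRI -> list of (text, language) pairs.
LABELS = {
    Q23: [("George Washington", "en")],
    Q458119: [("Mary Ball Washington", "en")],
}
DESCRIPTIONS = {
    Q23: [("1st president of the United States (1732−1799)", "en")],
    Q458119: [("mother of George Washington", "en")],
}

document = triple_to_document(Q23, P25, Q458119, LABELS, DESCRIPTIONS)
print(document)
```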

First, Elas4RDF-index service is adapted to Wikidata by manually replacing the namespace in Elas4RDF-index/index/extended.py on line 212 by: http://www.wikidata.org/entity.

Then indexing is started by following the instructions in Elas4RDF-index.

Make sure that:

- The Elasticsearch server is started.
- The generated N-Triples file is placed at the location specified in the `/Elas4RDF-index/res/configuration/.properties` configuration file under the property `index.data`. The configuration file we used can be found at `./data/Elas4RDF-config/wiki-subset.properties`.

Indexing was started using this command: `python3 indexer_service.py -config ./Elas4RDF-index/res/configuration/wiki-subset.properties` in the Elas4RDF-index root folder.

First, Elas4RDF-search service is adapted to Wikidata by manually adding the namespace http://www.wikidata.org/entity in Elas4RDF-search/src/main/java/gr/forth/ics/isl/elas4rdfrest/Controller.java on line 43.

Then, the search service is set up by following the instructions in Elas4RDF-search.

The index initialization for search was performed using the following command: `curl --header "Content-Type: application/json" -X POST localhost:8080/elas4rdf-rest-0.0.1-SNAPSHOT/datasets -d "@/Elas4RDF-index/output.json"`.

The file output.json is automatically generated after the indexing process.

The output file created in our case can be found at `./data/Elas4RDF-config/output.json`.

The evaluation pipeline (orange) takes a list of retrieval methods (provided by Elasticsearch), communicates with the Elasticsearch index and the Elas4RDF-search service to generate search results (runs), and finally calculates evaluation metrics for each run.

For the evaluation, the TREC format for KeySearchWiki is used.

We start by grouping the queries (KeySearchWiki-queries-label.txt) and relevant results (KeySearchWiki-qrels-trec.txt) files by query type to allow for comparison.

This results in three query files and three relevance judgement (qrels) files, one of each per query type (native, multi-keyword, multi-hop).

Used queries and qrels files can be found respectively under: KeySearchWiki-experiments/queries and KeySearchWiki-experiments/qrels in KeySearchWiki-experiments.
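Because the query type is encoded in the queryID prefix (NT, MK, MH), the grouping step can be expressed compactly. The Python sketch below illustrates the idea on a few lines taken from this README; it is not the project's grouping script, and `group_by_type` is a hypothetical name. The same function works for qrels lines, since they also start with the queryID.

```python
from collections import defaultdict

# queryID prefixes as defined in the JSON format description.
QUERY_TYPES = {"NT": "native", "MK": "multi-keyword", "MH": "multi-hop"}


def group_by_type(lines):
    """Group query or qrels lines by the two-letter queryID prefix."""
    groups = defaultdict(list)
    for line in lines:
        line = line.strip()
        if not line:
            continue
        groups[QUERY_TYPES[line[:2]]].append(line)
    return dict(groups)


QUERIES = [
    "NT1149 male television actor human",
    "MK79540 programmer University of Houston human",
    "MH161 World Music Awards album",
]
QRELS = ["MK79540 0 Q92877 1"]

print(group_by_type(QUERIES))
print(group_by_type(QRELS))
```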

The first step is to send a request to the Elasticsearch index to change the retrieval method.

Then, for each query type and for each query, a search request is sent to the Elas4RDF-search service.

A list of ranked relevant entities (refer to Section 4.5 in G. Kadilierakis et al.) is returned and the results are written in the TREC results format: <queryID> Q0 <RetrievedEntityIRI> <rank> <score> <runID>.

The resulting runs are under: KeySearchWiki-experiments/runs in KeySearchWiki-experiments.
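Writing a ranked result list in the TREC results format mentioned above is a matter of string formatting. The following Python sketch shows one way to do it; the function name, the scores, and the run ID are made up for illustration, and ranks are assumed to start at 1.

```python
def format_run(query_id, ranked, run_id):
    """Format results as '<queryID> Q0 <entity> <rank> <score> <runID>' lines.

    `ranked` is a list of (entity IRI, score) pairs, best result first.
    """
    return [
        f"{query_id} Q0 {entity} {rank} {score} {run_id}"
        for rank, (entity, score) in enumerate(ranked, start=1)
    ]


# Hypothetical scores for two entities listed as relevant for MK79540.
for line in format_run("MK79540", [("Q92877", 12.5), ("Q6847972", 9.0)], "BM25"):
    print(line)
```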

After all runs are generated, each run and its corresponding qrels file are given as input to the standard trec_eval tool to calculate the Mean Average Precision (MAP) and the Precision at rank 10 (P@10). To run the evaluation pipeline follow the steps below:

- Download trec_eval v9.0.7.
- Unzip the tool into `./src/experiments/trec-tool/trec_eval-9.0.7` (after creating the folders), and compile it by typing `make` in the command line.
- Run the evaluation using `npm run runEval` (output under `./experiments`). Evaluation results are found under `KeySearchWiki-experiments/results` in KeySearchWiki-experiments.

The following table summarizes the experiment results of the different retrieval methods. We use MAP and P@10 as evaluation metrics (considering the top-1000 results):

| Method | Native MAP | Native P@10 | Multi-Keyword MAP | Multi-Keyword P@10 | Multi-hop MAP | Multi-hop P@10 |
|---|---|---|---|---|---|---|
| BM25 | 0.211 | 0.225 | 0.025 | 0.039 | 0.014 | 0.032 |
| DFR | 0.209 | 0.211 | 0.023 | 0.029 | 0.015 | 0.024 |
| LM Dirichlet | 0.182 | 0.180 | 0.020 | 0.025 | 0.015 | 0.018 |
| LM Jelinek-Mercer | 0.212 | 0.215 | 0.023 | 0.029 | 0.018 | 0.022 |
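For readers unfamiliar with the two metrics, the Python sketch below shows how average precision and P@10 are commonly defined for a single query; MAP is the mean of the average precision over all queries. This is a reference sketch, not trec_eval itself, and it assumes the ranked list contains no duplicate entities. The identifiers in the example are placeholders.

```python
def average_precision(ranked, relevant):
    """Sum of precision values at each relevant hit, divided by the number of relevant items."""
    hits, total = 0, 0.0
    for rank, entity in enumerate(ranked, start=1):
        if entity in relevant:
            hits += 1
            total += hits / rank
    return total / len(relevant) if relevant else 0.0


def precision_at_k(ranked, relevant, k=10):
    """Fraction of the top-k positions occupied by relevant items."""
    return sum(1 for entity in ranked[:k] if entity in relevant) / k


def mean_average_precision(runs):
    """`runs` is a list of (ranked list, relevant set) pairs, one per query."""
    return sum(average_precision(r, rel) for r, rel in runs) / len(runs) if runs else 0.0


# Placeholder identifiers: A and C are retrieved and relevant, X is never retrieved.
RANKED = ["A", "B", "C", "D"]
RELEVANT = {"A", "C", "X"}

print(average_precision(RANKED, RELEVANT))
print(precision_at_k(RANKED, RELEVANT))
```

Note that P@10 divides by 10 even when fewer than ten results are returned, so short result lists are penalized.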

We evaluate the accuracy of relevant entities in KeySearchWiki by comparing with existing SPARQL queries. For this purpose we calculate the Precision and Recall of KeySearchWiki entities with respect to SPARQL query results. To run the evaluation scripts the steps below should be followed:

- Run `npm run compareSPARQL` in the root folder. The output files can be found under `./eval/`.
- Run `npm run generatePlots` in the root folder to generate metrics plots. The output can be found under `./charts/`.

Note that the compareSPARQL script uses `./dataset/native-dataset.json` as input.

Therefore, steps 1-6 of the Dataset Generation Workflow should be executed first.
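The comparison with SPARQL results boils down to set-based Precision and Recall, with the SPARQL result set treated as the reference. A minimal Python sketch under that reading is given below; it is not the `compareSPARQL` script, the function name is hypothetical, and the identifiers are placeholders.

```python
def precision_recall(dataset_entities, sparql_entities):
    """Precision and recall of dataset entities with respect to SPARQL results."""
    dataset, reference = set(dataset_entities), set(sparql_entities)
    common = dataset & reference
    precision = len(common) / len(dataset) if dataset else 0.0
    recall = len(common) / len(reference) if reference else 0.0
    return precision, recall


# Placeholder identifiers: two of four dataset entities appear in the reference set.
precision, recall = precision_recall(["E1", "E2", "E3", "E4"], ["E2", "E3", "E5"])
print(precision, recall)
```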

The current evaluation results can be found under `./data/eval-results`.

Detailed analysis of the results can be found here.

This project is licensed under the MIT License.