
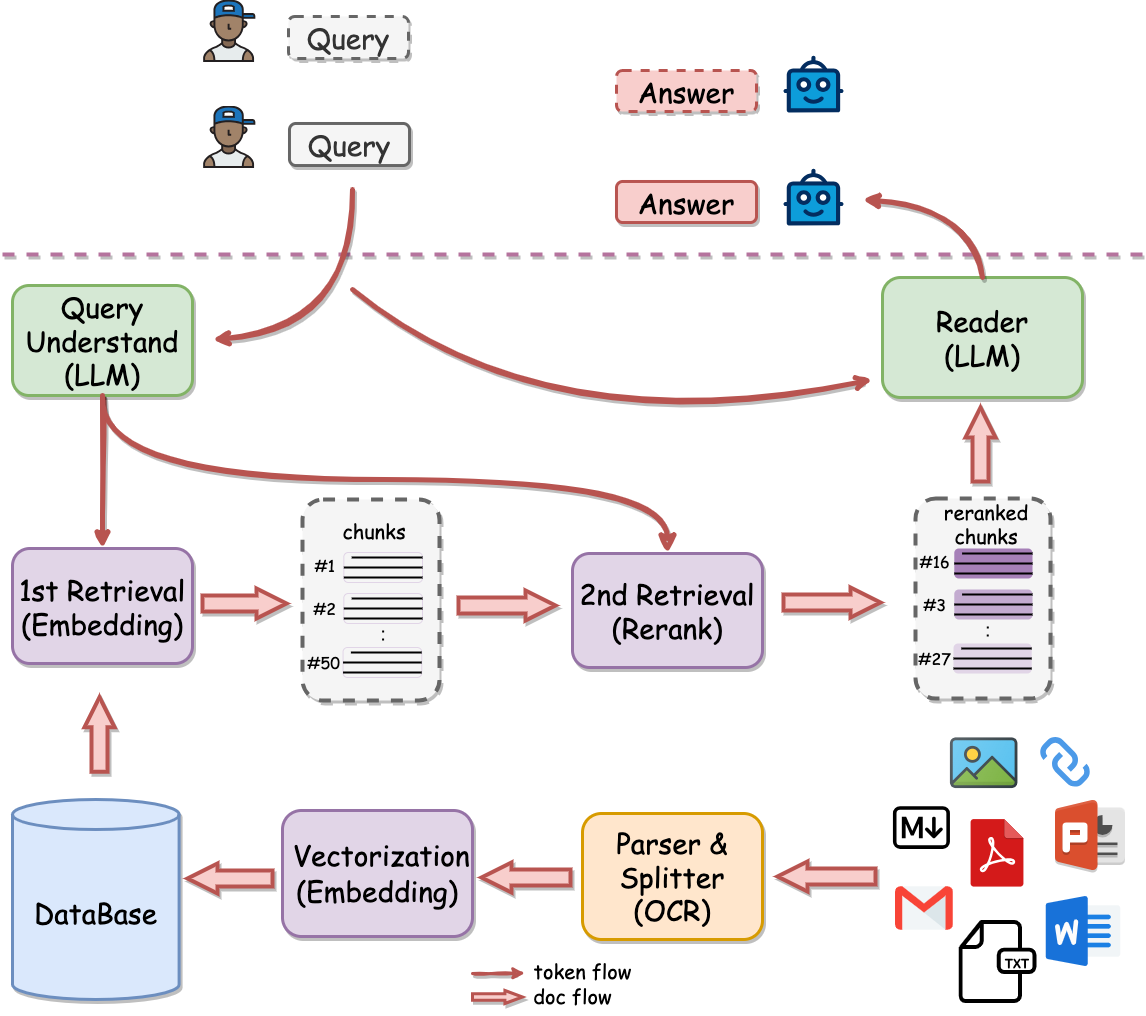

Question and Answer based on Anything (QAnything) is a local knowledge base question-answering system designed to support a wide range of file formats and databases, allowing for offline installation and use.

With QAnything, you can simply drop in any locally stored file in a supported format and receive accurate, fast, and reliable answers.

Currently supported formats include PDF, Word (doc/docx), PPT, Markdown, Eml, TXT, images (jpg, png, etc.), and web links, with more formats coming soon.

- Data Security, supports installation and usage with network cable unplugged throughout the process.

- Cross-language QA support, freely switch between Chinese and English QA, regardless of the language of the document.

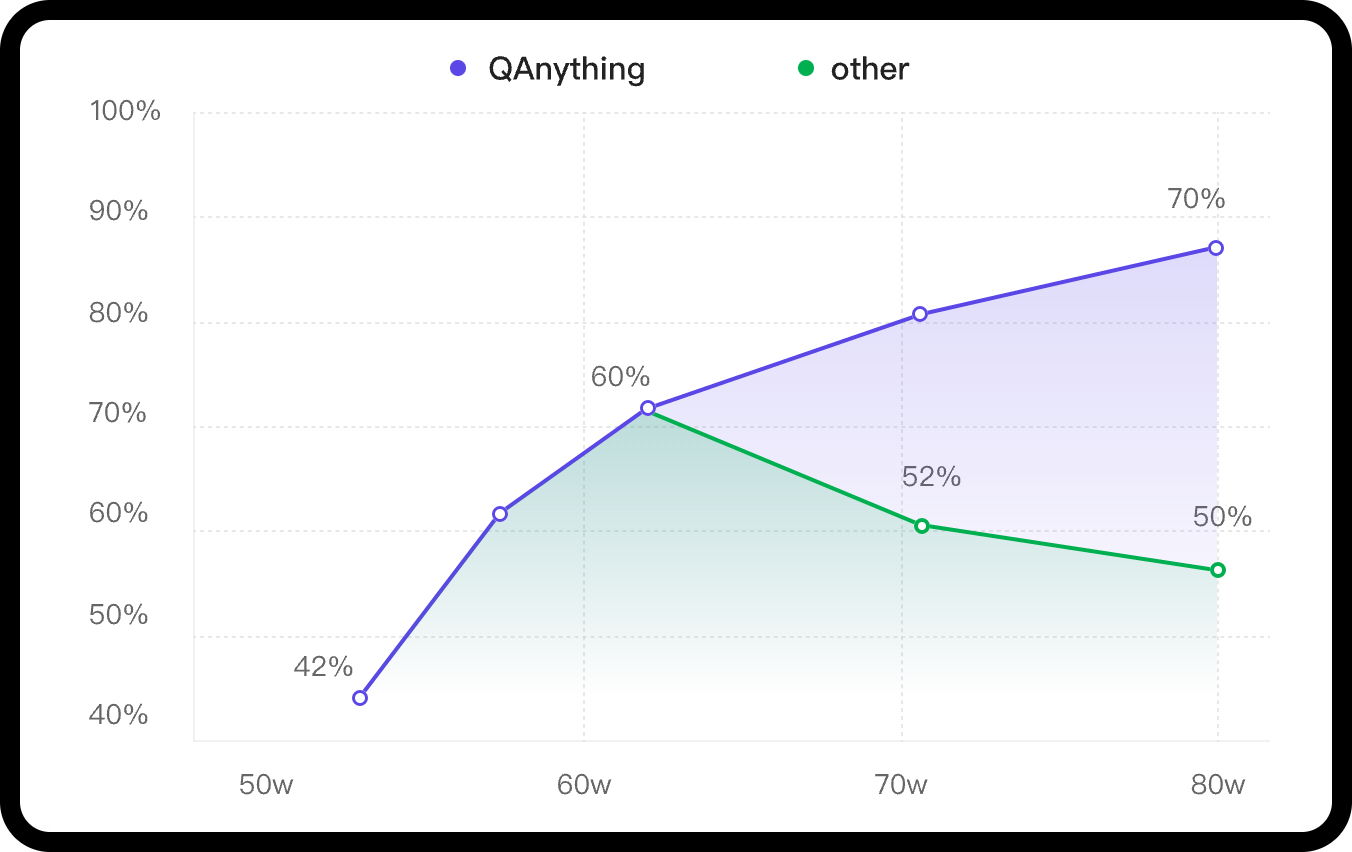

- Supports massive data QA, two-stage retrieval ranking, solving the degradation problem of large-scale data retrieval; the more data, the better the performance.

- High-performance production-grade system, directly deployable for enterprise applications.

- User-friendly, no need for cumbersome configurations, one-click installation and deployment, ready to use.

- Multi knowledge base QA Support selecting multiple knowledge bases for Q&A

In scenarios with a large volume of knowledge base data, the advantages of a two-stage approach are very clear. If only first-stage embedding retrieval is used, retrieval quality degrades as the data volume increases, as indicated by the green line in the following graph. With second-stage reranking, however, accuracy increases steadily: the more data, the better the performance.
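The toy sketch below illustrates the two-stage idea in plain Python. It is not QAnything's code. The bag-of-words `embed_score` is a hypothetical stand-in for a bi-encoder such as BCEmbedding, and `rerank_score` is a hypothetical stand-in for a cross-encoder reranker. The demo corpus is made up. Stage one scores every document cheaply and keeps a short candidate list. Stage two re-scores only those candidates with a slower, more precise scorer.

```python
import math
from collections import Counter

# Hypothetical demo corpus: one keyword-stuffed document and one genuine answer.
CORPUS = [
    "gpu memory gpu memory gpu memory",
    "the recommended gpu memory is 16gb",
    "docker compose starts the service",
    "cuda version must be at least 12.0",
]


def embed(text: str) -> Counter:
    """Toy 'embedding': bag-of-words counts (stand-in for a real bi-encoder)."""
    return Counter(text.lower().split())


def embed_score(query: str, doc: str) -> float:
    """Stage-1 score: cosine similarity between bag-of-words vectors."""
    q, d = embed(query), embed(doc)
    dot = sum(count * d[term] for term, count in q.items())
    norm = math.sqrt(sum(v * v for v in q.values())) * math.sqrt(sum(v * v for v in d.values()))
    return dot / norm if norm else 0.0


def rerank_score(query: str, doc: str) -> float:
    """Stage-2 score (hypothetical stand-in for a cross-encoder): share of query
    terms present in the document, plus a bonus for an exact phrase match."""
    q_terms = set(query.lower().split())
    if not q_terms:
        return 0.0
    coverage = len(q_terms & set(doc.lower().split())) / len(q_terms)
    phrase_bonus = 1.0 if query.lower() in doc.lower() else 0.0
    return coverage + phrase_bonus


def two_stage_search(query: str, corpus: list[str], recall_k: int = 3, top_n: int = 1) -> list[str]:
    # Stage 1: cheap scoring over the whole corpus; keep the top `recall_k` candidates.
    candidates = sorted(corpus, key=lambda doc: embed_score(query, doc), reverse=True)[:recall_k]
    # Stage 2: expensive re-scoring, applied only to the short candidate list.
    reranked = sorted(candidates, key=lambda doc: rerank_score(query, doc), reverse=True)
    return reranked[:top_n]


if __name__ == "__main__":
    query = "recommended gpu memory"
    print(max(CORPUS, key=lambda doc: embed_score(query, doc)))  # → gpu memory gpu memory gpu memory
    print(two_stage_search(query, CORPUS))  # → ['the recommended gpu memory is 16gb']
```

On its own, the first stage prefers the keyword-stuffed document. The reranker then promotes the passage that actually answers the query. The same effect is what keeps accuracy from degrading as the knowledge base grows. Because the expensive scorer only sees `recall_k` documents, its cost stays bounded regardless of corpus size.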

QAnything uses the retrieval component BCEmbedding, which is distinguished by its bilingual and cross-lingual proficiency. BCEmbedding excels at bridging the gap between Chinese and English, achieving:

- high performance on Semantic Representation Evaluations in MTEB;

- a new benchmark in the realm of RAG Evaluations in LlamaIndex.

| Model | Retrieval | STS | PairClassification | Classification | Reranking | Clustering | Avg |
|---|---|---|---|---|---|---|---|
| bge-base-en-v1.5 | 37.14 | 55.06 | 75.45 | 59.73 | 43.05 | 37.74 | 47.20 |
| bge-base-zh-v1.5 | 47.60 | 63.72 | 77.40 | 63.38 | 54.85 | 32.56 | 53.60 |
| bge-large-en-v1.5 | 37.15 | 54.09 | 75.00 | 59.24 | 42.68 | 37.32 | 46.82 |
| bge-large-zh-v1.5 | 47.54 | 64.73 | 79.14 | 64.19 | 55.88 | 33.26 | 54.21 |
| jina-embeddings-v2-base-en | 31.58 | 54.28 | 74.84 | 58.42 | 41.16 | 34.67 | 44.29 |
| m3e-base | 46.29 | 63.93 | 71.84 | 64.08 | 52.38 | 37.84 | 53.54 |
| m3e-large | 34.85 | 59.74 | 67.69 | 60.07 | 48.99 | 31.62 | 46.78 |
| bce-embedding-base_v1 | 57.60 | 65.73 | 74.96 | 69.00 | 57.29 | 38.95 | 59.43 |

- For more evaluation details, please check the Embedding Models Evaluation Summary.

| Model | Reranking | Avg |
|---|---|---|
| bge-reranker-base | 57.78 | 57.78 |
| bge-reranker-large | 59.69 | 59.69 |
| bce-reranker-base_v1 | 60.06 | 60.06 |

- For more evaluation details, please check the Reranker Models Evaluation Summary.

NOTE:

- In the `WithoutReranker` setting, our `bce-embedding-base_v1` outperforms all the other embedding models.

- With the embedding model fixed, our `bce-reranker-base_v1` achieves the best performance.

- The combination of `bce-embedding-base_v1` and `bce-reranker-base_v1` is SOTA.

- If you want to use the embedding and rerank models separately, please refer to BCEmbedding.

The open-source version of QAnything is based on QwenLM and has been fine-tuned on a large number of professional question-answering datasets, which greatly enhances its question-answering ability. If you need to use it for commercial purposes, please follow the license of QwenLM. For more details, please refer to: QwenLM

| Required item | Minimum requirement | Note |
|---|---|---|
| NVIDIA GPU memory | >= 16GB | NVIDIA 3090 recommended |
| NVIDIA driver version | >= 525.105.17 | |
| CUDA version | >= 12.0 | |
| Docker Compose version | >= 2.12.1 | docker compose install |
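Before installing, you can check the driver and GPU-memory rows of the table with a small script. This is a hedged sketch and is not part of QAnything. It assumes `nvidia-smi` is on your `PATH`, and it uses the query `--query-gpu=driver_version,memory.total --format=csv,noheader,nounits`, which reports memory in MiB. The script reads ">= 16GB" as 16 × 1024 MiB. Cards sold as 16 GB may report slightly less usable memory, so treat a near-miss as a warning rather than a hard failure. The CUDA and Docker Compose versions are not checked.

```python
import subprocess

# Thresholds taken from the requirements table above.
MIN_DRIVER = (525, 105, 17)
MIN_GPU_MEMORY_MIB = 16 * 1024  # ">= 16GB", interpreted as GiB and converted to MiB

QUERY = ["nvidia-smi", "--query-gpu=driver_version,memory.total", "--format=csv,noheader,nounits"]


def parse_version(text: str) -> tuple[int, ...]:
    """Turn a dotted driver version such as "525.105.17" into a comparable tuple."""
    return tuple(int(part) for part in text.strip().split("."))


def check_gpu_line(line: str) -> list[str]:
    """Check one CSV line such as "535.104.05, 24576"; return a list of problems."""
    driver_text, memory_text = (field.strip() for field in line.split(","))
    problems = []
    if parse_version(driver_text) < MIN_DRIVER:
        problems.append(f"driver {driver_text} is older than 525.105.17")
    if int(memory_text) < MIN_GPU_MEMORY_MIB:
        problems.append(f"only {memory_text} MiB of GPU memory (16 GB required)")
    return problems


def check_local_gpus() -> None:
    """Run nvidia-smi and report each GPU (needs the NVIDIA driver installed)."""
    output = subprocess.run(QUERY, capture_output=True, text=True, check=True).stdout
    for index, line in enumerate(output.strip().splitlines()):
        problems = check_gpu_line(line)
        print(f"GPU {index}: " + ("; ".join(problems) if problems else "OK"))
```

Call `check_local_gpus()` on the target machine. Comparing tuples instead of strings avoids the usual trap where `"9.1" > "10.0"` as text.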

Clone the repository:

```shell
git clone https://github.com/netease-youdao/QAnything.git
```

This project provides model downloads on multiple platforms; choose any one of the following methods.

👉【WiseModel】 👉【ModelScope】 👉【HuggingFace】

Download method 1: WiseModel (recommended 👍)

```shell
cd QAnything
# Make sure you have git-lfs installed (https://git-lfs.com)
git lfs install
git clone https://www.wisemodel.cn/Netease_Youdao/qanything.git
unzip qanything/models.zip # in the root directory of the current project
```

Download method 2: ModelScope

```shell
cd QAnything
# Make sure you have git-lfs installed (https://git-lfs.com)
git lfs install
git clone https://www.modelscope.cn/netease-youdao/QAnything.git
unzip QAnything/models.zip # in the root directory of the current project
```

Download method 3: HuggingFace

```shell
cd QAnything
# Make sure you have git-lfs installed (https://git-lfs.com)
git lfs install
git clone https://huggingface.co/netease-youdao/QAnything
unzip QAnything/models.zip # in the root directory of the current project
```

On Windows:

```shell
vim docker-compose-windows.yaml # change CUDA_VISIBLE_DEVICES to your GPU device id
vim front_end/.env.production # set the exact host
```

On Linux:

```shell
vim docker-compose-linux.yaml # change CUDA_VISIBLE_DEVICES to your GPU device id
vim front_end/.env.production # set the exact host
```
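If you prefer to script the `CUDA_VISIBLE_DEVICES` change instead of editing the Compose file in `vim`, a hypothetical helper like the one below could do it. The layout of the Compose files is not shown here, so the pattern is an assumption. It accepts both the list style (`CUDA_VISIBLE_DEVICES=0`) and the mapping style (`CUDA_VISIBLE_DEVICES: "0"`). Review the result before starting the containers. The host in `front_end/.env.production` still needs to be set by hand, because its variable name is not given in this section.

```python
import re
from pathlib import Path

# Assumed formats: "- CUDA_VISIBLE_DEVICES=0" (list style) or 'CUDA_VISIBLE_DEVICES: "0"' (mapping style).
# Group 1 keeps the key and separator, group 2 keeps an optional opening quote.
_CUDA_RE = re.compile(r"(CUDA_VISIBLE_DEVICES\s*[=:]\s*)([\"']?)[^\"'\s#]*\2")


def set_cuda_devices(text: str, device_ids: str) -> str:
    """Return `text` with every CUDA_VISIBLE_DEVICES value replaced by `device_ids`."""
    new_text, count = _CUDA_RE.subn(
        lambda m: f"{m.group(1)}{m.group(2)}{device_ids}{m.group(2)}", text
    )
    if count == 0:
        raise ValueError("no CUDA_VISIBLE_DEVICES entry found")
    return new_text


def update_compose_file(path: str, device_ids: str) -> None:
    """Hypothetical helper: rewrite the Compose file in place."""
    file = Path(path)
    file.write_text(set_cuda_devices(file.read_text(encoding="utf-8"), device_ids), encoding="utf-8")
```

For example, `update_compose_file("docker-compose-linux.yaml", "0")` would pin the service to GPU 0. Raising an error when nothing matches is deliberate: it surfaces a layout mismatch instead of silently leaving the file unchanged.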

On Windows:

Beginner's recommendation!

```shell
# Foreground startup: logs print to the screen in real time; press Ctrl+C to stop.
docker-compose -f docker-compose-windows.yaml up qanything_local
```

Recommended for experienced players!

```shell
# Background (detached) startup: Ctrl+C will not stop the service.
docker-compose -f docker-compose-windows.yaml up -d

# Run the following command to view the logs.
docker-compose -f docker-compose-windows.yaml logs qanything_local

# Stop the service.
docker-compose -f docker-compose-windows.yaml down
```

On Linux:

Beginner's recommendation!

```shell
# Foreground startup: logs print to the screen in real time; press Ctrl+C to stop.
docker-compose -f docker-compose-linux.yaml up qanything_local
```

Recommended for experienced players!

```shell
# Background (detached) startup: Ctrl+C will not stop the service.
docker-compose -f docker-compose-linux.yaml up -d

# Run the following command to view the logs.
docker-compose -f docker-compose-linux.yaml logs qanything_local

# Stop the service.
docker-compose -f docker-compose-linux.yaml down
```

After a successful installation, you can try the application by entering the following addresses in your web browser.

- Frontend address: http://{your_host}:5052/qanything/

- API address: http://{your_host}:5052/api/
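To confirm that the service came up, a small hedged sketch can build both addresses for a host and probe them using only the standard library. The port `5052` and the paths come from the list above. Treating any HTTP response, even an error status, as "reachable" is an assumption, because a bare `/api/` path may not serve a page. The sketch does not exercise the API endpoints themselves; those are described in the API documentation.

```python
import urllib.error
import urllib.request


def service_urls(host: str, port: int = 5052) -> dict[str, str]:
    """Build the frontend and API addresses from the list above for `host`."""
    base = f"http://{host}:{port}"
    return {"frontend": f"{base}/qanything/", "api": f"{base}/api/"}


def is_reachable(url: str, timeout: float = 5.0) -> bool:
    """True if something answers HTTP at `url`; any status code counts."""
    try:
        with urllib.request.urlopen(url, timeout=timeout):
            return True
    except urllib.error.HTTPError:
        # HTTPError subclasses URLError, so it is caught first: the server did answer.
        return True
    except (urllib.error.URLError, OSError):
        # Connection refused, DNS failure, timeout, and similar network errors.
        return False
```

For example, `for name, url in service_urls("localhost").items(): print(name, url, is_reachable(url))` prints one line per address.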

For detailed API documentation, please refer to the QAnything API documentation.

Demo videos: multi-paper QA, information extraction, QA over various file types, and web QA.

If you need to access the API, please refer to the QAnything API documentation.

You are welcome to scan the QR code below to join the WeChat group.

Reach out to the maintainer at one of the following places:

- GitHub issues

- Contact options listed on this GitHub profile

QAnything is licensed under the Apache 2.0 License.

QAnything builds on the following dependencies:

- Thanks to our BCEmbedding for the excellent embedding and rerank models.

- Thanks to Qwen for strong base language models.

- Thanks to Triton Inference Server for providing great open source inference serving.

- Thanks to FasterTransformer for highly optimized LLM inference backend.

- Thanks to Langchain for the wonderful LLM application framework.

- Thanks to Langchain-Chatchat for the inspiration provided on local knowledge base Q&A.

- Thanks to Milvus for the excellent semantic search library.

- Thanks to PaddleOCR for its easy-to-use OCR library.

- Thanks to Sanic for the powerful web service framework.