This repository contains Python implementations of fundamental optimization algorithms, all of which are based on gradient descent. You can try these algorithms on my Heroku app.

- Gradient Descent Algorithm

- Steepest Descent Algorithm

- Gradient Descent with Momentum Algorithm

- RMSprop Algorithm

- Adam Optimization Algorithm
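
To give a feel for how the methods listed above differ, here is a minimal NumPy sketch of their update rules on a small quadratic. It is not the code from this repository: the test problem (`A`, `b`), the function names, and the hyperparameter defaults are all assumptions chosen for illustration, and "steepest descent" is taken here to mean gradient descent with an exact line search, which may differ from the version implemented in the repo.

```python
import numpy as np

# Hypothetical test problem: f(x) = 0.5 * x^T A x - b^T x, minimized where A x = b.
A = np.array([[3.0, 1.0], [1.0, 2.0]])  # symmetric positive definite
b = np.array([1.0, 1.0])
X_STAR = np.linalg.solve(A, b)  # exact minimizer, used only for comparison


def grad(x):
    """Gradient of the quadratic test function."""
    return A @ x - b


def gradient_descent(x0, lr=0.1, steps=500):
    """Plain gradient descent with a fixed learning rate."""
    x = np.array(x0, dtype=float)
    for _ in range(steps):
        x -= lr * grad(x)
    return x


def steepest_descent(x0, steps=100):
    """Gradient descent with an exact line search (closed form for a quadratic)."""
    x = np.array(x0, dtype=float)
    for _ in range(steps):
        g = grad(x)
        if g @ g < 1e-24:  # gradient is numerically zero, stop early
            break
        alpha = (g @ g) / (g @ A @ g)  # step size minimizing f along -g
        x -= alpha * g
    return x


def momentum(x0, lr=0.1, beta=0.9, steps=500):
    """Gradient descent with an exponentially averaged gradient (momentum)."""
    x = np.array(x0, dtype=float)
    v = np.zeros_like(x)
    for _ in range(steps):
        v = beta * v + (1 - beta) * grad(x)
        x -= lr * v
    return x


def rmsprop(x0, lr=0.01, beta=0.9, eps=1e-8, steps=2000):
    """RMSprop: scale each coordinate by a running RMS of its gradient."""
    x = np.array(x0, dtype=float)
    s = np.zeros_like(x)
    for _ in range(steps):
        g = grad(x)
        s = beta * s + (1 - beta) * g**2
        x -= lr * g / (np.sqrt(s) + eps)
    return x


def adam(x0, lr=0.01, beta1=0.9, beta2=0.999, eps=1e-8, steps=2000):
    """Adam: momentum plus RMSprop-style scaling, with bias correction."""
    x = np.array(x0, dtype=float)
    m = np.zeros_like(x)
    v = np.zeros_like(x)
    for t in range(1, steps + 1):
        g = grad(x)
        m = beta1 * m + (1 - beta1) * g
        v = beta2 * v + (1 - beta2) * g**2
        m_hat = m / (1 - beta1**t)  # correct the bias from zero initialization
        v_hat = v / (1 - beta2**t)
        x -= lr * m_hat / (np.sqrt(v_hat) + eps)
    return x


if __name__ == "__main__":
    x0 = np.zeros(2)
    for method in (gradient_descent, steepest_descent, momentum, rmsprop, adam):
        x = method(x0)
        print(f"{method.__name__:>17}: distance to minimizer = {np.linalg.norm(x - X_STAR):.2e}")
```

With a constant learning rate, RMSprop typically ends up hovering near the minimizer rather than converging to it exactly, so expect its final distance to be on the order of the learning rate.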

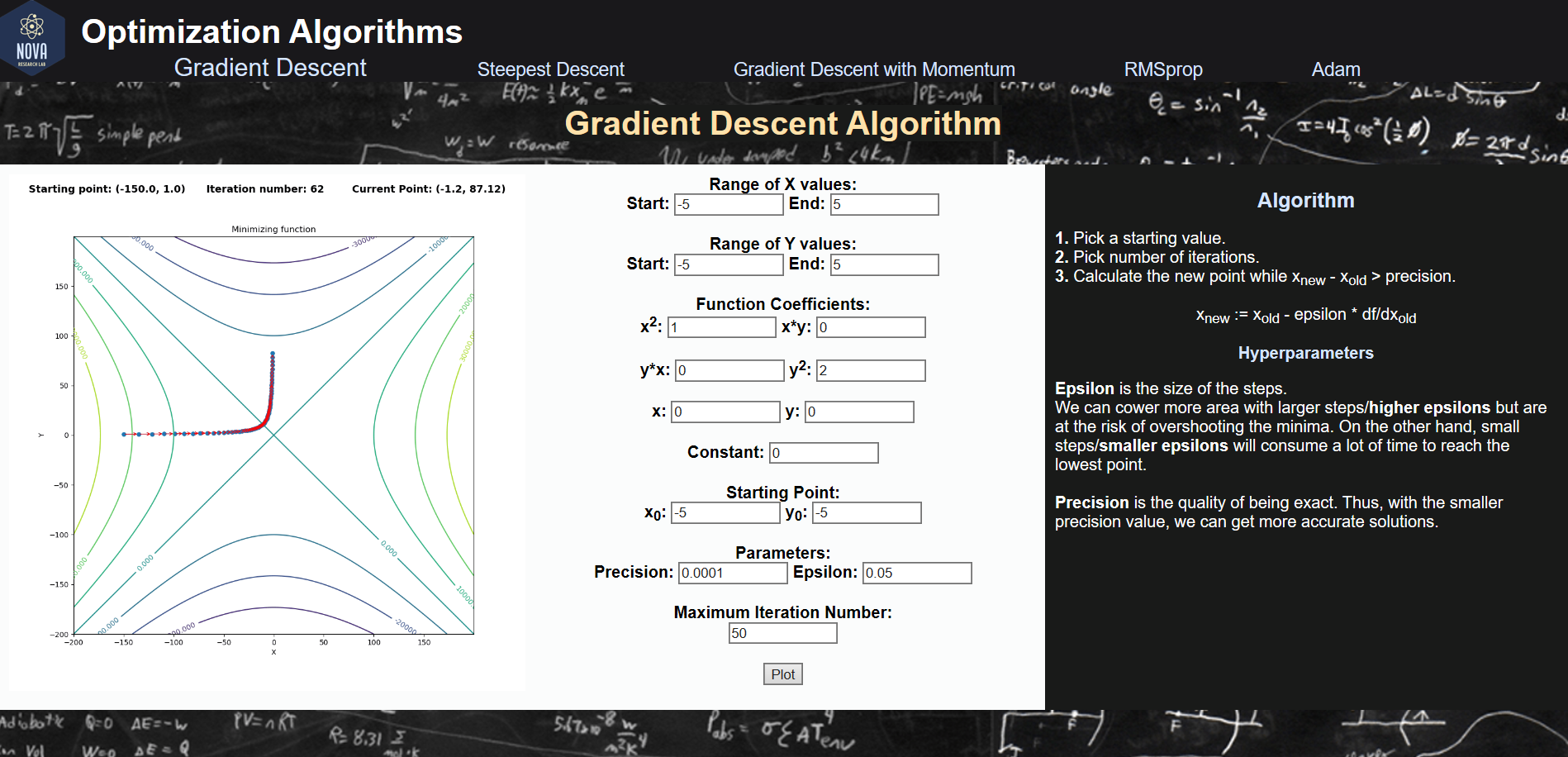

I built a simple website with Python and Flask to make these algorithms easier to try. These are screenshots from the site.

I have listed some useful links below for learning optimization techniques.