Julia implementation of the GP-NLS algorithm for symbolic regression described in the paper:

The documentation of this package is available here.

Symbolic regression is the task of finding a good mathematical expression to describe the relationship between a set of independent variables and a dependent variable, normally represented as tabular data.

In other words, suppose that you have a set of observations, each with several variables and a response variable, and you suppose that the response is related to the variables through some function, but that function is unknown: we can only see how the response changes when the input changes, but we don't know how the response is described by the variables of the problem. Symbolic regression tries to find a function that approximates the output of the unknown function just by learning mathematical structures from the data itself.

The idea behind the use of evolutionary algorithms is to computationally manipulate a population of mathematical expressions, represented as expression trees. A fitness function (for example, the Mean Squared Error) measures how well each expression describes the data, and every individual in the population is assigned its fitness. Through variation operations (which provide either exploration, searching the full search space, or exploitation, performing a local search) and selective pressure (which promotes the maintenance of good solutions in the population), the simulated evolutionary process tends to converge to good solutions. However, there is no guarantee that the optimal solution will be found, although the algorithm generally returns a good one.
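As a minimal sketch of how a fitness function such as the Mean Squared Error ranks candidate expressions, the snippet below scores three hand-written candidates on toy data. The candidates, the data, and the `mse` helper are illustrative assumptions and not part of the GP_NLS API; the package itself represents expressions as trees.

```julia
using Statistics

# Hypothetical candidate expressions, written as plain Julia functions of one
# row of the data matrix (not the package's tree representation).
candidates = [
    x -> x[1] + x[2],
    x -> x[1] * x[2],
    x -> 2.0 * x[1] + x[2]^2,
]

# Toy data: 50 observations, 2 variables. The "unknown" function that
# generated the response is 2*x1 + x2^2.
X = [range(-1, 1; length=50) range(0, 2; length=50)]
y = [2.0 * r[1] + r[2]^2 for r in eachrow(X)]

# Mean squared error of an expression over the whole data set (lower is better).
mse(f, X, y) = mean((f(r) - t)^2 for (r, t) in zip(eachrow(X), y))

fitness = [mse(f, X, y) for f in candidates]
best = argmin(fitness)   # index of the candidate that best describes the data
```

Here the third candidate reproduces the generating function exactly, so it receives the lowest error and would be favored by selection.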

The genetic programming algorithm starts with a random population of solutions, which are represented by trees. Then, guided by a fitness function, it repeatedly selects parents, crosses them over, applies a mutation to the child solutions, and finally chooses the new generation from among parents and children. This process is repeated until a stopping criterion is met.
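The loop described above can be sketched in a few dozen lines of plain Julia. This is an illustration under stated assumptions, not the GP_NLS implementation: the `Node` types, the operator set, binary tournament selection, the subtree-replacement crossover and mutation, the size limit of 60 nodes, and the "keep the best of parents plus children" replacement rule are all choices made here for brevity.

```julia
using Random, Statistics

# Hypothetical expression-tree types (the package's own types may differ).
abstract type Node end
struct Var   <: Node; i::Int;     end
struct Const <: Node; v::Float64; end
struct Op    <: Node; f::Function; l::Node; r::Node; end

evaluate(n::Var, x)   = x[n.i]
evaluate(n::Const, x) = n.v
evaluate(n::Op, x)    = n.f(evaluate(n.l, x), evaluate(n.r, x))

const OPS = [+, -, *]

# Grow a random tree of at most `depth` levels of operators.
function random_tree(rng, nvars, depth)
    if depth == 0 || rand(rng) < 0.3
        return rand(rng) < 0.5 ? Var(rand(rng, 1:nvars)) : Const(randn(rng))
    end
    return Op(rand(rng, OPS), random_tree(rng, nvars, depth - 1),
              random_tree(rng, nvars, depth - 1))
end

# All subtrees of a tree, the tree itself included.
subtrees(n::Node) = n isa Op ? Node[n, subtrees(n.l)..., subtrees(n.r)...] : Node[n]

# Copy of `tree` with one randomly chosen node replaced by `new`.
function replace_random(rng, tree::Node, new::Node)
    (!(tree isa Op) || rand(rng) < 0.3) && return new
    return rand(rng, Bool) ? Op(tree.f, replace_random(rng, tree.l, new), tree.r) :
                             Op(tree.f, tree.l, replace_random(rng, tree.r, new))
end

# Fitness: mean squared error, with non-finite results treated as worst.
function mse(t::Node, X, y)
    err = mean((evaluate(t, r) - v)^2 for (r, v) in zip(eachrow(X), y))
    return isfinite(err) ? err : Inf
end

# Binary tournament: the fitter of two random individuals becomes a parent.
function tournament(rng, pop, fit)
    i, j = rand(rng, 1:length(pop)), rand(rng, 1:length(pop))
    return fit[i] <= fit[j] ? pop[i] : pop[j]
end

function gp(rng, X, y; popsize=50, generations=20)
    nvars = size(X, 2)
    pop = Node[random_tree(rng, nvars, 3) for _ in 1:popsize]
    fit = [mse(t, X, y) for t in pop]
    history = [minimum(fit)]                 # best fitness per generation
    for _ in 1:generations                   # stopping criterion: fixed budget
        children = Node[]
        for _ in 1:popsize
            a, b = tournament(rng, pop, fit), tournament(rng, pop, fit)
            child = replace_random(rng, a, rand(rng, subtrees(b)))      # crossover
            if rand(rng) < 0.25                                          # mutation
                child = replace_random(rng, child, random_tree(rng, nvars, 2))
            end
            push!(children, length(subtrees(child)) <= 60 ? child : a)  # size limit
        end
        # New generation: the best `popsize` among parents and children.
        merged = vcat(pop, children)
        merged_fit = vcat(fit, [mse(t, X, y) for t in children])
        keep = sortperm(merged_fit)[1:popsize]
        pop, fit = merged[keep], merged_fit[keep]
        push!(history, fit[1])
    end
    return pop[1], history
end

rng = MersenneTwister(1)
X = 4 .* rand(rng, 40, 2) .- 2
y = [r[1] * r[2] + r[1] for r in eachrow(X)]
best, history = gp(rng, X, y)
```

Because the replacement step always keeps the best individual seen so far, `history` never increases from one generation to the next; whether it reaches zero depends on the random seed and the budget.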

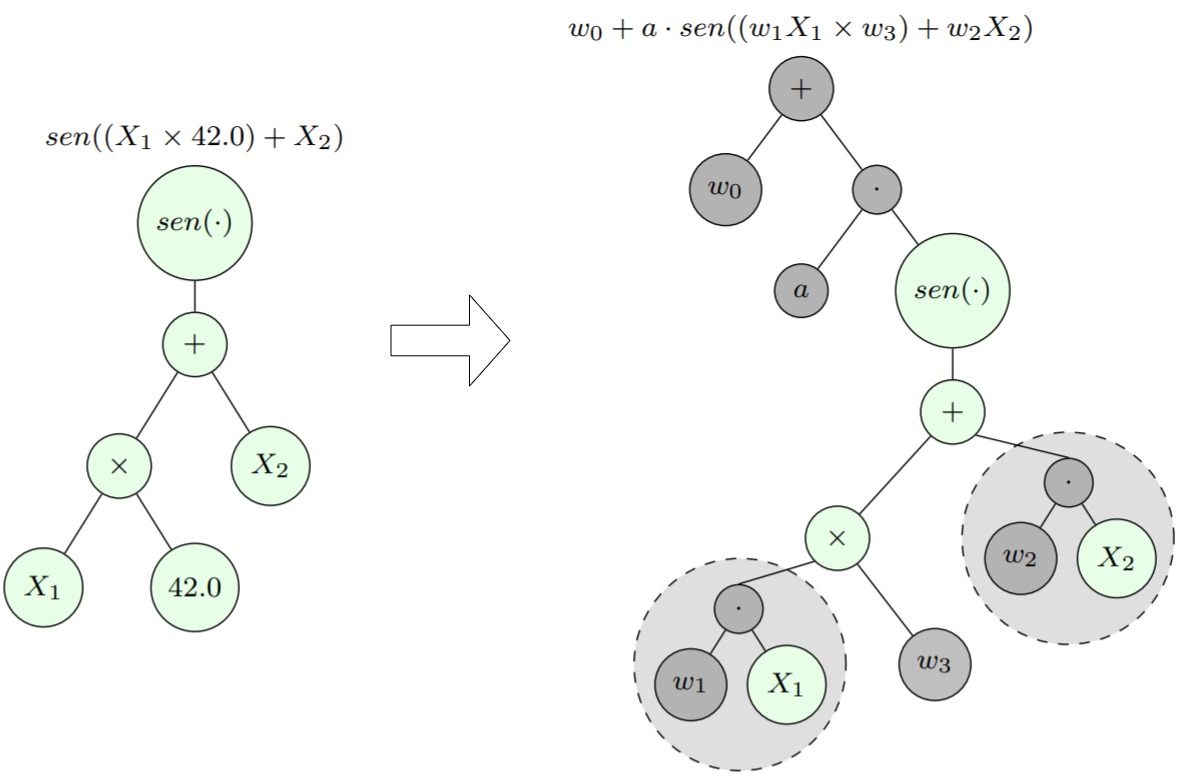

GP-NLS creates symbolic trees but expands them by adding an intercept, slope, and a coefficient to every variable. The new free parameters are then adjusted using the non-linear optimization method called Levenberg-Marquardt.
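To make the parameter expansion concrete, the sketch below takes a hypothetical expression `sin(x1) + x2`, wraps it with an intercept, a slope, and one coefficient per variable, and fits those parameters with a small hand-written Levenberg-Marquardt loop. Everything here is an assumption made for illustration: the example expression, the exact placement of the parameters, the finite-difference Jacobian, and the damping schedule. The package's own optimizer and parameterization may differ.

```julia
using LinearAlgebra

# Expanded form of the hypothetical tree sin(x1) + x2:
#   θ[1] is the intercept, θ[2] the slope, θ[3] and θ[4] scale x1 and x2.
model(X, θ) = θ[1] .+ θ[2] .* (sin.(θ[3] .* X[:, 1]) .+ θ[4] .* X[:, 2])

# Central finite-difference Jacobian of the model outputs w.r.t. θ.
function jacobian(model, X, θ; h=1e-6)
    J = zeros(size(X, 1), length(θ))
    for j in eachindex(θ)
        d = zeros(length(θ))
        d[j] = h
        J[:, j] = (model(X, θ .+ d) .- model(X, θ .- d)) ./ (2h)
    end
    return J
end

# Basic Levenberg-Marquardt: damped Gauss-Newton steps, accepted only when
# they reduce the sum of squared residuals.
function levenberg_marquardt(model, X, y, θ0; iters=100, λ=1e-3)
    θ = copy(θ0)
    r = y .- model(X, θ)
    for _ in 1:iters
        J = jacobian(model, X, θ)
        step = (J' * J + λ * I) \ (J' * r)
        θ_new = θ .+ step
        r_new = y .- model(X, θ_new)
        if sum(abs2, r_new) < sum(abs2, r)
            θ, r, λ = θ_new, r_new, λ / 10   # good step: trust the model more
        else
            λ *= 10                          # bad step: increase damping
        end
    end
    return θ
end

# Toy data generated with known parameter values.
x1 = range(-2, 2; length=40)
X = [x1 x1 .^ 2]
y = model(X, [1.5, 2.0, 0.8, 0.5])

# Start from the values that reproduce the unexpanded tree.
θ0 = [0.0, 1.0, 1.0, 1.0]
sse_before = sum(abs2, y .- model(X, θ0))
θ_fit = levenberg_marquardt(model, X, y, θ0)
sse_after = sum(abs2, y .- model(X, θ_fit))
```

Starting from intercept 0 and all other parameters 1 means the optimization begins at the expression GP produced, so an accepted step can only improve its fit to the data.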

The implementation is within folder `./GeneticProgrammingNLS`. In `./docs` you can find automatically generated documentation for the package. In `./experiments` there are some scripts to evaluate the different ways of creating/optimizing free parameters of the symbolic regression models, with results reported in `./experiments/results` and comparative plots in `./experiments/plots`.

Inside the git root folder (which contains the implementation of GP-NLS for symbolic regression), start a Julia terminal and enter the Pkg manager environment by pressing `]`. To add GP_NLS to your local packages, run `dev ./GP_NLS`.

Now you can use the "original" GP as well as the GP with non-linear least squares optimization by importing it with `using GP_NLS`. The first time you import it, Julia will precompile all files, which can take a while.

There is a simple Pluto notebook inside `./examples` showing how to load a data set and execute the GP algorithm for symbolic regression.

Inside the .GP-NLS folder (which contains the implementation of GP-NLS for symbolic regression), start a Julia terminal and enter the Pkg manager environment by pressing `]`. First you need to activate the local package with `activate .`. Then you can run the tests with `test`.

There are three scripts to benchmark different ways of creating/optimizing the constants in the expression trees. You can execute the experiments for a list of data sets with the scripts inside the `./experiments` folder.

For example, to test the GP-NLS coefficient optimization for the airfoil and cars data sets, run `julia LsqOptExperiment.jl airfoil cars`. The experiment script will perform 30 executions for each data set, and the results are saved inside `./experiments/results`. To further analyse the results, you can run the `post_hoc_analysis.jl` script (which is a Pluto notebook) with `julia post_hoc_analysis.jl`, and the plots comparing the methods will be saved in `./experiments/plots`.

You need to have Documenter. First, install it using the package manager with `import Pkg; Pkg.add("Documenter")`. Then, inside the `./docsource` folder, run `julia make.jl` in the terminal.