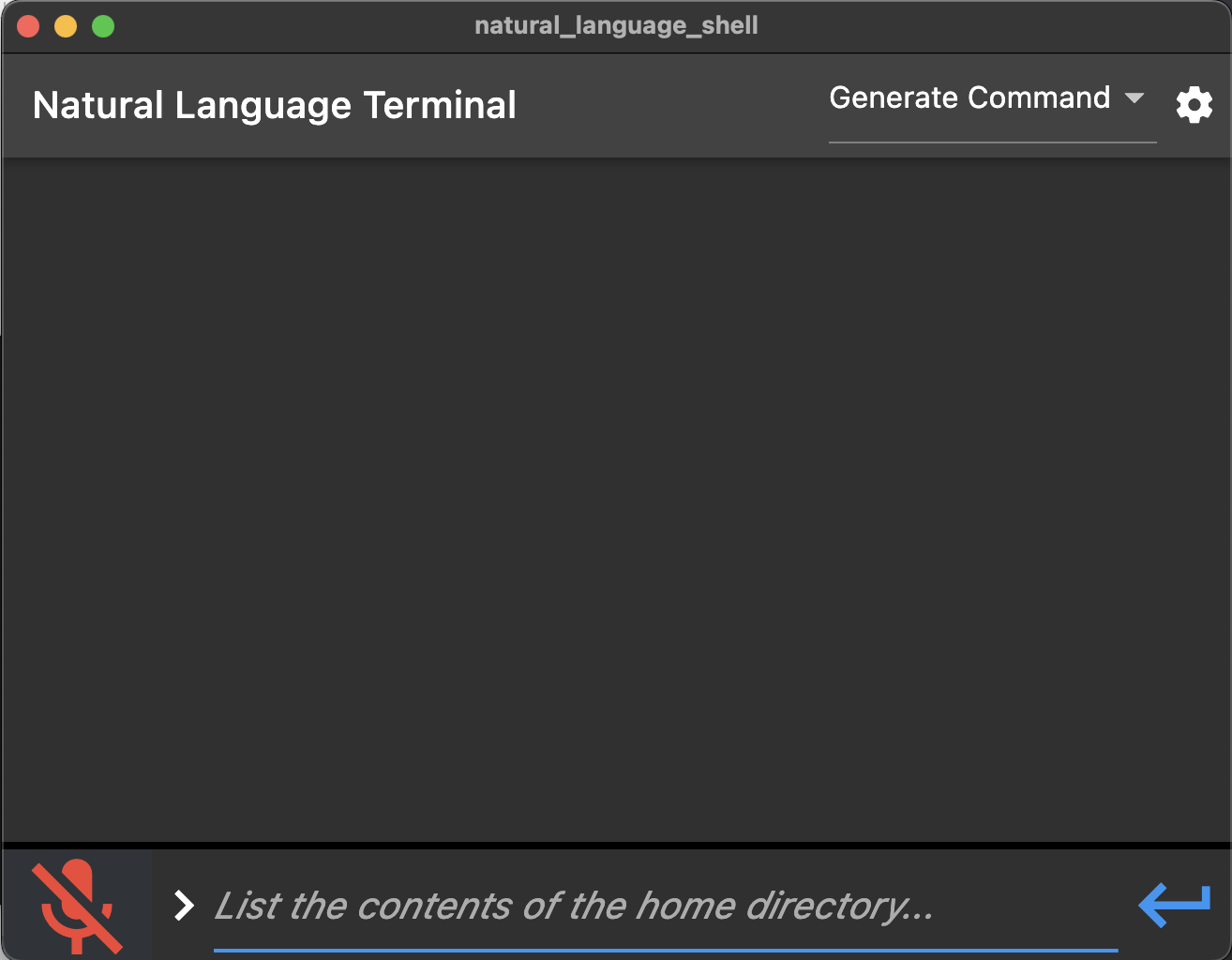

This project is a terminal that is controlled with natural language. It supports both keyboard and voice input. Bash commands are generated by an OpenAI model and are executed locally after validation.

- Note: macOS and Linux are the best-supported operating systems.

- Clone the repo.

- Run `cd natural-language-shell/frontend/natural_language_shell/cppCode/`.

- Run `cmake .` and `make` in the `shellApi` subdirectory (you may need to install cmake or make).

- Whisper speech-to-text integration only works on M1 Macs. If the microphone button doesn't work, dictation is not supported on your computer.

- Install Flutter on your system following these instructions.

- Add `{"key":"<your openai api key>"}` to `natural-language-shell/frontend/natural_language_shell/assets/keys/secret.json`.

- Run `flutter run` in `natural-language-shell/frontend/natural_language_shell`.
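The key file from the setup steps can also be created from the shell. This is a minimal sketch, not part of the project: `write_secret` is a hypothetical helper, and `OPENAI_API_KEY` is an environment variable name assumed here for illustration. It assumes the key contains no double quotes or backslashes.

```shell
# Hypothetical helper: writes {"key":"<key>"} to the given secret.json path.
# Assumes the key has no double quotes or backslashes.
write_secret() {
  key="$1"
  target="$2"
  # Create assets/keys/ (or any parent directory) if it does not exist yet.
  mkdir -p "$(dirname "$target")"
  # printf avoids echo's inconsistent escape handling across shells.
  printf '{"key":"%s"}\n' "$key" > "$target"
}

# Usage, from the directory that contains the clone
# (assumes OPENAI_API_KEY is exported in your environment):
# write_secret "$OPENAI_API_KEY" \
#   natural-language-shell/frontend/natural_language_shell/assets/keys/secret.json
```

Keeping the key in an environment variable means it does not end up in your shell history.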

- The Frontend is built using Flutter.

- Users describe the bash command they need and submit the request with either the Enter key or the application's enter button.

- The request is displayed as dialogue in the terminal, and the response generated from the prompt appears below it.

- The natural language terminal relies on OpenAI's Davinci model to convert natural language into terminal commands.

- After the user confirms that the generated command meets their requirements, the command is routed to the terminal execution section of the backend.

- The backend speech-to-text engine runs on a C++ whisper implementation found here.

- The terminal execution component of the backend is responsible for executing the bash commands generated by the OpenAI model. It consists of a simple C++ shell written by us.

- Flutter uses a foreign function interface to interact with the C++ backend. Setting this up with the necessary C++ libraries took a bit of time.
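The confirm-then-execute flow described above can be illustrated in a few lines of shell. This is only a sketch of the idea: the project itself implements the flow in Flutter and C++, and `confirm_and_run` is a hypothetical name introduced here.

```shell
# Hypothetical helper: shows a generated command, asks for confirmation on
# stdin, and runs it only after an explicit "y" or "Y".
confirm_and_run() {
  cmd="$1"
  # The prompt goes to stderr so stdout carries only the command's output.
  printf 'Generated command: %s\nRun it? [y/N] ' "$cmd" >&2
  read -r answer || answer=""
  case "$answer" in
    y|Y) bash -c "$cmd" ;;
    *)   printf 'Skipped.\n' >&2; return 1 ;;
  esac
}

# Usage:
# confirm_and_run 'ls -l'
```

Defaulting to "no" on any other input means an accidental Enter never runs a generated command.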

Enter these prompts in the terminal to get their bash equivalents.

- List the contents of the home directory

- Find any files or folders related to ssl

- Show the permissions of the files in the current directory

- Generate a random password

`ls ~` prints:

- `android-sdk-linux`
- `flutter`

`find / -name "*ssl*"` prints:

- `/usr/share/perl-openssl-defaults/perl-openssl.make`
- `/usr/bin/rsync-ssl`
- `/usr/bin/dh_perl_openssl`
- `/usr/bin/openssl`
- `/var/lib/dpkg/info/libssl3:amd64.symbols`

`ls -l` prints:

- `-rw-r--r-- 1 vscode vscode 643 Feb 5 11:08 Installation.txt`
- `-rwxrwxrwx 1 root root 0 Feb 4 17:41 README.md`
- `-rw-r--r-- 1 vscode vscode 106521876 Feb 4 22:01 httplib.h.gch`
- `-rw-r--r-- 1 vscode vscode 907858 Feb 5 12:03 json.hpp`

`tr -dc A-Za-z0-9 < /dev/urandom | head -c 16` prints:

- `rX4Bs1u6MvJQdXg1`

- Use a locally runnable LLM, for example through llama.cpp, for the NLP tasks.

- To improve overall model performance, we could generate training data that pairs bash commands with their natural language descriptions. This data would be used with OpenAI's fine-tuning feature or a LLaMA fine-tune to focus the model on terminal command generation.

- Create a more cohesive experience in which commands are automatically explained and the UI is easier to understand.
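The training data idea above could start from a file of description/command pairs, one JSON object per line. The sketch below is an assumption about how such data might be produced, not an existing part of the project: `json_escape` and `training_record` are hypothetical helpers, and the `prompt`/`completion` field names follow the layout of OpenAI's legacy fine-tuning format, so check the current fine-tuning documentation before relying on them.

```shell
# Hypothetical helper: escapes backslashes and double quotes for a JSON string.
# It does not handle newlines or control characters, so it only suits
# one-line descriptions and commands.
json_escape() {
  printf '%s' "$1" | sed 's/\\/\\\\/g; s/"/\\"/g'
}

# Hypothetical helper: emits one JSONL record that pairs a natural language
# description ($1) with its bash command ($2).
training_record() {
  printf '{"prompt":"%s","completion":"%s"}\n' \
    "$(json_escape "$1")" "$(json_escape "$2")"
}

# Usage: append records to a JSONL file (train.jsonl is an example name).
# training_record "List the contents of the home directory" "ls ~" >> train.jsonl
# training_record "Find any files or folders related to ssl" 'find / -name "*ssl*"' >> train.jsonl
```

One record per line keeps the file easy to append to and to filter with standard tools such as `grep` and `wc -l`.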