PyTorch implementation for the Dynamic Concept Learner (DCL).

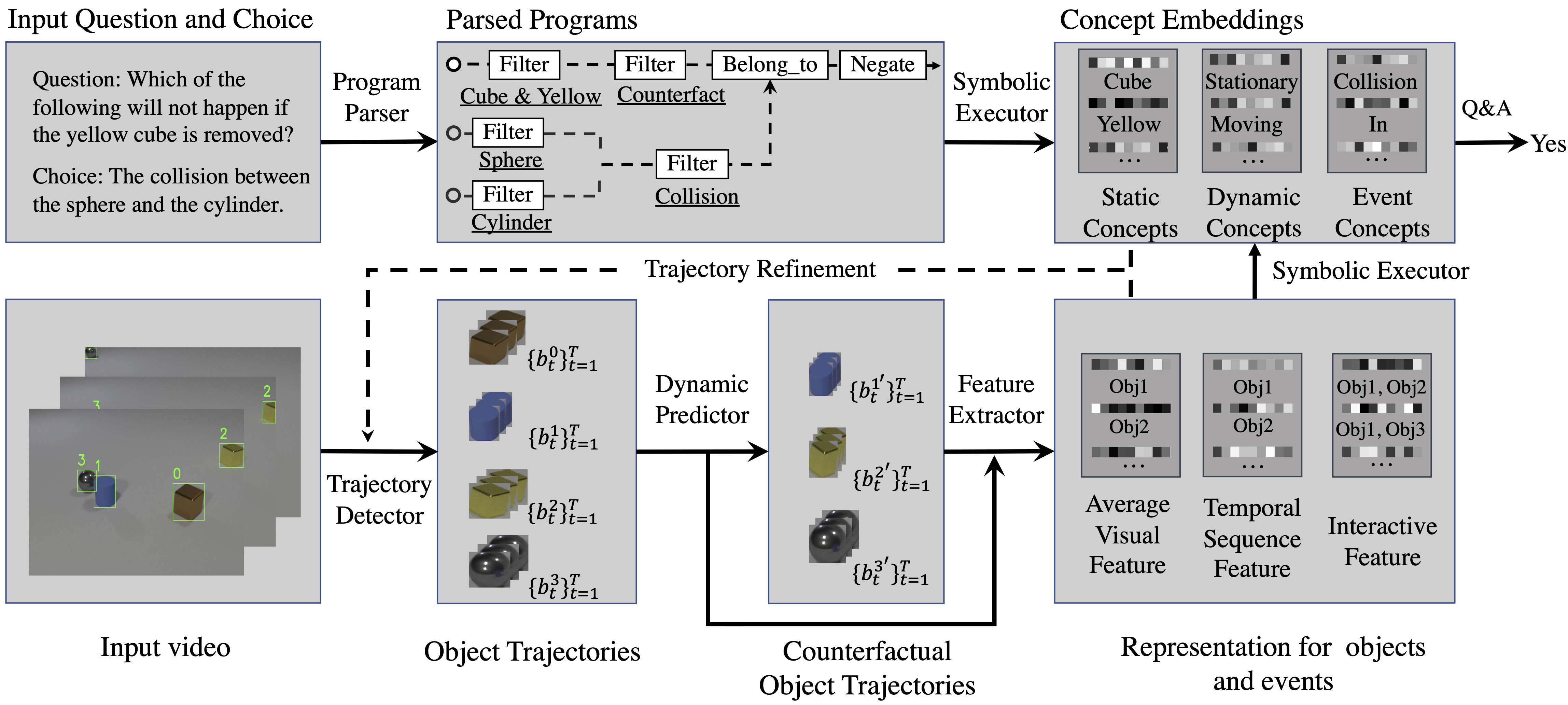

Grounding Physical Object and Event Concepts Through Dynamic Visual Reasoning

Zhenfang Chen, Jiayuan Mao, Jiajun Wu, Kwan-Yee K. Wong, Joshua B. Tenenbaum, and Chuang Gan

- Python 3

- PyTorch 1.0 or higher, with NVIDIA CUDA Support

- Other required Python packages specified by requirements.txt. See the installation steps below.

Install Jacinle: Clone the package, and add the bin path to your global PATH environment variable:

git clone https://github.com/vacancy/Jacinle --recursive

export PATH=<path_to_jacinle>/bin:$PATH
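After updating PATH, it can help to confirm that the Jacinle launcher is actually visible. The following is a minimal sketch and not part of this repository: the helper name `missing_tools` is hypothetical, and it only checks for `jac-crun`, the Jacinle command used later in this README.

```python
import shutil


def missing_tools(names, path=None):
    """Return the names that cannot be found as executables on the search path.

    `path` follows the convention of shutil.which: None means "use the
    current PATH environment variable".
    """
    return [name for name in names if shutil.which(name, path=path) is None]


if __name__ == "__main__":
    # jac-crun is the Jacinle launcher invoked by the evaluation commands
    # further down in this README.
    missing = missing_tools(["jac-crun"])
    if missing:
        print("Not found on PATH:", ", ".join(missing))
    else:
        print("Jacinle commands are available.")
```

If `jac-crun` is reported as missing, the `export PATH=...` line was most likely run in a different shell session or points at the wrong directory.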

Clone this repository:

git clone https://github.com/zfchenUnique/DCL-Release-Private.git --recursive

Create a conda environment for NS-CL, and install the requirements. This includes the required Python packages from both Jacinle and NS-CL. Most of the required packages are already included in the built-in anaconda package.

- Download the videos, video annotations, questions and answers, and object proposals from the official website.

- Transform videos into ".png" frames with ffmpeg.

- Organize the data as shown below.

    clevrer
    ├── annotation_00000-01000
    │   ├── annotation_00000.json
    │   ├── annotation_00001.json
    │   └── ...
    ├── ...
    ├── image_00000-01000
    │   │   ├── 1.png
    │   │   ├── 2.png
    │   │   └── ...
    │   └── ...
    ├── ...
    ├── questions
    │   ├── train.json
    │   ├── validation.json
    │   └── test.json
    ├── proposals
    │   ├── proposal_00000.json
    │   ├── proposal_00001.json
    │   └── ...
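The frame-extraction step above can be sketched as follows. This is an illustration under stated assumptions rather than the authors' script: the helper names `ffmpeg_frame_command` and `extract_frames`, the example video file name, and the output directory are all hypothetical. It relies on ffmpeg's image-sequence output, which numbers frames from 1 by default and so matches the `1.png`, `2.png` naming in the tree.

```python
import subprocess
from pathlib import Path


def ffmpeg_frame_command(video_path, frame_dir):
    """Build an ffmpeg command that writes every frame of a video as PNG.

    The output pattern %d.png yields 1.png, 2.png, ... because ffmpeg's
    image-sequence muxer starts numbering at 1 by default.
    """
    pattern = Path(frame_dir) / "%d.png"
    return ["ffmpeg", "-i", str(video_path), str(pattern)]


def extract_frames(video_path, frame_dir):
    """Create the output directory and run ffmpeg (requires ffmpeg on PATH)."""
    Path(frame_dir).mkdir(parents=True, exist_ok=True)
    subprocess.run(ffmpeg_frame_command(video_path, frame_dir), check=True)


if __name__ == "__main__":
    # Hypothetical paths, shown only to illustrate the resulting command.
    print(" ".join(ffmpeg_frame_command("video_00000.mp4", "frames/video_00000")))
```

Building the command as a list and passing it to `subprocess.run` avoids shell quoting problems when paths contain spaces.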

- Download the extracted object trajectories from Google Drive.

- Clone the dynamic model repository, download the image proposals and the pretrained propNet models, and make dynamic predictions with:

git clone https://github.com/zfchenUnique/clevrer_dynamic_propnet.git

cd clevrer_dynamic_propnet

sh ./scripts/eval_fast_release_v2.sh 0

- Download the pretrained DCL model and parsed programs.

sh scripts/script_test_prp_clevrer_qa.sh 0

- Get the accuracy on EvalAI.

- Step 1: download the proposals from the region proposal network and extract object trajectories for the training and validation sets with

sh scripts/script_gen_tubes.sh

- Step 2: train a concept learner with descriptive and explanatory questions for static concepts (i.e. color, shape and material)

sh scripts/script_train_dcl_stage1.sh 0

- Step 3: extract static attributes and refine object trajectories

Extract static attributes:

sh scripts/script_extract_attribute.sh

Refine object trajectories:

sh scripts/script_gen_tubes_refine.sh

- Step 4: extract predictive and counterfactual scenes by

cd clevrer_dynamic_propnet

sh ./scripts/train_tube_box_only.sh # train

sh ./scripts/train_tube.sh # train

sh ./scripts/eval_fast_release_v2.sh 0 # val

- Step 5: train DCL with all questions and the refined trajectories

sh scripts/script_train_dcl_stage2.sh 0
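The five training steps above can be collected into a single ordered plan. The sketch below is illustrative only: `training_plan` is a hypothetical helper, the trailing `0` in the original commands is assumed to be a GPU index, and the location of `clevrer_dynamic_propnet` relative to the working directory is assumed from the `cd` shown in step 4. It prints the commands rather than running them.

```python
def training_plan(gpu="0"):
    """Return (working_dir, argv) pairs for the step-by-step training commands.

    The gpu argument stands in for the trailing `0` in the README commands,
    which is assumed here to select a GPU.
    """
    dcl = "."                            # root of this repository
    propnet = "clevrer_dynamic_propnet"  # dynamic model repository (assumed location)
    return [
        (dcl, ["sh", "scripts/script_gen_tubes.sh"]),                 # step 1
        (dcl, ["sh", "scripts/script_train_dcl_stage1.sh", gpu]),     # step 2
        (dcl, ["sh", "scripts/script_extract_attribute.sh"]),         # step 3
        (dcl, ["sh", "scripts/script_gen_tubes_refine.sh"]),          # step 3
        (propnet, ["sh", "./scripts/train_tube_box_only.sh"]),        # step 4
        (propnet, ["sh", "./scripts/train_tube.sh"]),                 # step 4
        (propnet, ["sh", "./scripts/eval_fast_release_v2.sh", gpu]),  # step 4
        (dcl, ["sh", "scripts/script_train_dcl_stage2.sh", gpu]),     # step 5
    ]


if __name__ == "__main__":
    # Dry run: show each command together with the directory it runs in.
    for workdir, argv in training_plan():
        print(f"(cd {workdir} && {' '.join(argv)})")
```

Keeping the working directory next to each command makes the switch into the dynamic model repository for step 4 explicit.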

- Step 1: download the expression annotations and parsed programs from Google Drive

- Step 2: evaluate the performance on CLEVRER-Grounding

sh ./scripts/script_grounding.sh 0

jac-crun 0 scripts/script_evaluate_grounding.py

- Step 1: download the expression annotations and parsed programs from Google Drive

- Step 2: evaluate the performance on CLEVRER-Retrieval

sh ./scripts/script_retrieval.sh 0

jac-crun 0 scripts/script_evaluate_retrieval.py
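The CLEVRER-Grounding and CLEVRER-Retrieval evaluations follow the same two-command pattern, so the commands can be generated from the task name. This is a hypothetical convenience wrapper, not something the repository provides: `evaluation_commands` is an invented name, and the `0` argument is assumed to be a GPU index.

```python
def evaluation_commands(task, gpu="0"):
    """Return the two commands used to run and then score one task."""
    if task not in ("grounding", "retrieval"):
        raise ValueError(f"unknown task: {task!r}")
    return [
        ["sh", f"./scripts/script_{task}.sh", gpu],
        ["jac-crun", gpu, f"scripts/script_evaluate_{task}.py"],
    ]


if __name__ == "__main__":
    # Print the commands for both tasks without executing them.
    for task in ("grounding", "retrieval"):
        for argv in evaluation_commands(task):
            print(" ".join(argv))
```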

- Step 1: download the question annotations and videos from Google Drive

- Step 2: train on Tower block QA

sh ./scripts/script_train_blocks.sh 0

- Step 3: download the pretrained model from Google Drive and evaluate on Tower block QA

sh ./scripts/script_eval_blocks.sh 0

- Qualitative Results

- CLEVRER-Grounding training set Annotation

- CLEVRER-Retrieval training set Annotation

If you find this repo useful in your research, please consider citing:

@inproceedings{zfchen2021iclr,
    title={Grounding Physical Object and Event Concepts Through Dynamic Visual Reasoning},
    author={Chen, Zhenfang and Mao, Jiayuan and Wu, Jiajun and Wong, Kwan-Yee K and Tenenbaum, Joshua B. and Gan, Chuang},
    booktitle={International Conference on Learning Representations},
    year={2021}
}