This repository contains the code for the paper "FIRST: Faster Improved Listwise Reranking with Single Token Decoding".

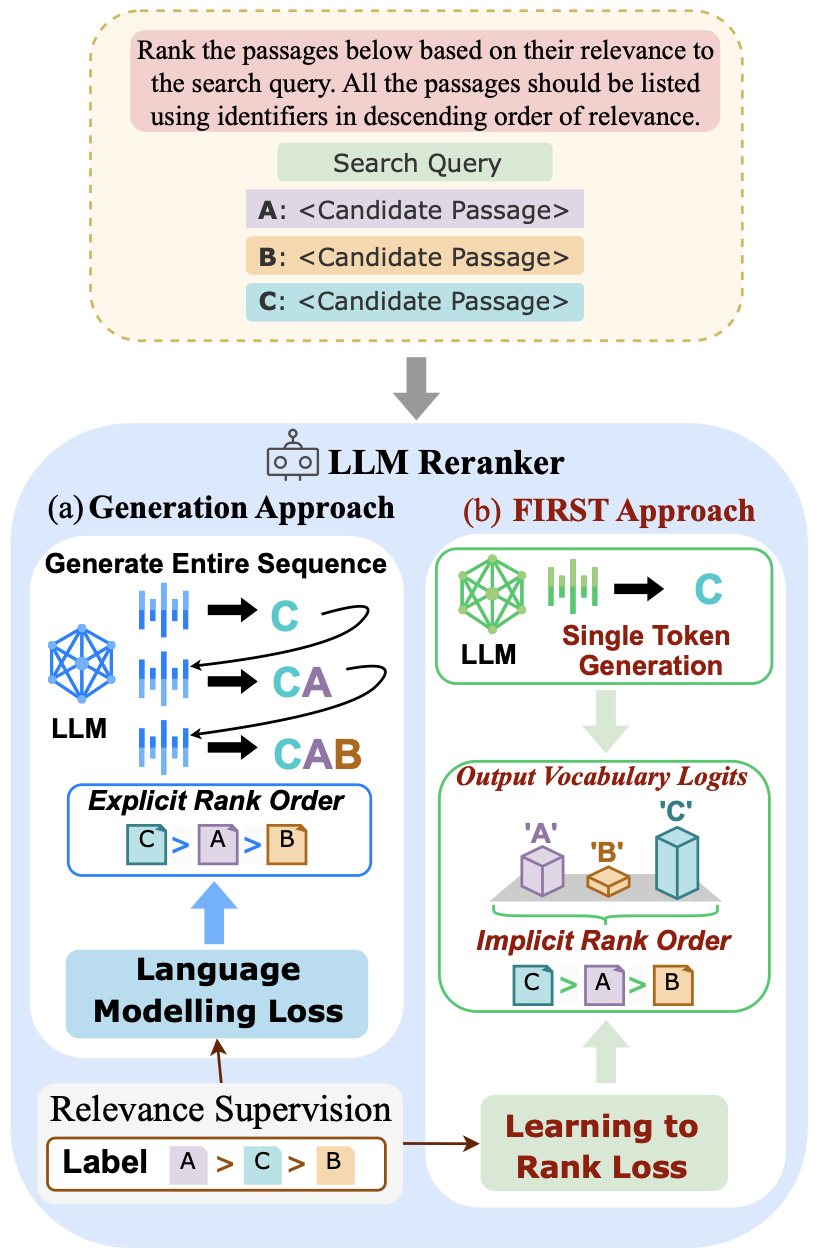

FIRST is a novel listwise LLM reranking approach leveraging the output logits of the first generated identifier to obtain a ranked ordering of the input candidates directly. FIRST incorporates a learning-to-rank loss during training, prioritizing ranking accuracy for the more relevant passages.
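The single-token idea can be illustrated with a minimal sketch. It assumes the logits that the model assigns to each candidate identifier at the first decoding step have already been extracted; the function name and the logit values are hypothetical and are not taken from this repository's code.

```python
from typing import Dict, List


def rank_from_first_token_logits(id_logits: Dict[str, float]) -> List[str]:
    """Order candidate identifiers by the logit each one receives
    at the first decoding step (highest logit first)."""
    return sorted(id_logits, key=lambda pid: id_logits[pid], reverse=True)


# Hypothetical logits for four candidates labelled A-D (invented values).
logits = {"A": 1.2, "B": 3.4, "C": -0.5, "D": 2.1}
ranking = rank_from_first_token_logits(logits)
print(" > ".join(ranking))  # B > D > A > C
```

Because the full ordering is read from one set of logits, no further tokens need to be generated to rank the candidates.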

You need to install the tevatron library (original source here) which provides the framework for retrieval.

git clone https://github.com/gangiswag/llm-reranker.git

cd llm-reranker

conda create --name reranker python=3.9.18

cd tevatron

pip install --editable .

pip install beir

Note: You also need to install the vLLM library (instructions here), which provides optimized LLM inference.

Before running the scripts below, set:

export REPO_DIR=<path to the llm-reranker directory>

We use contriever as the underlying retrieval model. The precomputed query and passage embeddings for BEIR are available here.

Note: If you do not wish to run the retrieval yourself, the retrieval results are provided here and you can jump directly to Reranking.

To run the contriever retrieval using the precomputed encodings, run:

bash bash/beir/run_1st_retrieval.sh <Path to folder with BEIR encodings>

To get the retrieval scores, run:

bash bash/beir/run_eval.sh rank

To run the baseline cross encoder re-ranking, run:

bash bash/beir/run_rerank.sh

To convert the retrieval results to input for LLM reranking, run:

bash bash/beir/run_convert_results.sh

We provide the trained FIRST reranker here.

To run the FIRST reranking, run:

bash bash/beir/run_rerank_llm.sh

To evaluate the reranking performance, run:

bash bash/beir/run_eval.sh rerank

Note: Set the --suffix flag to "llm_FIRST_alpha" to evaluate the FIRST reranker, or to "ce" to evaluate the cross encoder reranker.

We also provide the data and scripts to train the LLM reranker yourself.

The converted training dataset (alphabetic IDs) is available on Hugging Face. The standard numeric training dataset can be found here.
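To make the difference between the two datasets concrete, the sketch below rewrites numeric passage identifiers as letters. The bracketed `[1] > [2]` permutation format and the helper name are assumptions made for illustration; check the dataset files for the exact format used.

```python
import re
import string


def numeric_to_alpha_ids(text: str) -> str:
    """Rewrite bracketed numeric identifiers such as [3] as letters such as [C]."""

    def swap(match):
        index = int(match.group(1))
        # Only 1..26 map onto a single uppercase letter; leave other values untouched.
        if 1 <= index <= len(string.ascii_uppercase):
            return f"[{string.ascii_uppercase[index - 1]}]"
        return match.group(0)

    return re.sub(r"\[(\d+)\]", swap, text)


print(numeric_to_alpha_ids("[2] > [1] > [3]"))  # [B] > [A] > [C]
```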

We support three training objectives:

- Ranking: The Ranking objective uses a learning-to-rank algorithm to output the logits for the highest-ranked passage ID.

- Generation: The Generation objective follows the principles of Causal Language Modeling, focusing on permutation generation.

- Combined: The Combined objective, introduced in our paper, is a weighted combination of the ranking and generation objectives and is the setting used for the FIRST model.
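The relationship between the three objectives can be sketched as follows. This is one plausible instantiation, assumed here rather than copied from the training code: a pairwise RankNet-style loss in which each pair is weighted by the inverse of the sum of its ranks (so pairs near the top of the list count more), added to the language-modeling loss with a hypothetical mixing coefficient `lam`.

```python
import math
from typing import List


def weighted_ranknet_loss(scores: List[float], ranks: List[int]) -> float:
    """Pairwise RankNet-style loss; ranks are 1-based, lower is better.
    Each pair is weighted by 1 / (rank_i + rank_j) (an assumed weighting)."""
    loss = 0.0
    for s_i, r_i in zip(scores, ranks):
        for s_j, r_j in zip(scores, ranks):
            if r_i < r_j:  # passage i should outrank passage j
                weight = 1.0 / (r_i + r_j)
                loss += weight * math.log1p(math.exp(s_j - s_i))
    return loss


def combined_loss(lm_loss: float, rank_loss: float, lam: float) -> float:
    """Weighted sum of the generation (language-modeling) and ranking losses."""
    return lm_loss + lam * rank_loss


# Scores that agree with the target ranks give a smaller ranking loss
# than scores that invert them.
agreeing = weighted_ranknet_loss([3.0, 2.0, 1.0], [1, 2, 3])
inverted = weighted_ranknet_loss([1.0, 2.0, 3.0], [1, 2, 3])
print(agreeing < inverted)  # True
```

Setting `lam` to zero recovers a generation-only objective, while dropping the language-modeling term leaves a ranking-only objective.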

To train the model, run:

bash bash/beir/run_train.sh

To train a gated model, log in to Hugging Face and obtain an access token at huggingface.co/settings/tokens.

huggingface-cli login

We also provide scripts here to use the LLM reranker for a downstream task, such as relevance feedback. Inference-time relevance feedback uses the reranker's output to distill the retriever's query embedding to improve recall.
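A rough sketch of the idea behind this distillation step is shown below. It assumes dot-product retrieval scores, a softmax over the candidate list, and plain gradient descent on the cross-entropy between the reranker's distribution and the retriever's distribution; the function names, learning rate, and step count are illustrative assumptions, not the settings used by the scripts.

```python
import math
from typing import List


def softmax(xs: List[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a: List[float], b: List[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def distill_query(query: List[float], passages: List[List[float]],
                  reranker_scores: List[float],
                  lr: float = 0.5, steps: int = 100) -> List[float]:
    """Update the query embedding so retriever scores move toward the reranker's."""
    target = softmax(reranker_scores)
    q = list(query)
    for _ in range(steps):
        pred = softmax([dot(q, p) for p in passages])
        # Gradient of the cross-entropy with respect to q:
        # sum_k (pred_k - target_k) * passage_k
        grad = [sum((pred[k] - target[k]) * passages[k][d]
                    for k in range(len(passages)))
                for d in range(len(q))]
        q = [q_d - lr * g_d for q_d, g_d in zip(q, grad)]
    return q


# Toy case: the retriever initially prefers passage 0, the reranker prefers passage 1.
passages = [[1.0, 0.0], [0.0, 1.0]]
new_query = distill_query([1.0, 0.0], passages, reranker_scores=[0.0, 2.0])
print(dot(new_query, passages[1]) > dot(new_query, passages[0]))  # True
```

Only the query embedding changes in this sketch; the passage embeddings stay fixed, so the existing index can be searched again with the updated query.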

To prepare dataset(s) for relevance feedback, run:

bash bash/beir/run_prepare_distill.sh <Path to folder with BEIR encodings>

You can run distillation with the cross encoder, the LLM reranker, or both sequentially. To perform the relevance feedback distillation step, run:

bash bash/beir/run_distill.sh

This step creates new query embeddings after distillation.

To perform the retrieval step with the new query embedding after distillation, run:

bash bash/beir/run_2nd_retrieval.sh <Path to folder with BEIR encodings>

To evaluate the 2nd retrieval step, run:

bash bash/beir/run_eval.sh rank_refit

If you found this repo useful for your work, please consider citing our papers:

@article{reddy2024first,

title={FIRST: Faster Improved Listwise Reranking with Single Token Decoding},

author={Reddy, Revanth Gangi and Doo, JaeHyeok and Xu, Yifei and Sultan, Md Arafat and Swain, Deevya and Sil, Avirup and Ji, Heng},

journal={arXiv preprint arXiv:2406.15657},

year={2024}

}

@article{reddy2023inference,

title={Inference-time Re-ranker Relevance Feedback for Neural Information Retrieval},

author={Reddy, Revanth Gangi and Dasigi, Pradeep and Sultan, Md Arafat and Cohan, Arman and Sil, Avirup and Ji, Heng and Hajishirzi, Hannaneh},

journal={arXiv preprint arXiv:2305.11744},

year={2023}

}

We also acknowledge the following open-source repos, which were instrumental for this work: