IGEV++: Iterative Multi-range Geometry Encoding Volumes for Stereo Matching

Gangwei Xu, Xianqi Wang, Zhaoxing Zhang, Junda Cheng, Chunyuan Liao, Xin Yang

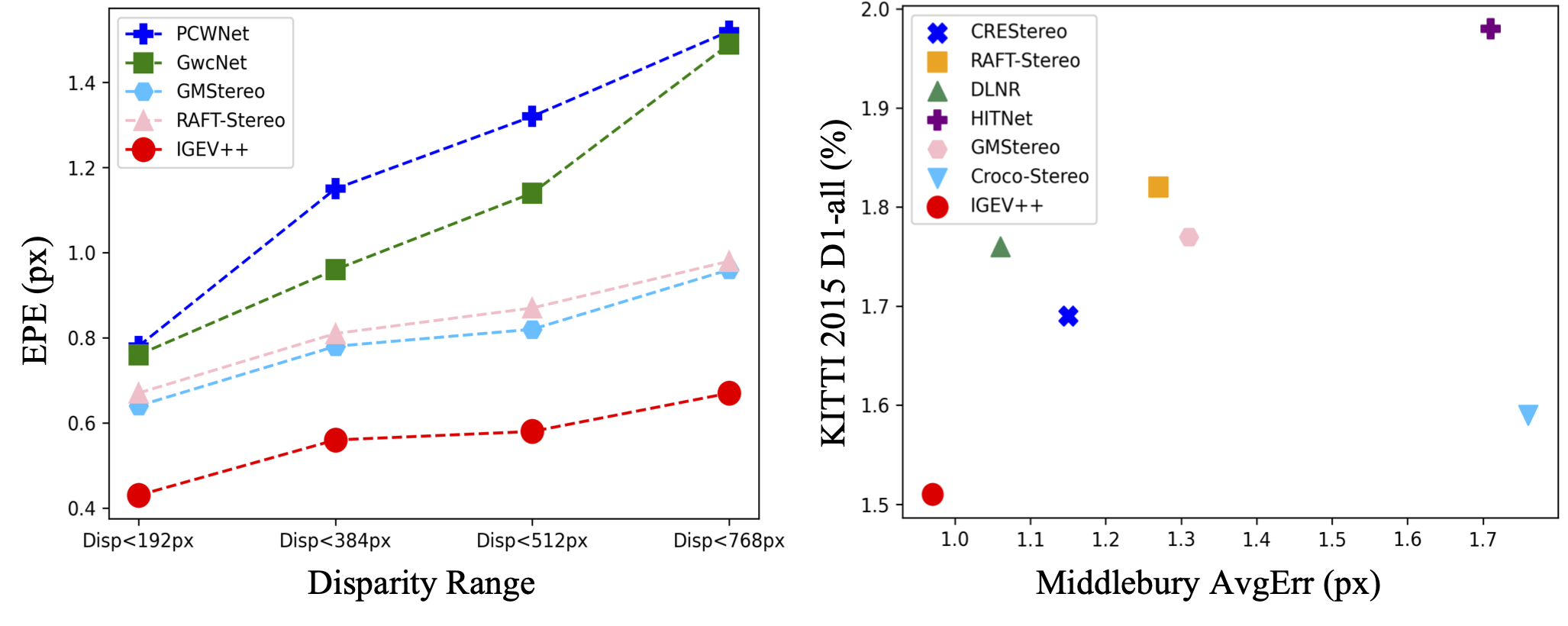

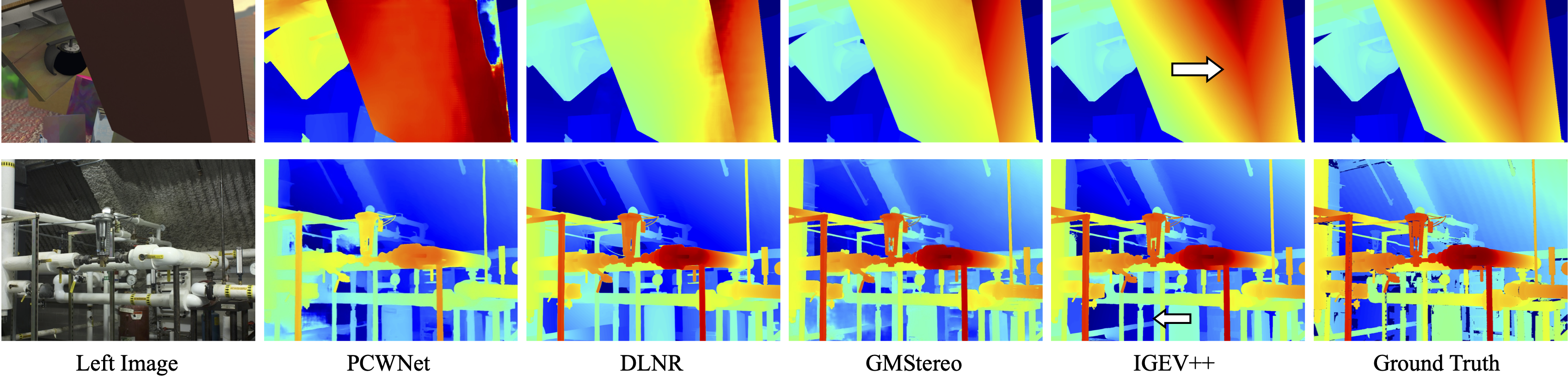

PCWNet is a volume filtering-based method, DLNR is an iterative optimization-based method, and GMStereo is a transformer-based method. Our IGEV++ performs well in large textureless regions at close range with large disparities.


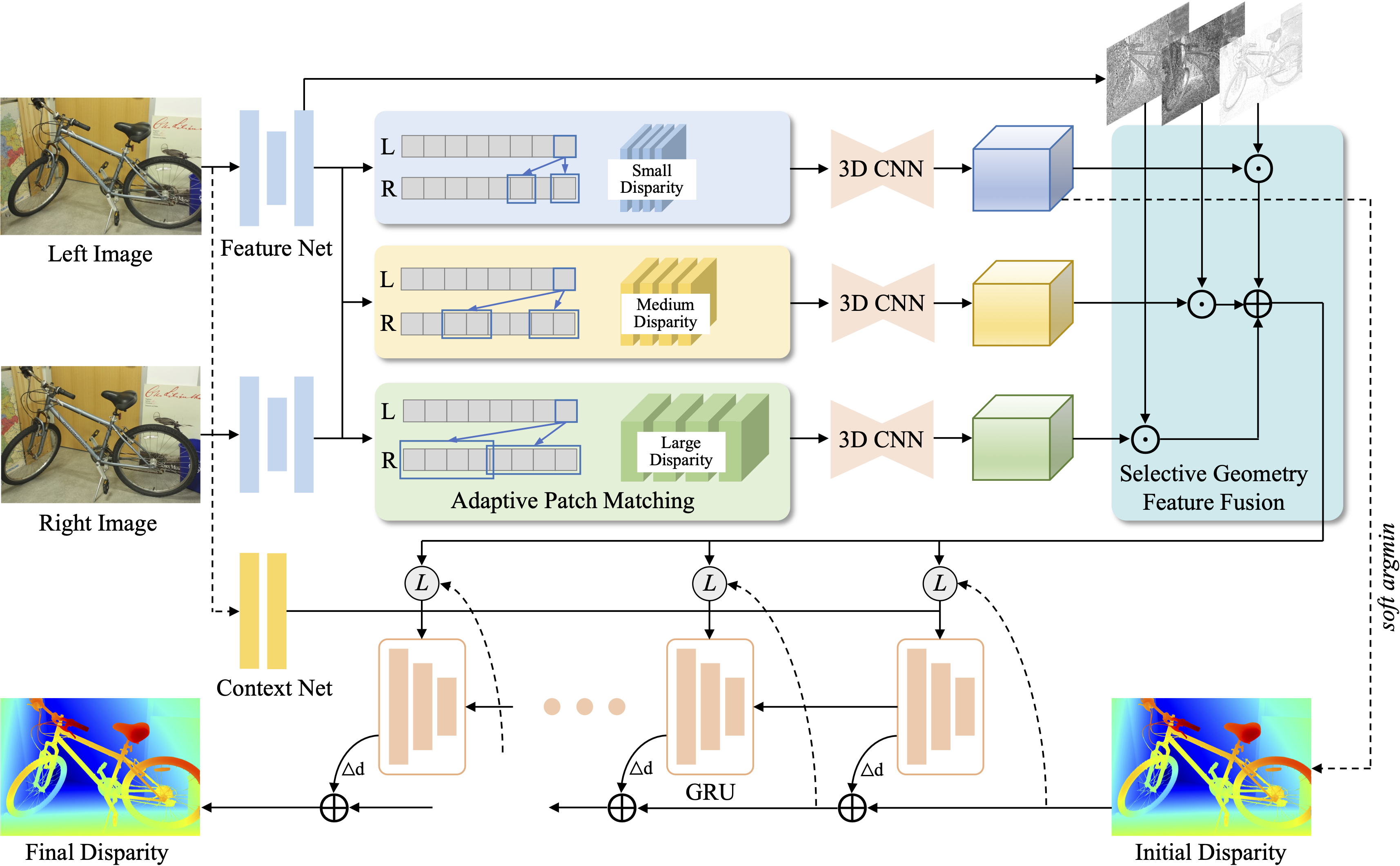

IGEV++ first builds Multi-range Geometry Encoding Volumes (MGEV) via Adaptive Patch Matching (APM). After 3D aggregation or regularization, MGEV encodes coarse-grained geometry information of the scene for textureless regions and large disparities, and fine-grained geometry information for details and small disparities. We then regress an initial disparity map from MGEV through soft argmin, which serves as the starting point for the ConvGRUs. In each iteration, we index multi-range and multi-granularity geometry features from MGEV, selectively fuse them, and feed them into the ConvGRUs to update the disparity field.
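The soft argmin step mentioned above can be illustrated for a single pixel. This is a minimal sketch, not the repository's implementation: the function name `soft_argmin` is hypothetical, and it assumes the volume stores matching scores where a higher value means a better match, so the softmax is applied to the scores directly.

```python
import math


def soft_argmin(scores, disparities=None):
    """Return the expected disparity under a softmax over matching scores.

    scores: one matching score per disparity candidate for a single pixel
            (assumption: higher score = better match).
    disparities: candidate disparity values; defaults to 0, 1, 2, ...
    """
    if disparities is None:
        disparities = range(len(scores))
    # Subtract the maximum before exponentiating for numerical stability.
    peak = max(scores)
    weights = [math.exp(s - peak) for s in scores]
    total = sum(weights)
    # Probability-weighted average of the candidates gives a sub-pixel estimate.
    return sum(d * w for d, w in zip(disparities, weights)) / total


if __name__ == "__main__":
    # Two equally strong neighbouring candidates yield a value between them.
    print(soft_argmin([0.0, 10.0, 10.0, 0.0]))
```

Because the result is a weighted average rather than a hard maximum, it is differentiable and can land between integer disparity candidates, which is what makes it usable as a starting point for iterative refinement.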


Pretrained models can be downloaded from Google Drive.

We assume the downloaded pretrained weights are located under the pretrained_models directory.

You can demo a trained model on pairs of images. To predict disparity for the image pairs in the demo-imgs directory, run

python demo_imgs.py --restore_ckpt ./pretrained_models/igev_plusplus/sceneflow.pth --left_imgs './demo-imgs/*/im0.png' --right_imgs './demo-imgs/*/im1.png'

You can switch to your own test data directory, or place your own pairs of test images in ./demo-imgs.
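The glob patterns in the command are single-quoted so that the shell passes them through unexpanded and the script can expand them itself. The sketch below shows one way such patterns can be turned into left/right pairs. It assumes matches are sorted and paired by position; `pair_stereo_images` is a hypothetical helper and is not taken from `demo_imgs.py`.

```python
import glob


def pair_stereo_images(left_pattern, right_pattern):
    """Expand two glob patterns and pair the sorted matches by position.

    Assumption: each scene directory holds one left and one right image,
    so sorting both match lists keeps corresponding images aligned.
    """
    left_paths = sorted(glob.glob(left_pattern, recursive=True))
    right_paths = sorted(glob.glob(right_pattern, recursive=True))
    if len(left_paths) != len(right_paths):
        raise ValueError(
            f"found {len(left_paths)} left images but {len(right_paths)} right images"
        )
    return list(zip(left_paths, right_paths))


if __name__ == "__main__":
    for left, right in pair_stereo_images(
        "./demo-imgs/*/im0.png", "./demo-imgs/*/im1.png"
    ):
        print(left, "<->", right)
```

When adding your own data, checking the printed pairs before running inference is a quick way to catch a scene directory that is missing one of its two images.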

- NVIDIA RTX 3090

- python 3.8

- torch 1.12.1+cu113

conda create -n IGEV_plusplus python=3.8

conda activate IGEV_plusplus

pip install torch==1.12.1+cu113 torchvision==0.13.1+cu113 torchaudio==0.12.1 --extra-index-url https://download.pytorch.org/whl/cu113

pip install tqdm

pip install scipy

pip install opencv-python

pip install scikit-image

pip install tensorboard

pip install matplotlib

pip install timm==0.5.4
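After running the install commands, it can help to confirm what actually ended up in the environment. The following is a small sketch using only the standard library (`importlib.metadata`, available from Python 3.8). The package list mirrors the pip commands above, while `installed_versions` is a hypothetical helper name.

```python
from importlib import metadata

# Distribution names as they appear in the pip commands above.
REQUIRED = [
    "torch", "torchvision", "torchaudio", "tqdm", "scipy",
    "opencv-python", "scikit-image", "tensorboard", "matplotlib", "timm",
]


def installed_versions(packages):
    """Map each distribution name to its installed version, or None if absent."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


if __name__ == "__main__":
    for name, version in installed_versions(REQUIRED).items():
        print(f"{name}: {version or 'NOT INSTALLED'}")
```

The reported versions can then be compared by eye against the pinned ones, for example `torch 1.12.1+cu113` and `timm 0.5.4`.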

- SceneFlow

- KITTI

- ETH3D

- Middlebury

- TartanAir

- CREStereo Dataset

- FallingThings

- InStereo2K

- Sintel Stereo

- HR-VS

To evaluate IGEV++ on Scene Flow or Middlebury, run

python evaluate_stereo.py --restore_ckpt ./pretrained_models/igev_plusplus/sceneflow.pth --dataset sceneflow

or

python evaluate_stereo.py --restore_ckpt ./pretrained_models/igev_plusplus/sceneflow.pth --dataset middlebury_H

To evaluate RT-IGEV++ (real-time version) on Scene Flow, run

python evaluate_stereo_rt.py --dataset sceneflow --restore_ckpt ./pretrained_models/igev_rt/sceneflow.pth

To train IGEV++ on Scene Flow or KITTI, run

python train_stereo.py --train_datasets sceneflow

or

python train_stereo.py --train_datasets kitti --restore_ckpt ./pretrained_models/igev_plusplus/sceneflow.pth

To train IGEV++ on Middlebury or ETH3D, you need to run

python train_stereo.py --train_datasets middlebury_train --restore_ckpt ./pretrained_models/igev_plusplus/sceneflow.pth --image_size 384 512 --num_steps 200000

python train_stereo.py --train_datasets middlebury_finetune --restore_ckpt ./checkpoints/middlebury_train.pth --image_size 384 768 --num_steps 100000

or

python train_stereo.py --train_datasets eth3d_train --restore_ckpt ./pretrained_models/igev_plusplus/sceneflow.pth --image_size 384 512 --num_steps 300000

python train_stereo.py --train_datasets eth3d_finetune --restore_ckpt ./checkpoints/eth3d_train.pth --image_size 384 512 --num_steps 100000

For IGEV++ submission to the KITTI benchmark, run

python save_disp.py

For RT-IGEV++ submission to the KITTI benchmark, run

python save_disp_rt.py

If you find our work useful in your research, please consider citing our papers:

@article{xu2024igev++,
  title={IGEV++: Iterative Multi-range Geometry Encoding Volumes for Stereo Matching},
  author={Xu, Gangwei and Wang, Xianqi and Zhang, Zhaoxing and Cheng, Junda and Liao, Chunyuan and Yang, Xin},
  journal={arXiv preprint arXiv:2409.00638},
  year={2024}
}

@inproceedings{xu2023iterative,
  title={Iterative Geometry Encoding Volume for Stereo Matching},
  author={Xu, Gangwei and Wang, Xianqi and Ding, Xiaohuan and Yang, Xin},
  booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},
  pages={21919--21928},
  year={2023}
}

This project is based on RAFT-Stereo, GMStereo, and CoEx. We thank the original authors for their excellent work.