======

This is a baseline framework for wrapping custom machine learning and deep learning models for the lending tabular dataset. The wrapper model has fit, predict, predict_proba and evaluate methods following the scikit-learn model API.

The model's tune_parameters method tunes selected model parameters using the Optuna library.

The wrapper validates the schema defining the data and the underlying model parameters before training and prediction using pydantic and pandera. This allows for property-based testing using hypothesis and runtime data validation.

This baseline framework can be extended to deep learning models developed using pytorch and pytorch-lightning. The performance of these models can be compared to models from scikit-learn.

The steps below assume that python, pip and virtualenv are installed on the system.

Clone repository:

git clone https://github.com/ganprad/ml-tdd-example.git

cd ml-tdd-example

Use conda and pip-tools:

Use conda to set up a python environment for CUDA and MKL optimized packages, and pip-tools to manage python dependencies.

To install with the CUDA toolkit: make conda-gpu

To install without CUDA: make conda-cpu

Use pip-tools to install python dependencies:

make pip-tools

Use virtualenv:

Set up the python environment:

On Linux and Mac:

virtualenv -p /usr/bin/python3 venv --prompt ml_wrapper

Activate the environment:

source ./venv/bin/activate

Install requirements:

pip install -r requirements/requirements.txt

Install the package:

pip install -e .

- The column `emp_length` contains `na` values. These are treated as not available and replaced with the value `-1.0`. `NaN` values are replaced with `-1.0` in both categorical and numerical columns.
- All `small business` variants in the column `purpose_cat` are assumed to represent the same small business category. For example, the column `purpose_cat` contains values equal to `small business` and `small business small business`. The preprocessing code changes `small business small business` values to `small business`. This increases the count of `small business`.
- Numerical columns are scaled using the `MinMaxScaler` class from scikit-learn.
- Categorical columns are converted to one-hot encoded columns using the scikit-learn `OneHotEncoder`.
- The input dataframe is split into a dataframe of features `X` and a 1D numpy array of target values `y`.
- Preprocessing functions are located in `utils.py`.
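The preprocessing steps listed above can be sketched roughly as follows. This is a minimal illustration on a toy frame, not the code in `utils.py`: the target column name `is_bad` and the toy values are assumptions, and the missing-value marker for the categorical column is written as the string `"-1.0"` so that the encoder sees a uniformly typed column.

```python
import numpy as np
import pandas as pd
from sklearn.preprocessing import MinMaxScaler, OneHotEncoder

# Toy frame; "is_bad" is a hypothetical target column name.
df = pd.DataFrame(
    {
        "emp_length": [1.0, np.nan, 10.0, 4.0],
        "purpose_cat": ["car", "small business small business", "small business", None],
        "is_bad": [0, 1, 0, 1],
    }
)

# Collapse the duplicated label into the single category.
df["purpose_cat"] = df["purpose_cat"].replace(
    "small business small business", "small business"
)

# Replace missing values with -1.0 (kept as a string in the categorical column).
df["emp_length"] = df["emp_length"].fillna(-1.0)
df["purpose_cat"] = df["purpose_cat"].fillna("-1.0")

# Split into a feature dataframe X and a 1D numpy array of targets y.
X = df.drop(columns=["is_bad"])
y = df["is_bad"].to_numpy()

# Scale the numerical column to [0, 1] and one-hot encode the categorical column.
X_num = MinMaxScaler().fit_transform(X[["emp_length"]])
X_cat = OneHotEncoder(handle_unknown="ignore").fit_transform(X[["purpose_cat"]]).toarray()
features = np.hstack([X_num, X_cat])
```

In this sketch the scaler and encoder are fit on the whole toy frame; in practice they would be fit on the training split only and reused at prediction time.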

Functionality:

- Model inherits from an abstract base class called `BaseWrapperModel`.
- The `BaseWrapperModel` is a template that defines the functional requirements of the model using abstract methods.
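A template of this kind might look like the sketch below. The method names follow the ones mentioned in this README; the signatures and the toy subclass `MajorityClassModel` are assumptions made for illustration and are not taken from the repository.

```python
from abc import ABC, abstractmethod
from collections import Counter


class BaseWrapperModel(ABC):
    """Template: subclasses must implement every abstract method below."""

    @abstractmethod
    def fit(self, X, y):
        """Train the underlying model."""

    @abstractmethod
    def predict(self, X):
        """Return one predicted label per row of X."""

    @abstractmethod
    def predict_proba(self, X):
        """Return a probability per row of X."""

    @abstractmethod
    def evaluate(self, X, y):
        """Return a score for predictions on X against y."""

    @abstractmethod
    def tune_parameters(self, X, y):
        """Return a dictionary of tuned parameters."""


class MajorityClassModel(BaseWrapperModel):
    """Hypothetical toy subclass that always predicts the most frequent label."""

    def fit(self, X, y):
        self.label_, top_count = Counter(y).most_common(1)[0]
        self.share_ = top_count / len(y)
        return self

    def predict(self, X):
        return [self.label_ for _ in X]

    def predict_proba(self, X):
        # Probability assigned to the majority label for every row.
        return [self.share_ for _ in X]

    def evaluate(self, X, y):
        # Accuracy of the constant prediction.
        preds = self.predict(X)
        return sum(p == t for p, t in zip(preds, y)) / len(y)

    def tune_parameters(self, X, y):
        # Nothing to tune in this toy model.
        return {}
```

Because every method is abstract, instantiating `BaseWrapperModel` directly raises a `TypeError`, and so does any subclass that leaves one of the methods unimplemented.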

Underlying model:

- `LogisticRegression` from the `scikit-learn` library is chosen as the underlying model.
- The baseline model has an elastic net penalty. This allows for adjusting `l1` regularization strength in relation to `l2` regularization.
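As a rough illustration of the elastic net setup, the sketch below fits a scikit-learn `LogisticRegression` on synthetic data. The dataset and the parameter values are assumptions chosen for the example; only the `saga` solver supports the elastic net penalty in scikit-learn, and `l1_ratio` sets the mix (0 is pure `l2`, 1 is pure `l1`).

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression

# Synthetic stand-in for the lending data (assumption for illustration).
X, y = make_classification(n_samples=200, n_features=8, random_state=0)

# Elastic net mixes l1 and l2 regularization; C is the inverse regularization strength.
model = LogisticRegression(
    penalty="elasticnet",
    solver="saga",
    l1_ratio=0.5,
    C=1.0,
    max_iter=1000,
)
model.fit(X, y)

# One row per sample, one column per class.
proba = model.predict_proba(X)
```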

Tuning parameters:

- Three model parameters can be tuned: `C`, `max_iter` and `l1_ratio`.
- Hyperparameters are defined as custom `pydantic` types.
- `OptunaSearchCV` is used for performing cross-validation during model training:
  - Hyperparameter sampling is done using `optuna.distributions`.
  - f1-score is used as the tuning objective.
  - The type of cross-validation is `RepeatedStratifiedKFold` when `n_repeats > 0`, otherwise it is `StratifiedKFold`.
  - After cross-validation the model is refit with the best parameters from the study and saved into the models directory.
- The output of `tune_parameters` is a dictionary containing the best search parameters and corresponding scores.
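The choice of cross-validation splitter described above can be sketched with scikit-learn alone. The helper name `make_cv`, its defaults and the synthetic data are assumptions, and the Optuna search itself is left out: the sketch only shows the splitter selection and f1 scoring that such a search would plausibly be configured with.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import (
    RepeatedStratifiedKFold,
    StratifiedKFold,
    cross_val_score,
)


def make_cv(n_splits=5, n_repeats=0, seed=0):
    """Hypothetical helper: repeated stratified folds only when n_repeats > 0."""
    if n_repeats > 0:
        return RepeatedStratifiedKFold(
            n_splits=n_splits, n_repeats=n_repeats, random_state=seed
        )
    return StratifiedKFold(n_splits=n_splits, shuffle=True, random_state=seed)


# Synthetic stand-in for the lending data (assumption for illustration).
X, y = make_classification(n_samples=200, n_features=8, random_state=0)

# 5 splits repeated twice gives 10 f1 scores.
scores = cross_val_score(
    LogisticRegression(max_iter=1000),
    X,
    y,
    cv=make_cv(n_splits=5, n_repeats=2),
    scoring="f1",
)
```

Repeating the stratified split reduces the variance of the score estimate at the cost of proportionally more model fits.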

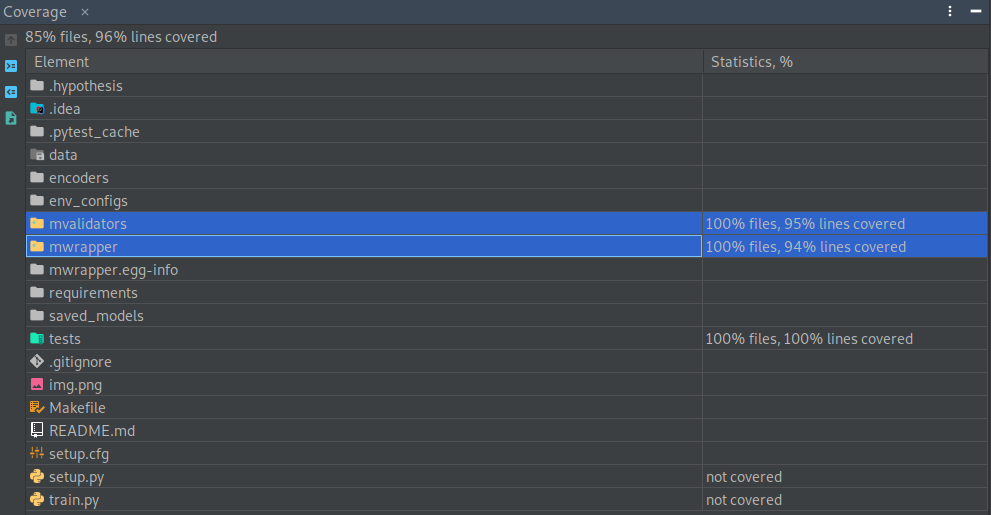

Hypothesis was used for writing parametrized schema tests in combination with pydantic and pandera.

The model inputs are validated by schemas defined using pydantic. The dataset features have to be checked to ensure that the model makes predictions on data that originates from the same data generating process as the training data.
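A pydantic schema for the tunable parameters could look like the sketch below. The class name `LogisticRegressionParams`, the defaults and the bounds are assumptions for illustration; the repository defines its own custom types, which may differ.

```python
from pydantic import BaseModel, Field, ValidationError


class LogisticRegressionParams(BaseModel):
    """Hypothetical parameter schema; bounds are illustrative only."""

    C: float = Field(default=1.0, gt=0)                # inverse regularization strength
    max_iter: int = Field(default=100, gt=0)           # solver iteration budget
    l1_ratio: float = Field(default=0.5, ge=0, le=1)   # elastic net mixing weight


def validate_params(raw):
    """Return a validated parameter object, or None if validation fails."""
    try:
        return LogisticRegressionParams(**raw)
    except ValidationError:
        return None


good = validate_params({"C": 0.1, "max_iter": 200, "l1_ratio": 0.3})
bad = validate_params({"l1_ratio": 1.5})  # outside [0, 1], so rejected
```

Validating before training means that an out-of-range value fails fast with a descriptive error instead of surfacing later inside the solver.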

The pandera library makes it possible to do schema validation in combination with statistical validation. It also allows for synthesising data for testing.

References:
