Official PyTorch implementation of "Learning to Detect Multi-class Anomalies with Just One Normal Image Prompt", accepted by ECCV 2024.

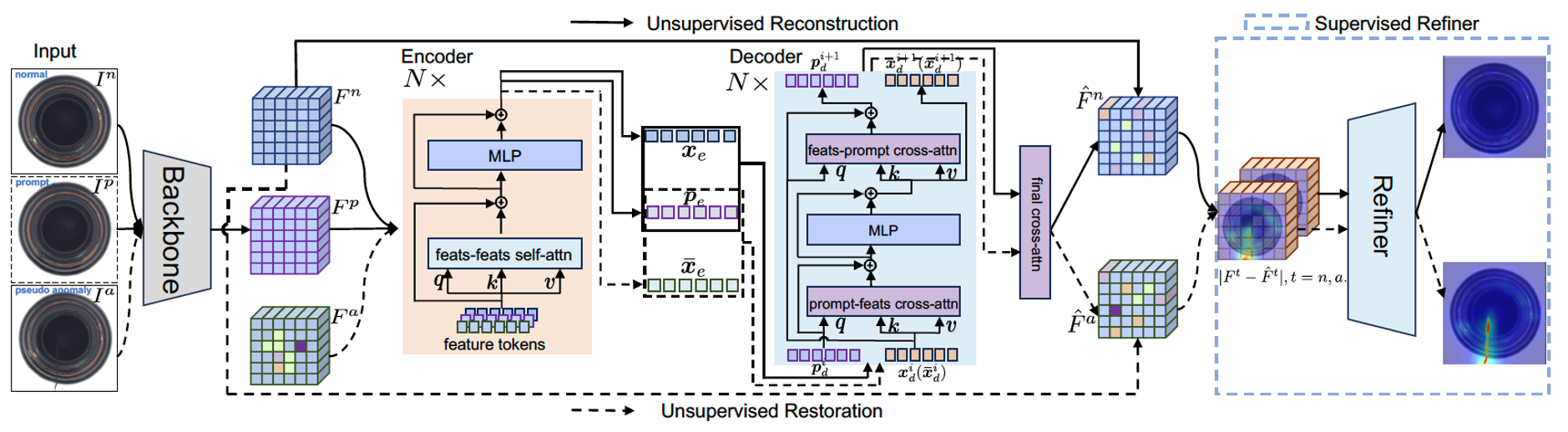

OneNIP consists of three main components: Unsupervised Reconstruction, Unsupervised Restoration, and a Supervised Refiner. Unsupervised Reconstruction and Unsupervised Restoration share the same encoder-decoder architecture and weights. The Supervised Refiner is implemented with two transposed convolution blocks, each followed by a 1×1 convolution layer.
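
The kernel size and stride of the refiner's transposed convolutions are not stated here. The sketch below assumes kernel 2, stride 2, and no padding (a common upsampling configuration, not confirmed by the source) and applies PyTorch's documented output-size formulas for `ConvTranspose2d` and `Conv2d` to show how the spatial resolution would evolve: each transposed convolution doubles H and W, while each 1×1 convolution leaves them unchanged. The 14×14 starting grid and all helper names are hypothetical.

```python
def conv_transpose2d_out(size, kernel_size, stride=1, padding=0,
                         output_padding=0, dilation=1):
    """Output H (or W) of a transposed convolution (PyTorch ConvTranspose2d formula)."""
    return ((size - 1) * stride - 2 * padding
            + dilation * (kernel_size - 1) + output_padding + 1)


def conv2d_out(size, kernel_size, stride=1, padding=0, dilation=1):
    """Output H (or W) of a regular convolution (PyTorch Conv2d formula)."""
    return (size + 2 * padding - dilation * (kernel_size - 1) - 1) // stride + 1


def refiner_out_size(size, num_blocks=2, kernel_size=2, stride=2):
    """Spatial size after the refiner, ASSUMING each block is a transposed
    conv (kernel 2, stride 2, no padding) followed by a 1x1 conv."""
    for _ in range(num_blocks):
        size = conv_transpose2d_out(size, kernel_size, stride=stride)  # doubles
        size = conv2d_out(size, kernel_size=1)  # 1x1 conv keeps H and W
    return size


print(refiner_out_size(14))  # 56
```

Under these assumptions the two blocks give a 4× upsampling of the error map; different kernel/stride choices would change the factor.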

- Unsupervised Reconstruction reconstructs normal tokens;

- Unsupervised Restoration restores pseudo anomaly tokens to the corresponding normal tokens;

- Supervised Refiner refines reconstruction/restoration errors to achieve more accurate anomaly segmentation.
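
As a rough illustration of the errors the refiner consumes, the sketch below computes a per-token error as the L2 distance between each input token and its reconstruction (or restoration). This distance is an assumption modeled on feature-reconstruction methods such as UniAD, not a statement of OneNIP's exact error definition; `token_error_map` is a hypothetical helper, and the learned Supervised Refiner itself is not modeled.

```python
import math


def token_error_map(features, reconstructed):
    """Per-token L2 distance between input tokens and their reconstructions.

    features, reconstructed: H x W grids (nested lists) of C-dim vectors.
    Returns an H x W grid of non-negative errors; larger means more anomalous.
    """
    return [
        [math.dist(f, r) for f, r in zip(row_f, row_r)]
        for row_f, row_r in zip(features, reconstructed)
    ]


# Hypothetical 2x2 grid of 2-dim tokens.
normal = [[[1.0, 0.0], [0.0, 1.0]],
          [[1.0, 1.0], [0.0, 0.0]]]
# The bottom-right token is reconstructed badly, so its error is large.
recon = [[[1.0, 0.0], [0.0, 1.0]],
         [[1.0, 1.0], [3.0, 4.0]]]
print(token_error_map(normal, recon))  # [[0.0, 0.0], [0.0, 5.0]]
```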

All pre-trained model weights are stored in Google Drive.

| Dataset | Input Resolution | I-AUROC | P-AUROC | P-AUAP | checkpoints-v2 | Test-Log |
|---|---|---|---|---|---|---|
| MVTec | 224 | 98.0 | 97.9 | 63.9 | model weight | testlog |
| MVTec | 256 | 97.9 | 97.9 | 64.8 | model weight | testlog |
| MVTec | 320 | 98.4 | 98.0 | 66.7 | model weight | testlog |
| VisA | 224 | 92.8 | 98.7 | 42.5 | model weight | testlog |
| VisA | 256 | 93.4 | 98.9 | 44.9 | model weight | testlog |
| VisA | 320 | 94.8 | 98.9 | 46.1 | model weight | testlog |
| BTAD | 224 | 93.2 | 97.4 | 56.3 | model weight | testlog |
| BTAD | 256 | 95.2 | 97.6 | 57.7 | model weight | testlog |
| BTAD | 320 | 96.0 | 97.8 | 58.6 | model weight | testlog |
| MVTec+VisA+BTAD | 224 | 94.6 | 98.0 | 53.5 | model weight | testlog |
| MVTec+VisA+BTAD | 256 | 94.9 | 98.0 | 53.1 | model weight | testlog |
| MVTec+VisA+BTAD | 320 | 95.6 | 97.9 | 54.1 | model weight | testlog |
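
I-AUROC is the image-level AUROC (one anomaly score per image) and P-AUROC the pixel-level AUROC (every pixel treated as a sample). A minimal sketch of AUROC via its rank interpretation, the probability that a randomly chosen anomalous sample scores higher than a randomly chosen normal one (ties count as one half), is shown below. It is O(P·N) and only illustrates the metric; it is not the repository's evaluation code, and for pixel-level evaluation a library routine such as scikit-learn's `roc_auc_score` is the practical choice.

```python
def auroc(scores, labels):
    """AUROC via the Mann-Whitney U statistic.

    scores: anomaly scores (higher = more anomalous).
    labels: 1 for anomalous, 0 for normal.
    """
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one anomalous and one normal sample")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0   # anomalous ranked above normal
            elif p == n:
                wins += 0.5   # tie counts as half
    return wins / (len(pos) * len(neg))


print(auroc([0.9, 0.8, 0.3, 0.1], [1, 0, 1, 0]))  # 0.75
```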

Download the MVTec, BTAD, VisA, and DTD datasets, unzip them, and move them to ./data. The data directory should be organized as follows:

    data
    ├── btad
    │   ├── 01
    │   ├── 02
    │   ├── 03
    │   ├── test.json
    │   └── train.json
    ├── dtd
    │   ├── images
    │   ├── imdb
    │   └── labels
    ├── mvtec
    │   ├── bottle
    │   ├── cable
    │   ├── ...
    │   ├── zipper
    │   ├── test.json
    │   └── train.json
    ├── mvtec+btad+visa
    │   ├── 01
    │   ├── bottle
    │   ├── ...
    │   ├── zipper
    │   ├── test.json
    │   └── train.json
    └── visa
        ├── candle
        ├── capsules
        ├── ...
        ├── pipe_fryum
        ├── test.json
        └── train.json
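
Before training or evaluation, a quick check that the layout matches can save a failed run. The sketch below is hypothetical (neither `missing_entries` nor `EXPECTED` is part of the repository) and checks only the entries listed explicitly in the tree; categories hidden behind `...` are not checked.

```python
from pathlib import Path

# Entries listed explicitly in the directory tree; "..." categories are skipped.
EXPECTED = {
    "btad": ["01", "02", "03", "test.json", "train.json"],
    "dtd": ["images", "imdb", "labels"],
    "mvtec": ["bottle", "cable", "zipper", "test.json", "train.json"],
    "mvtec+btad+visa": ["01", "bottle", "zipper", "test.json", "train.json"],
    "visa": ["candle", "capsules", "pipe_fryum", "test.json", "train.json"],
}


def missing_entries(root="./data"):
    """Return expected paths (relative to root) that do not exist on disk."""
    root = Path(root)
    return [
        f"{dataset}/{name}"
        for dataset, names in EXPECTED.items()
        for name in names
        if not (root / dataset / name).exists()
    ]


if __name__ == "__main__":
    missing = missing_entries()
    print("data layout OK" if not missing else "missing:\n" + "\n".join(missing))
```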

Download the pre-trained checkpoints-v2 to ./checkpoints-v2, then run evaluation:

    cd ./exps
    bash eval_onenip.sh 8 0,1,2,3,4,5,6,7

To train OneNIP:

    cd ./exps
    bash train_onenip.sh 8 0,1,2,3,4,5,6,7
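
Both launchers take two positional arguments. Judging from the examples, the first appears to be the number of GPUs and the second the comma-separated CUDA device IDs; this reading is an assumption, so check `eval_onenip.sh` and `train_onenip.sh` before relying on it. The hypothetical Python wrapper below builds the same command line for an arbitrary list of GPU IDs and runs it from `./exps`.

```python
import subprocess


def onenip_command(script, gpu_ids):
    """Build the argument list for an exps/*.sh launcher.

    ASSUMPTION: arg 1 = number of GPUs, arg 2 = comma-separated CUDA device
    IDs, as suggested by `bash eval_onenip.sh 8 0,1,2,3,4,5,6,7`.
    """
    ids = [str(i) for i in gpu_ids]
    if not ids:
        raise ValueError("need at least one GPU id")
    return ["bash", script, str(len(ids)), ",".join(ids)]


def run(script, gpu_ids, exps_dir="./exps"):
    """Run the launcher from inside exps_dir (equivalent to `cd ./exps`)."""
    subprocess.run(onenip_command(script, gpu_ids), cwd=exps_dir, check=True)
```

For example, `run("eval_onenip.sh", range(8))` would reproduce the evaluation command above. Whether the scripts support fewer GPUs (e.g. `run("train_onenip.sh", [0])`) has not been verified here.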

If you find this code useful in your research, please consider citing us:

    @inproceedings{gao2024onenip,
      title={Learning to Detect Multi-class Anomalies with Just One Normal Image Prompt},
      author={Gao, Bin-Bin},
      booktitle={18th European Conference on Computer Vision (ECCV 2024)},
      pages={-},
      year={2024}
    }

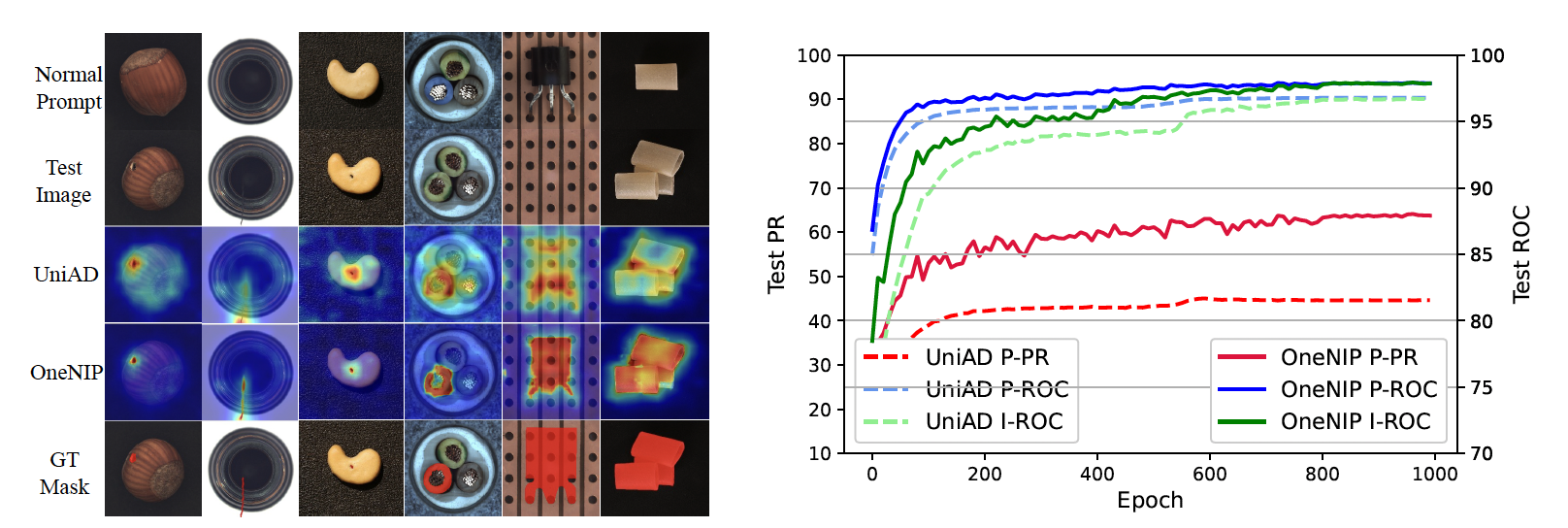

OneNIP is built on UniAD. We thank the authors of UniAD for open-sourcing their implementation!