Show Me When and Where: Towards Referring Video Object Segmentation in the Wild

1. Overview, 2. Benchmark, 3. Baseline (OMFormer)

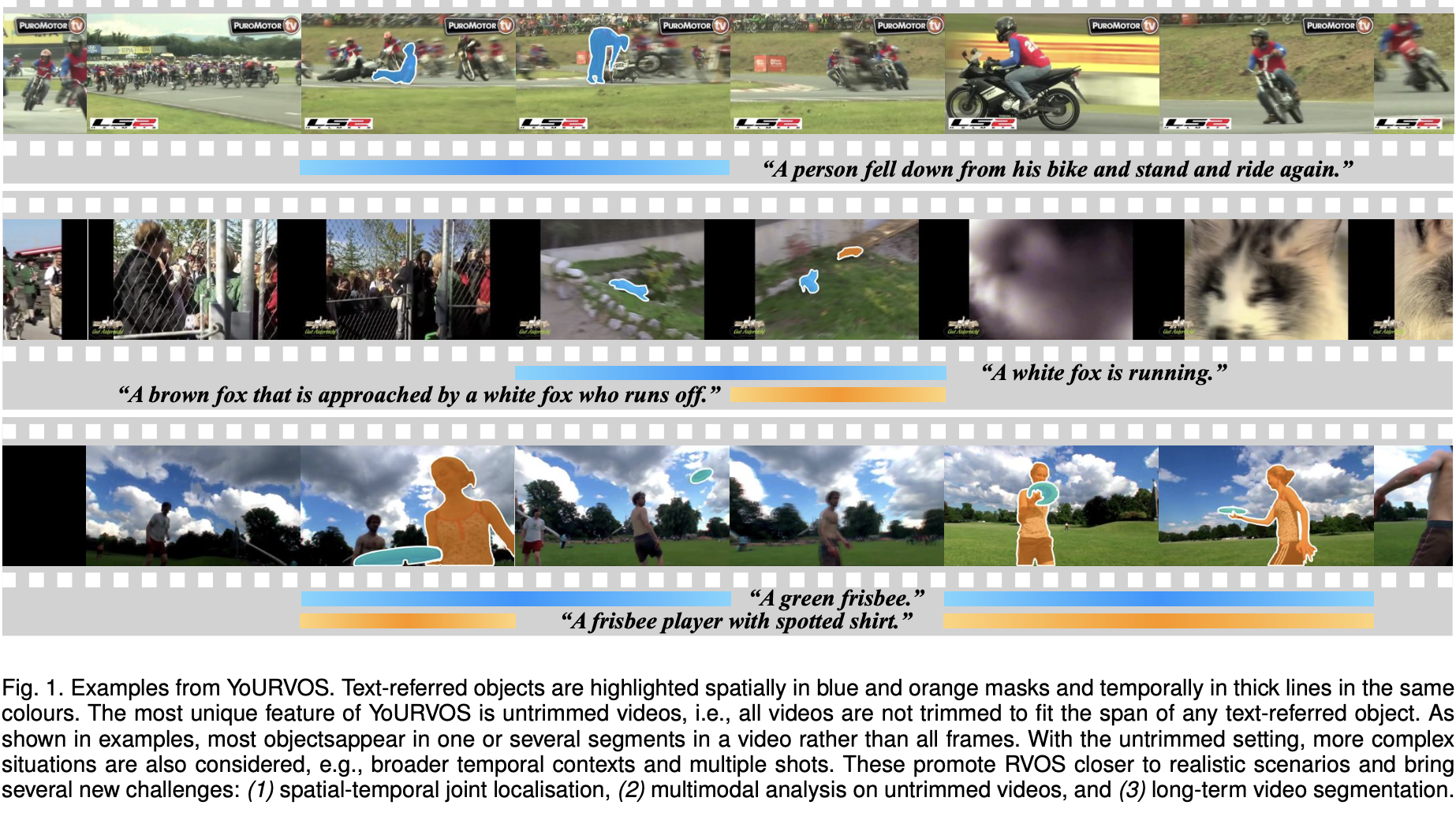

YoURVOS (Youtube Untrimmed Referring Video Object Segmentation) is a benchmark designed to close the gap between Referring Video Object Segmentation (RVOS) research and realistic scenarios. Previous RVOS benchmarks use trimmed videos, so the text-referred objects are always present. YoURVOS is built from untrimmed videos, so a target can appear at any time and anywhere in a video. This poses a great challenge to RVOS methods, which must show not only where but also when the objects appear.
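To make the "when" and "where" distinction concrete, here is a minimal sketch. It assumes a prediction is stored as one binary mask per frame, with an all-zero mask meaning the target is absent. The names `Mask`, `is_present`, and `presence_spans` are illustrative and are not part of the released code.

```python
from typing import List, Tuple

# Assumption: a mask is a list of rows of 0/1 values, one mask per frame.
Mask = List[List[int]]


def is_present(mask: Mask) -> bool:
    """The target is 'present' in a frame if any pixel of its mask is set."""
    return any(any(row) for row in mask)


def presence_spans(masks: List[Mask]) -> List[Tuple[int, int]]:
    """Return inclusive (start, end) frame intervals where the target is present.

    The masks answer "where"; the returned spans answer "when".
    """
    spans: List[Tuple[int, int]] = []
    start = None
    for t, mask in enumerate(masks):
        if is_present(mask):
            if start is None:
                start = t  # a new span opens at this frame
        elif start is not None:
            spans.append((start, t - 1))  # the span closed on the previous frame
            start = None
    if start is not None:
        spans.append((start, len(masks) - 1))  # span runs to the last frame
    return spans


empty = [[0, 0], [0, 0]]
hit = [[0, 1], [0, 0]]
print(presence_spans([empty, hit, hit, empty, hit]))  # [(1, 2), (4, 4)]
```

In a trimmed benchmark the result would typically be a single span covering the whole clip. In an untrimmed video, most frames may fall outside every span.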

| Method | Venue | Backbone | J&F | J | F | tIoU |
| --- | --- | --- | --- | --- | --- | --- |
| ReferFormer | CVPR 2022 | ResNet-50 | 12.0 | 12.1 | 11.9 | 32.2 |
| | | ResNet-101 | 22.4 | 22.4 | 22.4 | 33.4 |
| | | Swin-T | 22.6 | 22.7 | 22.6 | 34.1 |
| | | Swin-L | 24.9 | 24.6 | 25.2 | 34.4 |
| | | V-Swin-T | 23.0 | 22.8 | 23.1 | 33.7 |
| | | V-Swin-B | 24.6 | 24.3 | 24.8 | 34.5 |
| LBDT | CVPR 2022 | ResNet-50 | 14.6 | 14.6 | 14.5 | 32.6 |
| MTTR | CVPR 2022 | V-Swin-T | 21.4 | 21.3 | 21.6 | 33.6 |
| UNINEXT | CVPR 2023 | ResNet-50 | 23.1 | 22.9 | 23.3 | 32.6 |
| | | Conv-L | 24.2 | 23.9 | 24.5 | 32.6 |
| | | ViT-L | 24.8 | 24.4 | 25.2 | 32.6 |
| R2VOS | ICCV 2023 | ResNet-50 | 24.9 | 25.0 | 24.9 | 35.3 |
| LMPM | ICCV 2023 | Swin-T | 13.0 | 12.8 | 13.3 | 21.9 |
| DEVA | ICCV 2023 | Swin-L | 21.9 | 21.6 | 22.2 | 33.6 |
| OnlineRefer | ICCV 2023 | ResNet-50 | 22.5 | 22.4 | 22.5 | 33.8 |
| | | Swin-L | 25.0 | 24.4 | 25.6 | 34.9 |
| SgMg | ICCV 2023 | V-Swin-T | 24.3 | 24.1 | 24.5 | 34.4 |
| | | V-Swin-B | 25.3 | 25.1 | 25.5 | 34.7 |
| SOC | NeurIPS 2023 | V-Swin-T | 23.5 | 23.2 | 23.8 | 34.4 |
| | | V-Swin-B | 24.2 | 23.8 | 24.6 | 33.6 |
| MUTR | AAAI 2024 | ResNet-50 | 22.4 | 22.3 | 22.6 | 33.3 |
| | | ResNet-101 | 23.3 | 23.1 | 23.4 | 33.7 |
| | | Swin-L | 26.2 | 25.9 | 26.5 | 35.1 |
| | | V-Swin-T | 23.2 | 23.1 | 23.4 | 33.5 |
| | | V-Swin-B | 25.7 | 25.5 | 26.0 | 34.6 |
| OMFormer | Ours 2024 | ResNet-50 | 33.7 | 33.6 | 33.8 | 44.9 |
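For readers new to the metrics in the table, the sketch below illustrates how J (region similarity), tIoU (temporal IoU), and the combined J&F score are commonly computed. It is an illustration under stated assumptions, not the official evaluation code: the reported numbers come from `benchmark.py` and `tiou.py`. The function names, the list-of-rows mask format, and the convention that two empty inputs score 1.0 are all assumptions. F (boundary accuracy) is omitted because it requires contour matching.

```python
from typing import List, Set

Mask = List[List[int]]  # assumption: binary mask as rows of 0/1 values


def jaccard(pred: Mask, gt: Mask) -> float:
    """Region similarity J: intersection over union of two binary masks."""
    inter = union = 0
    for pred_row, gt_row in zip(pred, gt):
        for p, g in zip(pred_row, gt_row):
            inter += 1 if (p and g) else 0
            union += 1 if (p or g) else 0
    # Assumption: two empty masks count as a perfect match.
    return 1.0 if union == 0 else inter / union


def temporal_iou(pred_frames: Set[int], gt_frames: Set[int]) -> float:
    """tIoU: IoU between the frame indices predicted vs. annotated as present."""
    union = pred_frames | gt_frames
    if not union:
        return 1.0  # assumption: target never present and never predicted
    return len(pred_frames & gt_frames) / len(union)


def j_and_f(j: float, f: float) -> float:
    """J&F is the mean of region similarity J and boundary accuracy F."""
    return (j + f) / 2


print(jaccard([[1, 1], [0, 0]], [[1, 0], [0, 0]]))  # 0.5
print(temporal_iou({1, 2, 3}, {2, 3, 4, 5}))        # 0.4
print(round(j_and_f(25.9, 26.5), 1))                # 26.2
```

The last line reproduces the J&F entry of one table row (J = 25.9, F = 26.5) as the mean of its J and F columns.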

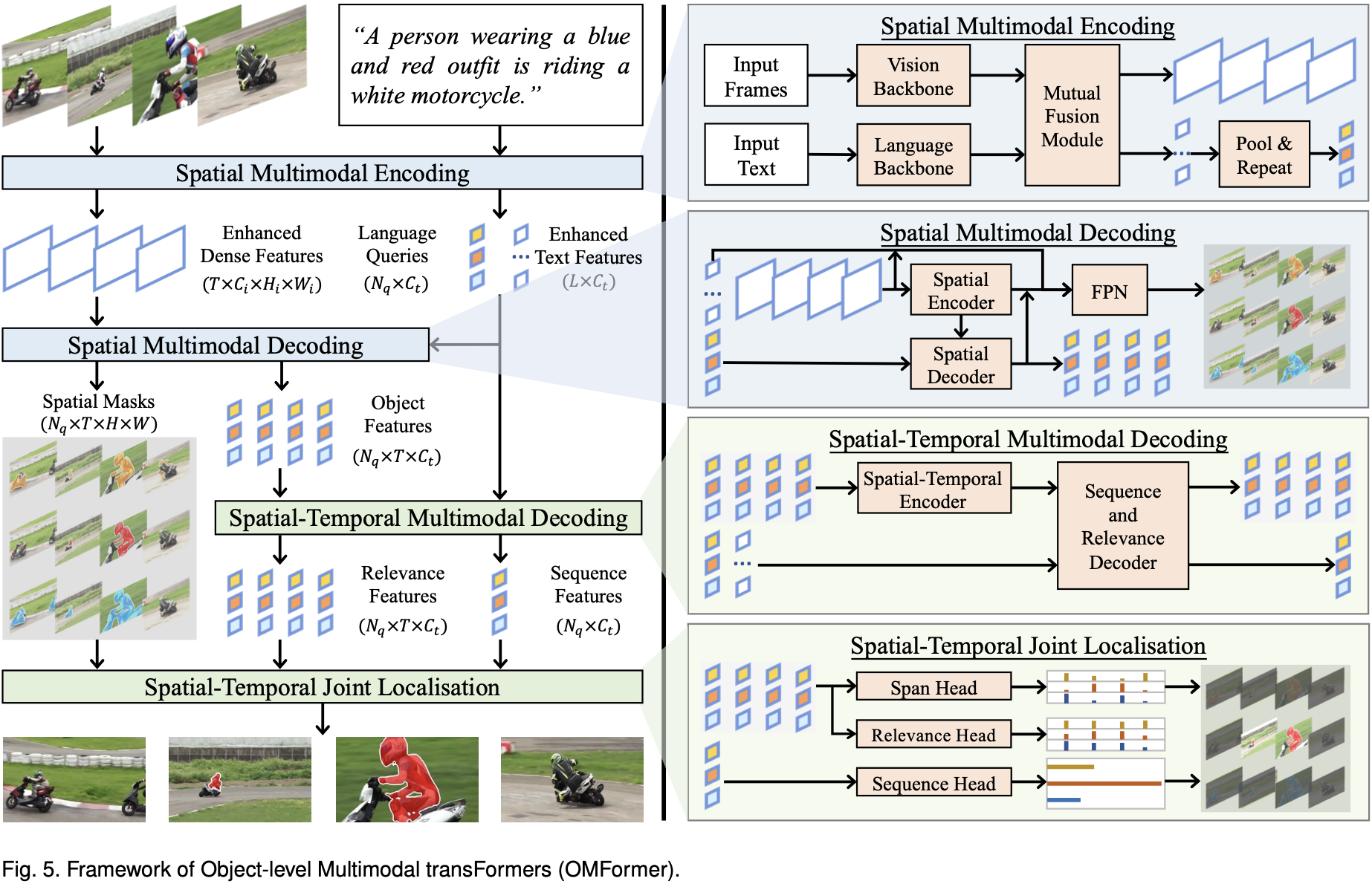

Object-level Multimodal transFormers (OMFormer) for RVOS.

The code was tested with Python 3.9, PyTorch 1.10.1, and CUDA 11.3.

    cd baseline
    pip install -r requirements.txt
    cd models/ops
    python setup.py build install
    cd ../..

    python inference_yourvos.py \
      --freeze_text_encoder \
      --output_dir [path to output] \
      --resume [path to checkpoint]/omformer_r50.pth \
      --ngpu [number of gpus] \
      --batch_size 1 \
      --backbone resnet50 \
      --yourvos_path [path to YoURVOS]

Checkpoint (on Hugging Face 🤗): omformer_r50.pth

YoURVOS test videos (on Hugging Face 🤗): YoURVOS
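When running inference over several output directories or checkpoints, it can help to assemble the command programmatically. The sketch below uses only the flags shown in the inference command. The helper name `build_inference_cmd` and the example paths are hypothetical placeholders, not part of the repository.

```python
import shlex
from typing import List


def build_inference_cmd(
    output_dir: str,
    checkpoint_dir: str,
    yourvos_path: str,
    ngpu: int = 1,
) -> List[str]:
    """Build the argument list for inference_yourvos.py (hypothetical helper)."""
    return [
        "python", "inference_yourvos.py",
        "--freeze_text_encoder",
        "--output_dir", output_dir,
        "--resume", f"{checkpoint_dir}/omformer_r50.pth",
        "--ngpu", str(ngpu),
        "--batch_size", "1",
        "--backbone", "resnet50",
        "--yourvos_path", yourvos_path,
    ]


# Placeholder paths for illustration only.
cmd = build_inference_cmd("out", "ckpt", "data/YoURVOS", ngpu=2)
print(shlex.join(cmd))
```

The resulting list can be passed to `subprocess.run(cmd, check=True)` from inside the `baseline` directory. Passing a list rather than a single shell string avoids quoting problems with paths that contain spaces.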

    cd evaluation/vos-benchmark

    # J&F
    python benchmark.py -g [path to gt] -m [path to predicts] --do_not_skip_first_and_last_frame

    # tIoU
    python tiou.py [path to predicts] spans.txt