Fast and accurate open-vocabulary end-to-end object detection

- 03/25/2024: Inference code and a pretrained OmDet-Turbo-Tiny model released.

- 03/12/2024: GitHub open-source project created.

If you are interested in our research, you are welcome to explore our other projects:

🔆 How to Evaluate the Generalization of Detection? A Benchmark for Comprehensive Open-Vocabulary Detection (AAAI24) 🏠 GitHub Repository

🔆 OmDet: Large-scale vision-language multi-dataset pre-training with multimodal detection network (IET Computer Vision)

This repository is the official PyTorch implementation for OmDet-Turbo, a fast transformer-based open-vocabulary object detection model.

⭐️Highlights

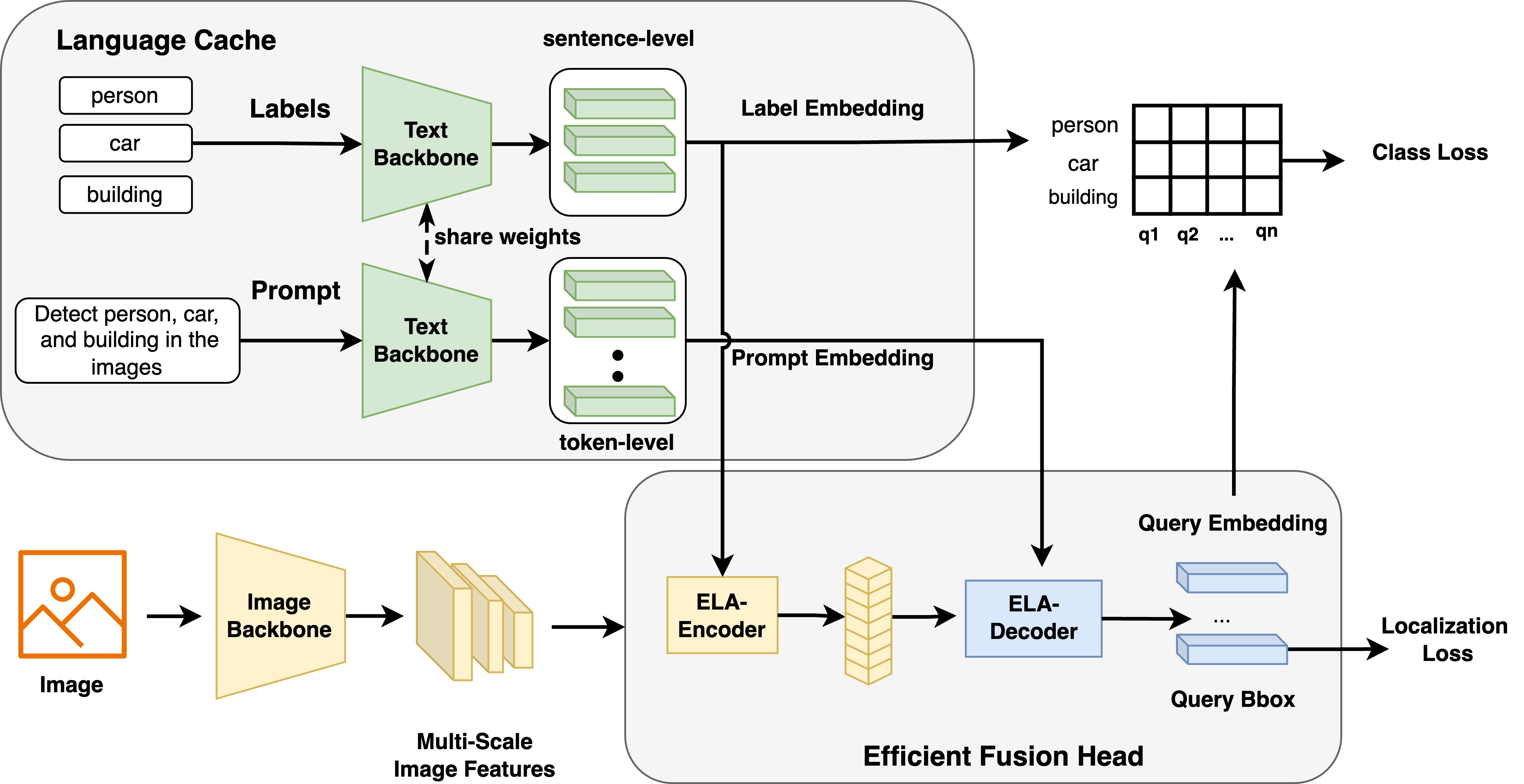

- OmDet-Turbo is a transformer-based real-time open-vocabulary detector that combines strong open-vocabulary detection (OVD) capabilities with fast inference. It targets efficient detection in open-vocabulary scenarios without sacrificing detection performance.

- We introduce the Efficient Fusion Head, a fast multimodal fusion module designed to lighten the computational load on the encoder and to cut the time spent in the ROI-based head.

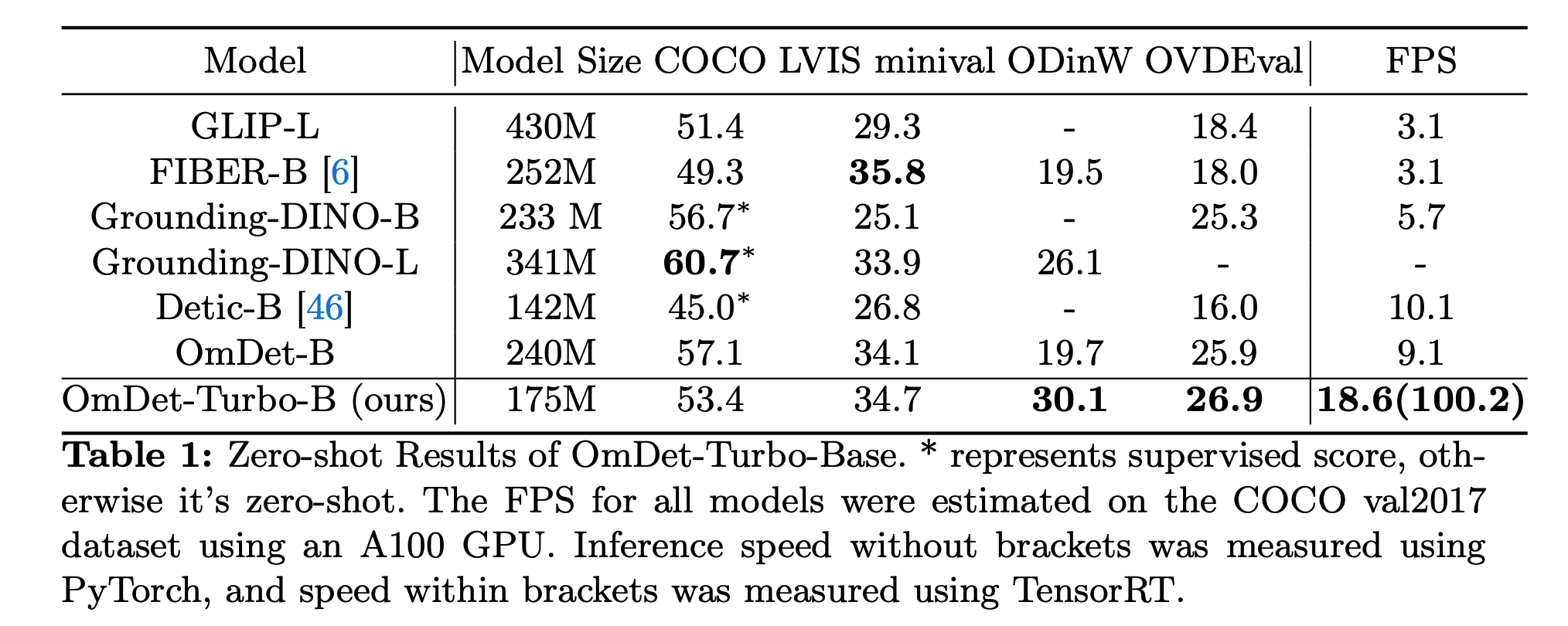

- The OmDet-Turbo-Base model achieves state-of-the-art zero-shot performance on the ODinW and OVDEval datasets, with AP scores of 30.1 and 26.86, respectively.

- The inference speed of OmDet-Turbo-Base on the COCO val2017 dataset reaches 100.2 FPS on an A100 GPU.

For more details, check out our paper, Real-time Transformer-based Open-Vocabulary Detection with Efficient Fusion Head.

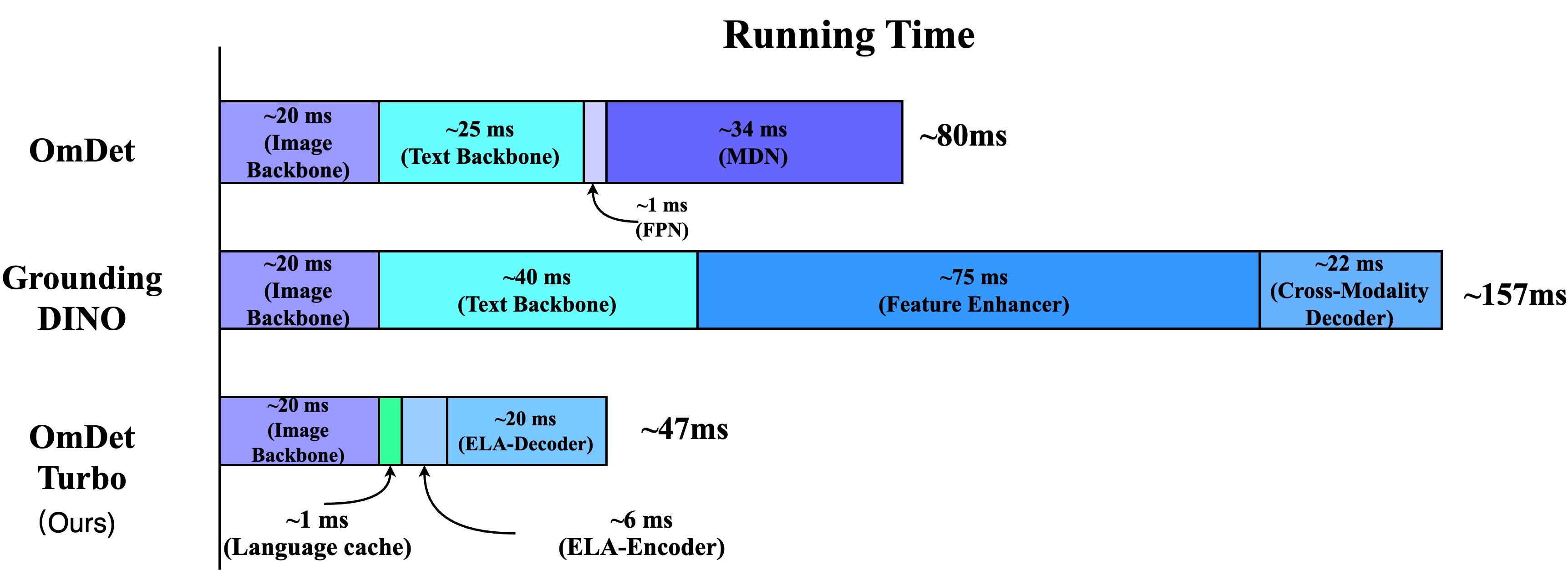

Comparison of inference speeds for each component in the tiny-size model.

Follow the Installation Instructions to set up the environment for OmDet-Turbo.

- Download our pretrained model and the CLIP checkpoints.

- Create a folder named `resources` and put the downloaded models in it.

- Run `run_demo.py`; the images with the predicted results are saved in the `./outputs` folder.
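The folder setup in the steps above can be sketched in a few lines of Python. This is only an illustrative helper, not part of the repository: the function name `prepare_workspace` is hypothetical, and creating `outputs` in advance is an assumption (`run_demo.py` may well create it on its own). Only the folder names `resources` and `outputs` come from the steps above.

```python
from pathlib import Path


def prepare_workspace(root="."):
    """Create the folders named in the quick-start steps and list files already in resources/."""
    root = Path(root)
    resources = root / "resources"   # holds the downloaded model and CLIP checkpoints
    outputs = root / "outputs"       # run_demo.py saves images with predictions here
    resources.mkdir(parents=True, exist_ok=True)
    outputs.mkdir(parents=True, exist_ok=True)
    # Sorted names of the regular files placed in resources/ so far
    found = sorted(p.name for p in resources.iterdir() if p.is_file())
    return resources, outputs, found


if __name__ == "__main__":
    resources, outputs, found = prepare_workspace()
    if found:
        print(f"Found {len(found)} file(s) in {resources}: {found}")
    else:
        print(f"{resources} is empty; download the checkpoints into it before running run_demo.py")
```

Running the helper before `run_demo.py` gives a quick check that the checkpoints were placed where the demo expects them.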

`run_demo.py` already uses a language cache during inference. For more details, see the `run_demo.py` script.

Performance on COCO and LVIS is evaluated under the zero-shot setting.
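The general idea behind a language cache is that the text embedding of each label is computed once and then reused across images. The sketch below illustrates that idea only; it is not taken from `run_demo.py`, and the names `LabelEmbeddingCache` and `toy_encoder`, as well as the case-insensitive key, are assumptions made for the example.

```python
from typing import Callable, Dict, List, Sequence


class LabelEmbeddingCache:
    """Memoize text embeddings so each distinct label is encoded only once."""

    def __init__(self, encode_fn: Callable[[str], List[float]]):
        self._encode_fn = encode_fn              # hypothetical text encoder
        self._cache: Dict[str, List[float]] = {}
        self.misses = 0                          # number of real encoder calls

    def get(self, label: str) -> List[float]:
        key = label.strip().lower()              # assumption: labels are case-insensitive
        if key not in self._cache:
            self.misses += 1
            self._cache[key] = self._encode_fn(key)
        return self._cache[key]

    def get_many(self, labels: Sequence[str]) -> List[List[float]]:
        return [self.get(label) for label in labels]


def toy_encoder(text: str) -> List[float]:
    """Stand-in encoder for illustration only; a real text model would go here."""
    return [float(len(text)), float(sum(map(ord, text)) % 97)]


if __name__ == "__main__":
    cache = LabelEmbeddingCache(toy_encoder)
    cache.get_many(["cat", "dog", "person"])
    cache.get_many(["dog", "Cat", "bicycle"])    # only "bicycle" triggers a new encoder call
    print("encoder calls:", cache.misses)
```

With a fixed label set, the encoder cost is paid once up front, which matters for real-time use because the text branch no longer runs on every frame.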

| Model | Backbone | Pre-Train Data | COCO | LVIS | FPS (pytorch/trt) | Weight |
|---|---|---|---|---|---|---|
| OmDet-Turbo-Tiny | Swin-T | O365,GoldG | 42.5 | 30.3 | 21.5/140.0 | weight |
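FPS figures depend on how throughput is timed. The sketch below shows one common way to measure it: warm up, then time a fixed number of iterations. It is not necessarily the protocol behind the numbers in the table. The function name `measure_fps` and its parameters are hypothetical; when timing GPU inference with PyTorch, `torch.cuda.synchronize` could be passed as `sync` so that queued kernels are included in the measurement.

```python
import time


def measure_fps(run_once, n_warmup=10, n_iters=100, sync=None):
    """Return iterations per second of run_once, measured after a warm-up phase."""
    for _ in range(n_warmup):
        run_once()                  # warm-up runs are excluded from timing
    if sync is not None:
        sync()                      # wait for pending asynchronous work before starting the clock
    start = time.perf_counter()
    for _ in range(n_iters):
        run_once()
    if sync is not None:
        sync()                      # make sure all timed work has finished
    elapsed = time.perf_counter() - start
    return n_iters / elapsed


if __name__ == "__main__":
    # Dummy workload standing in for a single-image forward pass
    fps = measure_fps(lambda: sum(i * i for i in range(10_000)))
    print(f"{fps:.1f} iterations per second")
```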

Please consider citing our papers if you use our projects:

@article{zhao2024real,
  title={Real-time Transformer-based Open-Vocabulary Detection with Efficient Fusion Head},
  author={Zhao, Tiancheng and Liu, Peng and He, Xuan and Zhang, Lu and Lee, Kyusong},
  journal={arXiv preprint arXiv:2403.06892},
  year={2024}
}

@article{zhao2024omdet,
  title={OmDet: Large-scale vision-language multi-dataset pre-training with multimodal detection network},
  author={Zhao, Tiancheng and Liu, Peng and Lee, Kyusong},
  journal={IET Computer Vision},
  year={2024},
  publisher={Wiley Online Library}
}