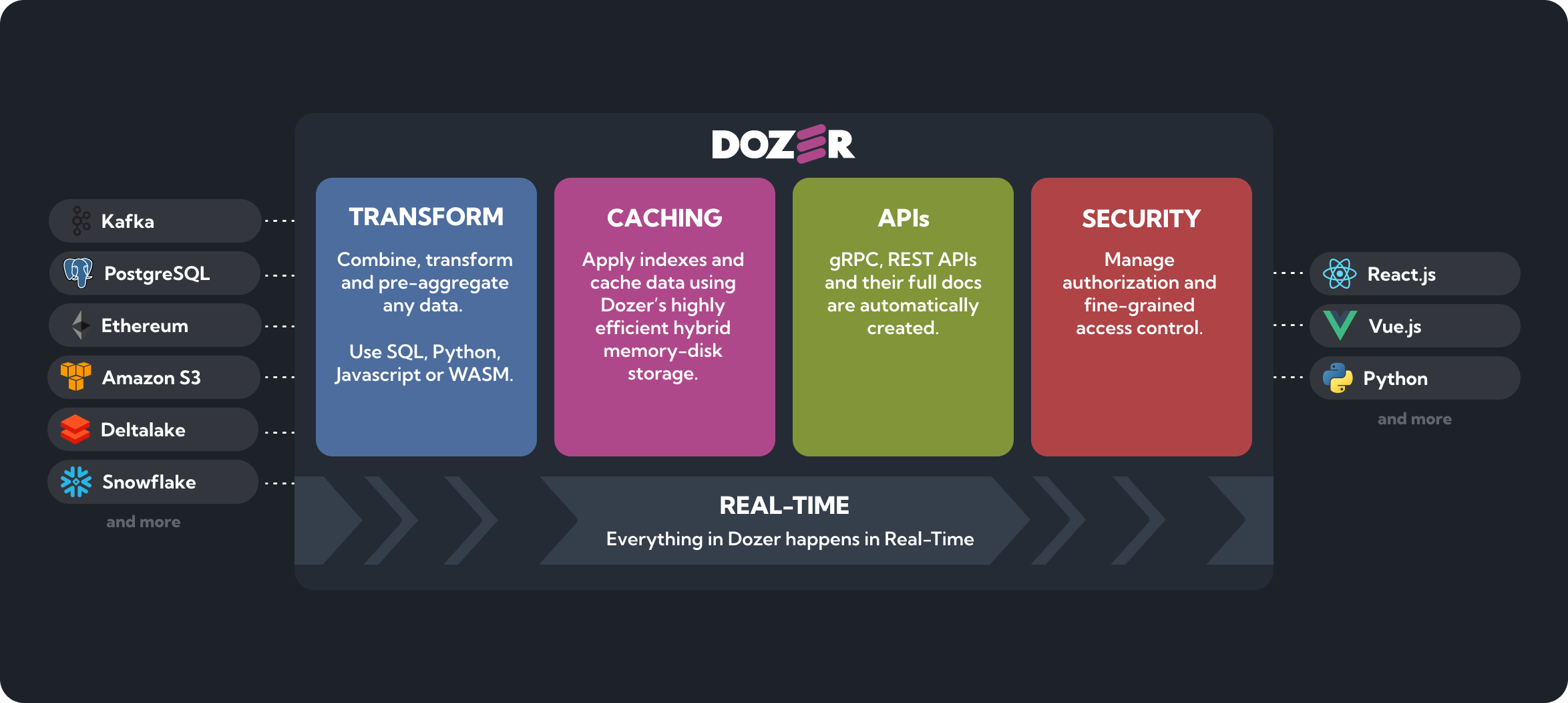

Connect any data source, combine them in real-time and instantly get low-latency data APIs.

⚡ All with just a simple configuration! ⚡️

Dozer makes it easy to build low-latency data APIs (gRPC and REST) from any data source. Data is transformed on the fly using Dozer's reactive SQL engine and stored in a high-performance cache to offer the best possible experience. Dozer is useful for quickly building data products.

Follow the instructions below to install Dozer on your machine and run a quick sample using the NY Taxi Dataset.

macOS Monterey (12) and above

brew tap getdozer/dozer && brew install dozer

Ubuntu 20.04 and above

# amd64

curl -sLO https://github.com/getdozer/dozer/releases/latest/download/dozer-linux-amd64.deb && sudo dpkg -i dozer-linux-amd64.deb

# aarch64

curl -sLO https://github.com/getdozer/dozer/releases/latest/download/dozer-linux-aarch64.deb && sudo dpkg -i dozer-linux-aarch64.deb

Dozer requires protobuf-compiler; installation instructions can be found in additional steps.

Build from source

cargo install --path dozer-cli --locked

Download sample configuration and data

Create a new empty directory and run the commands below. This will download a sample configuration file and a sample NY Taxi Dataset file.

curl -o dozer-config.yaml https://raw.githubusercontent.com/getdozer/dozer-samples/main/connectors/local-storage/dozer-config.yaml

curl --create-dirs -o data/trips/fhvhv_tripdata_2022-01.parquet https://d37ci6vzurychx.cloudfront.net/trip-data/fhvhv_tripdata_2022-01.parquet

Run Dozer binary

dozer -c dozer-config.yaml

Dozer will start processing the data and populating the cache. You can follow the progress of the execution in the console.
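If you script the quick start, it can help to wait until Dozer's API ports accept connections before sending queries. The following is a minimal sketch using only the Python standard library. The helper name `wait_for_port` is hypothetical, and the ports (8080 for REST, 50051 for gRPC) are taken from the query examples in this README. An open port only means the server is listening, not that any data has been loaded yet.

```python
import socket
import time


def wait_for_port(host: str, port: int, timeout: float = 30.0, interval: float = 0.5) -> bool:
    """Return True once a TCP connection to host:port succeeds, False on timeout.

    Hypothetical helper, not part of Dozer.
    """
    deadline = time.monotonic() + timeout
    while True:
        try:
            # A successful connect means something is listening on the port.
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            # Connection refused or timed out: retry until the deadline passes.
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)
```

For example, `wait_for_port("localhost", 8080)` could be called after starting `dozer -c dozer-config.yaml` in another process.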

Query the APIs

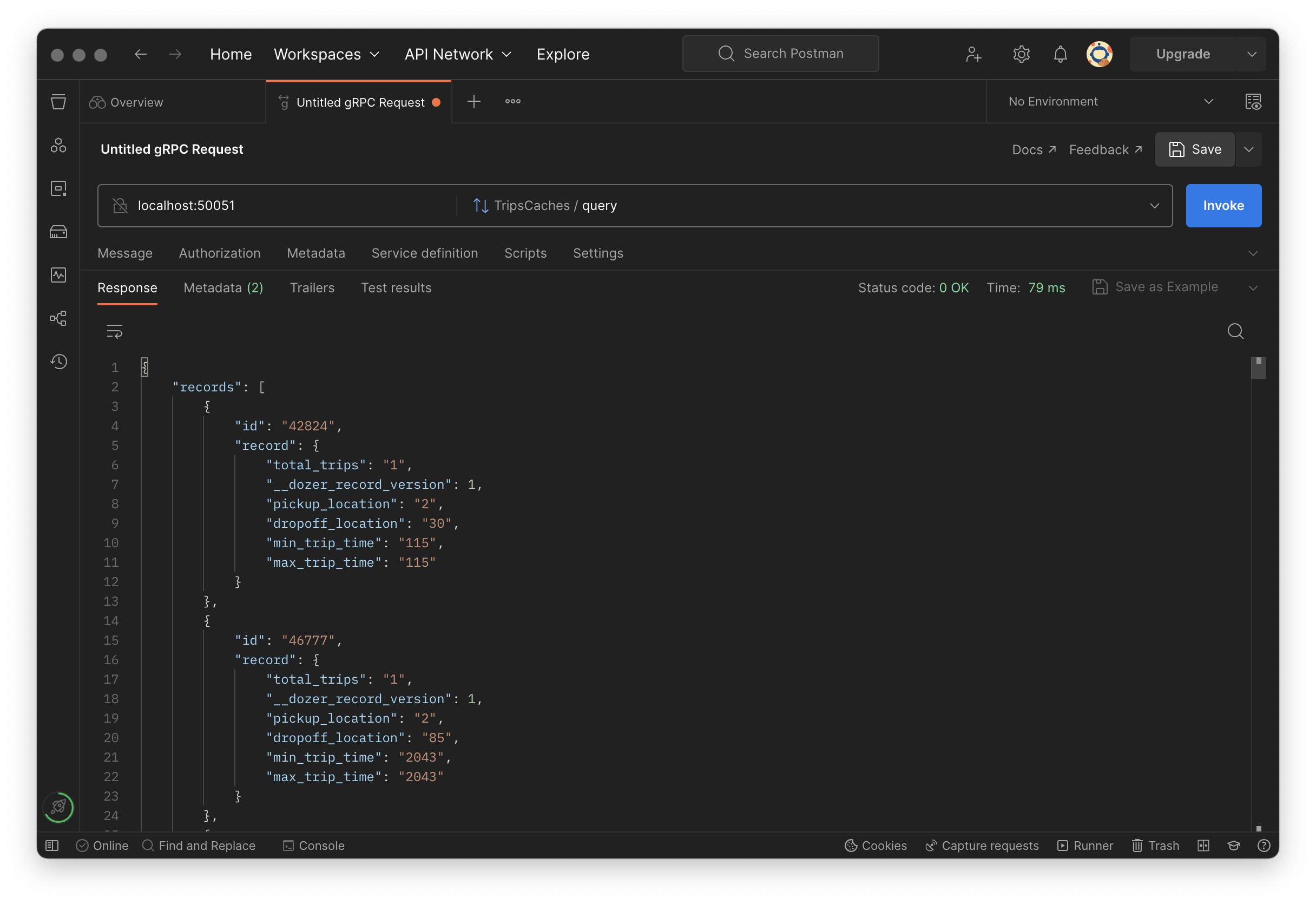

Once some data has been loaded, you can query the cache using gRPC or REST:

# gRPC

grpcurl -d '{"query": "{\"$limit\": 1}"}' -plaintext localhost:50051 dozer.generated.trips_cache.TripsCaches/query

# REST

curl -X POST http://localhost:8080/trips/query --header 'Content-Type: application/json' --data-raw '{"$limit":3}'

Alternatively, you can use Postman to discover gRPC endpoints through gRPC reflection.
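The REST call above can also be issued from Python without any client library. This is a minimal sketch using the standard library: the function names are hypothetical, the URL and payload mirror the curl example, and it assumes the response body is JSON.

```python
import json
import urllib.request

# Assumption: Dozer's REST API listens on localhost:8080, as in the curl example.
BASE_URL = "http://localhost:8080"


def build_query_request(endpoint: str, query: dict, base_url: str = BASE_URL) -> urllib.request.Request:
    """Build a POST request equivalent to the curl command (hypothetical helper)."""
    body = json.dumps(query).encode("utf-8")
    return urllib.request.Request(
        url=f"{base_url}/{endpoint}/query",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def run_query(endpoint: str, query: dict, base_url: str = BASE_URL):
    """Send the query and decode the response, assuming a JSON body."""
    request = build_query_request(endpoint, query, base_url)
    with urllib.request.urlopen(request, timeout=10) as response:
        return json.loads(response.read().decode("utf-8"))
```

With Dozer running, `run_query("trips", {"$limit": 3})` sends the same request as the curl command.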

Read more about Dozer here. And remember to star 🌟 our repo to support us!

| Library | Description | License |
|---|---|---|
| dozer-python | Dozer Client library for Python | Apache-2.0 |
| dozer-js | Dozer Client library for JavaScript | Apache-2.0 |
| dozer-react | Dozer Client library for React with easy to use hooks | Apache-2.0 |

Python

from pydozer.api import ApiClient

api_client = ApiClient('trips')
api_client.query()

JavaScript

import { ApiClient } from "@dozerjs/dozer";

const flightsClient = new ApiClient('flights');
flightsClient.count().then(count => {
  console.log(count);
});

React

import { useCount } from "@dozerjs/dozer-react";

const AirportComponent = () => {
  const [count] = useCount('trips');
  return <div> Trips: {count} </div>;
};

Check out Dozer's samples repository for more comprehensive examples and use case scenarios.

| Type | Sample | Notes |
|---|---|---|
| Connectors | Postgres | Load data using Postgres CDC |
| Connectors | Local Storage | Load data from local files |
| Connectors | Ethereum | Load data from Ethereum |
| Connectors | Kafka | Load data from Kafka stream |
| Connectors | Snowflake (Coming soon) | Load data using Snowflake table streams |
| SQL | Using JOINs | Dozer APIs over multiple sources using JOIN |
| SQL | Using Aggregations | How to aggregate using Dozer |
| SQL | Using Window Functions | Use Hop and Tumble Windows |
| Use Cases | Flight Microservices | Build APIs over multiple microservices. |
| Use Cases | Use Dozer to Instrument (Coming soon) | Combine Log data to get real time insights |
| Use Cases | Real Time Model Scoring (Coming soon) | Deploy trained models to get real time insights as APIs |
| Client Libraries | Dozer React Starter | Instantly start building real time views using Dozer and React |
| Client Libraries | Ingest Polars/Pandas Dataframes | Instantly ingest Polars/Pandas dataframes using Arrow format and deploy APIs |
| Authorization | Dozer Authorization | How to apply JWT Auth on Dozer |

Refer to the full list of connectors and example configurations here.

| Connector | Status | Type | Schema Mapping | Frequency | Implemented Via |
|---|---|---|---|---|---|
| Postgres | Available ✅ | Relational | Source | Real Time | Direct |
| Snowflake | Available ✅ | Data Warehouse | Source | Polling | Direct |
| Local Files (CSV, Parquet) | Available ✅ | Object Storage | Source | Polling | Data Fusion |
| Delta Lake | Alpha | Data Warehouse | Source | Polling | Direct |
| AWS S3 (CSV, Parquet) | Alpha | Object Storage | Source | Polling | Data Fusion |
| Google Cloud Storage (CSV, Parquet) | Alpha | Object Storage | Source | Polling | Data Fusion |
| Ethereum | Available ✅ | Blockchain | Logs/Contract ABI | Real Time | Direct |
| Kafka Stream | Available ✅ | | Schema Registry | Real Time | Debezium |
| MySQL | In Roadmap | Relational | Source | Real Time | Debezium |
| Google Sheets | In Roadmap | Applications | Source | | |
| Excel | In Roadmap | Applications | Source | | |
| Airtable | In Roadmap | Applications | Source | | |

| Library | Description | License |
|---|---|---|
| dozer-log-python | Python binding for reading Dozer logs | Apache-2.0 |
| dozer-log-js | Node.js binding for reading Dozer logs | Apache-2.0 |

We support CPython >= 3.10 on Windows, macOS, and Linux, on both amd and arm architectures.
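The Python reader calls in this section are awaited, so in a plain script they need to run inside an event loop. This is a minimal sketch of one way to do that with `asyncio`: `read_ops` and `main` are hypothetical names, and the only `pydozer_log` calls used are `LogReader.new` and `next_op`, exactly as they appear in this README.

```python
import asyncio


async def read_ops(reader, count: int) -> list:
    """Await `count` operations from any object exposing an async next_op()."""
    ops = []
    for _ in range(count):
        ops.append(await reader.next_op())
    return ops


async def main() -> None:
    # Imported lazily so read_ops can be used without the package installed.
    import pydozer_log

    # Same arguments as the README snippet: the '.dozer' directory and the 'trips' endpoint.
    reader = await pydozer_log.LogReader.new('.dozer', 'trips')
    for op in await read_ops(reader, 3):
        print(op)
```

Running `asyncio.run(main())` from a script would print the first three operations, assuming Dozer has already written logs for the `trips` endpoint.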

Python

import pydozer_log

reader = await pydozer_log.LogReader.new('.dozer', 'trips')
print(await reader.next_op())

Node.js

const dozer_log = require('@dozerjs/log');

const runtime = dozer_log.Runtime();
const reader = await runtime.create_reader('.dozer', 'trips');
console.log(await reader.next_op());

We typically release Dozer every 2 weeks, and releases are available on our releases page. Currently, we publish binaries for Ubuntu 20.04, Apple (Intel), and Apple (Silicon).

Please visit our issues section if you are having any trouble running the project.

Please refer to Contributing for more details.