class: center, middle

Gerard Escudero, 2020

.footnote[source]

class: left, middle, inverse

- .cyan[Introduction]
- Finite State Machines
- Decision Trees
- Behaviour Trees
- Planning Systems
- References

.footnote[.red[(Millington, 2019)]]

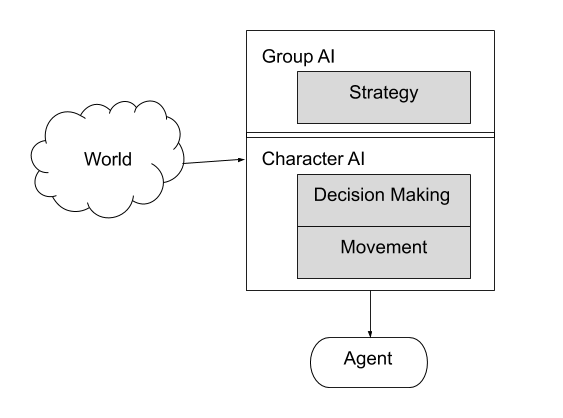

.cols5050[ .col1[

- .blue[Input]: World Knowledge

- .blue[Output]: Action

- .blue[Important rule]: Decision Making should NOT execute every frame!

Main algorithms:

- Finite State Machines

- Behaviour Trees

- Goal Oriented Action Planning

.small[GOAP]

]

.col2[

.small[GOAP]

]

.col2[

.small[FSM]

.small[BT]

]]

class: left, middle, inverse

- .brown[Introduction]
- .cyan[Finite State Machines]
  - .cyan[Code (delegates)]
  - Visual Scripting
  - Hierarchical FSM
- Decision Trees
- Behaviour Trees
- Planning Systems
- References

Example:

```csharp
using UnityEngine;
using System.Collections;

public class WaitForSecondsExample : MonoBehaviour {
    void Start() {
        StartCoroutine("Example");
    }

    IEnumerator Example() {
        Debug.Log(Time.time);
        yield return new WaitForSeconds(5);   // suspend here for 5 seconds
        Debug.Log(Time.time);
    }
}
```

- `StartCoroutine`: starts a coroutine, a kind of asynchronous "function"
- `IEnumerator`: the coroutine's return type
- `yield`: suspends execution until something happens (here, until 5 seconds have passed)
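The `yield` mechanism can be seen without Unity. The following is a minimal sketch in plain C#. `CoroutineDemo` and its `while (routine.MoveNext())` loop are hypothetical stand-ins for the engine's scheduler, which also understands objects such as `WaitForSeconds`. The only point is that an `IEnumerator` method pauses at each `yield return` and resumes where it left off the next time `MoveNext()` is called.

```csharp
using System.Collections;
using System.Collections.Generic;

// Hypothetical, engine-free sketch: NOT Unity's scheduler, only the C# iterator
// mechanism that Unity coroutines are built on.
public static class CoroutineDemo
{
    public static readonly List<string> Log = new List<string>();

    // Iterator method: each `yield return` suspends it until MoveNext() is called again.
    static IEnumerator Example()
    {
        Log.Add("start");
        yield return null;            // in Unity, `null` means "resume next frame"
        Log.Add("after 1 frame");
        yield return null;
        Log.Add("after 2 frames");
    }

    // Plays the role of the engine: resumes the coroutine once per simulated frame.
    public static int Run()
    {
        Log.Clear();
        IEnumerator routine = Example();
        int frames = 0;
        while (routine.MoveNext())    // returns false once the method body has finished
        {
            frames++;
            Log.Add("frame " + frames + " ends");
        }
        return frames;
    }
}
```

The log interleaves the coroutine's lines with the "frame ends" lines. This shows that the method body does not run to completion in one call.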

.blue[Delegates]: assigning functions to variables

Example:

public class DelegateScript : MonoBehaviour {

delegate void MyDelegate(int num);

MyDelegate myDelegate;

void Start () {

myDelegate = PrintNum;

myDelegate(50);

myDelegate = DoubleNum;

myDelegate(50);

}

void PrintNum(int num) {

Debug.Log(num);

}

void DoubleNum(int num) {

Debug.Log(num * 2);

}

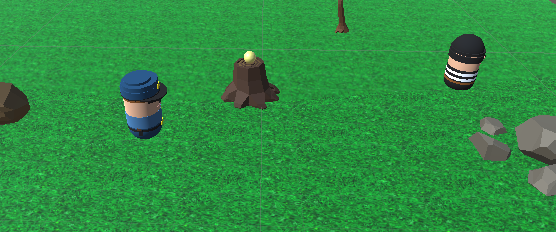

}.blue[Task]: FSM for the robber:

.blue[Code template]:

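```csharp
using UnityEngine;
using System.Collections;

public class FSM : MonoBehaviour
{
    ...
    private WaitForSeconds wait = new WaitForSeconds(0.05f); // 1 / 20 s: 20 ticks per second

    delegate IEnumerator State();   // each state is a coroutine
    private State state;            // the current state

    IEnumerator Start()
    {
        ...
        state = Wander;
        // Run the current state's coroutine; when it ends, run whichever state is now current
        while (enabled)
            yield return StartCoroutine(state());
    }

    IEnumerator Wander()
    {
        Debug.Log("Wander state");
        ...
    }
}
```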

- A coroutine that executes 20 times per second and runs forever (while the component is enabled)
- Make every state change explicit with `Debug.Log`

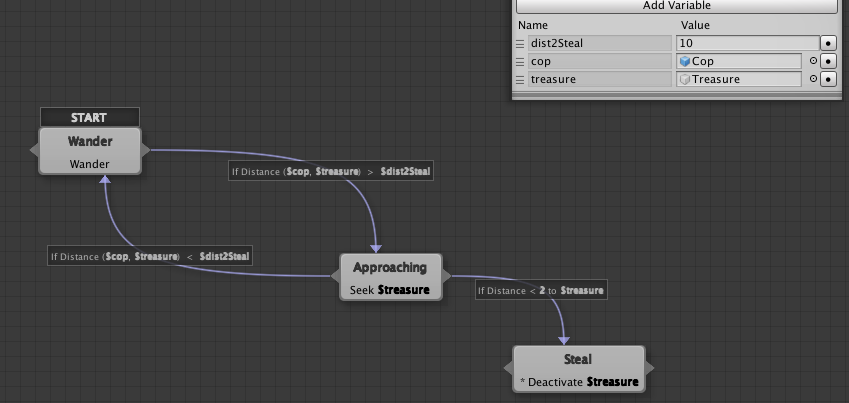

- The first behaviour is to .blue[wander] slowly
- When the cop walks away from the treasure, the robber has to .blue[approach] it quickly to steal it
- If the cop comes back, he returns to .blue[wander] slowly, and so on
- If the robbery is successful (the treasure must disappear), he begins to .blue[hide] permanently behind the obstacle closest to the cop
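The robber's rules can be checked independently of Unity with a minimal, engine-free sketch. As in the template, a delegate field points at the current state method. Unlike the template, however, states here are plain methods ticked from outside instead of coroutines, and movement is left out, so each state only decides the next one. `RobberFsm`, `CopFarFromTreasure`, `NearTreasure` and `Transitions` are hypothetical names, and the two booleans are assumed world inputs.

```csharp
using System.Collections.Generic;

// Hypothetical sketch of the robber FSM: a delegate holds the current state.
public class RobberFsm
{
    delegate void State();

    State state;
    public string Current { get; private set; } = "";
    public List<string> Transitions { get; } = new List<string>();

    // Assumed inputs (world knowledge), updated before each Tick().
    public bool CopFarFromTreasure;
    public bool NearTreasure;
    public bool TreasureStolen { get; private set; }

    public RobberFsm()
    {
        state = Wander;
        Record("Wander");
    }

    // Meant to be called at a fixed rate (e.g. 20 times per second), not every frame.
    public void Tick() => state();

    void ChangeTo(State next, string name)
    {
        state = next;
        Record(name);
    }

    // Stands in for Debug.Log: every state change is made explicit.
    void Record(string name)
    {
        Current = name;
        Transitions.Add(name);
    }

    void Wander()
    {
        if (CopFarFromTreasure) ChangeTo(Approach, "Approach");
    }

    void Approach()
    {
        if (!CopFarFromTreasure)
        {
            ChangeTo(Wander, "Wander");   // the cop came back
        }
        else if (NearTreasure)
        {
            TreasureStolen = true;        // the treasure disappears
            ChangeTo(Hide, "Hide");
        }
    }

    // Permanent state: the robber keeps hiding from the cop.
    void Hide() { }
}
```

Because `Hide` never calls `ChangeTo`, it is an absorbing state. This matches "permanently hide" in the task.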

.blue[solution]: view.red[*] / download

- Watch the videos (5 min): Killzone 2 Review about AI & F.E.A.R. 2 - A.I.

.footnote[.red[*] made with .red[highlighting]]

class: left, middle, inverse

- .brown[Introduction]
- .cyan[Finite State Machines]
  - .brown[Code (delegates)]
  - .cyan[Visual Scripting]
  - Hierarchical FSM
- Decision Trees
- Behaviour Trees
- Planning Systems
- References

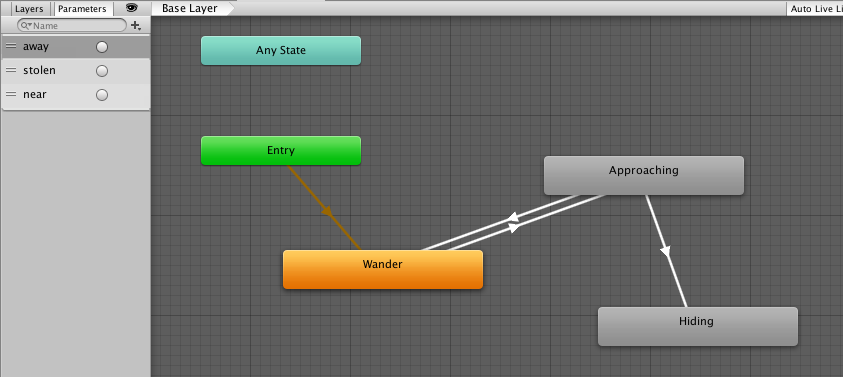

- Visual editors help to handle complex behaviours
- They separate the work of coders from that of game designers
- Many options:
  - CryEngine's flowgraph
  - Unreal Kismet / Blueprint
  - Unity PlayMaker
  - ...
- Includes FSM, hierarchical FSM and Behavior Trees
- Decent documentation
- It allows the creation of new actions

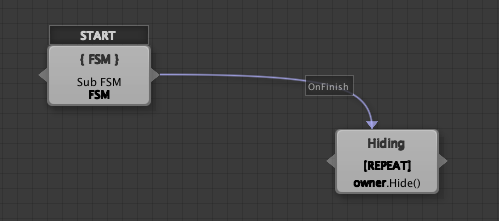

- Wander State: view.red[*] / download

- Approaching State: view.red[*] / download

- Hiding State: view.red[*] / download

- BlackBoard: view.red[*] / download

.footnote[.red[*] made with .red[highlighting]]

class: left, middle, inverse

- .brown[Introduction]
- .cyan[Finite State Machines]
  - .brown[Code (delegates)]
  - .brown[Visual Scripting]
  - .cyan[Hierarchical FSM]
- Decision Trees
- Behaviour Trees
- Planning Systems
- References

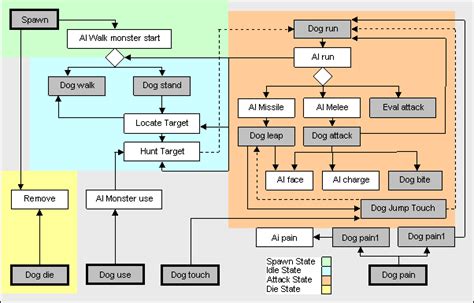

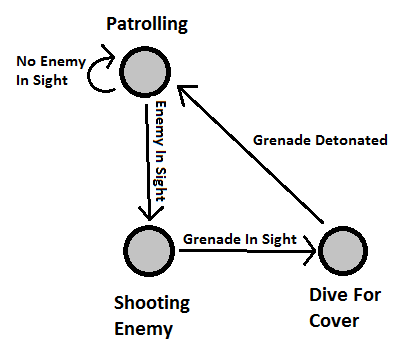

.blue[Complex Behaviours]:

.footnote[.red[source]]

class: left, middle, inverse

- .brown[Introduction]
- .brown[Finite State Machines]

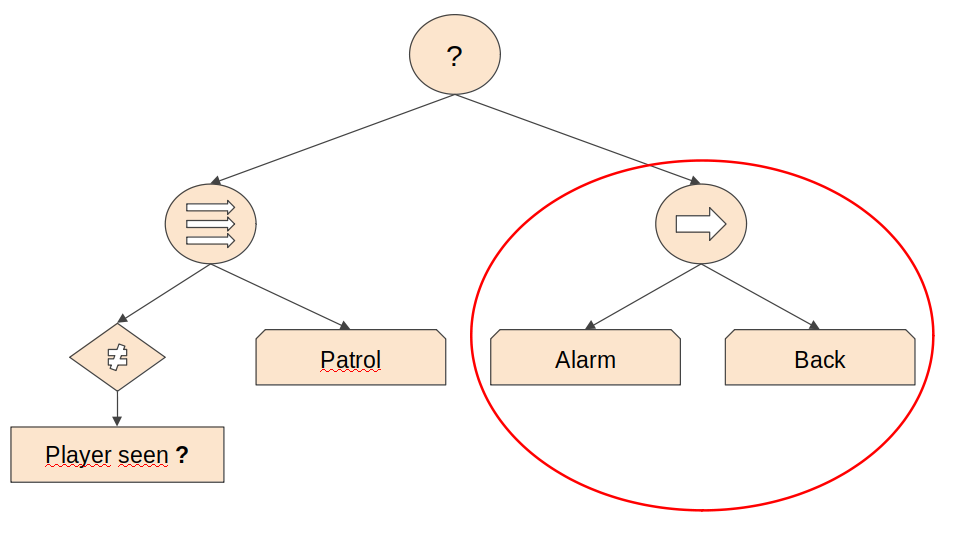

- .cyan[Decision Trees]
- Behaviour Trees
- Planning Systems
- References

-

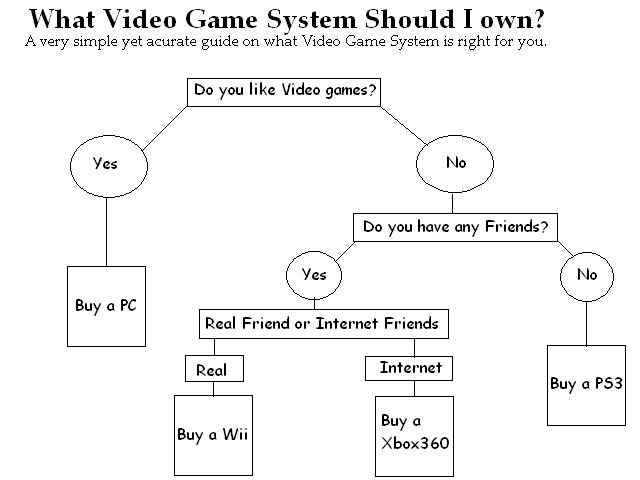

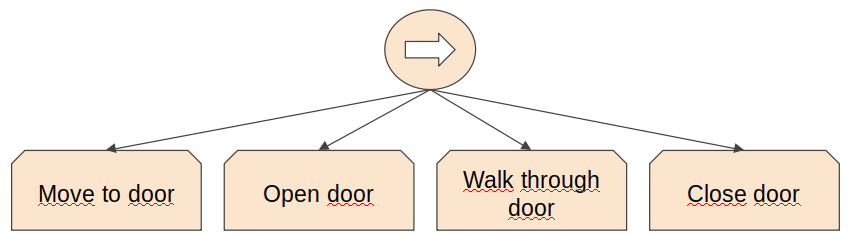

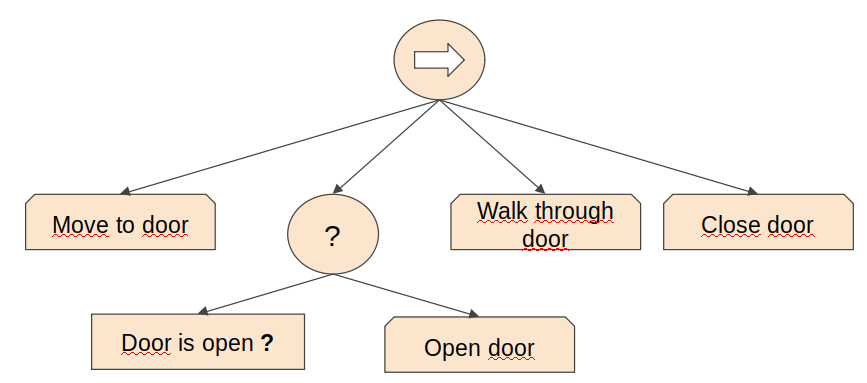

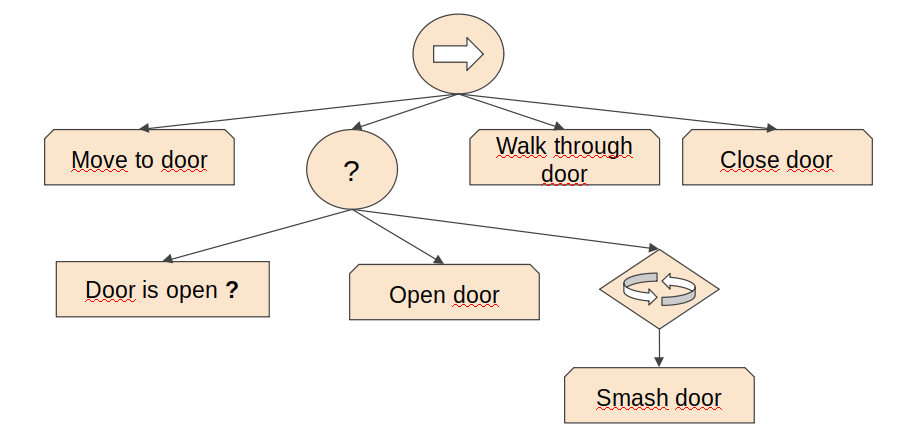

- FSM: .blue[States] (with Actions) & .blue[Transitions] (with conditions)
- DTs: .blue[Conditions] (tree nodes) & .blue[Actions] (leaves)
- A DT has no notion of state: the whole tree is traversed from the root every time it runs.
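The structure described above (condition nodes with two branches, action leaves, and no stored state) can be sketched in a few lines. The world fields (`EnemyVisible`, `EnemyDistance`, `Health`), the thresholds, and the action names are hypothetical examples rather than part of the course material.

```csharp
using System;

// Hypothetical world snapshot queried by the tree.
public sealed class DtWorld
{
    public bool EnemyVisible;
    public float EnemyDistance;
    public float Health;
}

public abstract class DtNode
{
    // Returns the name of the chosen action.
    public abstract string Decide(DtWorld world);
}

// Leaf: an action.
public sealed class DtAction : DtNode
{
    readonly string name;
    public DtAction(string name) { this.name = name; }
    public override string Decide(DtWorld world) => name;
}

// Inner node: a condition that selects exactly one branch.
public sealed class DtCondition : DtNode
{
    readonly Func<DtWorld, bool> test;
    readonly DtNode whenTrue, whenFalse;

    public DtCondition(Func<DtWorld, bool> test, DtNode whenTrue, DtNode whenFalse)
    {
        this.test = test;
        this.whenTrue = whenTrue;
        this.whenFalse = whenFalse;
    }

    public override string Decide(DtWorld world) =>
        test(world) ? whenTrue.Decide(world) : whenFalse.Decide(world);
}

public static class DtDemo
{
    // No state is kept between calls: every Decide() starts again at the root.
    public static readonly DtNode Root =
        new DtCondition(w => w.Health < 25f,
            new DtAction("Flee"),
            new DtCondition(w => w.EnemyVisible,
                new DtCondition(w => w.EnemyDistance < 2f,
                    new DtAction("Melee"),
                    new DtAction("Shoot")),
                new DtAction("Patrol")));
}
```

For example, `DtDemo.Root.Decide(new DtWorld { Health = 100f, EnemyVisible = true, EnemyDistance = 1f })` walks three conditions and returns `"Melee"`.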

- How could we use decision trees in games?
  - NPC dialogues
  - Bosses that change behaviour at given HP percentages
  - … ?
- Decision trees can be generated automatically. We will see this in the machine learning topic.

class: left, middle, inverse

- .brown[Introduction]
- .brown[Finite State Machines]
- .brown[Decision Trees]
- .cyan[Behaviour Trees]
  - .cyan[Design]
  - NodeCanvas
  - Behaviour Bricks
- Planning Systems
- References

.cols5050[ .col1[

-

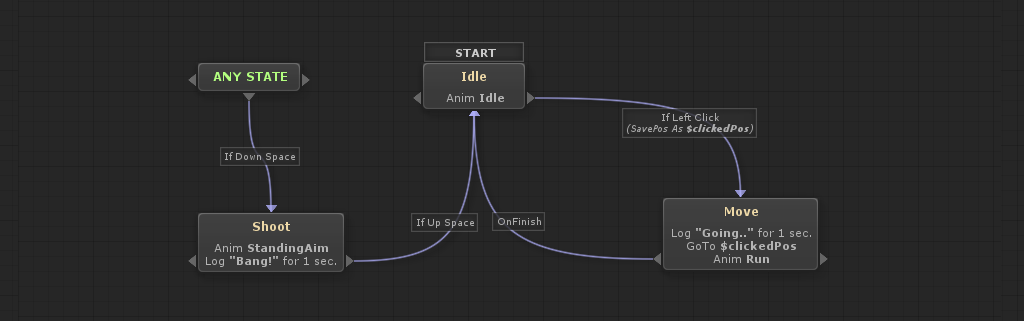

- A sort of visual programming for AI behaviour (Isla, 2005)
  - Reusability & modularity
  - Used in major engines: Unreal, CryEngine, Unity
- Behavior Trees combine both:
  - Decision trees: the tree is executed all at once
  - State machines: the current state is implicit, since the execution stays in one of the nodes
- .blue[Designing Trees is a hard task!]

Reference: Behavior trees for AI: How they work] .col2[ ]]

]]

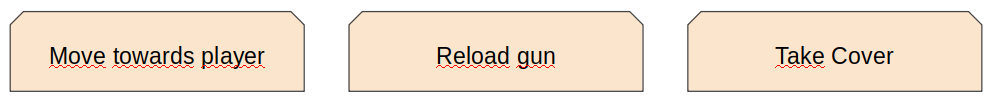

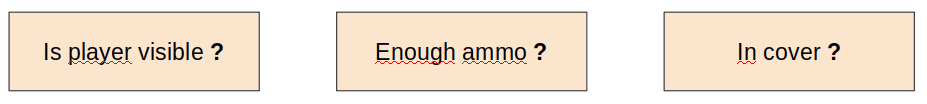

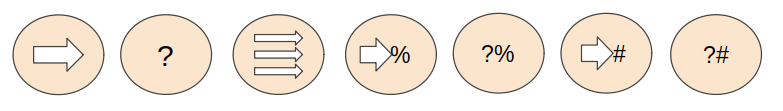

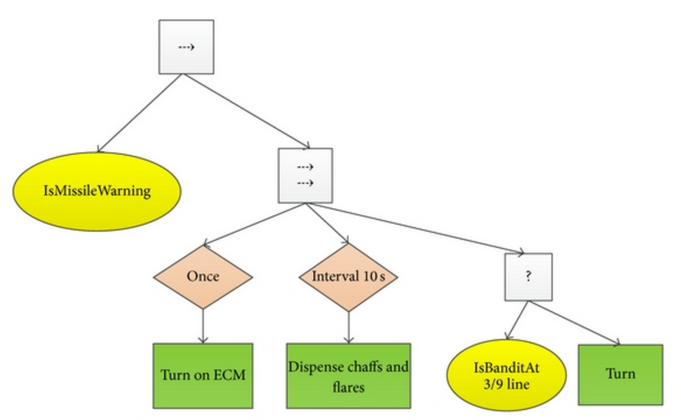

- All should return Running, Success or Failure

- They can take a while!

- Most of the time they will be leaf nodes

- All should return True or False

- Conditions normally query the blackboard about the world state

- All should return True or False

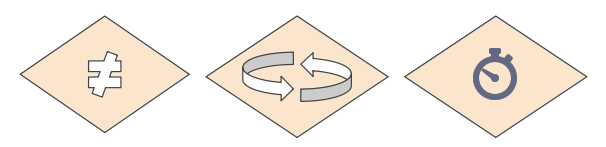

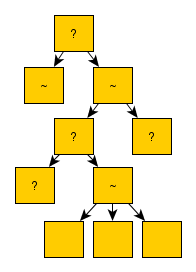

- They iterate over their children from left to right in a specific fashion:

- .blue[Sequence] (AND): executes its children in order until one fails

- .blue[Selector] (OR): executes its children in order until one succeeds

- .blue[Parallel] (Concurrent AND): executes all its children at the same time until one fails

- .blue[Random Sequence or Selector] (with %?): same as sequence or selector, but in random order

- .blue[Priority Sequence or Selector] (with %#): same as sequence or selector, but following a mutable priority

- All should return Running, Success or Failure

- Add enormous flexibility and power to the tree execution flow

- They modify one specific child in some fashion:

- .blue[Inverter] (NOT): inverts the result of the child node

- .blue[Repeater] (until fail, N or infinite): repeats the child node until it fails, N times, or forever

- .blue[Wait until] (seconds, condition, etc.): a generic delay
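The following is a minimal sketch of the node types above: actions, conditions, Sequence, Selector, and the Inverter decorator. It is not the API of NodeCanvas or Behaviour Bricks, and all class names are hypothetical. In this deliberately simple version, composites restart from their first child on every tick. Many real implementations instead remember which child returned Running.

```csharp
using System;

public enum BtStatus { Running, Success, Failure }

public abstract class BtNode
{
    public abstract BtStatus Tick();
}

// Leaf action: may return Running while it takes several ticks.
public sealed class BtAction : BtNode
{
    readonly Func<BtStatus> body;
    public BtAction(Func<BtStatus> body) { this.body = body; }
    public override BtStatus Tick() => body();
}

// Leaf condition: a true/false question mapped onto Success/Failure.
public sealed class BtCondition : BtNode
{
    readonly Func<bool> test;
    public BtCondition(Func<bool> test) { this.test = test; }
    public override BtStatus Tick() => test() ? BtStatus.Success : BtStatus.Failure;
}

// Sequence (AND): ticks children left to right until one does not succeed.
public sealed class BtSequence : BtNode
{
    readonly BtNode[] children;
    public BtSequence(params BtNode[] children) { this.children = children; }
    public override BtStatus Tick()
    {
        foreach (var child in children)
        {
            var status = child.Tick();
            if (status != BtStatus.Success) return status;   // Failure or Running
        }
        return BtStatus.Success;
    }
}

// Selector (OR): ticks children left to right until one does not fail.
public sealed class BtSelector : BtNode
{
    readonly BtNode[] children;
    public BtSelector(params BtNode[] children) { this.children = children; }
    public override BtStatus Tick()
    {
        foreach (var child in children)
        {
            var status = child.Tick();
            if (status != BtStatus.Failure) return status;   // Success or Running
        }
        return BtStatus.Failure;
    }
}

// Inverter (NOT) decorator: swaps Success and Failure; Running passes through.
public sealed class BtInverter : BtNode
{
    readonly BtNode child;
    public BtInverter(BtNode child) { this.child = child; }
    public override BtStatus Tick() => child.Tick() switch
    {
        BtStatus.Success => BtStatus.Failure,
        BtStatus.Failure => BtStatus.Success,
        _ => BtStatus.Running,
    };
}
```

As a usage example, `new BtSelector(new BtSequence(new BtCondition(() => copFar), approach), wander)` reads as "if the cop is far, approach; otherwise wander". Here `copFar`, `approach` and `wander` are hypothetical.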

.footnote[Behavior trees for AI: How they work]

--

.footnote[Behavior trees for AI: How they work]

- Exercise / homework:

template for designing BTs / a solution

class: left, middle, inverse

- .brown[Introduction]
- .brown[Finite State Machines]
- .brown[Decision Trees]
- .cyan[Behaviour Trees]
  - .brown[Design]
  - .cyan[NodeCanvas]
  - Behaviour Bricks
- Planning Systems
- References

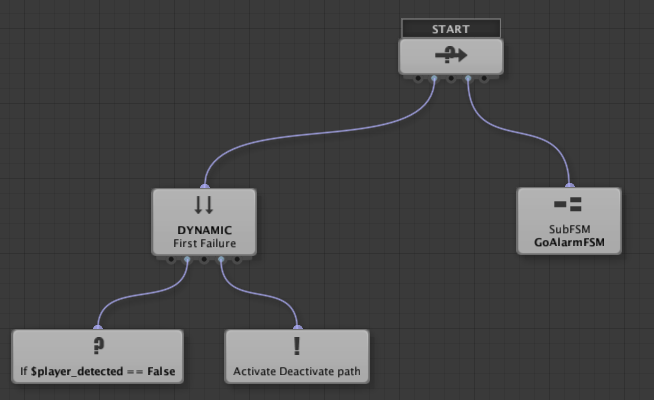

- Add Component - Behaviour Tree Owner (handout)

.footnote[.blue[Need of Success and/or Failure States]]

class: left, middle, inverse

- .brown[Introduction]
- .brown[Finite State Machines]
- .brown[Decision Trees]
- .cyan[Behaviour Trees]
  - .brown[Design]
  - .brown[NodeCanvas]
  - .cyan[Behaviour Bricks]
- Planning Systems
- References

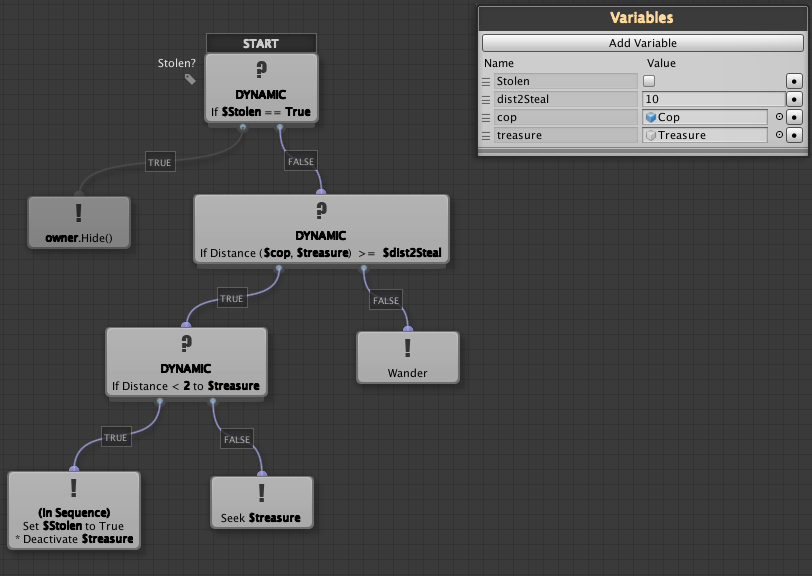

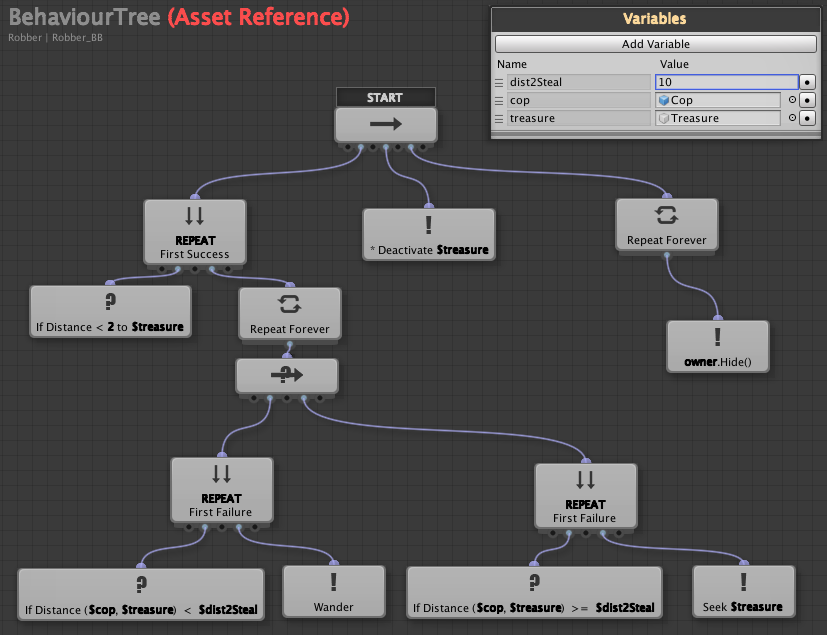

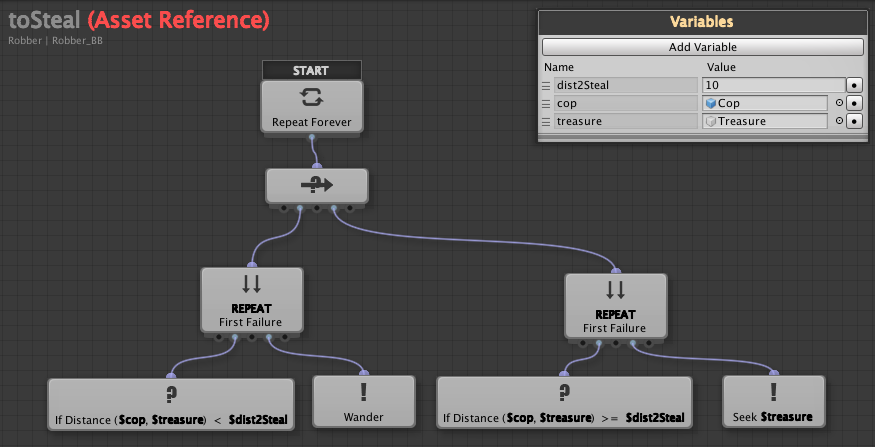

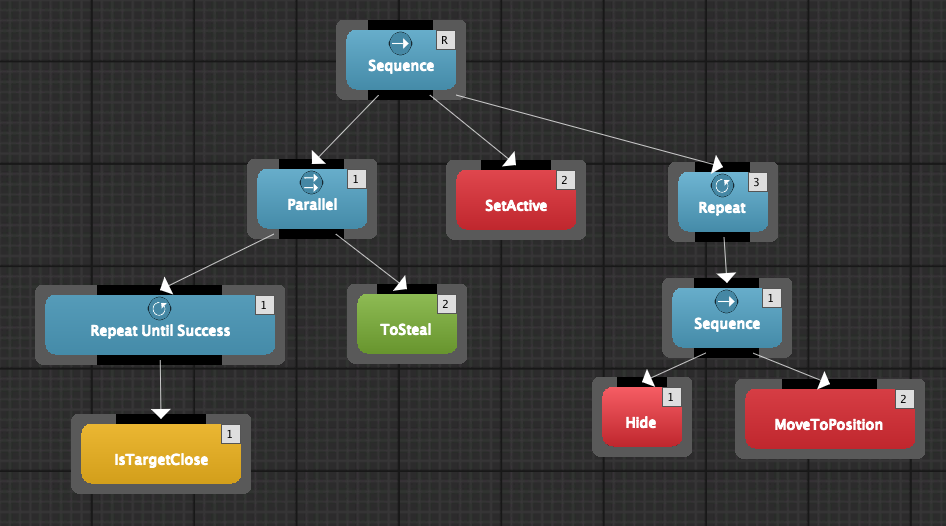

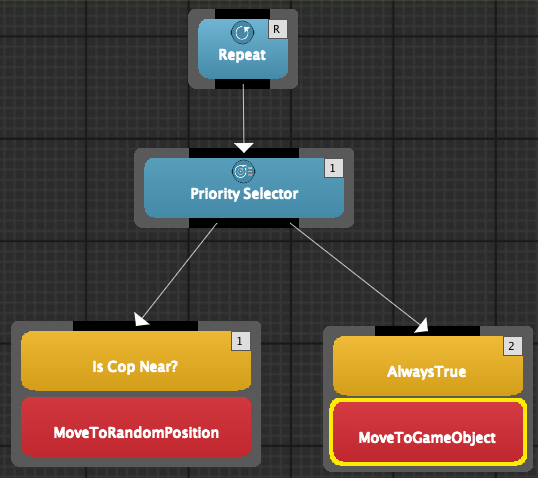

.blue[ToSteal] behaviour tree:

.cols5050[ .col1[ .blue[Starting]:

- Handout

- Editor:

Window - Behavior Bricks - Editor - Robber:

Add Component - Behavior executor component

.blue[BlackBoard / properties]:

MoveToRandomPosition: Floor; MoveToGameObject: Treasure

.blue[Conditions]:

- Custom conditions / download

]

.col2[

]]

]]

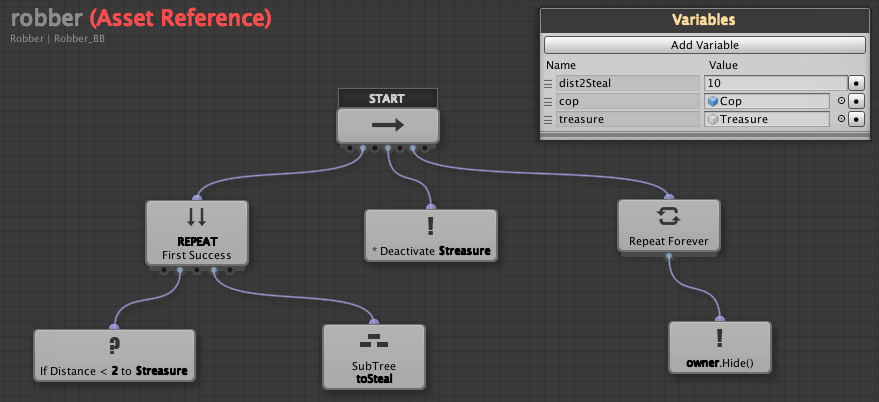

.blue[ToSteal] behaviour tree:


.blue[BlackBoard / properties]:

IsTargetClose: Treasure, 2; ToSteal: Floor, Treasure; SetActive: false, Treasure; MoveToPosition: hide

.blue[Actions]:

class: left, middle, inverse

- .brown[Introduction]
- .brown[Finite State Machines]
- .brown[Decision Trees]
- .brown[Behaviour Trees]
- .cyan[Planning Systems]
  - .cyan[Goal Oriented Behaviour]
  - Goal Oriented Action Planning
  - AI Planner
- References

- How to achieve goals $\rightarrow$ AI
  - Exs: FSM, DT, BT
- World behaviour $+$ goals $\rightarrow$ AI
  - The AI decides how to achieve its goals
  - Ex: Planners
    - FEAR, Fallout 3, Total War, Deus Ex: Human Revolution, Shadow of Mordor, Tomb Raider

- Each agent can have many active .blue[goals], and they can change over time
- The agent tries to fulfill its goals or reduce their .blue[insistence] (importance or priority as a number)
- Examples: eat, drink, kill enemy, regenerate health, etc.
- .blue[Actions] are atomic behaviours that fulfill a requirement
- They combine positive and negative effects
  - Ex: "play game console" increases happiness but decreases energy
- The environment can generate or activate new available actions (.blue[smart objects])

.footnote[.red[(Millington, 2019)]]

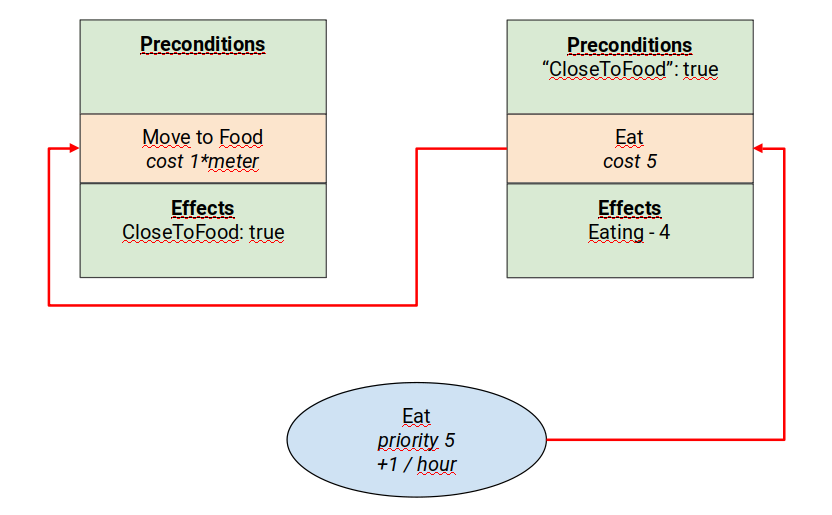

Goal: Eat = 4

Goal: Sleep = 3

Action: Get-Raw-Food (Eat − 3)

Action: Get-Snack (Eat − 2)

Action: Sleep-In-Bed (Sleep − 4)

Action: Sleep-On-Sofa (Sleep − 2)

- .blue[heuristic] needed: most pressing goal, random...
- .blue[$+$] fast, simple
- .blue[$-$] side effects, no timing information
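The "most pressing goal" heuristic fits in a few lines. This sketch assumes the simplest reading: take the goal with the highest insistence, then pick the action that lowers it the most. The class names are hypothetical. Other goals and action durations are ignored, and these are exactly the weaknesses listed above.

```csharp
using System.Collections.Generic;
using System.Linq;

public sealed class GobGoal
{
    public string Name = "";
    public float Insistence;
}

public sealed class GobAction
{
    public string Name = "";
    // Change in insistence per goal (negative values satisfy the goal).
    public Dictionary<string, float> Change = new Dictionary<string, float>();

    public float ChangeFor(string goal) => Change.TryGetValue(goal, out var c) ? c : 0f;
}

public static class SimpleGob
{
    // Most pressing goal first, then the action that lowers it the most.
    public static GobAction Choose(List<GobGoal> goals, List<GobAction> actions)
    {
        GobGoal top = goals.OrderByDescending(g => g.Insistence).First();
        return actions.OrderBy(a => a.ChangeFor(top.Name)).First();
    }

    // The slide's example: Eat = 4, Sleep = 3.
    public static string SlideExample()
    {
        var goals = new List<GobGoal>
        {
            new GobGoal { Name = "Eat", Insistence = 4f },
            new GobGoal { Name = "Sleep", Insistence = 3f },
        };
        var actions = new List<GobAction>
        {
            new GobAction { Name = "Get-Raw-Food", Change = new Dictionary<string, float> { ["Eat"] = -3f } },
            new GobAction { Name = "Get-Snack", Change = new Dictionary<string, float> { ["Eat"] = -2f } },
            new GobAction { Name = "Sleep-In-Bed", Change = new Dictionary<string, float> { ["Sleep"] = -4f } },
            new GobAction { Name = "Sleep-On-Sofa", Change = new Dictionary<string, float> { ["Sleep"] = -2f } },
        };
        return Choose(goals, actions).Name;
    }
}
```

With the slide's data, Eat (4) is the most pressing goal, so `SlideExample()` picks Get-Raw-Food (Eat − 3).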

.footnote[.red[(Millington, 2019)]]

--

Goal: Eat = 4

Goal: Bathroom = 3

Action: Drink-Soda (Eat − 2; Bathroom + 2)

Action: Visit-Bathroom (Bathroom − 4)

.blue[Discontentment]: an energy metric to minimize

- Sum of the insistence values of all goals
- Sum of squared values: squaring accentuates high values

Goal: Eat = 4

Goal: Bathroom = 3

Action: Drink-Soda (Eat − 2; Bathroom + 2)

after: Eat = 2, Bathroom = 5: Discontentment = 29

Action: Visit-Bathroom (Bathroom − 4)

after: Eat = 4, Bathroom = 0: Discontentment = 16

Solution: Visit-Bathroom
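The discontentment calculation above can be written directly. This is a minimal sketch under two assumptions: discontentment is the sum of squared insistences, and insistence is clamped at 0, as in Bathroom 3 − 4 → 0. `DiscontentmentGob` and its members are hypothetical names.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class DiscontentmentGob
{
    // Discontentment = sum of squared insistence values.
    public static float Discontentment(Dictionary<string, float> goals) =>
        goals.Values.Sum(v => v * v);

    // Predicts the goals after an action; insistence never drops below 0.
    public static Dictionary<string, float> Apply(Dictionary<string, float> goals,
                                                  Dictionary<string, float> change)
    {
        var after = new Dictionary<string, float>(goals);
        foreach (var kv in change)
            after[kv.Key] = Math.Max(0f, after[kv.Key] + kv.Value);
        return after;
    }

    // Chooses the action whose predicted outcome has the lowest discontentment.
    public static string Choose(Dictionary<string, float> goals,
                                Dictionary<string, Dictionary<string, float>> actions) =>
        actions.OrderBy(a => Discontentment(Apply(goals, a.Value))).First().Key;

    // The slide's example data.
    public static readonly Dictionary<string, float> Goals =
        new Dictionary<string, float> { ["Eat"] = 4f, ["Bathroom"] = 3f };

    public static readonly Dictionary<string, Dictionary<string, float>> Actions =
        new Dictionary<string, Dictionary<string, float>>
        {
            ["Drink-Soda"] = new Dictionary<string, float> { ["Eat"] = -2f, ["Bathroom"] = 2f },
            ["Visit-Bathroom"] = new Dictionary<string, float> { ["Bathroom"] = -4f },
        };
}
```

Drink-Soda gives 2² + 5² = 29 and Visit-Bathroom gives 4² + 0² = 16, so the lower value wins, as on the slide.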

.footnote[.red[(Millington, 2019)]]

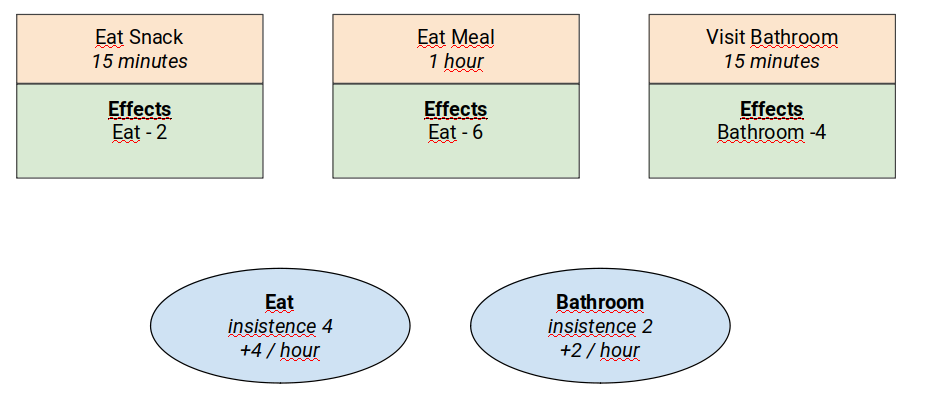

Goal: Eat = 4 changing at + 4 per hour

Goal: Bathroom = 3 changing at + 2 per hour

Action: Eat-Snack (Eat − 2) 15 minutes

after: Eat = 2, Bathroom = 3.5: Discontentment = 16.25

Action: Eat-Main-Meal (Eat − 4) 1 hour

after: Eat = 0, Bathroom = 5: Discontentment = 25

Action: Visit-Bathroom (Bathroom − 4) 15 minutes

after: Eat = 5, Bathroom = 0: Discontentment = 25

Solution: Eat-Snack
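Timing can be added by letting goals grow while an action runs. The slide's numbers are reproduced under one assumption, which this sketch makes explicit. Goals the action does not touch grow at their rate × duration. The goal the action addresses only receives the action's change, clamped at 0. `TimedAction` and `TimedGob` are hypothetical names.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public sealed class TimedAction
{
    public string Name = "";
    public Dictionary<string, float> Change = new Dictionary<string, float>();
    public float Hours;
}

public static class TimedGob
{
    public static Dictionary<string, float> Predict(Dictionary<string, float> insistence,
        Dictionary<string, float> ratePerHour, TimedAction action)
    {
        var after = new Dictionary<string, float>();
        foreach (var kv in insistence)
        {
            after[kv.Key] = action.Change.TryGetValue(kv.Key, out var delta)
                ? Math.Max(0f, kv.Value + delta)                  // goal handled by the action
                : kv.Value + ratePerHour[kv.Key] * action.Hours;  // other goals keep growing
        }
        return after;
    }

    public static float Discontentment(Dictionary<string, float> goals) =>
        goals.Values.Sum(v => v * v);

    public static TimedAction Choose(Dictionary<string, float> insistence,
        Dictionary<string, float> ratePerHour, List<TimedAction> actions) =>
        actions.OrderBy(a => Discontentment(Predict(insistence, ratePerHour, a))).First();

    // The slide's example data.
    public static readonly Dictionary<string, float> Insistence =
        new Dictionary<string, float> { ["Eat"] = 4f, ["Bathroom"] = 3f };

    public static readonly Dictionary<string, float> RatePerHour =
        new Dictionary<string, float> { ["Eat"] = 4f, ["Bathroom"] = 2f };

    public static readonly List<TimedAction> Actions = new List<TimedAction>
    {
        new TimedAction { Name = "Eat-Snack", Hours = 0.25f,
            Change = new Dictionary<string, float> { ["Eat"] = -2f } },
        new TimedAction { Name = "Eat-Main-Meal", Hours = 1f,
            Change = new Dictionary<string, float> { ["Eat"] = -4f } },
        new TimedAction { Name = "Visit-Bathroom", Hours = 0.25f,
            Change = new Dictionary<string, float> { ["Bathroom"] = -4f } },
    };
}
```

Under this model, Eat-Snack gives 2² + 3.5² = 16.25, and the other two actions give 25 each. Eat-Snack is therefore selected.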

.footnote[.red[(Millington, 2019)]]

Goal: Eat = 4 changing at + 4 per hour

Goal: Bathroom = 3 changing at + 2 per hour

Action: Eat-Snack (Eat − 2) 15 minutes

Action: Eat-Main-Meal (Eat − 4) 1 hour

Action: Visit-Bathroom (Bathroom − 4) 15 minutes

class: left, middle, inverse

- .brown[Introduction]
- .brown[Finite State Machines]
- .brown[Decision Trees]
- .brown[Behaviour Trees]
- .cyan[Planning Systems]
  - .brown[Goal Oriented Behaviour]
  - .cyan[Goal Oriented Action Planning]
  - AI Planner
- References

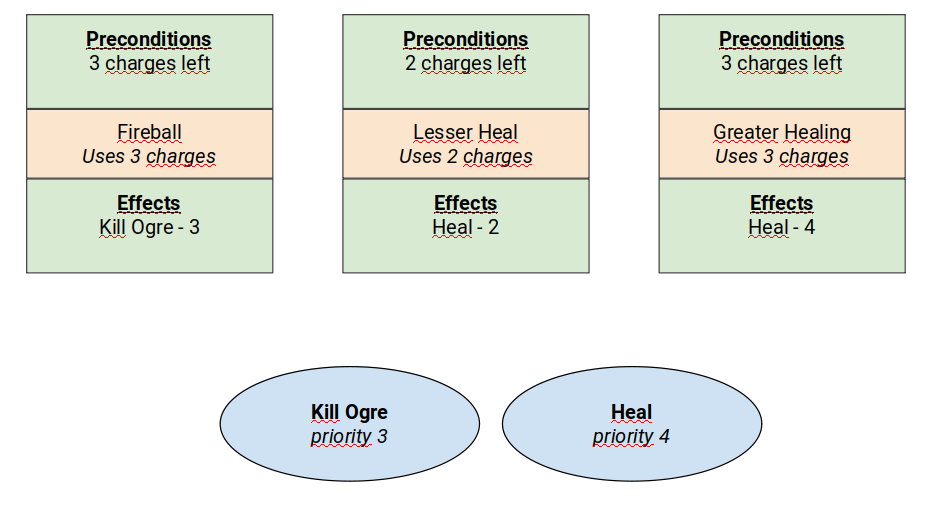

- Mage character

- 5 charges in its wand

- need for healing

- an ogre approaching him aggressively

- plan

Goal: Heal = 4

Goal: Kill-Ogre = 3

Action: Fireball (Kill-Ogre − 2) 3 charges

Action: Lesser-Healing (Heal − 2) 2 charges

Action: Greater-Healing (Heal − 4) 3 charges

Best combination: Lesser-Healing + Fireball

GOB solution: Greater-Healing

.blue[GOB is limited in its prediction, the situation needs to go some steps ahead!]

.footnote[.red[(Millington, 2019)]]

- .blue[preconditions] for chaining actions
- .blue[states] for satisfying preconditions
- .blue[search algorithm] for selecting the "best" branches (each goal is the root of a tree)
  - .blue[BFS]: quickly becomes inefficient as the number of actions and goals grows
  - .blue[A*]: a distance heuristic may not be formulable
  - .blue[Dijkstra]: the usual solution
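The mage example can be checked with a tiny planner. For brevity, this sketch uses an exhaustive depth-first search over every affordable action sequence instead of Dijkstra. That is fine for three actions and five charges, but it is not the recommended algorithm for larger problems. It reuses the sum-of-squares discontentment. The precondition is "enough wand charges left", and the effect lowers one goal's insistence, clamped at 0. `SpellAction` and `MagePlanner` are hypothetical names.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public sealed class SpellAction
{
    public string Name;
    public string Goal;
    public float Change;
    public int Charges;

    public SpellAction(string name, string goal, float change, int charges)
    {
        Name = name; Goal = goal; Change = change; Charges = charges;
    }
}

public static class MagePlanner
{
    public static readonly Dictionary<string, float> Goals =
        new Dictionary<string, float> { ["Heal"] = 4f, ["Kill-Ogre"] = 3f };

    public static readonly List<SpellAction> Actions = new List<SpellAction>
    {
        new SpellAction("Fireball", "Kill-Ogre", -2f, 3),
        new SpellAction("Lesser-Healing", "Heal", -2f, 2),
        new SpellAction("Greater-Healing", "Heal", -4f, 3),
    };

    static float Discontentment(Dictionary<string, float> goals) =>
        goals.Values.Sum(v => v * v);

    static Dictionary<string, float> Apply(Dictionary<string, float> goals, SpellAction a)
    {
        var next = new Dictionary<string, float>(goals);
        next[a.Goal] = Math.Max(0f, next[a.Goal] + a.Change);
        return next;
    }

    // GOB: looks only one action ahead.
    public static SpellAction BestSingle(Dictionary<string, float> goals, int charges,
                                         List<SpellAction> actions) =>
        actions.Where(a => a.Charges <= charges)
               .OrderBy(a => Discontentment(Apply(goals, a)))
               .First();

    // Planning: exhaustive depth-first search, keeping the sequence whose
    // final state has the lowest discontentment.
    public static (List<string> Steps, float Value) BestPlan(Dictionary<string, float> goals,
        int charges, List<SpellAction> actions, int maxDepth = 4)
    {
        var best = new List<string>();
        float bestValue = Discontentment(goals);   // baseline: do nothing
        var current = new List<string>();

        void Search(Dictionary<string, float> state, int chargesLeft, int depth)
        {
            float value = Discontentment(state);
            if (value < bestValue) { bestValue = value; best = new List<string>(current); }
            if (depth == maxDepth) return;
            foreach (var a in actions)
            {
                if (a.Charges > chargesLeft) continue;   // precondition not met
                current.Add(a.Name);
                Search(Apply(state, a), chargesLeft - a.Charges, depth + 1);
                current.RemoveAt(current.Count - 1);
            }
        }

        Search(goals, charges, 0);
        return (best, bestValue);
    }
}
```

Looking one step ahead, GOB picks Greater-Healing (discontentment 0² + 3² = 9). The planner finds Fireball + Lesser-Healing (2² + 1² = 5), which matches the "best combination" above.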

Goal: Heal = 4

Goal: Kill-Ogre = 3

Action: Fireball (Kill-Ogre − 2) 3 charges

Action: Lesser-Healing (Heal − 2) 2 charges

Action: Greater-Healing (Heal − 4) 3 charges

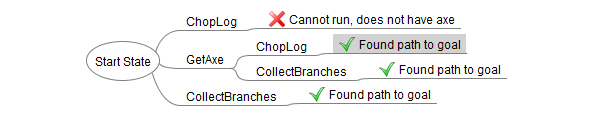

.blue[First approach]:

class: left, middle, inverse

- .brown[Introduction]
- .brown[Finite State Machines]
- .brown[Decision Trees]
- .brown[Behaviour Trees]
- .cyan[Planning Systems]
  - .brown[Goal Oriented Behaviour]
  - .brown[Goal Oriented Action Planning]
  - .cyan[AI Planner]
- References

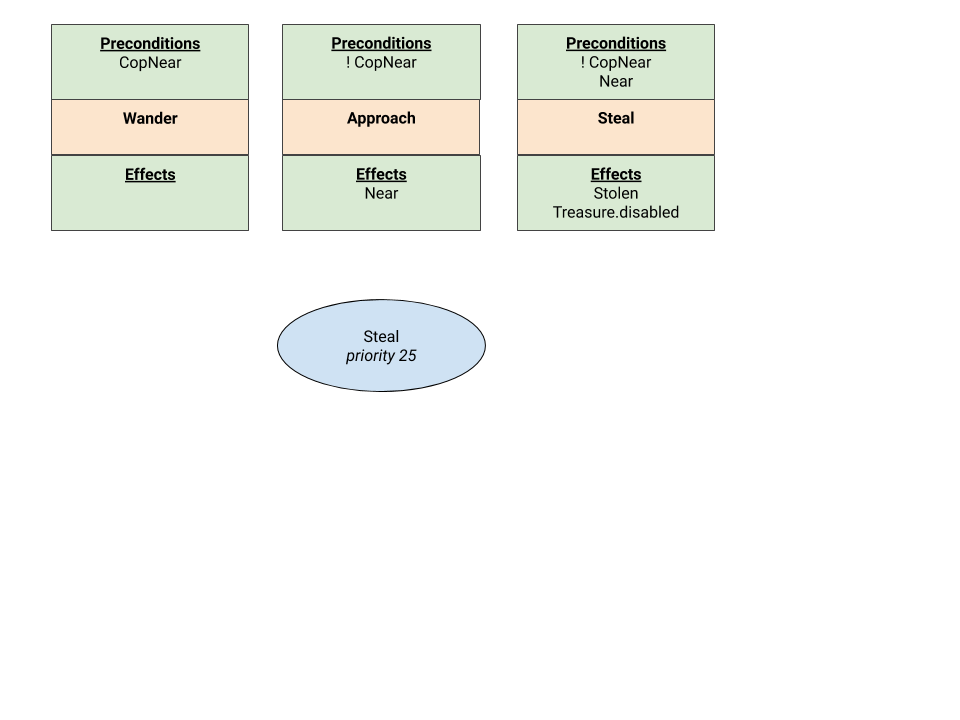

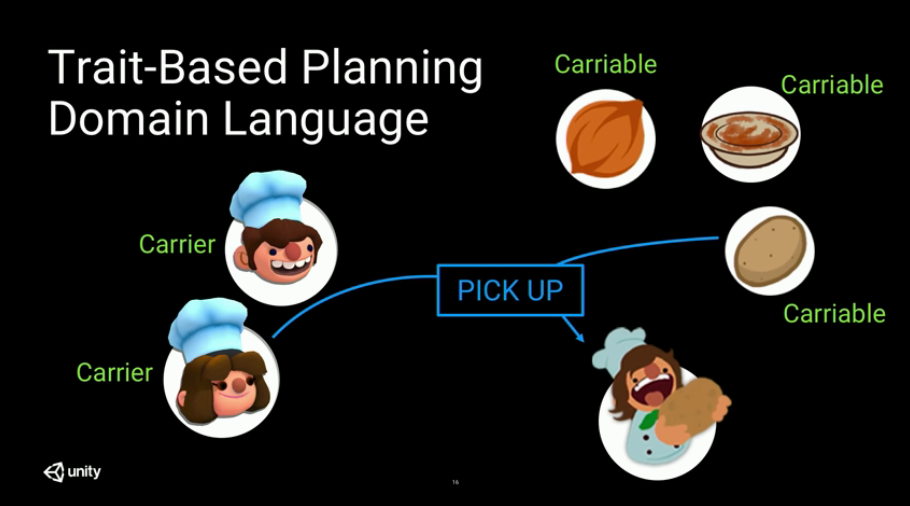

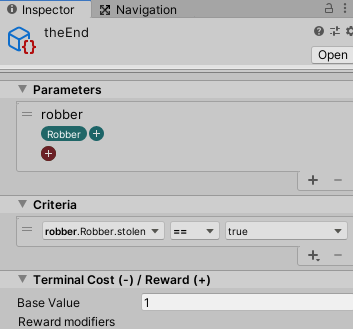

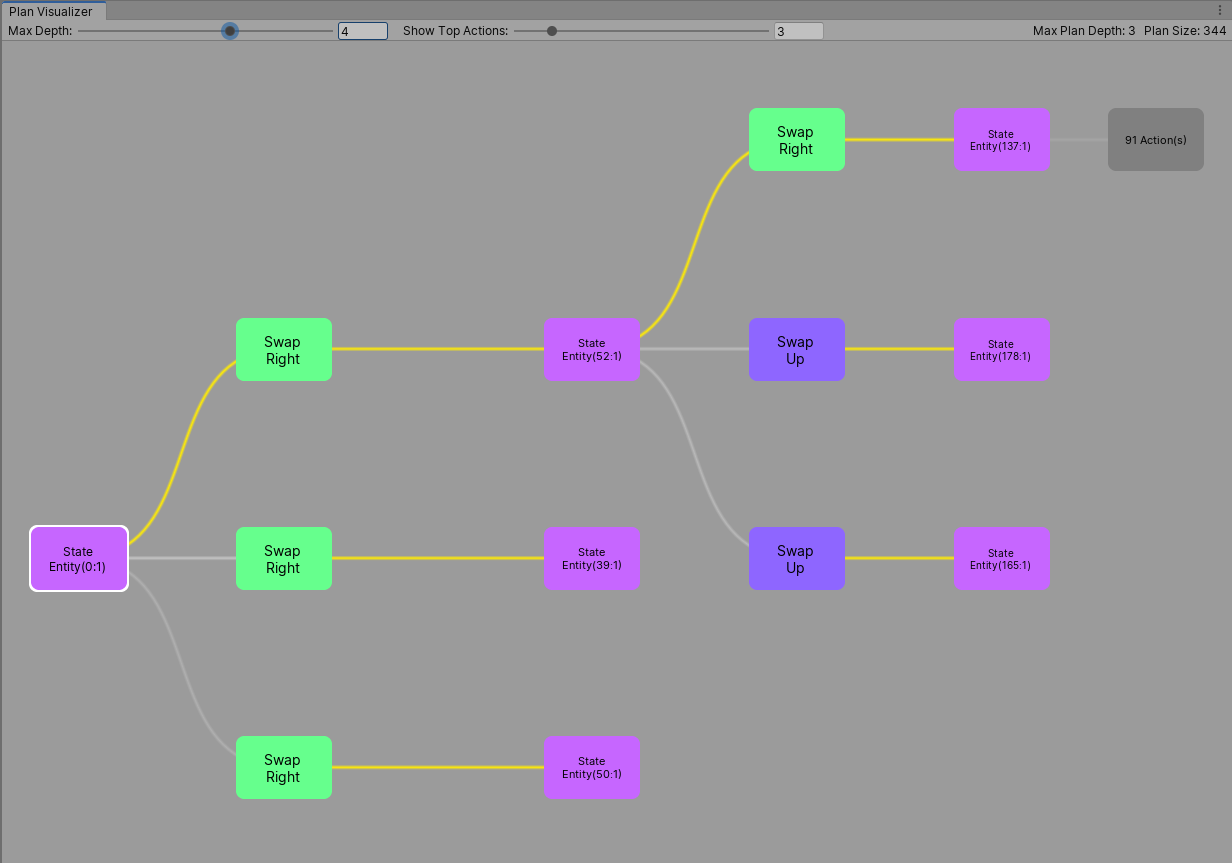

The AI Planner package can generate optimal plans for use in agent AI

- .red[Reference]
- It includes a plan visualizer

.cols5050[ .col1[

Create - AI - Trait- fundamental data (game state)

- quality of objects (components)

- contains attributes

- .blue[Valuable] (Treasure)

- .blue[Cop]:

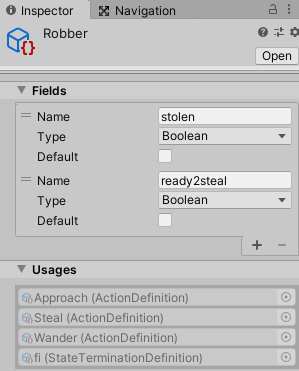

farAway (false) - .blue[Robber]:

ready2steal (false) stolen (false)

.cols5050[ .col1[

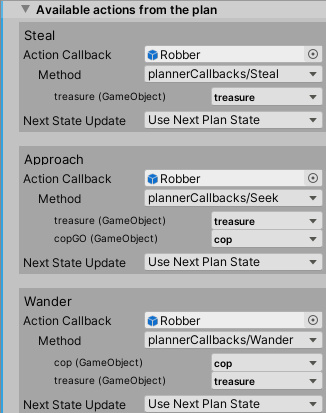

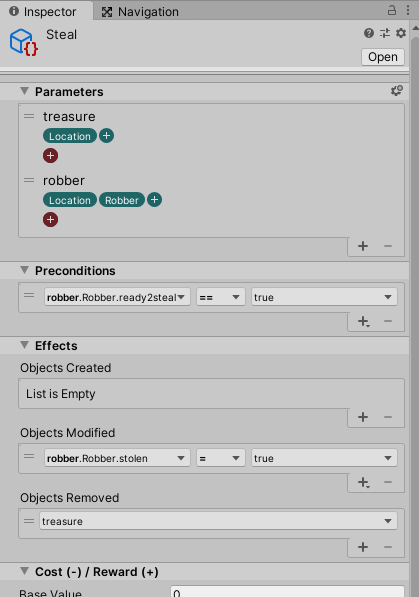

Create - AI - Planner - Action Definition: the planner's potential decisions

- it executes nothing by itself
- Properties: name, parameters, preconditions, effects, cost / reward

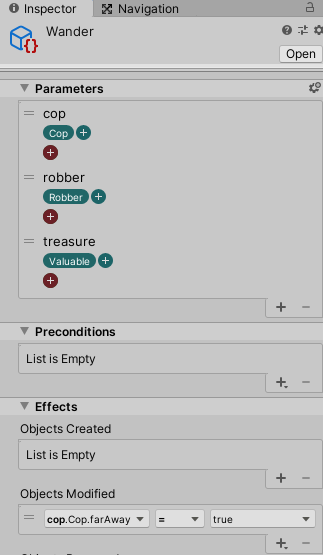

.blue[Wander]

.cols5050[ .col1[

.blue[Approach]

- parameters: cop, robber, treasure

- precondition: farAway == true
- effect: ready2steal = true

.blue[Steal]

- parameters: robber, treasure
- precondition: ready2steal == true
- effect: stolen = true, treasure removed ] .col2[ ]]

]]

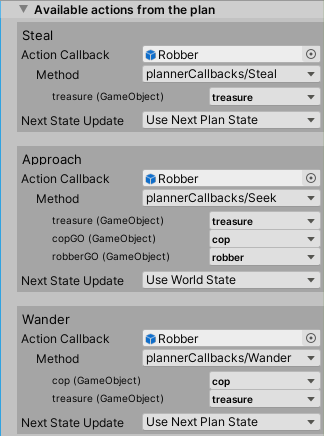

.cols5050[ .col1[

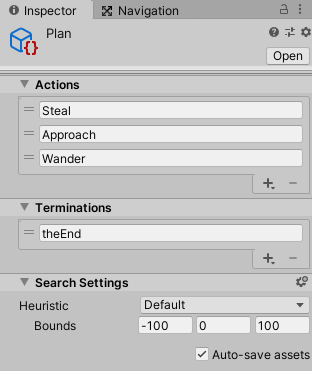

Create - AI - Planner - Plan Definition

Create - AI - Planner - State Termination Definition

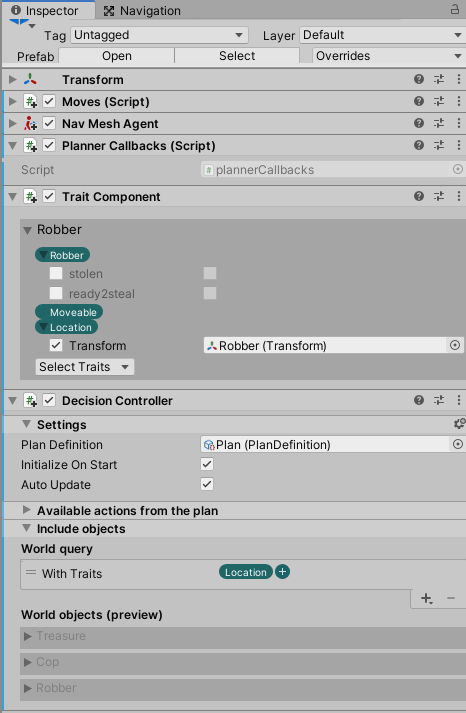

.cols5050[ .col1[

- Add Component - TraitComponent to the GameObjects
- Add Component - DecisionController to the AI agent GameObject
- Add the plan definition
- Add the world objects with traits
- Create and link the callbacks...

.cols5050[ .col1[

- ActionDefinitions are .blue[not] applied to the scene
- Applying them is the job of the action callbacks
- A .blue[coroutine] is the choice for actions that execute over multiple frames

.footnote[.red[*] made with .red[highlighting]]

Window - AI - Plan Visualizer

Example: .blue[Non-linear behaviour of the Robber]

- Go to Assets - Planner - Traits
- Create - Assembly Definition
- Inspector - Version Defines - Resource - generated.ai.planner.staterepresentation
- Approach: Cost/Reward: -1
- Steal: Cost/Reward: 5
- Wander: Cost/Reward: -2
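The way preconditions, effects and Cost/Reward combine into a plan can be illustrated outside Unity. This is a hypothetical sketch and not the AI Planner package's API. It uses the robber's traits and the slide's Cost/Reward values, read as rewards to maximize. It then searches depth-first for the highest-reward sequence that reaches the termination state `stolen == true`. Wander is assumed to have no precondition and no effect on traits, because its definition is not shown here.

```csharp
#nullable enable
using System;
using System.Collections.Generic;

// Trait values: Cop.farAway, Robber.ready2steal, Robber.stolen.
public readonly struct RobberState
{
    public readonly bool FarAway, Ready2Steal, Stolen;
    public RobberState(bool farAway, bool ready2Steal, bool stolen)
    {
        FarAway = farAway; Ready2Steal = ready2Steal; Stolen = stolen;
    }
}

public sealed class PlannerAction
{
    public string Name;
    public Func<RobberState, bool> Precondition;
    public Func<RobberState, RobberState> Effect;
    public float Reward;

    public PlannerAction(string name, Func<RobberState, bool> pre,
                         Func<RobberState, RobberState> effect, float reward)
    {
        Name = name; Precondition = pre; Effect = effect; Reward = reward;
    }
}

public static class RobberPlanner
{
    public static readonly List<PlannerAction> Actions = new List<PlannerAction>
    {
        new PlannerAction("Approach", s => s.FarAway,
            s => new RobberState(s.FarAway, true, s.Stolen), -1f),
        new PlannerAction("Steal", s => s.Ready2Steal,
            s => new RobberState(s.FarAway, s.Ready2Steal, true), 5f),
        // Assumption: Wander has no precondition and changes no trait.
        new PlannerAction("Wander", s => true, s => s, -2f),
    };

    // Best total reward among plans of at most maxDepth actions that reach Stolen == true.
    public static (List<string> Steps, float Reward)? Plan(RobberState start, int maxDepth = 3)
    {
        List<string>? best = null;
        float bestReward = float.NegativeInfinity;
        var current = new List<string>();

        void Search(RobberState s, float reward, int depth)
        {
            if (s.Stolen)   // termination state reached
            {
                if (reward > bestReward) { bestReward = reward; best = new List<string>(current); }
                return;
            }
            if (depth == maxDepth) return;
            foreach (var a in Actions)
            {
                if (!a.Precondition(s)) continue;
                current.Add(a.Name);
                Search(a.Effect(s), reward + a.Reward, depth + 1);
                current.RemoveAt(current.Count - 1);
            }
        }

        Search(start, 0f, 0);
        if (best == null) return null;
        return (best, bestReward);
    }
}
```

With the cop far away, the best plan is Approach then Steal (reward −1 + 5 = 4). With the cop nearby, no plan reaches the termination state within the depth limit.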

.cols5050[ .col1[

- Approach:

Method - roberGO

Next State Update

- Robber video

]

.col2[

]]

]]

.footnote[.red[*] made with .red[highlighting]]

class: left, middle, inverse

- .brown[Introduction]
- .brown[Finite State Machines]
- .brown[Decision Trees]
- .brown[Behaviour Trees]
- .brown[Planning Systems]
- .cyan[References]

- Ian Millington. AI for Games (3rd edition). CRC Press, 2019.
- Penny de Byl. Artificial Intelligence for Beginners. Unity Course.
- Chris Simpson. Behavior trees for AI: How they work. Gamasutra, 2014.
- Unity Technologies. AI Planner. 2020.
- Damian Isla. Handling Complexity in the Halo 2 AI. GDC, 2005.
- Ricard Pillosu. Previous year slides of the AI course, 2019.