Hand Gesture Recognition

This simple sample project recognizes hands in real time. 👋 It serves as a basic example for recognizing your own objects and is suitable for AR. 🤓 It was written for the tutorial “Create your own Object Recognizer”.

Tech: iOS 11, ARKit, Core ML, iPhone 7 Plus, Xcode 9.1, Swift 4.0

Notes:

This demonstrates basic object recognition, classifying the camera image as a spread hand 🖐, a fist 👊, or no hand ❎. It serves as a building block for object detection, localization, gesture recognition, and hand tracking.

Disclaimer:

The sample model provided here was trained on images captured in one hour and is biased toward one human hand 👋🏼. It’s intended as a placeholder for your own models (see the tutorial).

Steps Taken (Overview)

Here’s an overview of the steps taken. (You can also browse my commit history to see each step.)

- Build an intuition by playing with Google Creative Lab's Teachable Machine.

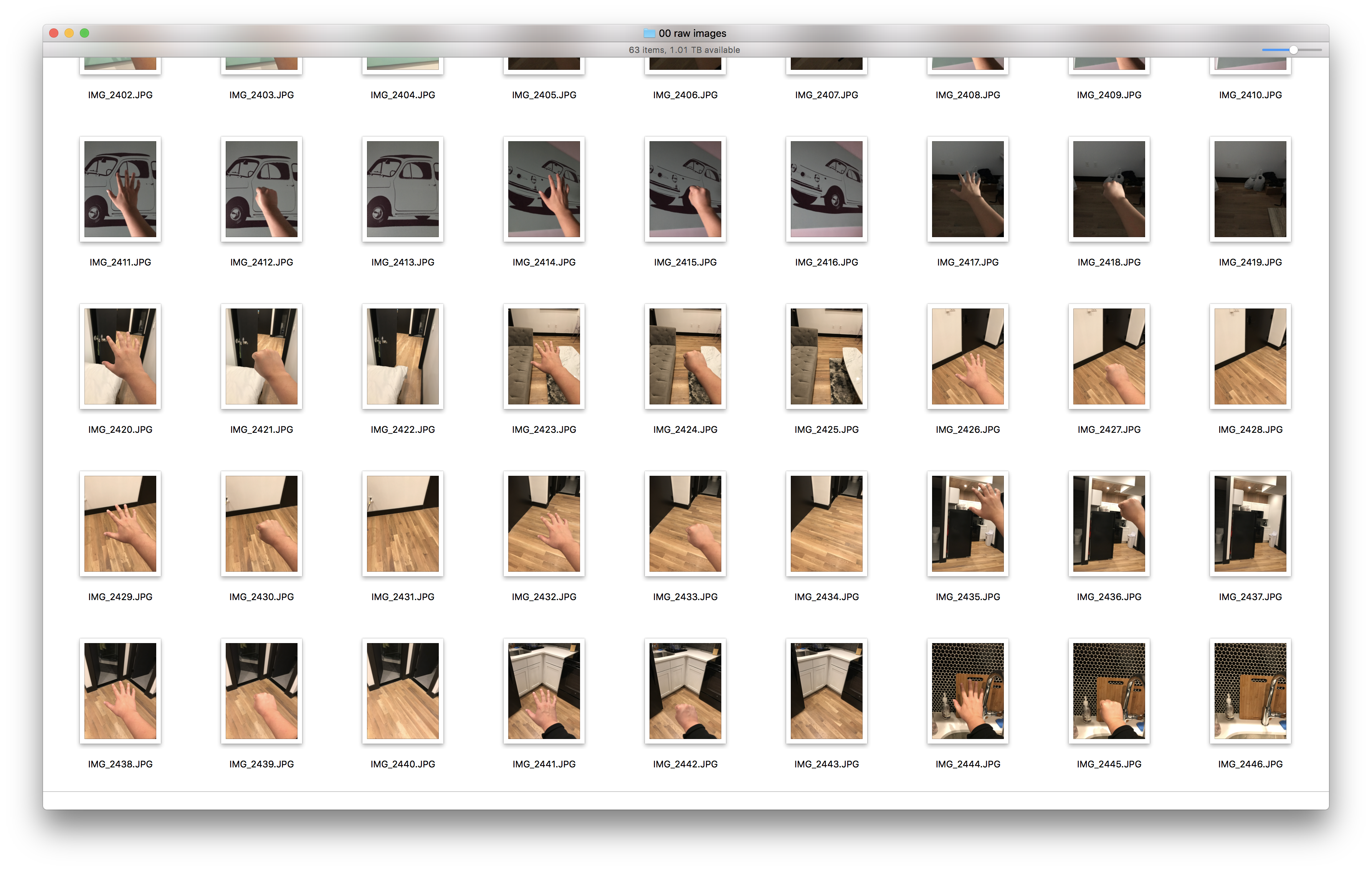

- Build a dataset.

- Create a Core ML model using Microsoft's CustomVision.ai.

- Run the model in real time with ARKit.
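One common way to run a Core ML classifier on ARKit frames (an assumption here, not necessarily this project's exact code) is to pass each frame's `ARFrame.capturedImage` to Vision's `VNCoreMLRequest`; for a classifier, the results are `VNClassificationObservation` values, each carrying an `identifier` and a `confidence`. The sketch below covers only the platform-independent step that follows: picking the best label and mapping it to one of the three gestures. It uses a small `Prediction` struct as a stand-in for the Vision type so it compiles without Apple frameworks. The tag names `"spread"`, `"fist"`, and `"no-hand"` and the 0.6 threshold are hypothetical placeholders; substitute the tags from your own CustomVision.ai project.

```swift
// Hypothetical gesture classes mirroring the three classes in this sample.
// The raw values are placeholder tag names, not the sample model's real labels.
enum Gesture: String {
    case spreadHand = "spread"
    case fist = "fist"
    case noHand = "no-hand"

    var emoji: String {
        switch self {
        case .spreadHand: return "🖐"
        case .fist: return "👊"
        case .noHand: return "❎"
        }
    }
}

// Stand-in for Vision's VNClassificationObservation (identifier + confidence).
struct Prediction {
    let identifier: String
    let confidence: Float
}

/// Returns the highest-confidence gesture, or nil when the best score is
/// below `threshold` or its identifier is not a known tag.
func topGesture(from predictions: [Prediction], threshold: Float = 0.6) -> Gesture? {
    guard let best = predictions.max(by: { $0.confidence < $1.confidence }),
          best.confidence >= threshold else {
        return nil
    }
    return Gesture(rawValue: best.identifier)
}

// Example: made-up scores for a single frame.
let frameScores = [
    Prediction(identifier: "fist", confidence: 0.82),
    Prediction(identifier: "spread", confidence: 0.15),
    Prediction(identifier: "no-hand", confidence: 0.03),
]
if let gesture = topGesture(from: frameScores) {
    print(gesture.emoji)  // prints "👊"
}
```

Returning `nil` below the threshold lets the UI show nothing rather than a low-confidence guess; where to set the threshold is a judgment call for your own model.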

P.S. A few well-selected images are sufficient for CustomVision.ai. For the sample model here, I did three rounds of data collection, adding 63, 38, and 21 images respectively (122 in total). Alternating between classes during data collection also appeared to work better than capturing all images for one class before moving on to the next.
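To illustrate the difference between alternating classes and capturing one class at a time, the hypothetical helper below builds an interleaved capture order for a single round. The class names and the per-class count are placeholders; only the round sizes (63, 38, 21) come from the sample model described above, and those rounds were not necessarily split evenly across classes.

```swift
// Hypothetical helper: builds a capture order that cycles through the classes
// (A, B, C, A, B, C, ...) instead of capturing every image of one class first.
func alternatingSchedule(classes: [String], imagesPerClass: Int) -> [String] {
    var order: [String] = []
    // max(_, 0) guards against a negative count, which would trap in 0..<n.
    for _ in 0..<max(imagesPerClass, 0) {
        order.append(contentsOf: classes)
    }
    return order
}

// Placeholder class names for the three gestures.
let classes = ["spread", "fist", "no-hand"]
let captureOrder = alternatingSchedule(classes: classes, imagesPerClass: 2)
print(captureOrder)
// prints ["spread", "fist", "no-hand", "spread", "fist", "no-hand"]

// Total images across the three rounds used for the sample model.
let imagesPerRound = [63, 38, 21]
print(imagesPerRound.reduce(0, +))  // prints 122
```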

License

MIT Open Source License. 🧞 Use as you wish. Have fun! 😁