Improving GANs with A Dynamic Discriminator

Ceyuan Yang*, Yujun Shen*, Yinghao Xu, Deli Zhao, Bo Dai, Bolei Zhou

arXiv preprint arXiv:2209.09897

[Paper] [Project Page]

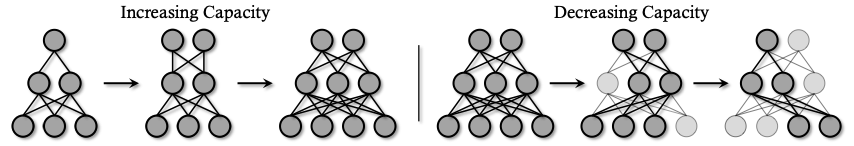

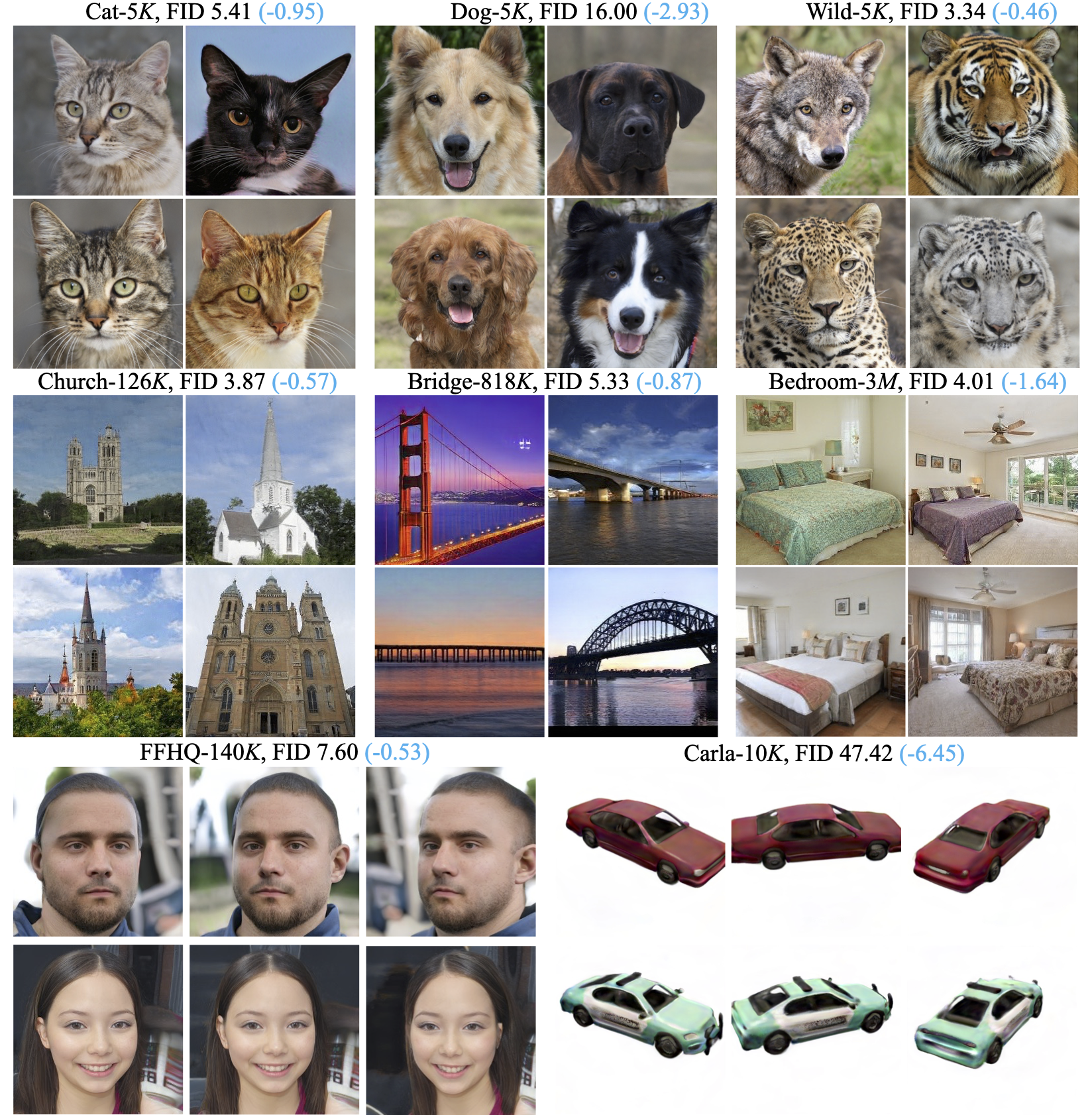

This work aims to adjust the capacity of a discriminator on-the-fly to better accommodate the time-varying binary classification task. A comprehensive empirical study confirms that the proposed training strategy, termed DynamicD, improves synthesis performance without incurring any additional computation cost or training objectives. Two capacity adjusting schemes are developed for training GANs under different data regimes: i) given a sufficient amount of training data, the discriminator benefits from a progressively increased learning capacity, and ii) when the training data is limited, gradually decreasing the layer width mitigates over-fitting of the discriminator. Experiments on both 2D and 3D-aware image synthesis tasks, conducted on a range of datasets, substantiate the generalizability of DynamicD as well as its substantial improvement over the baselines. Furthermore, DynamicD is synergistic with other discriminator-improving approaches (including data augmentation, regularizers, and pre-training) and brings further performance gains when combined with them for learning GANs.
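The abstract describes changing discriminator capacity through layer width. The snippet below is a minimal sketch of that idea, not the repository's implementation: a narrower discriminator reuses a leading slice of the full weight matrices, so a smaller capacity needs no extra parameters. For brevity it uses fully connected layers in plain Python instead of the convolutional StyleGAN2 discriminator; slicing the *first* k units is an assumption, and `linear_forward` and `discriminator_logit` are hypothetical names.

```python
from typing import List

Matrix = List[List[float]]


def linear_forward(weight: Matrix, x: List[float],
                   out_ratio: float, in_ratio: float) -> List[float]:
    """Fully connected layer that uses only a leading slice of its weights.

    `weight` has shape [out_features][in_features]. Only the first k_out rows
    and the first k_in columns are used (slicing convention is an assumption).
    """
    k_out = max(1, round(out_ratio * len(weight)))
    k_in = max(1, round(in_ratio * len(weight[0])))
    if len(x) != k_in:
        raise ValueError(f"expected {k_in} inputs, got {len(x)}")
    return [sum(w * v for w, v in zip(row[:k_in], x)) for row in weight[:k_out]]


def discriminator_logit(w1: Matrix, w2: Matrix, x: List[float], ratio: float) -> float:
    """Two-layer toy discriminator whose hidden width is scaled by `ratio`."""
    hidden = linear_forward(w1, x, out_ratio=ratio, in_ratio=1.0)
    hidden = [max(0.0, h) for h in hidden]  # ReLU
    # The output layer consumes only the active hidden units; the logit count stays 1.
    return linear_forward(w2, hidden, out_ratio=1.0, in_ratio=ratio)[0]


# Toy weights: 2 inputs -> 4 hidden units -> 1 logit.
W1 = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [-1.0, 1.0]]
W2 = [[1.0, 1.0, 1.0, 1.0]]
x = [2.0, 3.0]

print(discriminator_logit(W1, W2, x, ratio=1.0))  # full width: 4 hidden units
print(discriminator_logit(W1, W2, x, ratio=0.5))  # half width: 2 hidden units
```

Because the input size and the single output logit stay fixed, the ratio only changes the hidden width, which is what would allow capacity to change during training without rebuilding the network.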

Here we provide synthesized samples together with their FID scores. Numbers in blue highlight the improvements over the baselines.

This repository is built on StyleGAN2-ADA (`stylegan2-ada-pytorch`). In particular, we introduce several options that control the on-the-fly capacity adjustment:

- `--occupy_start` specifies the capacity ratio at the beginning of training.
- `--occupy_end` specifies the capacity ratio at the end of training.
- `--randomly_select` determines whether to randomly sample a subnet.
- `--keepd` determines the layers to be excluded from the adjusting strategy.
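The two ratio options can be read as the endpoints of a capacity schedule. The sketch below assumes linear interpolation over normalized training progress, and it interprets `--randomly_select` as choosing between a random subset of channels and the leading channels. Both readings are assumptions for illustration; `capacity_ratio` and `select_channels` are hypothetical helpers, not functions from the repository.

```python
import random
from typing import List, Optional


def capacity_ratio(progress: float, occupy_start: float, occupy_end: float) -> float:
    """Capacity ratio at training progress in [0, 1].

    Linear interpolation between the two endpoints is an assumption of this
    sketch; the repository may use a different schedule.
    """
    progress = min(max(progress, 0.0), 1.0)  # clamp to [0, 1]
    return occupy_start + (occupy_end - occupy_start) * progress


def select_channels(num_channels: int, ratio: float, randomly_select: bool,
                    rng: Optional[random.Random] = None) -> List[int]:
    """Indices of the channels that stay active at the given ratio."""
    k = max(1, round(ratio * num_channels))
    if randomly_select:
        # Random subnet: sample k distinct channel indices (assumed meaning).
        rng = rng or random.Random()
        return sorted(rng.sample(range(num_channels), k))
    # Deterministic subnet: keep the leading k channels.
    return list(range(k))


# Increasing strategy: --occupy_start 0.5 --occupy_end 1.0
print(capacity_ratio(0.5, 0.5, 1.0))  # 0.75 halfway through training
# Decreasing strategy with random subnets: --occupy_start 1.0 --occupy_end 0.5
ratio = capacity_ratio(0.5, 1.0, 0.5)
print(select_channels(8, ratio, randomly_select=True, rng=random.Random(0)))
```

When and how often a random subnet would be resampled (for example once per iteration) is not specified above, so the sketch leaves that to the caller.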

For instance, adding the following options to the training command enables the increasing strategy:

`--occupy_start 0.5 --occupy_end 1.0`

while options like the following enable the decreasing strategy:

`--occupy_start 1.0 --occupy_end 0.5 --randomly_select TRUE --keepd 9`
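How `--keepd 9` maps onto specific discriminator layers is not spelled out here. Purely as a hypothetical reading, the sketch below treats `keepd` as a layer index: layers before it follow the capacity ratio, while layers from index `keepd` onward are excluded from adjustment and keep their full width. The function `active_widths` and the layer widths are made up for illustration.

```python
from typing import List


def active_widths(full_widths: List[int], ratio: float, keepd: int) -> List[int]:
    """Number of active channels per discriminator layer.

    HYPOTHETICAL reading of --keepd: layers with index >= keepd are excluded
    from the adjusting strategy and always keep their full width.
    """
    widths = []
    for index, full in enumerate(full_widths):
        if index >= keepd:
            widths.append(full)  # excluded layer: full width
        else:
            widths.append(max(1, round(ratio * full)))  # adjusted layer
    return widths


# Made-up widths for a small 4-layer discriminator.
full = [64, 128, 256, 512]
# End of the decreasing strategy (ratio 0.5) with only the first two layers adjusted.
print(active_widths(full, 0.5, keepd=2))  # [32, 64, 256, 512]
```

Under this reading, `--keepd 9` would apply the same rule to a deeper discriminator, leaving every layer from index 9 onward at full width.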

TODO list:

- Pretrained weights
- Training code verification

This work is made available under the NVIDIA Source Code License.

@article{yang2022improving,
  title   = {Improving GANs with A Dynamic Discriminator},
  author  = {Yang, Ceyuan and Shen, Yujun and Xu, Yinghao and Zhao, Deli and Dai, Bo and Zhou, Bolei},
  journal = {arXiv preprint arXiv:2209.09897},
  year    = {2022}
}