Sicheng Mo1*, Fangzhou Mu2*, Kuan Heng Lin1, Yanli Liu3, Bochen Guan3, Yin Li2, Bolei Zhou1

1 UCLA, 2 University of Wisconsin-Madison, 3 Innopeak Technology, Inc

* Equal contribution

Computer Vision and Pattern Recognition (CVPR), 2024

Environment Setup

- We provide a conda env file for environment setup.

conda env create -f environment.yml

conda activate freecontrol

Sample Semantic Bases

- We provide two example files under the scripts folder that show how to compute target semantic bases.

- You can also download our pre-computed bases from Google Drive.

- After downloading the file, put it under the dataset folder so the Gradio demo can use it.
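
Before starting the demo, it can help to confirm that the bases actually landed in the dataset folder. The snippet below is a minimal sketch and not part of the repository: the helper name `list_basis_files` is hypothetical, it assumes the dataset folder sits in the directory you run it from, and it makes no assumption about the file names or format of the bases. It only lists whatever files it finds.

```python
from pathlib import Path


def list_basis_files(dataset_dir="dataset"):
    """Return sorted relative paths of all regular files under dataset_dir.

    Hypothetical helper: "dataset" is assumed to be relative to the
    current working directory. A missing folder yields an empty list.
    """
    root = Path(dataset_dir)
    if not root.is_dir():
        return []
    return sorted(str(p.relative_to(root)) for p in root.rglob("*") if p.is_file())


if __name__ == "__main__":
    files = list_basis_files()
    if files:
        print(f"Found {len(files)} file(s) under dataset/:")
        for name in files:
            print("  ", name)
    else:
        print("No files found under dataset/; download or compute the bases first.")
```

If the list comes back empty, either the download went to a different location or the script was run from outside the repository root.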

Gradio demo

- We provide a user interface for testing our method. Run the following command to start the demo.

python gradio_app.py

We are building a gallery of images generated with FreeControl. You are welcome to share your generated images with us.

@article{mo2023freecontrol,

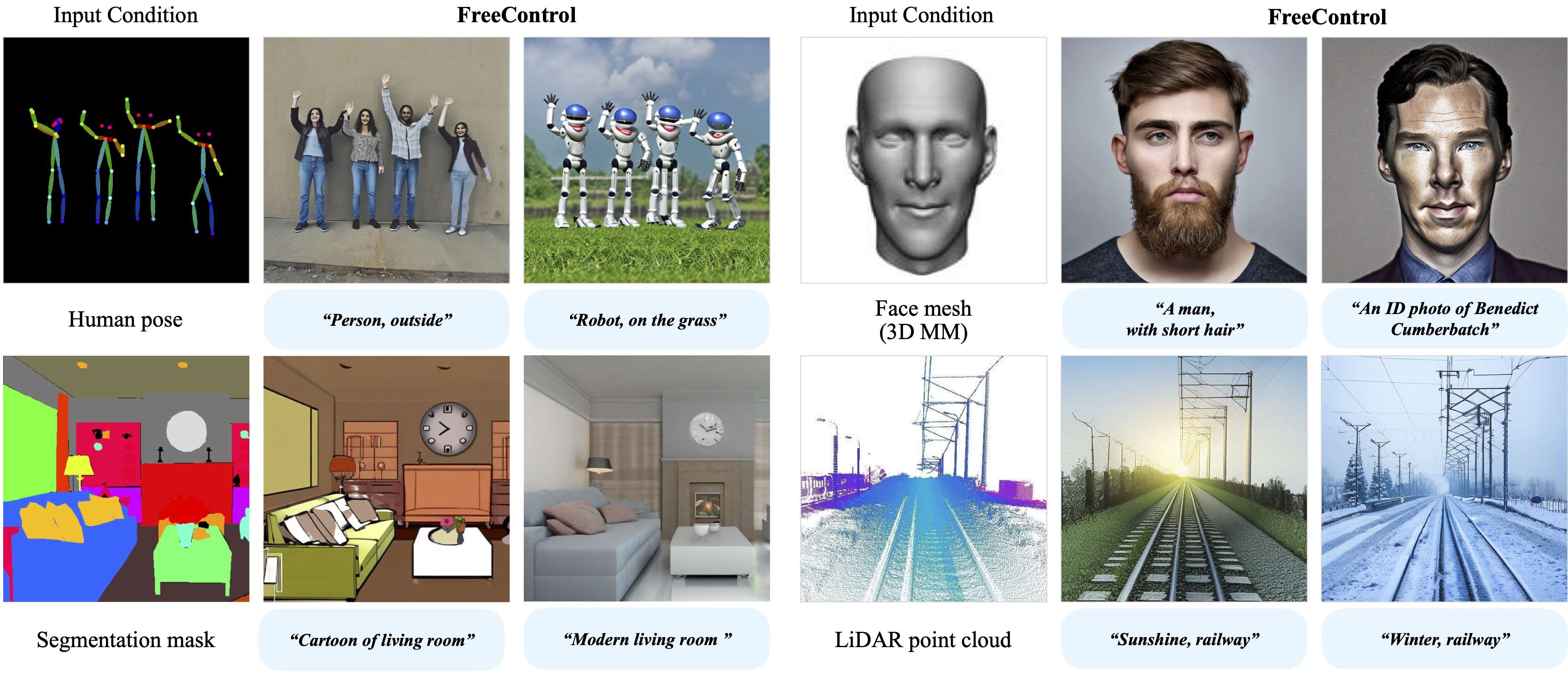

title={FreeControl: Training-Free Spatial Control of Any Text-to-Image Diffusion Model with Any Condition},

author={Mo, Sicheng and Mu, Fangzhou and Lin, Kuan Heng and Liu, Yanli and Guan, Bochen and Li, Yin and Zhou, Bolei},

journal={arXiv preprint arXiv:2312.07536},

year={2023}

}