This repository contains our project studying the paper "A Variational Analysis of Stochastic Gradient Algorithms".

First, move to the project root directory:

cd ./VSGA

Then install the requirements with pip:

pip install -r requirements.txt

Every command below is run from the project root directory.

You can run any part of our experiments by executing the command given in the corresponding section below. Note that an important part of our experiments is done in Jupyter notebooks (task 4).

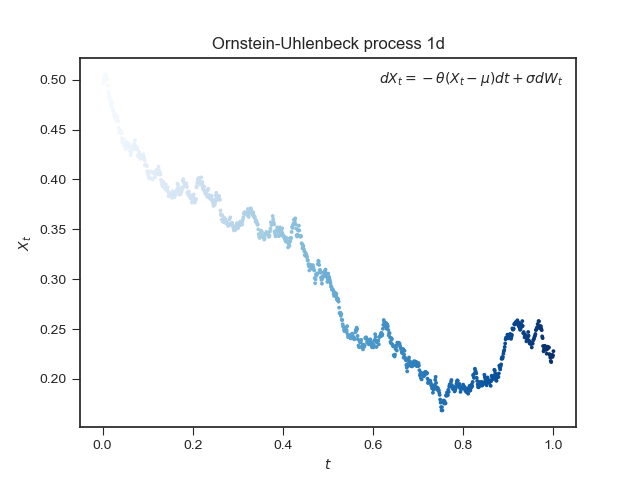

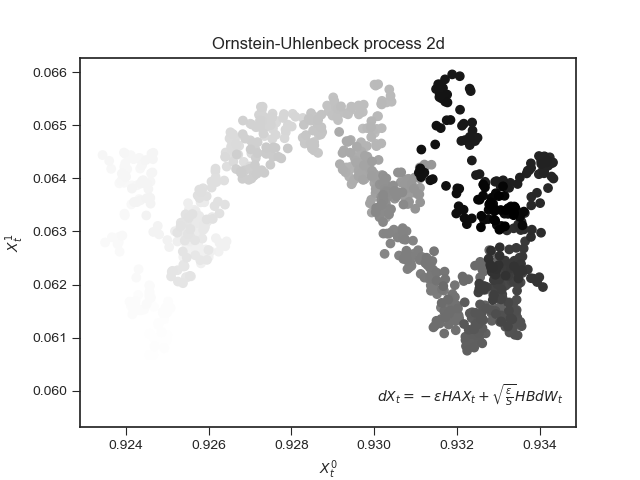

An Ornstein-Uhlenbeck (OU) process is a continuous-time, mean-reverting stochastic process (it behaves like a mechanical spring pulling back toward its mean) commonly used in finance. In the paper we study, the authors model SGD as an OU process. This interpretation yields interesting use cases for Bayesian inference and hyper-parameter optimization. The two figures above show a realization of a 1D OU process (image 1) and of a 2D OU process (image 2).

These figures can be generated with the following command:

python3 ou_process.py
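For readers who want to see how such a path is produced, here is a minimal sketch, assuming the standard OU dynamics dX = -θ(X - μ) dt + σ dW discretized with the Euler-Maruyama scheme. The function `simulate_ou` and all parameter values are hypothetical illustrations; `ou_process.py` may use a different discretization or different parameters.

```python
import numpy as np

def simulate_ou(theta, mu, sigma, x0, dt, n_steps, rng=None):
    """Euler-Maruyama discretization of dX = -theta * (X - mu) dt + sigma dW.

    x0 may be a scalar (1D process) or a vector (one independent OU per coordinate).
    Returns an array of shape (n_steps + 1, dim).
    """
    rng = np.random.default_rng() if rng is None else rng
    x0 = np.atleast_1d(np.asarray(x0, dtype=float))
    path = np.empty((n_steps + 1, x0.size))
    path[0] = x0
    for t in range(n_steps):
        drift = -theta * (path[t] - mu)          # pull back toward the mean mu
        noise = rng.standard_normal(x0.size)     # Brownian increment / sqrt(dt)
        path[t + 1] = path[t] + drift * dt + sigma * np.sqrt(dt) * noise
    return path

rng = np.random.default_rng(0)
path_1d = simulate_ou(theta=1.0, mu=0.0, sigma=0.5, x0=3.0, dt=0.01, n_steps=5000, rng=rng)
path_2d = simulate_ou(theta=1.0, mu=0.0, sigma=0.5, x0=[3.0, -2.0], dt=0.01, n_steps=5000, rng=rng)
print(path_1d.shape, path_2d.shape)
```

Starting far from the mean (x0 = 3) makes the spring-like reversion visible before the path settles into fluctuations of variance roughly σ²/(2θ).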

Each task is run with the following command, where ID is 01, 02 or 03:

cd ./tasks && python3 taskID.py

Task 1 refers to the section of the paper about hyper-parameter optimization (§4.2). We aim to optimize the hyper-parameters by optimizing an objective derived from the posterior distribution. We use softmax regression on MNIST (50000 items for training, 10000 for validation, 784 features per item). Note that for this task, important implementation details were not found in the paper; therefore, our results may differ significantly from those of the original paper.
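The paper's analysis concerns SGD run with a constant learning rate, whose iterates, after a burn-in period, fluctuate around the optimum according to a stationary distribution. The sketch below shows that building block for L2-regularized softmax regression on small synthetic data (not MNIST). The function `constant_sgd_softmax` and all hyper-parameter values (`lr`, `batch_size`, `l2`, `burn_in`) are hypothetical, and the sketch does not include the hyper-parameter update of §4.2.

```python
import numpy as np

def softmax(z):
    z = z - z.max(axis=1, keepdims=True)  # subtract row max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def constant_sgd_softmax(X, y, n_classes, lr=0.1, batch_size=32, l2=1e-3,
                         n_iters=2000, burn_in=1000, rng=None):
    """Constant-learning-rate minibatch SGD on L2-regularized softmax regression.

    Returns the flattened weight iterates collected after burn_in; their empirical
    mean and covariance describe the stationary distribution of SGD.
    """
    rng = np.random.default_rng() if rng is None else rng
    n, d = X.shape
    W = np.zeros((d, n_classes))
    Y = np.eye(n_classes)[y]                      # one-hot labels
    samples = []
    for t in range(n_iters):
        idx = rng.choice(n, size=batch_size, replace=False)
        P = softmax(X[idx] @ W)                   # predicted class probabilities
        grad = X[idx].T @ (P - Y[idx]) / batch_size + l2 * W
        W = W - lr * grad                         # the learning rate is never decayed
        if t >= burn_in:
            samples.append(W.ravel().copy())
    return np.array(samples)

rng = np.random.default_rng(0)
X = rng.standard_normal((500, 5))
y = np.argmax(X @ rng.standard_normal((5, 3)), axis=1)   # synthetic 3-class labels
samples = constant_sgd_softmax(X, y, n_classes=3, rng=rng)
W_mean = samples.mean(axis=0).reshape(5, 3)
acc = np.mean(np.argmax(X @ W_mean, axis=1) == y)
print(samples.shape, acc)
```

`np.cov(samples, rowvar=False)` then gives the empirical covariance of the SGD iterates, the quantity that the paper's analysis relates to the posterior.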

Task 2 is identical to task 1, except that we use the ForestCoverType dataset (464810 items for training, 116202 for validation, 54 categorical and continuous features). We apply a normalization to the categorical features.
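The exact normalization used is not specified here; the sketch below assumes a z-score standardization of a chosen set of columns, with the mean and standard deviation computed on the training split only and reused for the validation split. `standardize_columns` and the column indices are hypothetical.

```python
import numpy as np

def standardize_columns(X_train, X_val, cols):
    """Z-score the columns `cols` using statistics from the training split only."""
    X_train = np.asarray(X_train, dtype=float).copy()
    X_val = np.asarray(X_val, dtype=float).copy()
    mean = X_train[:, cols].mean(axis=0)
    std = X_train[:, cols].std(axis=0)
    std[std == 0] = 1.0  # constant columns: avoid dividing by zero
    X_train[:, cols] = (X_train[:, cols] - mean) / std
    X_val[:, cols] = (X_val[:, cols] - mean) / std
    return X_train, X_val

rng = np.random.default_rng(0)
X_train = rng.integers(0, 5, size=(100, 4))
X_val = rng.integers(0, 5, size=(20, 4))
X_train_n, X_val_n = standardize_columns(X_train, X_val, cols=[0, 1])
```

Reusing the training statistics on the validation split keeps validation data from leaking into preprocessing.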

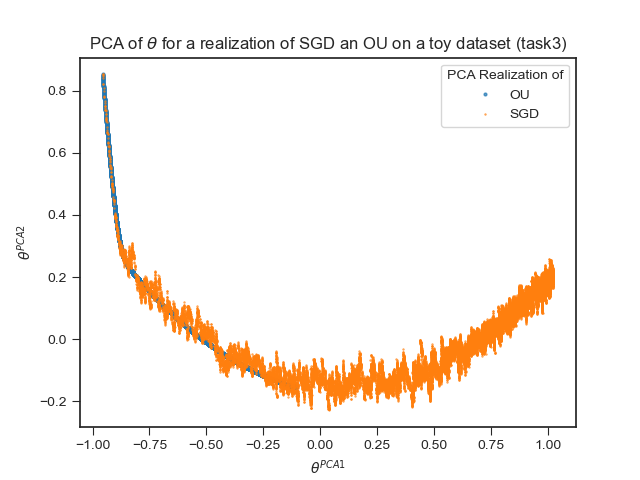

For task 3, we use the same pipeline, but apply it to a toy dataset from scikit-learn. The goal of this task is to visualize the SGD iterates alongside a realization of the OU process.

We use the same parameters.
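To make the link between SGD and the OU process concrete, consider a 1D quadratic loss f(θ) = aθ²/2 whose gradients are corrupted by Gaussian noise of standard deviation s. With a constant learning rate ε, the OU approximation dθ = -εaθ dt + εs dW predicts a stationary variance of εs²/(2a), while the exact stationary variance of the discrete recursion is εs²/(a(2 - εa)); the two agree when εa is small. The sketch below checks this numerically on a synthetic quadratic rather than the scikit-learn toy dataset; `sgd_on_quadratic` and the values of `a`, `s` and `lr` are hypothetical.

```python
import numpy as np

def sgd_on_quadratic(a, s, lr, n_iters, theta0=0.0, rng=None):
    """Constant-step SGD on f(theta) = 0.5 * a * theta**2 with Gaussian gradient noise."""
    rng = np.random.default_rng() if rng is None else rng
    thetas = np.empty(n_iters)
    theta = theta0
    for t in range(n_iters):
        grad = a * theta + s * rng.standard_normal()  # noisy gradient
        theta = theta - lr * grad
        thetas[t] = theta
    return thetas

a, s, lr = 2.0, 1.0, 0.01
thetas = sgd_on_quadratic(a, s, lr, n_iters=200_000, theta0=1.0,
                          rng=np.random.default_rng(0))
empirical_var = thetas[10_000:].var()             # discard burn-in iterations
ou_var = lr * s**2 / (2 * a)                      # OU (continuous-time) prediction
exact_var = lr * s**2 / (a * (2 - lr * a))        # exact variance of the discrete AR(1) recursion
print(empirical_var, ou_var, exact_var)
```

Increasing `lr` until `lr * a` is no longer small shows where the OU approximation starts to drift away from the exact discrete variance.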

For task 4, we use the Red Wine Quality dataset and try to predict the quality of a red wine from some of its chemical properties using a linear model. The whole pipeline of the experiment is in the notebook linear_model.ipynb, where you can follow the experiment and execute the cells step by step. The code for the experiment is in ./variational_sgd/.
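As we understand the paper, the stationary covariance Σ of the OU process approximating SGD solves the Lyapunov equation AΣ + ΣAᵀ = (ε/S) BBᵀ, where A is the Hessian of the average loss at the optimum, BBᵀ the covariance of the per-sample gradient noise, ε the learning rate and S the minibatch size. How well constant SGD approximates the posterior can then be measured by the KL divergence between this Gaussian and the posterior. The sketch below does this for linear regression, assuming Gaussian observation noise with known variance, a flat prior (so the posterior covariance is (NA)⁻¹) and synthetic data instead of the Red Wine dataset; `kl_gaussians`, `sgd_stationary_cov` and all numeric values are hypothetical and may not match the code in ./variational_sgd/.

```python
import numpy as np
from scipy.linalg import solve_continuous_lyapunov

def kl_gaussians(mu0, S0, mu1, S1):
    """KL( N(mu0, S0) || N(mu1, S1) ) for k-dimensional Gaussians."""
    k = mu0.size
    S1_inv = np.linalg.inv(S1)
    diff = mu1 - mu0
    _, logdet0 = np.linalg.slogdet(S0)
    _, logdet1 = np.linalg.slogdet(S1)
    return 0.5 * (np.trace(S1_inv @ S0) + diff @ S1_inv @ diff - k + logdet1 - logdet0)

def sgd_stationary_cov(A, C, lr, batch_size):
    """Solve A S + S A^T = (lr / batch_size) C for the OU stationary covariance S."""
    return solve_continuous_lyapunov(A, (lr / batch_size) * C)

rng = np.random.default_rng(0)
N, d, noise_var = 1000, 3, 0.25
X = rng.standard_normal((N, d))
y = X @ np.array([1.0, -2.0, 0.5]) + np.sqrt(noise_var) * rng.standard_normal(N)

w_hat = np.linalg.lstsq(X, y, rcond=None)[0]          # optimum of the average loss
A = X.T @ X / (N * noise_var)                          # Hessian of the average negative log-likelihood
per_sample_grads = -(y - X @ w_hat)[:, None] * X / noise_var
C = np.cov(per_sample_grads, rowvar=False)             # gradient-noise covariance at the optimum
posterior_cov = np.linalg.inv(N * A)                   # flat-prior Gaussian posterior covariance

S = sgd_stationary_cov(A, C, lr=0.05, batch_size=10)
print(kl_gaussians(w_hat, S, w_hat, posterior_cov))
```

Re-running the last two lines with different `lr` or `batch_size` values shows how these hyper-parameters move the SGD stationary distribution relative to the posterior.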
