Trying to understand trends in the latest AI papers.

You need either Docker or, for a local installation:

- Python 3.11+

- Poetry

Note: the Poetry installation is currently not working because of a bug when installing fasttext.

To make it easier to run the code, with or without Docker, I created a few helpers. Both approaches use start_here.sh as the entry point. Because calling the individual scripts has a few quirks, this file contains all the commands needed to run the code. Just uncomment the relevant lines and run the script:

cluster_conferences=1

find_words_usage_over_conf=1

You first need to install Python Poetry. Then, you can install the dependencies and run the code:

poetry install

bash start_here.sh

To help with the Docker setup, I created a Dockerfile and a Makefile. The Dockerfile contains all the instructions to create the Docker image. The Makefile contains the commands to build the image, run the container, and run the code inside the container. To build the image, simply run:

make

To call start_here.sh inside the container, run:

make run

The data used in this project is the result of running AI Papers Search Tool. We need both the data/ and model_data/ directories.

All the work is done based on the abstracts of the papers. It uses the fasttext library to build paper representations, t-SNE to reduce the dimensionality of the data, and k-means to cluster the papers.
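The pipeline described above can be sketched roughly as follows. This is a minimal illustration, not the project's actual code: random vectors stand in for the fasttext paper representations, scikit-learn is assumed for t-SNE and k-means, and the variable names, cluster count, and perplexity are all made up for the example. Clustering the 2-D t-SNE output (rather than the original vectors) is also an assumption based on the order of the steps in the text.

```python
import numpy as np
from sklearn.cluster import KMeans
from sklearn.manifold import TSNE

# Stand-in for fasttext paper vectors: 60 "papers" with 50 dimensions,
# drawn around three well-separated centers so the groups are easy to find.
# With a real fasttext model, each row could instead come from something
# like model.get_sentence_vector(abstract).
rng = np.random.default_rng(0)
centers = rng.normal(scale=5.0, size=(3, 50))
paper_vectors = np.vstack([c + rng.normal(size=(20, 50)) for c in centers])

# Reduce the vectors to 2-D with t-SNE.
# The perplexity must be smaller than the number of samples.
coords = TSNE(
    n_components=2, perplexity=10, init="random", random_state=0
).fit_transform(paper_vectors)

# Cluster the 2-D points with k-means (assumed k=3 for this toy data).
labels = KMeans(n_clusters=3, n_init=10, random_state=0).fit_predict(coords)

print(coords.shape, labels[:10])
```

Each paper ends up with a 2-D coordinate for plotting and a cluster label for grouping.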

This script clusters the papers from a specific conference/year.

This script clusters the words from a specific conference/year.

This script clusters the papers that contain a specific word or similar words.
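As a rough illustration of the "specific word or similar words" selection, the sketch below picks words whose vectors are close to a query word and then keeps the papers whose abstracts mention any of them. The toy vectors, the 0.9 threshold, and the function names are all hypothetical; in the project the vectors would presumably come from the fasttext model, and the selected papers would then go through the clustering step.

```python
import math

# Hypothetical toy word vectors; real ones would come from fasttext.
word_vectors = {
    "transformer": [0.9, 0.1, 0.0],
    "attention": [0.8, 0.2, 0.1],
    "convolution": [0.1, 0.9, 0.0],
    "bandit": [0.0, 0.1, 0.9],
}


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def similar_words(query, vectors, threshold=0.9):
    """Return the query word plus every word at least `threshold` similar."""
    target = vectors[query]
    return {w for w, v in vectors.items() if cosine(target, v) >= threshold}


def papers_mentioning(abstracts, words):
    """Return indices of abstracts containing at least one of `words`."""
    return [
        i for i, text in enumerate(abstracts)
        if words & set(text.lower().split())
    ]


abstracts = [
    "We propose a sparse attention mechanism",
    "A convolution free vision model",
    "Regret bounds for a contextual bandit",
    "Scaling the transformer to long inputs",
]

words = similar_words("transformer", word_vectors)
selected = papers_mentioning(abstracts, words)
print(sorted(words), selected)
```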

The best way to visualize the embeddings is through the Embedding Projector, which I use inside Comet. If you want to use Comet, create a file named .comet.config in the project's root folder and add the following lines:

[comet]

api_key=YOUR_API_KEY

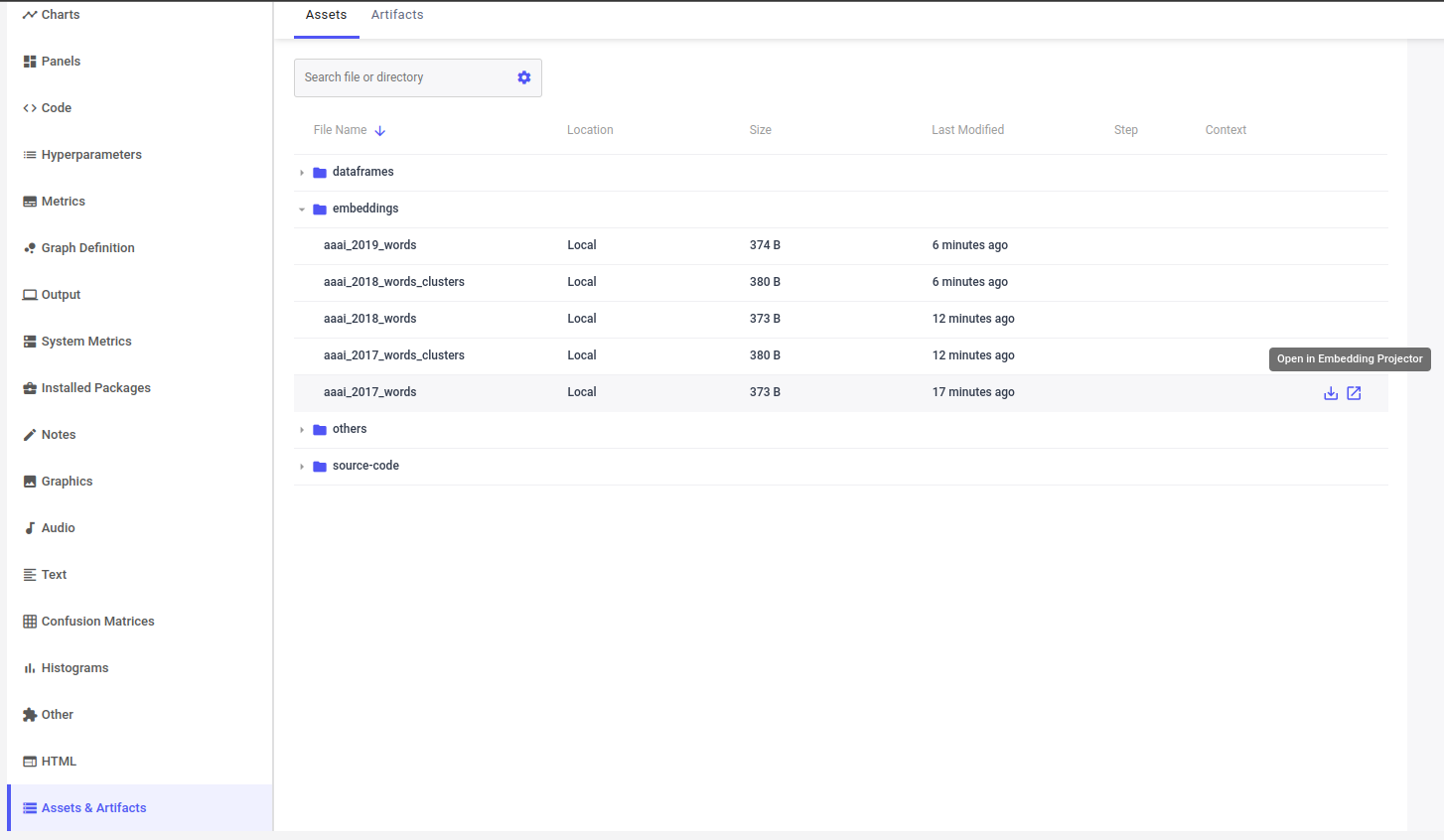

An example of these experiments logged in Comet can be found here. To visualize the embeddings, click on the experiment on the left, then navigate to Assets & Artifacts, open the embeddings directory, and click Open in Embedding Projector.

- Create n-grams from the abstracts before clustering
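
One possible shape for the n-gram item above, as a minimal sketch only: the tokenization rule, the underscore joiner, and the `ngrams` helper are assumptions for illustration and are not part of the current code.

```python
import re


def ngrams(text, n=2):
    """Split text into lowercase alphanumeric tokens and join each run of n."""
    tokens = re.findall(r"[a-z0-9]+", text.lower())
    return ["_".join(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


abstract = "Graph neural networks for molecule generation"
bigrams = ngrams(abstract, n=2)
print(bigrams)
```

Joining the tokens with an underscore keeps each n-gram as a single token, so it could be fed to the embedding step like any other word.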