Supported model providers: OpenAI, Anthropic

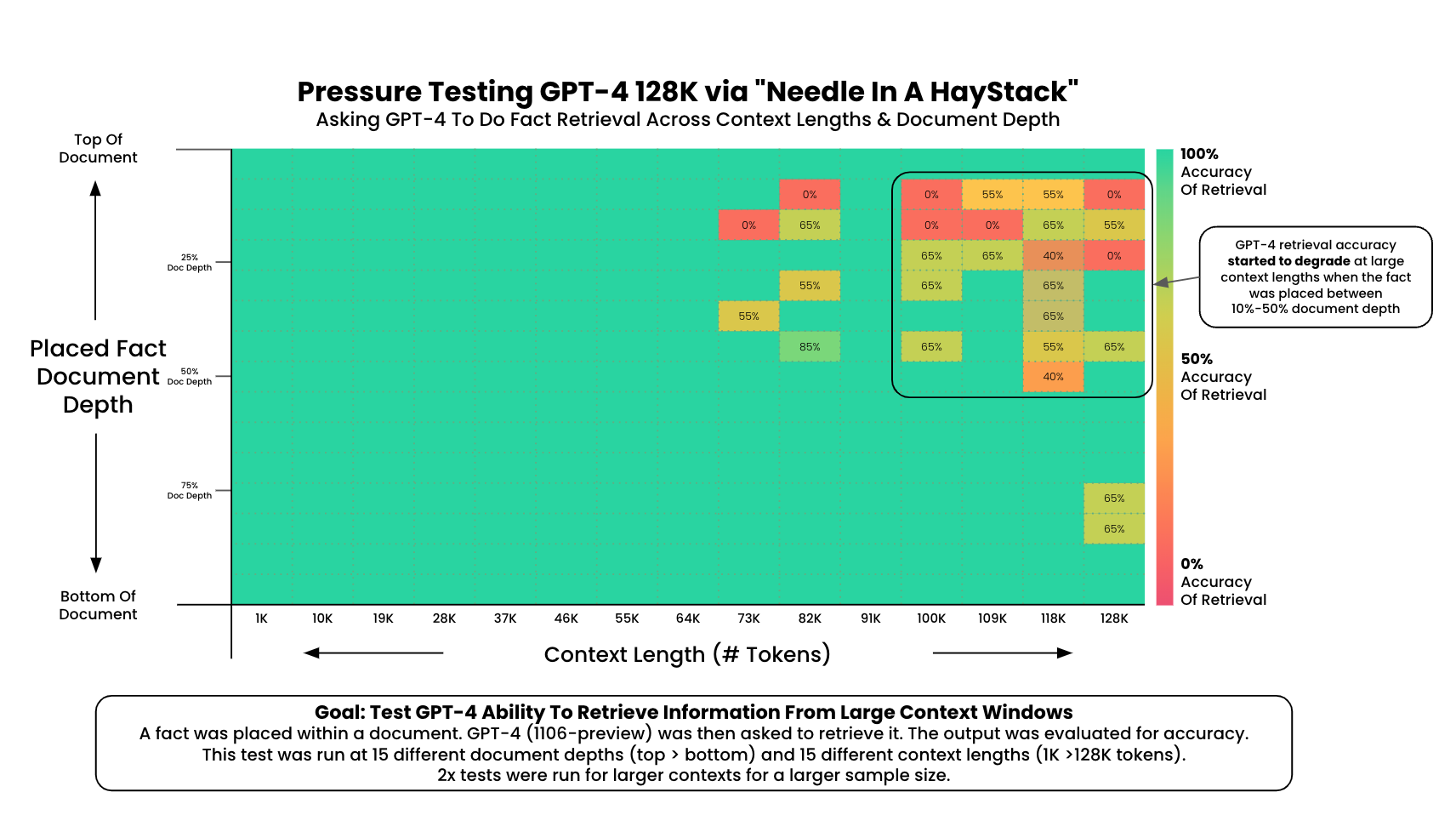

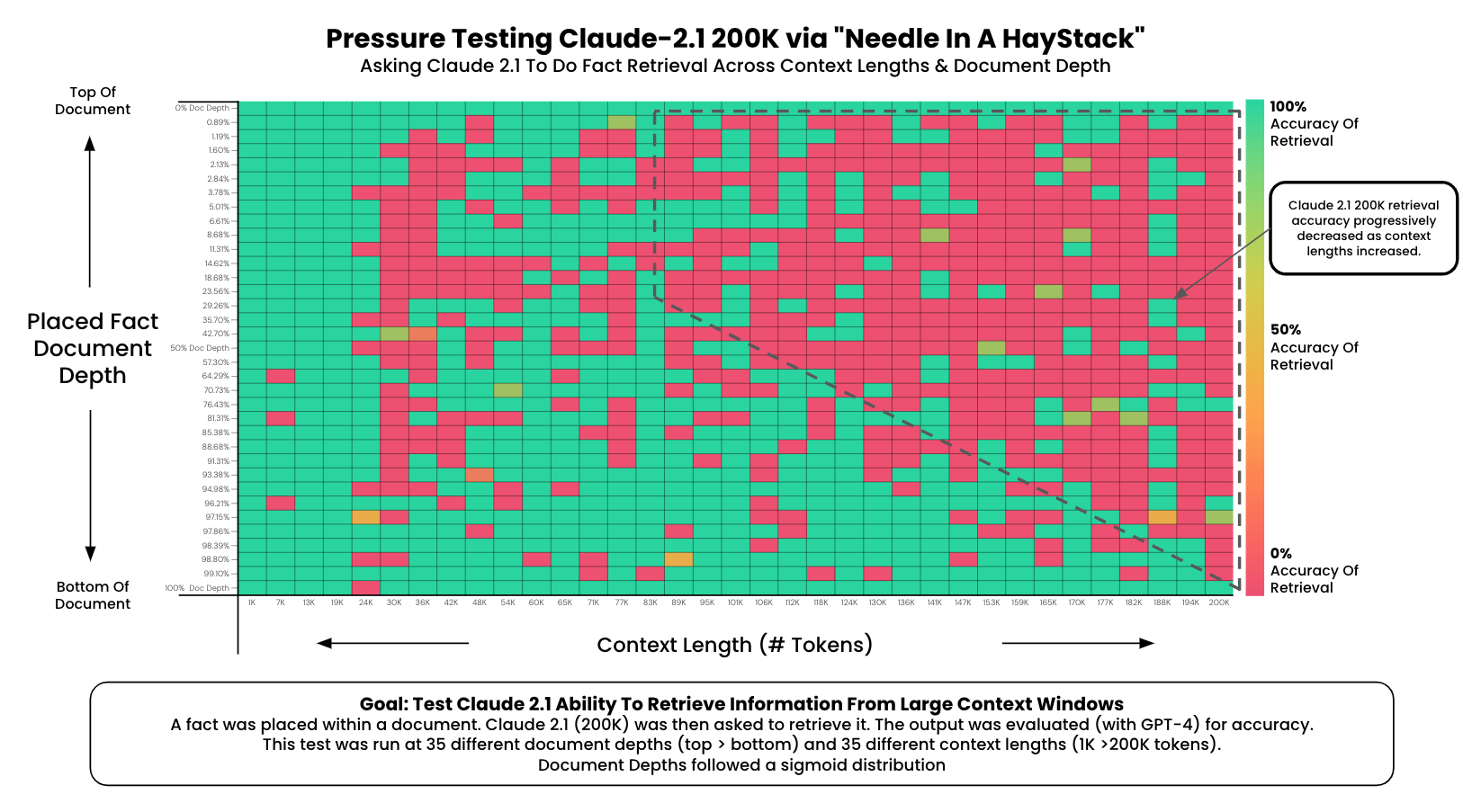

A simple 'needle in a haystack' analysis to test the in-context retrieval ability of long-context LLMs.

Get the behind-the-scenes details in the overview video.

$ git clone https://github.com/prabha-git/LLMTest_NeedleInAHaystack.git

$ cd LLMTest_NeedleInAHaystack

$ python -m venv venv

$ source venv/bin/activate

$ pip install -r requirements.txt

$ export OPENAI_API_KEY=<<openai_key>>

$ python

>>> from OpenAIEvaluator import OpenAIEvaluator

>>> openai_ht = OpenAIEvaluator(model_name='gpt-4-1106-preview', evaluation_method='gpt4')

>>> openai_ht.start_test()

Starting Needle In A Haystack Testing...

- Model: gpt-4-1106-preview

- Context Lengths: 35, Min: 1000, Max: 200000

- Document Depths: 35, Min: 0%, Max: 100%

- Needle: The best thing to do in San Francisco is eat a sandwich and sit in Dolores Park on a sunny day.

- Place a random fact or statement (the 'needle') in the middle of a long context window (the 'haystack')

- Ask the model to retrieve this statement

- Iterate over various document depths (where the needle is placed) and context lengths to measure performance
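The steps above can be sketched in a few lines. This is a minimal illustration, not the project's implementation: the helper names `insert_needle` and `build_test_grid` are hypothetical, and the sketch splits on words for simplicity, whereas a real run would more likely measure length and depth in tokens.

```python
def insert_needle(haystack: str, needle: str, depth_percent: float) -> str:
    """Place `needle` roughly `depth_percent` of the way through `haystack`.

    Hypothetical helper: works on whitespace-separated words, not tokens.
    """
    if not 0 <= depth_percent <= 100:
        raise ValueError("depth_percent must be between 0 and 100")
    words = haystack.split()
    # Index of the word before which the needle is inserted.
    position = round(len(words) * depth_percent / 100)
    return " ".join(words[:position] + [needle] + words[position:])


def build_test_grid(context_lengths, depth_percents):
    """List every (context length, depth) pair that one full run would cover."""
    return [(length, depth) for length in context_lengths for depth in depth_percents]
```

Each pair from `build_test_grid` corresponds to one request to the model: build a context of that length, insert the needle at that depth, then ask the retrieval question.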

This is the code that backed this OpenAI and Anthropic analysis.

If run with save_results = True, this script will populate a result/ directory with evaluation information. Because requests may run concurrently, each new test is saved as a new file.
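One way to write a file per test without concurrent requests overwriting each other is to give every result a unique filename. The sketch below is an assumption about how that could look: the function name `save_result`, the result fields, and the filename layout are all hypothetical and may not match what the script actually writes.

```python
import json
import uuid
from pathlib import Path


def save_result(result: dict, results_dir: str = "result", version: int = 1) -> Path:
    """Write one test result to its own JSON file and return the path.

    Hypothetical layout: the field names and filename pattern are illustrative.
    """
    out_dir = Path(results_dir)
    out_dir.mkdir(parents=True, exist_ok=True)
    # A random suffix keeps concurrent writers from clobbering each other.
    name = (
        f"{result['model']}_len_{result['context_length']}"
        f"_depth_{result['depth_percent']}_v{version}_{uuid.uuid4().hex[:8]}.json"
    )
    path = out_dir / name
    path.write_text(json.dumps(result, indent=2))
    return path
```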

I've put the results from the original tests in /original_results. I've upgraded the script since those tests were run, so the data formats may not match your script results.

The key parameters:

- needle - The statement or fact which will be placed in your context ('haystack')

- haystack_dir - The directory which contains the text files to load as background context. Only text files are supported

- retrieval_question - The question with which to retrieve your needle in the background context

- results_version - You may want to run your test multiple times for the same combination of length/depth; change the version number if so

- context_lengths_min - The starting point of your context lengths list to iterate

- context_lengths_max - The ending point of your context lengths list to iterate

- context_lengths_num_intervals - The number of intervals between your min/max to iterate through

- document_depth_percent_min - The starting point of your document depths. Should be int > 0

- document_depth_percent_max - The ending point of your document depths. Should be int < 100

- document_depth_percent_intervals - The number of iterations to do between your min/max points

- document_depth_percent_interval_type - Determines the distribution of depths to iterate over. 'linear' or 'sigmoid'

- model_name - The name of the model you'd like to test. Should match the exact value which needs to be passed to the API. Ex: gpt-4-1106-preview

- save_results - Whether or not you'd like to save your results to file. They will be temporarily saved in the object regardless. True/False

- save_contexts - Whether or not you'd like to save your contexts to file. Warning: these will get very long. True/False
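To make the min/max/interval parameters concrete, here is a rough sketch of how they could expand into the lists that get iterated. It rests on assumptions: the interval count is treated as the number of points including both endpoints, the sigmoid variant is modelled as a logistic curve with made-up `midpoint` and `steepness` values, and none of the function names come from the project.

```python
import math


def linear_points(start: float, stop: float, num: int) -> list:
    """Return `num` evenly spaced, rounded values from `start` to `stop` inclusive."""
    if num < 1:
        raise ValueError("num must be at least 1")
    if num == 1:
        return [round(start)]
    step = (stop - start) / (num - 1)
    return [round(start + i * step) for i in range(num)]


def logistic(x: float, midpoint: float = 50.0, steepness: float = 0.1) -> float:
    """Map a 0..100 value onto an S-curve, pinning the endpoints to 0 and 100."""
    if x in (0, 100):
        return float(x)
    return round(100 / (1 + math.exp(-steepness * (x - midpoint))), 3)


def depth_percents(min_pct: float, max_pct: float, num: int,
                   interval_type: str = "linear") -> list:
    """Expand min/max/intervals into a list of document depths (in percent)."""
    if num < 1:
        raise ValueError("num must be at least 1")
    step = (max_pct - min_pct) / (num - 1) if num > 1 else 0.0
    raw = [min_pct + i * step for i in range(num)]
    if interval_type == "linear":
        return raw
    if interval_type == "sigmoid":
        # Evenly spaced inputs land closer together near 0% and 100%.
        return [logistic(x) for x in raw]
    raise ValueError("interval_type must be 'linear' or 'sigmoid'")
```

With these assumptions, `linear_points(1000, 200000, 35)` yields 35 context lengths from 1000 to 200000, and the 'sigmoid' setting samples depths more densely near the start and end of the document than in the middle.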

Other Parameters:

- context_lengths - A custom set of context lengths. This will override the values set for context_lengths_min, max, and intervals if set

- document_depth_percents - A custom set of document depths lengths. This will override the values set for document_depth_percent_min, max, and intervals if set

- openai_api_key - Must be supplied. GPT-4 is used for evaluation. Can either be passed when creating the object or an environment variable

- anthropic_api_key - Only needed if testing Anthropic models. Can either be passed when creating the object or an environment variable

- num_concurrent_requests - Default: 1. Set higher if you'd like to run more requests in parallel. Keep in mind rate limits.

- final_context_length_buffer - The amount of context to take off each input to account for system messages and output tokens. This can be more intelligent but using a static value for now. Default 200 tokens.

- seconds_to_sleep_between_completions - Default: None, set # of seconds if you'd like to slow down your requests

- print_ongoing_status - Default: True, whether or not to print the status of tests as they complete

- evaluation_method - Choose between gpt4 and simple substring matching to evaluate, default is gpt4
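For a sense of what the substring option of evaluation_method might involve, the sketch below scores a response by checking that key phrases from the needle appear in it. This is an illustrative guess: the function `substring_score`, the choice of phrases, and the 0/1 scoring are assumptions, not the project's evaluator.

```python
def substring_score(response: str, expected_phrases: list) -> int:
    """Return 1 if every expected phrase appears in the response, else 0.

    Hypothetical scorer: case-insensitive, exact substring match only.
    """
    normalized = response.lower()
    return int(all(phrase.lower() in normalized for phrase in expected_phrases))


# Example using the needle from this README; the phrases are chosen by hand.
phrases = ["sandwich", "Dolores Park"]
answer = "Eat a sandwich and sit in Dolores Park on a sunny day."
score = substring_score(answer, phrases)
```

A check like this is cheap and deterministic, but it cannot credit a correct paraphrase, which is one reason a model-based grader such as gpt4 may be preferred as the default.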

LLMNeedleInHaystackVisualization.ipynb holds the code to make the pivot table visualization. The pivot table was then transferred to Google Slides for custom annotations and formatting. See the Google Slides version. See an overview of how this viz was created here.
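The pivot step itself is simple to picture: one row per document depth, one column per context length, and the average score in each cell. The sketch below does this with the standard library only. It is not taken from the notebook, and the field names `depth_percent`, `context_length`, and `score` are assumed for illustration.

```python
from collections import defaultdict


def pivot_scores(results: list) -> dict:
    """Build {depth: {context_length: mean score}} from a flat list of results.

    Hypothetical record shape: each result is a dict with the keys
    'depth_percent', 'context_length', and 'score'.
    """
    cells = defaultdict(list)
    for r in results:
        cells[(r["depth_percent"], r["context_length"])].append(r["score"])

    depths = sorted({depth for depth, _ in cells})
    lengths = sorted({length for _, length in cells})

    table = {}
    for depth in depths:
        row = {}
        for length in lengths:
            scores = cells.get((depth, length))
            # Missing combinations stay None so gaps are visible in the grid.
            row[length] = sum(scores) / len(scores) if scores else None
        table[depth] = row
    return table
```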

This project is licensed under the MIT License - see the LICENSE file for details. Use of this software requires attribution to the original author and project, as detailed in the license.