This library brings together useful properties and methods from established data science packages, such as scikit-learn and PyCaret, to provide a single pipeline for supervised classification and regression problems. Data scientists can therefore spend less time building pipelines and more time engineering new features and tuning the best model.

import numpy as np
import pandas as pd
import matplotlib.pyplot as plt
from sklearn.model_selection import train_test_split

df = pd.read_csv("juice.csv")
df.head()

|  | Id | Purchase | WeekofPurchase | StoreID | PriceCH | PriceMM | DiscCH | DiscMM | SpecialCH | SpecialMM | LoyalCH | SalePriceMM | SalePriceCH | PriceDiff | Store7 | PctDiscMM | PctDiscCH | ListPriceDiff | STORE |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 1 | CH | 237 | 1 | 1.75 | 1.99 | 0.00 | 0.0 | 0 | 0 | 0.500000 | 1.99 | 1.75 | 0.24 | No | 0.000000 | 0.000000 | 0.24 | 1 |
| 1 | 2 | CH | 239 | 1 | 1.75 | 1.99 | 0.00 | 0.3 | 0 | 1 | 0.600000 | 1.69 | 1.75 | -0.06 | No | 0.150754 | 0.000000 | 0.24 | 1 |
| 2 | 3 | CH | 245 | 1 | 1.86 | 2.09 | 0.17 | 0.0 | 0 | 0 | 0.680000 | 2.09 | 1.69 | 0.40 | No | 0.000000 | 0.091398 | 0.23 | 1 |
| 3 | 4 | MM | 227 | 1 | 1.69 | 1.69 | 0.00 | 0.0 | 0 | 0 | 0.400000 | 1.69 | 1.69 | 0.00 | No | 0.000000 | 0.000000 | 0.00 | 1 |
| 4 | 5 | CH | 228 | 7 | 1.69 | 1.69 | 0.00 | 0.0 | 0 | 0 | 0.956535 | 1.69 | 1.69 | 0.00 | Yes | 0.000000 | 0.000000 | 0.00 | 0 |
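The next cell turns `Purchase` into a binary target (1 for `CH`, 0 for `MM`) and holds out 20% of the rows for testing. As an illustration of what those two operations do, here is a plain-Python sketch. `encode_target` and `holdout_split` are hypothetical helpers written for this example only; the shuffle will not select the same rows as `train_test_split(..., random_state=0)`, but the test size is rounded up the same way scikit-learn rounds a fractional `test_size`.

```python
import math
import random

def encode_target(labels, positive="CH"):
    # Map the positive class to 1 and every other label to 0,
    # like the lambda applied to the Purchase column.
    return [1 if label == positive else 0 for label in labels]

def holdout_split(rows, targets, test_size=0.2, seed=0):
    # Shuffle the row indices with a fixed seed for reproducibility.
    indices = list(range(len(rows)))
    random.Random(seed).shuffle(indices)
    # Round the test size up, as scikit-learn does for a float test_size.
    n_test = math.ceil(test_size * len(rows))
    test_idx, train_idx = indices[:n_test], indices[n_test:]

    def take(seq, idx):
        return [seq[i] for i in idx]

    return (take(rows, train_idx), take(rows, test_idx),
            take(targets, train_idx), take(targets, test_idx))

print(encode_target(["CH", "CH", "CH", "MM", "CH"]))  # [1, 1, 1, 0, 1]

rows = list(range(506))  # 506 rows, the size of the classic Boston housing data
train_rows, test_rows, train_t, test_t = holdout_split(rows, rows)
print(len(train_rows), len(test_rows))  # 404 102
```

With 506 rows the rounded-up 20% is 102, which matches the size of the preprocessed Boston test set shown later in this README.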

X = df.iloc[:, 2:]
y = df.iloc[:, 1].apply(lambda x: 1 if x == "CH" else 0)
train_X, test_X, train_y, test_y = train_test_split(X, y, test_size=0.2, random_state=0)

Importing our class for classification problems

from automl.classification import ClassifierPyCaret

Defining the object

my_clf = ClassifierPyCaret(metric="AUC")

Training all candidate models and selecting the one with the best AUC

my_clf.fit(train_X, train_y)

|  | Model | Accuracy | AUC | Recall | Prec. | F1 | Kappa |
|---|---|---|---|---|---|---|---|
| 0 | Linear Discriminant Analysis | 0.8312 | 0.8994 | 0.8724 | 0.8589 | 0.8649 | 0.6396 |
| 1 | Logistic Regression | 0.8338 | 0.8965 | 0.8787 | 0.858 | 0.8674 | 0.6444 |
| 2 | CatBoost Classifier | 0.8104 | 0.889 | 0.8514 | 0.8459 | 0.8475 | 0.5963 |
| 3 | Extreme Gradient Boosting | 0.8078 | 0.8838 | 0.8599 | 0.8379 | 0.8475 | 0.5873 |
| 4 | Gradient Boosting Classifier | 0.8039 | 0.8804 | 0.8577 | 0.8338 | 0.8444 | 0.5789 |
| 5 | Light Gradient Boosting Machine | 0.7831 | 0.8745 | 0.8284 | 0.8255 | 0.8256 | 0.5381 |
| 6 | Ada Boost Classifier | 0.8169 | 0.8628 | 0.8787 | 0.8358 | 0.8563 | 0.6042 |
| 7 | Random Forest Classifier | 0.7831 | 0.8401 | 0.7951 | 0.848 | 0.8185 | 0.5493 |
| 8 | K Neighbors Classifier | 0.7818 | 0.8367 | 0.8451 | 0.8117 | 0.8278 | 0.5302 |
| 9 | Naive Bayes | 0.761 | 0.8308 | 0.7658 | 0.8381 | 0.7994 | 0.5049 |
| 10 | Extra Trees Classifier | 0.7532 | 0.8279 | 0.8034 | 0.8004 | 0.8006 | 0.4764 |
| 11 | Quadratic Discriminant Analysis | 0.713 | 0.7364 | 0.7844 | 0.7625 | 0.7716 | 0.3838 |
| 12 | Decision Tree Classifier | 0.7325 | 0.7222 | 0.7659 | 0.7991 | 0.7797 | 0.438 |
| 13 | SVM - Linear Kernel | 0.7727 | 0 | 0.8682 | 0.7921 | 0.8261 | 0.4988 |
| 14 | Ridge Classifier | 0.8338 | 0 | 0.8704 | 0.8641 | 0.8661 | 0.6465 |
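Selecting the best model amounts to ranking the cross-validated scores by the chosen metric and keeping the top entry. The sketch below does this with a few AUC values copied from the table above; `pick_best` is a hypothetical helper, not part of `automl` or PyCaret. The SVM and Ridge rows report an AUC of 0, presumably because those estimators do not output class probabilities, so ranking on AUC effectively excludes them even though Ridge Classifier ties for the highest accuracy.

```python
def pick_best(scores, higher_is_better=True):
    # Return the model name with the best score; for error metrics such as
    # RMSE, pass higher_is_better=False so the smallest value wins.
    choose = max if higher_is_better else min
    return choose(scores, key=scores.get)

# Mean cross-validated AUC for a few rows of the comparison table above.
auc = {
    "Linear Discriminant Analysis": 0.8994,
    "Logistic Regression": 0.8965,
    "CatBoost Classifier": 0.889,
    "Ridge Classifier": 0,  # reported as 0 (presumably no probability output)
}
print(pick_best(auc))  # Linear Discriminant Analysis
```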

my_clf.best_model

LinearDiscriminantAnalysis(n_components=None, priors=None, shrinkage=0.001,
solver='lsqr', store_covariance=False, tol=0.0001)

Predicting

my_clf.predict(test_X)

array([0, 1, 1, 1, 1, 0, 0, 1, 1, 0, 1, 1, 1, 1, 1, 1, 1, 1, 1, 0, 1, 1,
0, 1, 0, 0, 1, 1, 1, 1, 0, 1, 0, 1, 1, 1, 0, 0, 0, 0, 1, 0, 1, 0,
0, 0, 1, 0, 1, 1, 1, 1, 0, 0, 0, 0, 1, 1, 0, 0, 0, 1, 0, 1, 0, 1,
0, 0, 0, 0, 0, 1, 1, 1, 1, 1, 0, 1, 0, 1, 1, 1, 0, 0, 0, 1, 1, 1,
0, 0, 1, 0, 1, 1, 1, 1, 1, 0, 1, 1, 1, 1, 0, 1, 0, 1, 1, 0, 0, 0,
0, 0, 1, 0, 0, 1, 0, 1, 0, 0, 0, 1, 1, 0, 1, 1, 0, 1, 1, 0, 1, 0,
1, 1, 1, 0, 1, 0, 0, 1, 0, 0, 1, 0, 1, 0, 1, 1, 0, 0, 1, 0, 0, 1,
0, 1, 1, 0, 1, 1, 1, 0, 0, 0, 0, 1, 0, 1, 1, 0, 1, 0, 0, 1, 0, 1,
1, 1, 0, 0, 1, 0, 1, 1, 1, 0, 0, 1, 1, 1, 1, 0, 1, 0, 1, 0, 1, 1,
1, 1, 1, 1, 1, 0, 0, 1, 1, 1, 0, 1, 1, 1, 1, 1])

test_y.values

array([0, 1, 1, 1, 0, 0, 0, 1, 1, 0, 1, 1, 0, 1, 1, 1, 0, 0, 1, 1, 1, 0,
0, 1, 0, 0, 1, 1, 1, 1, 0, 1, 1, 0, 1, 0, 0, 0, 0, 0, 1, 0, 1, 0,
0, 1, 1, 0, 0, 1, 1, 0, 1, 0, 0, 1, 1, 1, 0, 0, 0, 1, 0, 1, 0, 0,
0, 1, 0, 1, 0, 1, 1, 0, 1, 1, 0, 1, 0, 1, 1, 1, 0, 0, 0, 1, 0, 1,
0, 0, 1, 0, 1, 1, 1, 1, 1, 0, 1, 1, 1, 1, 0, 1, 0, 1, 1, 0, 0, 1,
0, 0, 1, 1, 0, 1, 0, 1, 1, 0, 1, 1, 1, 0, 1, 1, 0, 1, 1, 0, 0, 0,
1, 1, 0, 1, 1, 1, 0, 1, 0, 0, 1, 1, 1, 1, 1, 1, 0, 0, 0, 1, 0, 1,
0, 0, 1, 0, 1, 1, 1, 0, 0, 1, 0, 1, 0, 1, 1, 0, 1, 1, 0, 1, 0, 1,
1, 0, 0, 1, 1, 0, 1, 1, 1, 0, 0, 1, 1, 1, 0, 0, 1, 0, 1, 0, 1, 0,
1, 1, 1, 1, 1, 0, 0, 1, 1, 1, 0, 1, 1, 1, 1, 1])
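Comparing the two arrays element by element is what the evaluation metrics further down are built on. A minimal plain-Python sketch, using only the first ten entries of each array above; `confusion_counts` is a hypothetical helper, not a method of `ClassifierPyCaret`.

```python
from collections import Counter

def confusion_counts(y_true, y_pred):
    # Tally (true, predicted) pairs into TP, FP, FN and TN for 0/1 labels.
    pairs = Counter(zip(y_true, y_pred))
    return {
        "TP": pairs[(1, 1)],
        "FP": pairs[(0, 1)],
        "FN": pairs[(1, 0)],
        "TN": pairs[(0, 0)],
    }

# First ten entries of my_clf.predict(test_X) and test_y.values above.
y_pred = [0, 1, 1, 1, 1, 0, 0, 1, 1, 0]
y_true = [0, 1, 1, 1, 0, 0, 0, 1, 1, 0]

counts = confusion_counts(y_true, y_pred)
accuracy = (counts["TP"] + counts["TN"]) / len(y_true)
print(counts)    # {'TP': 5, 'FP': 1, 'FN': 0, 'TN': 4}
print(accuracy)  # 0.9
```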

Predicting class probabilities

my_clf.predict_proba(test_X)[:15]

array([[0.81394134, 0.18605866],
[0.29250523, 0.70749477],
[0.0556436 , 0.9443564 ],
[0.34100222, 0.65899778],
[0.30329665, 0.69670335],
[0.93766687, 0.06233313],
[0.79220565, 0.20779435],
[0.20849993, 0.79150007],
[0.03889261, 0.96110739],
[0.96823484, 0.03176516],
[0.03828661, 0.96171339],
[0.05635964, 0.94364036],
[0.34055076, 0.65944924],
[0.01090129, 0.98909871],
[0.00782886, 0.99217114]])
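The probability scores serve two purposes: thresholding them gives class labels, and their ranking is what AUC measures. The sketch below assumes the second column is the probability of class 1 (`CH`) and that `predict` applies a 0.5 cutoff; both assumptions agree with the first 15 predictions shown earlier, but neither is confirmed by the library's documentation. The AUC uses the rank-based (Mann-Whitney) formulation on just 15 rows, so it is not comparable with the full test-set figure. `threshold` and `roc_auc` are hypothetical helpers.

```python
def threshold(scores, cutoff=0.5):
    # Assign class 1 when the positive-class probability reaches the cutoff.
    return [1 if s >= cutoff else 0 for s in scores]

def roc_auc(y_true, scores):
    # AUC = fraction of (positive, negative) pairs in which the positive
    # row gets the higher score; ties count as half a pair.
    pos = [s for y, s in zip(y_true, scores) if y == 1]
    neg = [s for y, s in zip(y_true, scores) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

# Second column of predict_proba(test_X)[:15] above, i.e. P(class 1 = CH).
p_ch = [0.18605866, 0.70749477, 0.9443564, 0.65899778, 0.69670335,
        0.06233313, 0.20779435, 0.79150007, 0.96110739, 0.03176516,
        0.96171339, 0.94364036, 0.65944924, 0.98909871, 0.99217114]
# First 15 entries of test_y.values.
y_true = [0, 1, 1, 1, 0, 0, 0, 1, 1, 0, 1, 1, 0, 1, 1]

print(threshold(p_ch))  # [0, 1, 1, 1, 1, 0, 0, 1, 1, 0, 1, 1, 1, 1, 1]
print(round(roc_auc(y_true, p_ch), 4))  # 0.963
```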

Preprocessed test set

my_clf.preprocess(test_X)

|  | WeekofPurchase | StoreID | PriceCH | PriceMM | SpecialCH | SpecialMM | LoyalCH | SalePriceMM | SalePriceCH | PriceDiff | PctDiscMM | PctDiscCH | ListPriceDiff | STORE | Store7_Yes |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 928 | -1.332854 | -0.420137 | -0.778278 | 0.026205 | -0.411581 | -0.436512 | -1.199095 | 0.497515 | -0.176508 | 0.562370 | -0.581691 | -0.446505 | 0.764189 | 0.958208 | 0.0 |
| 780 | -1.462320 | -1.284649 | -1.769803 | -0.724024 | 2.429655 | -0.436512 | 0.038105 | 0.097597 | -0.875681 | 0.562370 | -0.581691 | -0.446505 | 0.764189 | -0.435549 | 0.0 |
| 564 | 0.738609 | 1.308888 | -0.084210 | 0.326297 | 2.429655 | -0.436512 | 0.082491 | 0.657482 | -2.274027 | 1.840059 | -0.581691 | 2.722738 | 0.484560 | -1.132427 | 1.0 |
| 520 | 0.285476 | -0.852393 | -0.084210 | 0.701412 | -0.411581 | 2.290890 | 0.341921 | -0.742231 | 0.312913 | -0.865637 | 1.253504 | -0.446505 | 0.950608 | 0.261329 | 0.0 |
| 399 | 1.062275 | 1.308888 | -0.084210 | 0.326297 | -0.411581 | -0.436512 | -0.470514 | 0.657482 | 0.312913 | 0.449632 | -0.581691 | -0.446505 | 0.484560 | -1.132427 | 1.0 |
| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |
| 231 | -1.721253 | 1.308888 | -1.769803 | -2.974714 | -0.411581 | -0.436512 | -0.127836 | -1.102158 | -0.875681 | -0.565004 | -0.581691 | -0.446505 | -2.032101 | -1.132427 | 1.0 |
| 669 | -0.038190 | 0.012119 | 1.204772 | 1.526665 | 2.429655 | -0.436512 | -0.241797 | 1.297351 | 1.221837 | 0.562370 | -0.581691 | -0.446505 | 0.764189 | 1.655086 | 0.0 |
| 821 | -1.721253 | 0.012119 | -0.778278 | -2.224484 | -0.411581 | -0.436512 | 0.537512 | -0.702239 | -0.176508 | -0.565004 | -0.581691 | -0.446505 | -2.032101 | 1.655086 | 0.0 |
| 54 | 0.091277 | 0.012119 | 1.204772 | 1.526665 | -0.411581 | -0.436512 | 0.302929 | 1.297351 | 1.221837 | 0.562370 | -0.581691 | -0.446505 | 0.764189 | 1.655086 | 0.0 |
| 34 | -0.232389 | -0.420137 | 1.204772 | 1.076527 | -0.411581 | -0.436512 | -0.099110 | 1.057400 | 1.221837 | 0.336895 | -0.581691 | -0.446505 | 0.204931 | 0.958208 | 0.0 |

214 rows × 15 columns
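The preprocessed columns look standardized (z-scores), and the categorical `Store7` column has become a 0/1 indicator, `Store7_Yes`. That reading is an inference from the output above, not documented behaviour of `preprocess`. The sketch shows the usual pattern behind such output: statistics are learned on the training data only and then reused on the test data. It uses the population standard deviation, as scikit-learn's `StandardScaler` does. `fit_standardizer` and `one_hot` are hypothetical helpers, and the prices are toy values.

```python
from statistics import mean, pstdev

def fit_standardizer(train_values):
    # Learn the mean and population standard deviation on training data only.
    mu, sigma = mean(train_values), pstdev(train_values)

    def transform(values):
        # Apply the training statistics to any data set (train or test).
        return [(v - mu) / sigma for v in values]

    return transform

def one_hot(values, category):
    # Encode a categorical column as a single 0/1 indicator for `category`.
    return [1.0 if v == category else 0.0 for v in values]

# Toy price values (hypothetical, not a real column split from juice.csv).
train_price = [1.69, 1.75, 1.86, 1.99]
test_price = [1.75, 2.09]

scale = fit_standardizer(train_price)
train_z = scale(train_price)
test_z = scale(test_price)  # test data reuses the training mean and std
print([round(z, 3) for z in test_z])
print(one_hot(["No", "No", "Yes"], "Yes"))  # [0.0, 0.0, 1.0]
```

Reusing the training statistics on the test set is what keeps information from the held-out rows out of the fitted pipeline.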

Evaluating the final model

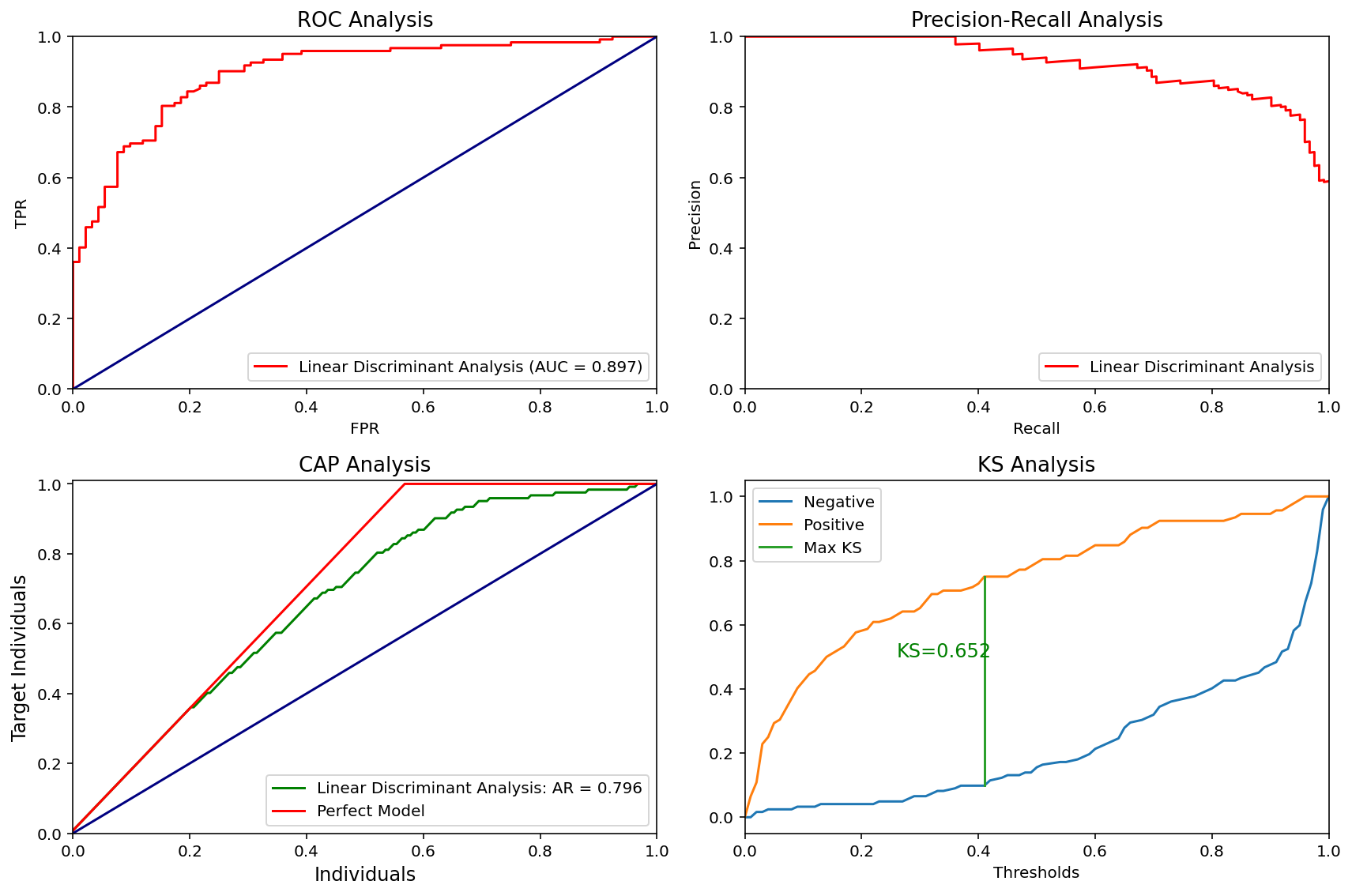

my_clf.evaluate(test_X, test_y)

|  | Accuracy | Recall | Precision | F1-Score | AUC-ROC |
|---|---|---|---|---|---|
| Linear Discriminant Analysis | 0.82243 | 0.844262 | 0.844262 | 0.844262 | 0.896605 |
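Because precision, recall and F1 are identical, the model must predict exactly as many positives as the test set contains (122, counting the ones in `test_y.values` above), so false positives equal false negatives. With 214 test rows, the reported scores are consistent with TP = 103, FP = FN = 19 and TN = 73. These counts are back-calculated from the table, not printed by the library; the sketch only shows how the threshold-based columns follow from them. `classification_metrics` is a hypothetical helper.

```python
def classification_metrics(tp, fp, fn, tn):
    # Threshold-based metrics computed from confusion-matrix counts.
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return {
        "Accuracy": (tp + tn) / (tp + fp + fn + tn),
        "Recall": recall,
        "Precision": precision,
        "F1-Score": 2 * precision * recall / (precision + recall),
    }

# Counts inferred from the evaluation table above (214 test rows).
metrics = classification_metrics(tp=103, fp=19, fn=19, tn=73)
print({k: round(v, 6) for k, v in metrics.items()})
# {'Accuracy': 0.82243, 'Recall': 0.844262, 'Precision': 0.844262, 'F1-Score': 0.844262}
```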

Plotting curves to evaluate performance

my_clf.binary_evaluation_plot(test_X, test_y)

df = pd.read_csv("boston.csv")
df.head()

|  | crim | zn | indus | chas | nox | rm | age | dis | rad | tax | ptratio | black | lstat | medv |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 0.00632 | 18.0 | 2.31 | 0 | 0.538 | 6.575 | 65.2 | 4.0900 | 1 | 296 | 15.3 | 396.90 | 4.98 | 24.0 |
| 1 | 0.02731 | 0.0 | 7.07 | 0 | 0.469 | 6.421 | 78.9 | 4.9671 | 2 | 242 | 17.8 | 396.90 | 9.14 | 21.6 |
| 2 | 0.02729 | 0.0 | 7.07 | 0 | 0.469 | 7.185 | 61.1 | 4.9671 | 2 | 242 | 17.8 | 392.83 | 4.03 | 34.7 |
| 3 | 0.03237 | 0.0 | 2.18 | 0 | 0.458 | 6.998 | 45.8 | 6.0622 | 3 | 222 | 18.7 | 394.63 | 2.94 | 33.4 |
| 4 | 0.06905 | 0.0 | 2.18 | 0 | 0.458 | 7.147 | 54.2 | 6.0622 | 3 | 222 | 18.7 | 396.90 | 5.33 | 36.2 |

X = df.iloc[:, :-1]
y = df.iloc[:, -1]
train_X, test_X, train_y, test_y = train_test_split(X, y, test_size=0.2, random_state=0)

Importing our class for regression problems

from automl.regression import RegressorPyCaret

Defining the object

my_reg = RegressorPyCaret(metric="RMSE")

Training all candidate models and selecting the one with the lowest RMSE

my_reg.fit(train_X, train_y)

|  | Model | MAE | MSE | RMSE | R2 | RMSLE | MAPE |
|---|---|---|---|---|---|---|---|
| 0 | Extra Trees Regressor | 2.0117 | 9.8317 | 3.0117 | 0.882 | 0.1313 | 0.1 |
| 1 | CatBoost Regressor | 2.0328 | 10.0328 | 3.0457 | 0.8825 | 0.1337 | 0.1004 |
| 2 | Gradient Boosting Regressor | 2.0817 | 10.3726 | 3.1033 | 0.8773 | 0.1392 | 0.1055 |
| 3 | Extreme Gradient Boosting | 2.1903 | 11.3859 | 3.2391 | 0.8657 | 0.1424 | 0.1093 |
| 4 | Light Gradient Boosting Machine | 2.2946 | 12.4216 | 3.429 | 0.8533 | 0.1456 | 0.1116 |
| 5 | Random Forest | 2.2884 | 12.7449 | 3.4698 | 0.8493 | 0.1494 | 0.1139 |
| 6 | AdaBoost Regressor | 2.6787 | 14.0651 | 3.6835 | 0.8322 | 0.1674 | 0.1377 |
| 7 | K Neighbors Regressor | 2.7906 | 19.2193 | 4.2479 | 0.777 | 0.1706 | 0.1285 |
| 8 | Bayesian Ridge | 3.1667 | 21.2922 | 4.546 | 0.7493 | 0.2214 | 0.1552 |
| 9 | Ridge Regression | 3.1846 | 21.357 | 4.5549 | 0.7483 | 0.2214 | 0.1558 |
| 10 | Linear Regression | 3.1907 | 21.3876 | 4.5588 | 0.7479 | 0.2215 | 0.156 |
| 11 | Huber Regressor | 3.02 | 21.9739 | 4.5865 | 0.7425 | 0.2423 | 0.1459 |
| 12 | Random Sample Consensus | 3.141 | 23.5192 | 4.6983 | 0.7248 | 0.257 | 0.1529 |
| 13 | Decision Tree | 3.1003 | 23.0186 | 4.7735 | 0.7244 | 0.2072 | 0.156 |
| 14 | Least Angle Regression | 3.4683 | 26.3764 | 5.0549 | 0.6804 | 0.2535 | 0.1757 |
| 15 | Lasso Regression | 3.586 | 26.3971 | 5.0742 | 0.6933 | 0.2454 | 0.1818 |
| 16 | Elastic Net | 3.6377 | 27.6603 | 5.2005 | 0.6777 | 0.2227 | 0.1786 |
| 17 | Support Vector Machine | 3.2355 | 29.4341 | 5.3048 | 0.6639 | 0.2138 | 0.1569 |
| 18 | TheilSen Regressor | 3.6964 | 33.5251 | 5.6568 | 0.6107 | 0.3268 | 0.1814 |
| 19 | Passive Aggressive Regressor | 4.3705 | 38.8214 | 6.203 | 0.5339 | 0.3688 | 0.2342 |
| 20 | Orthogonal Matching Pursuit | 4.7336 | 43.4094 | 6.4761 | 0.4999 | 0.3263 | 0.2297 |
| 21 | Lasso Least Angle Regression | 6.8136 | 86.403 | 9.261 | -0.0147 | 0.3952 | 0.3674 |
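Here the ranking runs the other way: lower RMSE is better, so the smallest value wins. Note also that the RMSE column is not simply the square root of the MSE column (the square root of 9.8317 is about 3.136, not 3.0117). That is consistent with each metric being averaged across cross-validation folds, because the mean of per-fold RMSEs can never exceed the square root of the mean per-fold MSE (the square root is concave). The sketch illustrates this with hypothetical fold values; it is not a reproduction of PyCaret's internals.

```python
import math
from statistics import mean

# Lower RMSE is better, so select with min (values from the table above).
rmse = {"Extra Trees Regressor": 3.0117, "CatBoost Regressor": 3.0457,
        "Gradient Boosting Regressor": 3.1033}
print(min(rmse, key=rmse.get))  # Extra Trees Regressor

# Hypothetical per-fold MSE values for one model (illustration only).
fold_mse = [4.0, 9.0, 16.0]
mean_of_rmse = mean(math.sqrt(m) for m in fold_mse)  # average of fold RMSEs
rmse_of_mean = math.sqrt(mean(fold_mse))             # RMSE from the mean MSE
print(mean_of_rmse)            # 3.0
print(round(rmse_of_mean, 4))  # 3.1091
```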

my_reg.best_model

ExtraTreesRegressor(bootstrap=False, ccp_alpha=0.0, criterion='mse',
max_depth=50, max_features='auto', max_leaf_nodes=None,
max_samples=None, min_impurity_decrease=0.0,
min_impurity_split=None, min_samples_leaf=1,
min_samples_split=7, min_weight_fraction_leaf=0.0,
n_estimators=230, n_jobs=None, oob_score=False,
random_state=4079, verbose=0, warm_start=False)

Predicting

my_reg.predict(test_X)

array([23.59301449, 23.86976812, 22.9758913 , 10.77536232, 21.7523913 ,
20.45304348, 21.64648551, 20.05999275, 20.34348551, 19.65515942,
8.15554348, 15.19625362, 15.24094203, 8.6743913 , 48.79373913,
34.54835507, 21.51931159, 36.47942754, 25.99400725, 21.06105072,
23.53934783, 22.12610145, 19.5293913 , 24.70913043, 20.46073188,
17.92857246, 17.57008696, 16.32171014, 43.31463043, 19.55130435,
15.54263768, 18.10597101, 21.12585507, 21.45034058, 24.27658696,
19.78678986, 9.05228261, 25.52650725, 14.11785507, 15.47104348,
22.96647826, 20.59884058, 21.83624638, 16.47018841, 24.62587681,
23.3175942 , 20.49226087, 18.62208696, 15.1505942 , 24.18486232,
16.4717971 , 20.65637681, 21.94365217, 38.90871739, 15.64312319,
21.3097029 , 19.55873913, 19.6977971 , 19.44619565, 20.55236957,
21.44344203, 22.00573188, 32.4110942 , 28.30823188, 19.36657246,
27.20982609, 15.82344928, 22.58002899, 15.18563768, 22.05265217,
20.84502174, 22.77226812, 24.42430435, 31.16600725, 26.7797029 ,
8.60797101, 42.23894928, 22.59955797, 23.76918841, 19.81447826,
25.12301449, 18.41964493, 19.59877536, 42.00841304, 41.19852899,
23.88085507, 22.77155797, 14.20571739, 26.48037681, 15.24131884,
19.44444203, 11.67923188, 22.47393478, 31.38963043, 21.19071739,
22.03372464, 12.33304348, 23.81121014, 14.16386957, 18.81552899,
23.94378986, 19.97833333])

test_y.values

array([22.6, 50. , 23. , 8.3, 21.2, 19.9, 20.6, 18.7, 16.1, 18.6, 8.8,
17.2, 14.9, 10.5, 50. , 29. , 23. , 33.3, 29.4, 21. , 23.8, 19.1,
20.4, 29.1, 19.3, 23.1, 19.6, 19.4, 38.7, 18.7, 14.6, 20. , 20.5,
20.1, 23.6, 16.8, 5.6, 50. , 14.5, 13.3, 23.9, 20. , 19.8, 13.8,
16.5, 21.6, 20.3, 17. , 11.8, 27.5, 15.6, 23.1, 24.3, 42.8, 15.6,
21.7, 17.1, 17.2, 15. , 21.7, 18.6, 21. , 33.1, 31.5, 20.1, 29.8,
15.2, 15. , 27.5, 22.6, 20. , 21.4, 23.5, 31.2, 23.7, 7.4, 48.3,
24.4, 22.6, 18.3, 23.3, 17.1, 27.9, 44.8, 50. , 23. , 21.4, 10.2,
23.3, 23.2, 18.9, 13.4, 21.9, 24.8, 11.9, 24.3, 13.8, 24.7, 14.1,
18.7, 28.1, 19.8])
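Residuals, the gaps between observed and predicted values, are what the residual analysis at the end of this section examines. A minimal plain-Python sketch on the first five entries of the two arrays above; the variable names are illustrative and not part of `RegressorPyCaret`. Even in this small slice, a single row (observed 50.0, predicted about 23.9) dominates the error.

```python
# First five entries of my_reg.predict(test_X) and test_y.values above.
y_pred = [23.59301449, 23.86976812, 22.9758913, 10.77536232, 21.7523913]
y_true = [22.6, 50.0, 23.0, 8.3, 21.2]

# Residual = observed - predicted; a positive value means under-prediction.
residuals = [t - p for t, p in zip(y_true, y_pred)]
print([round(r, 2) for r in residuals])  # [-0.99, 26.13, 0.02, -2.48, -0.55]

# The largest absolute residual flags the row worth inspecting first.
worst = max(range(len(residuals)), key=lambda i: abs(residuals[i]))
print(worst)  # 1
```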

Preprocessed test set

my_reg.preprocess(test_X)

|  | crim | zn | indus | chas | nox | rm | age | dis | rad | tax | ptratio | black | lstat |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 329 | -0.408359 | -0.499608 | -1.128729 | -0.272888 | -0.833369 | 0.044972 | -1.846215 | 0.695069 | -0.624648 | 0.159137 | -0.712729 | 0.185476 | -0.736103 |
| 371 | 0.719251 | -0.499608 | 0.998884 | -0.272888 | 0.652840 | -0.123657 | 1.103327 | -1.251749 | 1.687378 | 1.542121 | 0.792674 | 0.083165 | -0.435692 |
| 219 | -0.402575 | -0.499608 | 0.396108 | 3.664502 | -0.051154 | 0.102623 | 0.832597 | -0.195833 | -0.509046 | -0.743319 | -0.940821 | 0.394727 | -0.302632 |
| 403 | 2.634810 | -0.499608 | 0.998884 | -0.272888 | 1.191699 | -1.373240 | 0.960837 | -0.994916 | 1.687378 | 1.542121 | 0.792674 | 0.430412 | 0.968974 |
| 78 | -0.409685 | -0.499608 | 0.244340 | -0.272888 | -1.033268 | -0.100597 | -0.545994 | 0.598583 | -0.509046 | -0.028387 | 0.108400 | 0.311840 | -0.050232 |
| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |
| 56 | -0.414103 | 3.100922 | -1.486672 | -0.272888 | -1.267933 | 0.117036 | -1.187199 | 2.606998 | -0.855850 | -0.526496 | -0.530256 | 0.430412 | -0.951467 |
| 455 | 0.168069 | -0.499608 | 0.998884 | -0.272888 | 1.365525 | 0.321696 | 0.622424 | -0.642174 | 1.687378 | 1.542121 | 0.792674 | -3.476599 | 0.744008 |
| 60 | -0.398260 | 0.559372 | -0.858124 | -0.272888 | -0.894208 | -0.808261 | -0.100713 | 1.662728 | -0.162242 | -0.696439 | 0.564583 | 0.410198 | 0.060880 |
| 213 | -0.399343 | -0.499608 | -0.076377 | -0.272888 | -0.581322 | 0.105505 | -1.308316 | 0.084292 | -0.624648 | -0.737459 | 0.062782 | 0.305177 | -0.456268 |
| 108 | -0.400881 | -0.499608 | -0.367026 | -0.272888 | -0.311892 | 0.248191 | 1.000022 | -0.643570 | -0.509046 | -0.110428 | 1.112002 | 0.411666 | -0.059834 |

102 rows × 13 columns

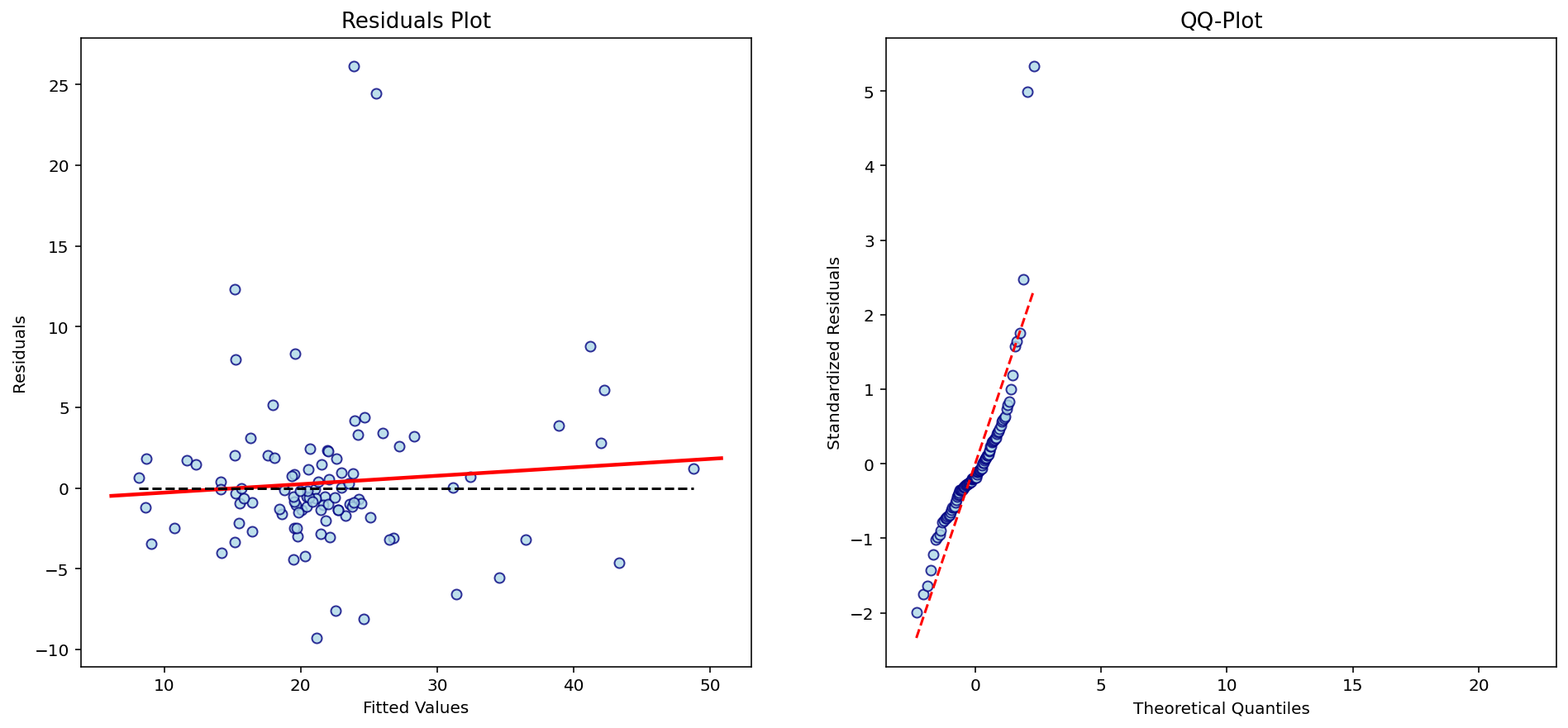

Evaluating the final model

my_reg.evaluate(test_X, test_y)

|  | MAE | MSE | RMSE | R2 | RMLSE | MAPE |
|---|---|---|---|---|---|---|
| Extra Trees Regressor | 2.804562 | 23.271022 | 4.824005 | 0.714215 | 0.182163 | 0.128135 |
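For reference, the columns in this table follow standard definitions; the `RMLSE` column presumably stands for RMSLE, the same metric listed in the comparison table. The plain-Python sketch below computes each one on toy values (not from boston.csv). `regression_metrics` is a hypothetical helper, and the library's own implementation may differ in details such as how RMSLE treats negative values. MAPE is returned as a fraction, matching the 0.128135 shown above.

```python
import math

def regression_metrics(y_true, y_pred):
    # Standard definitions of the columns in the evaluation table.
    n = len(y_true)
    errors = [t - p for t, p in zip(y_true, y_pred)]
    mse = sum(e * e for e in errors) / n
    mean_true = sum(y_true) / n
    ss_res = sum(e * e for e in errors)
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    return {
        "MAE": sum(abs(e) for e in errors) / n,
        "MSE": mse,
        "RMSE": math.sqrt(mse),
        "R2": 1 - ss_res / ss_tot,  # negative when worse than predicting the mean
        # RMSLE: RMSE on log(1 + y); requires values greater than -1.
        "RMSLE": math.sqrt(sum((math.log1p(t) - math.log1p(p)) ** 2
                               for t, p in zip(y_true, y_pred)) / n),
        # MAPE as a fraction; undefined if any true value is 0.
        "MAPE": sum(abs(e / t) for e, t in zip(errors, y_true)) / n,
    }

# Toy values for illustration only.
m = regression_metrics([3.0, 5.0, 2.0, 7.0], [2.5, 5.0, 2.0, 8.0])
print(round(m["MAE"], 4), round(m["R2"], 4))  # 0.375 0.9153
```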

Checking the residuals

my_reg.plot_analysis(test_X, test_y)

Copyright (c) 2018 The Python Packaging Authority

Permission is hereby granted, free of charge, to any person obtaining a copy of this software and associated documentation files (the "Software"), to deal in the Software without restriction, including without limitation the rights to use, copy, modify, merge, publish, distribute, sublicense, and/or sell copies of the Software, and to permit persons to whom the Software is furnished to do so, subject to the following conditions:

The above copyright notice and this permission notice shall be included in all copies or substantial portions of the Software.

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE SOFTWARE.