Inference of Stable Diffusion in pure C/C++

- Plain C/C++ implementation based on ggml, working in the same way as llama.cpp

- 16-bit, 32-bit float support

- 4-bit, 5-bit and 8-bit integer quantization support

- Accelerated memory-efficient CPU inference

- AVX, AVX2 and AVX512 support for x86 architectures

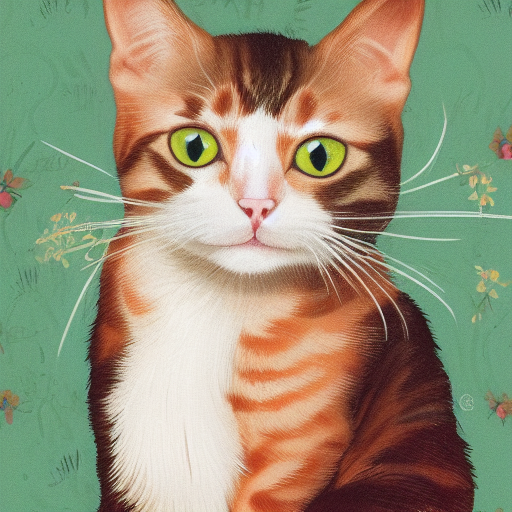

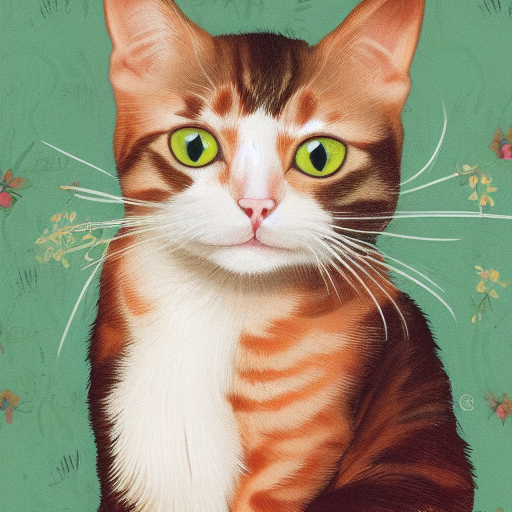

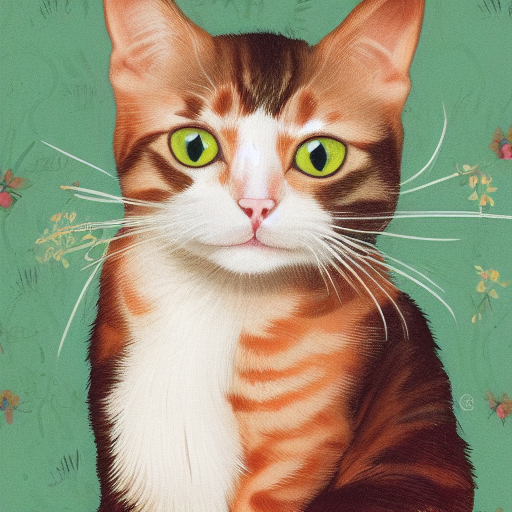

- Original txt2img mode

- Negative prompt

- Sampling method

  - Euler A

- Supported platforms

  - Linux

  - Mac OS

  - Windows
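The Euler A sampler listed above is usually described as an Euler step followed by re-injection of fresh noise. The sketch below follows that common formulation on plain Python lists. It is an illustration only: the function names are hypothetical, and whether this project's implementation matches it term for term is an assumption.

```python
import math
import random


def ancestral_sigmas(sigma_from, sigma_to):
    """Split a noise-level step into a deterministic part and a fresh-noise part."""
    variance_up = sigma_to**2 * (sigma_from**2 - sigma_to**2) / sigma_from**2
    sigma_up = min(sigma_to, math.sqrt(variance_up))
    # Guard against tiny negative values caused by floating-point rounding.
    sigma_down = math.sqrt(max(0.0, sigma_to**2 - sigma_up**2))
    return sigma_down, sigma_up


def euler_ancestral_step(x, denoised, sigma_from, sigma_to, rng):
    """Advance the noisy sample x from sigma_from to sigma_to."""
    sigma_down, sigma_up = ancestral_sigmas(sigma_from, sigma_to)
    out = []
    for xi, di in zip(x, denoised):
        d = (xi - di) / sigma_from                 # direction away from the denoised estimate
        xi = xi + d * (sigma_down - sigma_from)    # deterministic Euler step
        xi = xi + rng.gauss(0.0, 1.0) * sigma_up   # add fresh noise
        out.append(xi)
    return out


rng = random.Random(0)
x_next = euler_ancestral_step([1.0, -0.5], [0.2, 0.1], 2.0, 1.0, rng)
```

Because new noise is drawn at every step, results depend on the RNG seed as well as on the number of steps.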

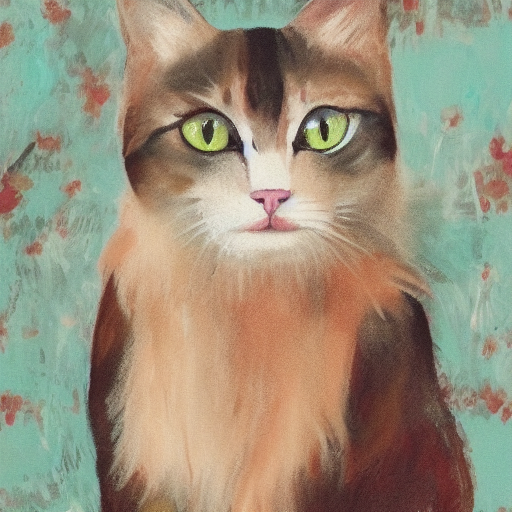

- Original img2img mode

- More sampling methods

- GPU support

- Make inference faster

  - The current implementation of ggml_conv_2d is slow and has high memory usage

- Continuing to reduce memory usage (quantizing the weights of ggml_conv_2d)

- stable-diffusion-webui style tokenizer (e.g., token weighting, ...)

- LoRA support

- k-quants support

git clone --recursive https://github.com/leejet/stable-diffusion.cpp

cd stable-diffusion.cpp

- Download the original weights (.ckpt or .safetensors). For example:

- Stable Diffusion v1.4 from https://huggingface.co/CompVis/stable-diffusion-v-1-4-original

- Stable Diffusion v1.5 from https://huggingface.co/runwayml/stable-diffusion-v1-5

curl -L -O https://huggingface.co/CompVis/stable-diffusion-v-1-4-original/resolve/main/sd-v1-4.ckpt

Or, for Stable Diffusion v1.5:

curl -L -O https://huggingface.co/runwayml/stable-diffusion-v1-5/resolve/main/v1-5-pruned-emaonly.safetensors

- Convert the weights to the ggml model format

cd models

pip install -r requirements.txt

python convert.py [path to weights] --out_type [output precision]

For example:

python convert.py sd-v1-4.ckpt --out_type f16

You can specify the output model format using the --out_type parameter:

- f16 for 16-bit floating-point

- f32 for 32-bit floating-point

- q8_0 for 8-bit integer quantization

- q5_0 or q5_1 for 5-bit integer quantization

- q4_0 or q4_1 for 4-bit integer quantization
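To give a feel for what 8-bit integer quantization means, here is a minimal sketch of a q8_0-style scheme: weights are grouped into blocks, and each block stores one scale plus one signed 8-bit integer per weight. The block size of 32 and the function names are assumptions for illustration. The real format also stores the scale in half precision, which this sketch skips, and convert.py may differ in detail.

```python
BLOCK_SIZE = 32  # assumption: ggml-style blocks of 32 weights


def quantize_q8_0(block):
    """Quantize one block of floats to (scale, list of ints in [-127, 127])."""
    if len(block) != BLOCK_SIZE:
        raise ValueError(f"expected {BLOCK_SIZE} values, got {len(block)}")
    amax = max(abs(v) for v in block)
    scale = amax / 127.0
    if scale == 0.0:
        return 0.0, [0] * BLOCK_SIZE  # an all-zero block needs no scale
    quants = [max(-127, min(127, round(v / scale))) for v in block]
    return scale, quants


def dequantize_q8_0(scale, quants):
    """Recover approximate floats from a quantized block."""
    return [scale * q for q in quants]


weights = [((i * 37) % 64 - 32) / 100.0 for i in range(BLOCK_SIZE)]
scale, quants = quantize_q8_0(weights)
restored = dequantize_q8_0(scale, quants)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(f"scale={scale:.6f} max_error={max_error:.6f}")
```

The round-trip error per weight is bounded by half the scale, which is why lower bit widths (fewer integer levels, larger scale) lose more detail.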

mkdir build

cd build

cmake ..

cmake --build . --config Release

To build with OpenBLAS:

cmake .. -DGGML_OPENBLAS=ON

cmake --build . --config Release

usage: ./sd [arguments]

arguments:

-h, --help show this help message and exit

-t, --threads N number of threads to use during computation (default: -1).

If threads <= 0, then threads will be set to the number of CPU cores

-m, --model [MODEL] path to model

-o, --output OUTPUT path to write result image to (default: .\output.png)

-p, --prompt [PROMPT] the prompt to render

-n, --negative-prompt PROMPT the negative prompt (default: "")

--cfg-scale SCALE unconditional guidance scale: (default: 7.0)

-H, --height H image height, in pixel space (default: 512)

-W, --width W image width, in pixel space (default: 512)

--sample-method SAMPLE_METHOD sample method (default: "eular a")

--steps STEPS number of sample steps (default: 20)

-s SEED, --seed SEED RNG seed (default: 42, use random seed for < 0)

-v, --verbose print extra info
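The --cfg-scale option above is described as an unconditional guidance scale. In classifier-free guidance as commonly formulated, the model predicts noise twice per step, once for the prompt and once for the unconditional (or negative) prompt, and the two predictions are blended. The sketch below shows that blend on plain lists; the function name is hypothetical, and that sd applies exactly this formula is an assumption.

```python
def apply_cfg(noise_uncond, noise_cond, cfg_scale=7.0):
    """Blend unconditional and conditional noise predictions.

    cfg_scale = 1.0 returns the conditional prediction unchanged;
    larger values push the result further toward the prompt.
    """
    return [u + cfg_scale * (c - u) for u, c in zip(noise_uncond, noise_cond)]


guided = apply_cfg([0.0, 1.0], [1.0, 1.0], cfg_scale=7.0)
```

Where the two predictions agree, the scale has no effect; where they differ, the difference is amplified by the scale.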

For example

./sd -m ../models/sd-v1-4-ggml-model-f16.bin -p "a lovely cat"
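When calling sd from a script, it can help to assemble the argument list programmatically. The following sketch builds such a list using only flags that appear in the usage text above. The helper name build_sd_command and the example negative prompt are hypothetical, and the binary path assumes you run from the build directory.

```python
import shlex


def build_sd_command(model, prompt, negative_prompt=None, cfg_scale=None,
                     width=None, height=None, steps=None, seed=None,
                     threads=None, output=None, binary="./sd"):
    """Return an argv list for the sd binary, using flags from its help text."""
    args = [binary, "-m", model, "-p", prompt]
    optional = [
        ("-n", negative_prompt),
        ("--cfg-scale", cfg_scale),
        ("-W", width),
        ("-H", height),
        ("--steps", steps),
        ("-s", seed),
        ("-t", threads),
        ("-o", output),
    ]
    for flag, value in optional:
        if value is not None:  # omitted options fall back to sd's own defaults
            args += [flag, str(value)]
    return args


cmd = build_sd_command(
    "../models/sd-v1-4-ggml-model-f16.bin",
    "a lovely cat",
    negative_prompt="blurry",
    steps=30,
    seed=-1,  # per the help text, a negative seed requests a random one
)
print(shlex.join(cmd))
```

The resulting list can be passed to subprocess.run(cmd, check=True) once the binary has been built.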

Using formats of different precisions will yield results of varying quality.

| f32 | f16 | q8_0 | q5_0 | q5_1 | q4_0 | q4_1 |
|---|---|---|---|---|---|---|
| | | | | | | |

| Precision | f32 | f16 | q8_0 | q5_0 | q5_1 | q4_0 | q4_1 |
|---|---|---|---|---|---|---|---|
| Disk | 2.8G | 2.0G | 1.7G | 1.6G | 1.6G | 1.5G | 1.5G |
| Memory (txt2img - 512 x 512) | ~4.9G | ~4.1G | ~3.8G | ~3.7G | ~3.7G | ~3.6G | ~3.6G |
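The sizes in the table shrink more slowly than the nominal bit widths suggest. The sketch below compares the ratio that bits per weight alone would predict with the disk ratios from the table. The per-block byte counts are assumptions based on ggml's usual block layouts (32 weights per block, plus a half-precision scale and, where used, an offset and extra high bits), not something this README states.

```python
BLOCK_WEIGHTS = 32  # assumption: quantized weights are stored in blocks of 32

# Assumed bytes per block: packed quants plus per-block metadata.
BLOCK_BYTES = {"q8_0": 34, "q5_0": 22, "q5_1": 24, "q4_0": 18, "q4_1": 20}

# Disk sizes in GB, copied from the table above.
DISK_GB = {"f32": 2.8, "f16": 2.0, "q8_0": 1.7, "q5_0": 1.6,
           "q5_1": 1.6, "q4_0": 1.5, "q4_1": 1.5}


def bits_per_weight(out_type):
    """Effective storage cost of one weight, including block metadata."""
    if out_type == "f32":
        return 32.0
    if out_type == "f16":
        return 16.0
    return BLOCK_BYTES[out_type] * 8 / BLOCK_WEIGHTS


for out_type, disk in DISK_GB.items():
    ideal = bits_per_weight(out_type) / 32.0
    observed = disk / DISK_GB["f32"]
    print(f"{out_type:>5}: {bits_per_weight(out_type):4.1f} bits/weight, "
          f"ideal {ideal:.2f}x of f32, table shows {observed:.2f}x")
```

The gap between the ideal and observed ratios suggests that not every tensor is stored at the selected precision, which would be consistent with the open item about quantizing the weights of ggml_conv_2d.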